Forestry Drones, Reimagined: Myco-Seeding, Flying Edge Networks, and Bioacoustic Early-Warning

1. Myco-Seeding at Scale: AI-Mapped, LiDAR-Driven Reforestation with Smart Seedpods

2. The Flying Edge: UAV Data Mules, Forest LoRaWAN & Emergency Mesh for Fire Ops

3. Bioacoustic & Hyperspectral Guardians: Ultra-Early Detection of Pests, Stress & Wildlife Impacts

Notable pilots & vendors

Myco-Seeding at Scale: AI-Mapped, LiDAR-Driven Reforestation with Smart Seedpods

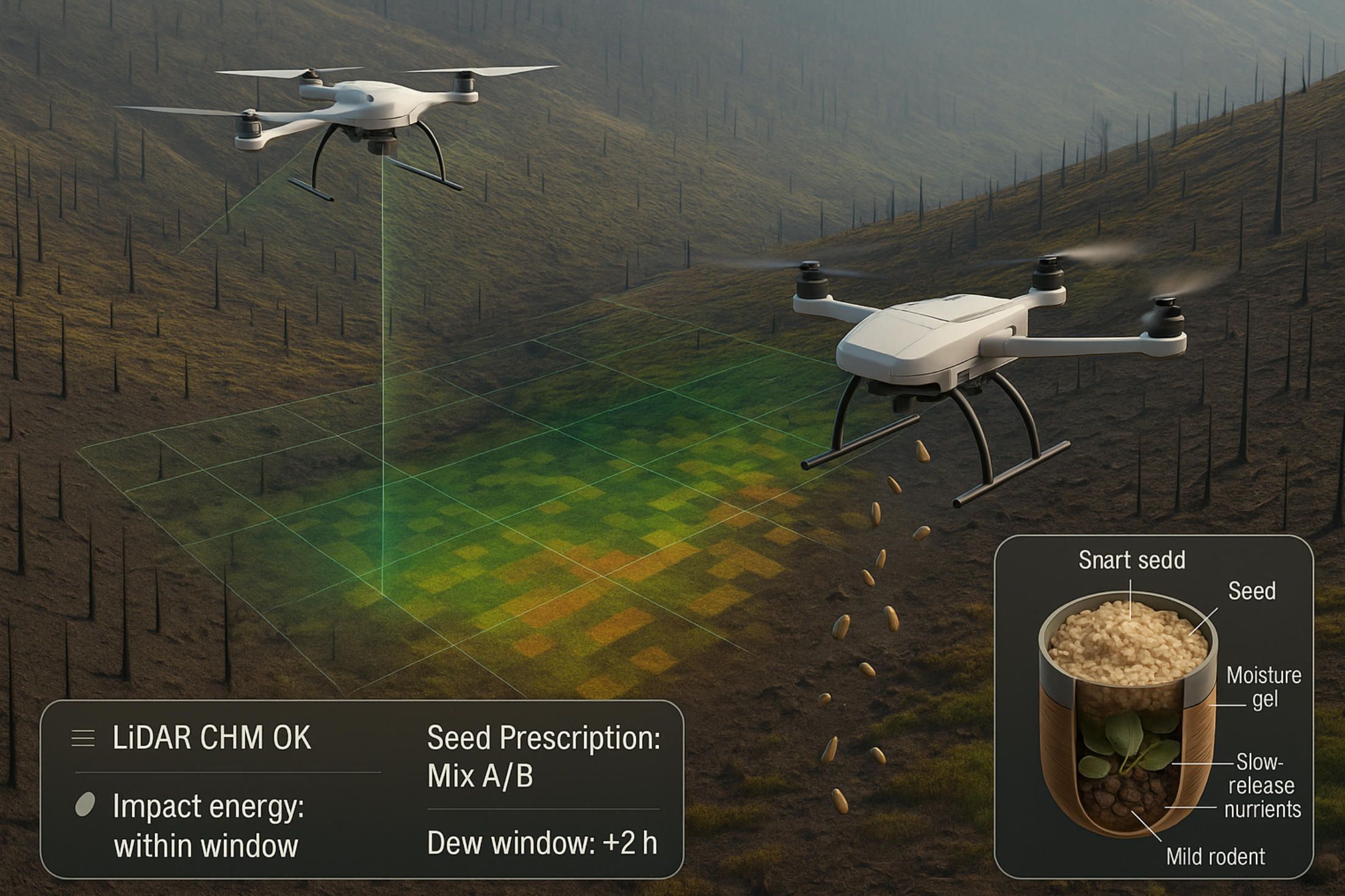

The old picture of “drones dropping seeds” undersells what modern forestry teams can actually orchestrate. At scale, successful aerial reforestation is equal parts geospatial science, seed-technology, and field-grade software that turns complex terrain into millions of micro-decisions. In this section, we unpack the full pipeline—from 3-D terrain intelligence and variable-rate “seed prescriptions” to capsule engineering with mycorrhiza and post-drop audit—so you can see where custom mobile apps from an IoT app development company like A-Bots.com sit in the loop.

1) Terrain intelligence: from raw point clouds to plantable micro-sites

Everything starts with mapping—not just pretty orthophotos, but high-fidelity, tree-scale structure. UAVs equipped with LiDAR produce dense point clouds that are converted into Digital Elevation Models (DEM), Digital Surface Models (DSM), and, crucially, Canopy Height Models (CHM). From these layers, we infer slope, aspect, roughness, insolation proxies, and candidate bare-soil patches that can accept a capsule without bouncing, puddling, or shading out. Forestry trials have shown that UAV-LiDAR captures individual-tree structure well enough to guide silvicultural decisions; processing workflows around DJI L1-class sensors are now routine and field-repeatable (MDPI).

LiDAR is not just for forests; lessons from precision agriculture—stand reconstruction, canopy geometry, and terrain-aware prescriptions—carry over directly to wildland restoration. That cross-pollination matters because we are not placing uniform seed rain; we are placing species and capsule types where they have the best odds given micro-topography and moisture (PMC).

A practical way to think about site selection is as a scoring function over a micro-grid (1–5 m cells):

$$S_i = w_1\,\mathrm{Radiation}_i + w_2\,\mathrm{TWI}_i + w_3\,(1-\mathrm{Roughness}_i) + w_4\,\mathrm{NurseVegProximity}_i - w_5\,\mathrm{FrostRisk}_i$$

Weights are species- and season-specific (e.g., a shade-tolerant conifer wants a different mix than a heat-stressed hardwood). The mission planner promotes cells with high $S_i$ and adequate impact angle (derived from slope + height AGL) so capsules seat into mineral soil rather than thatchy litter.
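A minimal sketch of the scoring function above, assuming every input layer has already been normalized to 0..1 per cell. The `Cell` fields, the weight values, and the 0.5 cut-off are illustrative assumptions, not calibrated numbers.

```python
from dataclasses import dataclass

@dataclass
class Cell:
    radiation: float        # normalized insolation proxy, 0..1
    twi: float              # normalized wetness index (the TWI term), 0..1
    roughness: float        # normalized surface roughness, 0..1
    nurse_proximity: float  # 0..1, where 1 means adjacent to nurse vegetation
    frost_risk: float       # 0..1

# Hypothetical weights w1..w5 for one species in one season.
WEIGHTS = (0.25, 0.30, 0.20, 0.15, 0.10)

def site_score(cell, w=WEIGHTS):
    """S_i for one micro-grid cell, term by term as in the formula above."""
    w1, w2, w3, w4, w5 = w
    return (w1 * cell.radiation
            + w2 * cell.twi
            + w3 * (1.0 - cell.roughness)
            + w4 * cell.nurse_proximity
            - w5 * cell.frost_risk)

def plantable(cells, threshold=0.5):
    """Indices of cells whose score clears an (assumed) threshold."""
    return [i for i, c in enumerate(cells) if site_score(c) >= threshold]

cells = [
    Cell(0.8, 0.7, 0.2, 0.9, 0.1),  # sunny, moist, smooth, sheltered
    Cell(0.3, 0.2, 0.9, 0.1, 0.8),  # shaded, dry, rough, frost-prone
]
print(plantable(cells))
```

In a real planner the weights would come from a per-species table and the threshold from field calibration; the point here is only that the score is a plain weighted sum that is cheap enough to run on-device.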

2) Variable-rate “seed prescriptions” instead of uniform drops

Once micro-sites are scored, we don’t fly a single global density. We generate variable-rate prescriptions—polygons carrying species mix, capsule type, and per-hectare density—akin to VRT (Variable Rate Technology) in agriculture. In forestry, that means fewer seeds wasted in improbable locations and more spent where aspect and moisture argue for it. A-Bots.com’s mission apps can compile these polygons to onboard formats the UAV’s flight computer can consume, switching between payload canisters or firing regimes on the fly. The agronomic playbook for VRT (controllers, map-based setpoints, feedback from sensors) is well documented and adapts cleanly to aerial seeding (Ask IFAS - Powered by EDIS).
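To make the idea of a prescription polygon concrete, here is a hedged sketch of the record such a polygon might carry and how a per-hectare density turns into capsule counts per species. The field names, the polygon ID, and the species mix are hypothetical; no vendor's onboard format is implied.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Prescription:
    polygon_id: str
    area_ha: float
    density_per_ha: int   # capsules per hectare
    capsule_type: str
    species_mix: dict     # species name -> fraction of capsules, sums to 1

def pods_by_species(rx):
    """Split the polygon's total capsule count across its species mix."""
    total_fraction = sum(rx.species_mix.values())
    if abs(total_fraction - 1.0) > 1e-6:
        raise ValueError("species_mix fractions must sum to 1")
    total_pods = round(rx.area_ha * rx.density_per_ha)
    return {sp: round(total_pods * frac) for sp, frac in rx.species_mix.items()}

# Illustrative polygon: 2.5 ha at 1,200 capsules/ha, 60/40 species split.
rx = Prescription("ridge-07", 2.5, 1200, "mycorrhizal-v2",
                  {"pine": 0.6, "alder": 0.4})
pod_counts = pods_by_species(rx)
print(pod_counts)
```

Validating the mix before take-off is the useful part: a canister loaded against a prescription whose fractions do not sum to one is exactly the kind of mismatch an audit trail should catch early.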

A useful heuristic is to reserve 10–20% of the mission for “exploration” passes that deliberately sample marginal $S_i$ bands to extend your species response map. The mobile app should tag those passes so post-flight analytics don’t confound them with production runs.
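One way the exploration split could be implemented is sketched below. The marginal band (0.3 to 0.5), the 20% share, and the tag names are assumptions chosen for illustration; cells are sampled with a fixed seed so the plan is reproducible.

```python
import random

def split_mission(scores, budget, explore_frac=0.15, band=(0.3, 0.5), seed=0):
    """Tag `budget` cells as 'exploration' or 'production'.

    scores: cell id -> micro-site score; band: marginal score range to sample.
    """
    n_explore = round(budget * explore_frac)
    marginal = sorted(c for c, s in scores.items() if band[0] <= s < band[1])
    rng = random.Random(seed)  # fixed seed keeps the plan reproducible
    explore = rng.sample(marginal, min(n_explore, len(marginal)))
    # Production cells: best scores at or above the band, filling the rest.
    ranked = sorted((c for c in scores if c not in explore),
                    key=lambda c: scores[c], reverse=True)
    production = [c for c in ranked if scores[c] >= band[1]]
    production = production[: budget - len(explore)]
    tags = {c: "exploration" for c in explore}
    tags.update({c: "production" for c in production})
    return tags

scores = {i: i / 20 for i in range(20)}  # toy scores 0.00 .. 0.95
tags = split_mission(scores, budget=10, explore_frac=0.2)
```

Because the tag travels with each cell, post-flight analytics can filter exploration drops out of production survival statistics with a single comparison.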

3) Capsules that behave like micro-nurseries (and why mycorrhiza matters)

A seed in thin, drying ash will rarely win without help. That’s why modern seed-vessels act like micro-nurseries: a biodegradable shell carries a tailored blend—seed + substrate + slow-release nutrients + water-holding agents + phytohormones—and, increasingly, beneficial fungi. Literature on UAV-supported regeneration has long argued for species- and condition-specific payloads, including mycorrhizal/bacterial symbionts and even predator deterrents baked into the matrix. Field systems also use gustatory/olfactory deterrents (think capsaicin) to reduce rodent predation—details that sound small but have outsize impact on survival curves (MDPI, WIRED).

The mycorrhizal piece is not hand-waving. Forest research (including conifers) shows pre- or peri-planting mycorrhization improves survival and early vigor; you’re effectively bootstrapping the symbiosis that helps the seedling access water and nutrients under stress. In capsule workflows, that translates to inoculum positioned to contact the radicle quickly, surviving the drop, and staying viable across expected moisture/temperature swings (US Forest Service).

Innovation is also happening in how capsules meet soil. “E-seed” carriers from university labs self-drill when wetted by rain, using passive morphing to seat seeds below the desiccation zone—no batteries, just smart materials. That’s a leap for steep or crusted soils where kinetic energy alone isn’t reliable (GRASP Lab).

4) Ballistics, timing, and swarm choreography

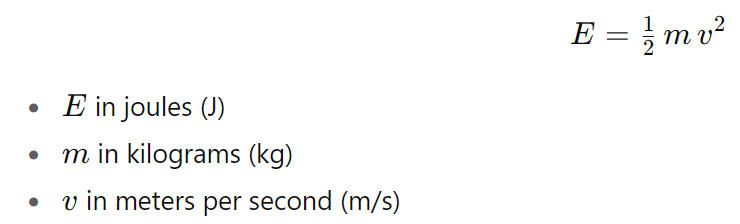

Delivery is not a “sprinkle”; it’s ballistics plus timing. The goal is to deliver enough energy to breach litter without ricochet or fragmentation. If $m$ is capsule mass and $v$ impact velocity, the kinetic energy

$$E_k = \tfrac{1}{2}\, m v^2$$

must exceed a site-specific seating threshold that your app can estimate from a quick litter/soil survey. In practice, teams vary height AGL, firing angle, and capsule casing to hit that window while staying within safety envelopes.
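As a rough worked example of the energy check, the sketch below computes impact energy as ½·m·v² for a capsule released straight down, using the drag-free relation v² = v₀² + 2gh. Ignoring air drag is a deliberate simplification that overestimates real impact energy, and the capsule mass, release height, and threshold are made-up numbers.

```python
G = 9.81  # gravitational acceleration, m/s^2

def impact_energy_j(mass_kg, height_m, muzzle_speed_ms=0.0):
    """Kinetic energy at ground level for a vertical, drag-free drop."""
    v_squared = muzzle_speed_ms ** 2 + 2 * G * height_m
    return 0.5 * mass_kg * v_squared

def min_height_m(mass_kg, threshold_j, muzzle_speed_ms=0.0):
    """Lowest release height whose drag-free impact energy meets the threshold."""
    needed_v_squared = 2 * threshold_j / mass_kg
    return max(0.0, (needed_v_squared - muzzle_speed_ms ** 2) / (2 * G))

# Hypothetical 20 g capsule dropped from 20 m AGL with no firing impulse.
energy = impact_energy_j(0.02, 20.0)
print(round(energy, 3), "J")
print(round(min_height_m(0.02, energy), 1), "m")
```

A field tool would replace the drag-free model with measured terminal behavior for each capsule casing, but the inversion (threshold in, minimum height out) is the quantity a mission planner actually needs.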

Timing is the other half. At scale, crews schedule flights into dew and rain windows so the capsule’s water-holding gel hydrates when it hits ground, not six hours later. Some startups emphasize monsoon-aligned campaigns; forestry agencies piloting seedballs tie drone sorties to fronts and cloudbursts. Capacity now makes such opportunistic scheduling meaningful: AirSeed has publicly stated rates around 40,000 pods/day per drone, and Dendra reports payloads on the order of hundreds of kilograms per day with traceability. Translation: you can wait for the right weather and still finish the block (news.mongabay.com, airseedtech.com, dendra.io).

5) What the field is actually seeing (and why auditability matters)

Claims vary because species, climate, and post-fire substrates vary. Reported successes include a Japanese pilot where AI-guided drones shot biodegradable capsules into wildfire burn scars in Kumamoto, with press accounts citing ~80% sprouting in trials—a striking number that, if sustained beyond first flush, points to the strength of micro-site targeting plus capsule engineering. At the same time, independent reporting has cautioned that drone-delivered seeds can underperform transplants or hand-placed seed without careful micro-siting and protection, which is precisely why we push mycorrhizal blends, predator deterrents, and post-drop monitoring. Laboratory or controlled-site 80% survival for specific pods (e.g., work in Brazil) should be interpreted as an upper bound; field reality demands site-by-site baselines and transparent audits (greenMe, WIRED, Al Jazeera).

Your software should assume skepticism: each mission writes an auditable ledger—seedlot IDs, capsule recipe, polygon prescription, weather window, UAV telemetry—so survival curves can be computed honestly, not hand-waved in press releases. That’s where offline-first mobile is critical: crews must capture and sign data far from coverage and sync later with WORM-style append-only logs.
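The append-only ledger idea can be sketched with a simple hash chain, where each entry commits to the one before it. This is an illustration under stated assumptions: the record fields are hypothetical, there are no digital signatures, and a hash chain only makes tampering detectable; it is not by itself WORM storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry

def _digest(prev_hash, record):
    payload = json.dumps(record, sort_keys=True)  # stable serialization
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

def append_entry(ledger, record):
    """Append a record whose hash covers the previous entry's hash."""
    prev = ledger[-1]["hash"] if ledger else GENESIS
    entry = {"prev": prev, "record": record, "hash": _digest(prev, record)}
    ledger.append(entry)
    return entry["hash"]

def verify(ledger):
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev = GENESIS
    for entry in ledger:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True

ledger = []
append_entry(ledger, {"seedlot": "SL-001", "polygon": "ridge-07", "pods": 1800})
append_entry(ledger, {"seedlot": "SL-002", "polygon": "ridge-07", "pods": 1200})
print(verify(ledger))
```

Offline crews can build the chain locally and sync it later; the server only needs to check that the uploaded chain extends the last hash it already holds.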

6) Post-drop verification: orthos today, structure tomorrow

Monitoring isn’t just “fly a photo once.” You want an MLOps-ready pipeline:

- Immediate orthomosaics (RGB) to confirm coverage, spot delivery voids, and cross-check drop density against the prescription.

- Early emergence flights (RGB + multispectral if available) at 2–6 weeks to detect chlorophyll signatures and differentiate germinants from weed flush.

- Structural rescan (LiDAR) at 3–12 months to estimate survival and height increment via CHM differencing; this attenuates the “false positives” from weeds that fooled RGB. Pair this with a small field truth set to calibrate model bias.
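CHM differencing, as used in the structural rescan step, reduces to subtracting two co-registered height rasters. The sketch below assumes both rasters share the same grid and uses a hypothetical 5 cm minimum gain to suppress noise; real pipelines would also handle registration error and no-data cells.

```python
def chm_growth(chm_before, chm_after, min_gain_m=0.05):
    """Return (row, col, gain_m) for cells whose canopy height increased.

    Both inputs are equal-sized lists of rows holding heights in meters.
    """
    grown = []
    for r, (row_before, row_after) in enumerate(zip(chm_before, chm_after)):
        for c, (h_before, h_after) in enumerate(zip(row_before, row_after)):
            gain = h_after - h_before
            if gain >= min_gain_m:
                grown.append((r, c, round(gain, 3)))
    return grown

before = [[0.0, 0.1],
          [0.0, 0.0]]
after = [[0.3, 0.1],
         [0.02, 0.5]]
print(chm_growth(before, after))
```

Cells that gain height across rescans are candidates for surviving seedlings; pairing them with a small field-truth set, as noted above, is what turns the raw difference into a calibrated survival estimate.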

UAV-assisted reforestation studies show exactly this coupling—UAV sensing for both site characterization and follow-up—and recent work on individual-tree segmentation with UAV-LiDAR makes per-seedling survival estimates realistic, not aspirational (Taylor & Francis Online).

To avoid counting “green dots” that won’t make it to year three, the app should favor cohort-based survival (e.g., 90-day, 180-day, and 360-day) and let ecologists swap in species-specific health indices. That data, in turn, retrains the micro-site scoring model upstream—closing the loop between mapping, capsule design, and placement.
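Cohort-based survival can be computed directly from per-pod observations. The sketch assumes each pod has either a recorded day on which it was found dead or `None` if it was still alive at the last survey, and that every pod was surveyed through the final checkpoint; pod IDs and days are invented.

```python
def cohort_survival(death_day_by_pod, checkpoints=(90, 180, 360)):
    """Fraction of the cohort still alive at each checkpoint day.

    death_day_by_pod: pod id -> day found dead, or None if still alive.
    """
    n = len(death_day_by_pod)
    if n == 0:
        raise ValueError("empty cohort")
    survival = {}
    for day in checkpoints:
        alive = sum(1 for d in death_day_by_pod.values() if d is None or d > day)
        survival[day] = alive / n
    return survival

observations = {"p1": None, "p2": 60, "p3": 200, "p4": None, "p5": 120}
print(cohort_survival(observations))
```

Reporting the full curve rather than a single sprouting percentage makes it harder to mistake an early flush for establishment.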

7) Species mixes, mosaics, and the “don’t plant a monoculture by drone” rule

Planting speed is seductive, but restoration is not a race for uniform canopy. Restoration teams increasingly treat prescriptions as mosaics: pockets of pioneer shrubs to fix nitrogen and shade soil, nurse species to shelter conifer germinants, and only then the commercial or keystone species. Several vendors now support multi-species capsules or rapid canister swaps so a single sortie lays down a successional gradient rather than a monoculture carpet. Dendra, for example, emphasizes biodiversity and per-bag traceability of seed mixes; AirSeed highlights capsule engineering and species diversity in its positioning.

In fire-altered soils, that mosaic can include fungus-forward capsules to anchor early symbiosis and moisture management. Where predators are severe, the deterrent-enhanced recipes from the literature deserve testing on small blocks before scale-up.

8) Safety, compliance, and biosecurity in the air

Drone-based reforestation touches airspace, environmental permitting, and seed biosecurity. Your app should embed geo-compliance (no-fly zones, wildlife closures, cultural sites), seedlot provenance tracking, and capsule recipe whitelists. When missions operate in smoky, mountainous terrain, tethered relays or mesh repeaters keep pilots and rangers in the loop—another job for a rugged mobile client with telemetry overlays and offline sync that tolerates long comms gaps.
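A geo-compliance gate can be as simple as rejecting waypoints that fall inside any exclusion polygon. The sketch below uses the standard ray-casting point-in-polygon test on planar (projected) coordinates; the zone name and coordinates are hypothetical, and points exactly on a boundary are not treated specially.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test; polygon is a list of (x, y) vertices in order."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def blocked_waypoints(waypoints, exclusion_zones):
    """Waypoints that fall inside any named exclusion zone."""
    return [wp for wp in waypoints
            if any(point_in_polygon(wp[0], wp[1], zone)
                   for zone in exclusion_zones.values())]

zones = {"wildlife-closure": [(0, 0), (10, 0), (10, 10), (0, 10)]}
route = [(5, 5), (15, 5)]
print(blocked_waypoints(route, zones))
```

Running the check on-device before upload means a mission can be refused even when the crew has no connectivity to a central compliance service.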

9) Where A-Bots.com fits: the flying edge app you actually need

A-Bots.com builds the connective tissue: the mobile mission app and services behind it. In practice that means:

- LiDAR-aware planning: ingest DEM/CHM, run micro-site scoring on-device, and compile variable-rate prescriptions to the airframe.

- Capsule/seedlot QA: barcode/QR workflows, recipe versioning, and mixing checklists so what’s in the canister matches the polygon—no surprises on launch.

- Telemetry & audit: per-shot logs, weather window capture, and WORM audit chains; if a block underperforms, you know whether to blame recipe, timing, or placement.

- Monitoring & MLOps: post-drop flight templates, model-assisted germinant detection, and survival dashboards that converge with field plots over time.

- Offline-first reliability: the app works in radio-silent backcountry and syncs when the crew is back in range.

This is IoT app development tuned for the flying edge: drones, capsules, sensors, and people in one operational loop.

Quick reality check, backed by evidence

- Throughput is real, with public statements around tens of thousands of pods per drone per day and heavy payloads per platform—useful for waiting on ideal moisture windows (news.mongabay.com, dendra.io).

- Capsule engineering is decisive—mycorrhiza, nutrients, and deterrents are not “nice-to-haves” but core to survival under post-fire stress (US Forest Service).

- Field outcomes vary, from celebrated high sprouting in Japanese wildfire trials to sober assessments warning of low survival without precise placement and protection. Plan for both in your software and protocols (greenMe).


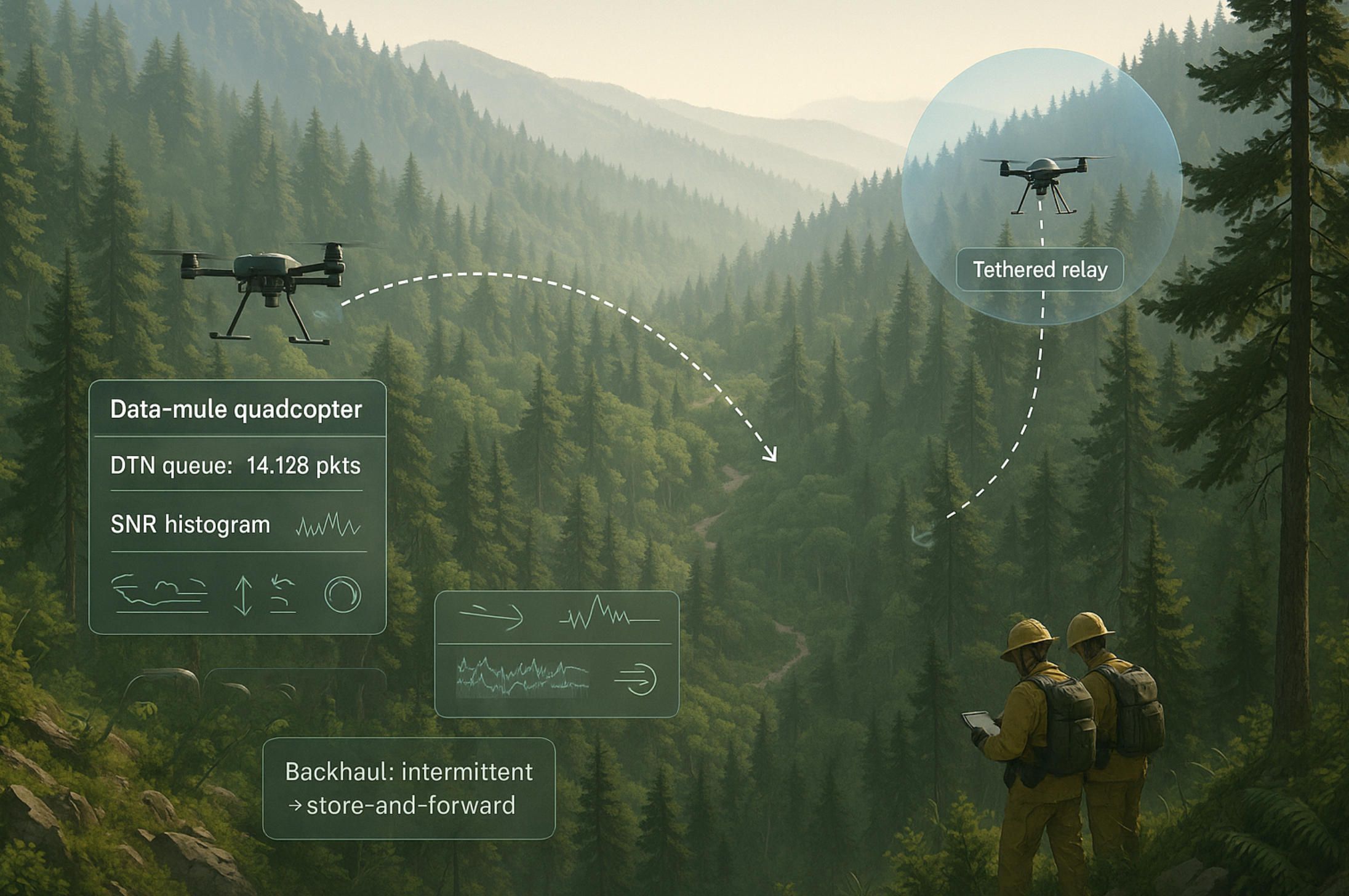

The Flying Edge: UAV Data Mules, Forest LoRaWAN & Emergency Mesh for Fire Ops

Forests are radio-silent by design: deep canopies, steep ravines, and no grid. To make sensing and incident response actually work there, you need a flying edge—drones that shuttle data for low-power sensors, spin up pop-up connectivity for crews, and close the loop between fire risk, detection, and action. This section lays out the architecture and the algorithms, then shows where a purpose-built mobile app from A-Bots.com (your IoT app development partner) fits in. Recent research on UAV–WSN integration and aerial data aggregation backs the pattern.

1) A telemetry fabric that survives the forest

The baseline: a LoRa/LoRaWAN sensor underlay for microclimate, fuel moisture, soil conditions, camera traps, and ultra-early fire detection. Commercial systems like Dryad Silvanet demonstrate minutes-level wildfire alerts using solar IoT nodes and gateways; the same fabric can also carry forest-health signals year-round. In practice, ridge-top gateways see far but not everywhere; valley bottoms and lee slopes stay dark—exactly where UAVs step in as ferry boats. A mature evidence base now frames early fire detection along four pillars—ground sensors, UAVs, camera networks, satellites—so the “fabric + drones” combo is a pragmatic, layered approach.

2) UAVs as data mules and flying LoRaWAN gateways

When backhaul is intermittent by design, you switch to Delay-Tolerant Networking (DTN) and data mules: quadcopters sweep pre-planned corridors, snarf packets opportunistically (LoRa/BLE), and dump them when they surface near a gateway or cell signal. This model is orthodox DTN—founded in the classic “MULE” architecture and extended recently with BLE+DTN hybrids and LoRaWAN flying gateways that buffer and forward when the internet reappears. Field and lab work show the essentials: multi-channel LoRa gateways on drones, local storage in offline mode, then a push to the network server once uplink is available.

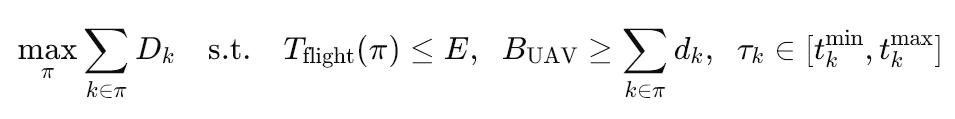

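Scheduling the mule. Route planning is a time-window VRP (vehicle routing problem) under energy and buffer constraints. A simple, field-ready objective is to maximize retrieved payload while respecting endurance $E$ and link budgets; one way to write it, consistent with the symbols defined below, is:

$$\max \sum_k d_k \quad \text{s.t.} \quad d_k \le D_k,\quad \sum_k d_k \le B_{\mathrm{UAV}},\quad E_{\mathrm{used}} \le E,\quad t_k \in [t_k^{\min},\, t_k^{\max}]$$

Here $D_k$ is expected data at cluster $k$, $d_k$ realized bytes, $B_{\mathrm{UAV}}$ the buffer, $E_{\mathrm{used}}$ the energy the route consumes, $t_k$ the time the UAV services cluster $k$, and $[t_k^{\min}, t_k^{\max}]$ the “listen windows” when nodes are awake or when thermal/turbulence are acceptable. In practice you fly a two-layer policy: (1) a backbone loop that guarantees worst-case latency for safety-critical sensors (fire, intrusion), and (2) opportunistic side-sweeps only when state-of-charge and winds allow. Forestry-specific experiments (LoRa on tree farms; UAV-assisted forestry monitoring) confirm that a hovering drone-gateway can reliably harvest uplinks from dispersed ground nodes.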
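The mule-scheduling problem is a routing problem that is hard to solve exactly, so field planners often start from a greedy heuristic. The sketch below is one such heuristic, not an optimal solver: it models endurance as a flight range in meters, ignores listen windows and hover time, and picks the next cluster by bytes gained per meter flown while always keeping enough range to return home. Cluster names and numbers are invented.

```python
import math
from dataclasses import dataclass

@dataclass
class Cluster:
    name: str
    x: float             # planar position, meters
    y: float
    expected_bytes: int  # expected data waiting at the cluster

def plan_route(clusters, home, range_m, buffer_bytes):
    """Greedy mule route: best bytes-per-meter next hop that still allows return."""
    pos, remaining, free = home, range_m, buffer_bytes
    route, todo = [], list(clusters)
    while todo and free > 0:
        best, best_ratio = None, 0.0
        for c in todo:
            leg = math.hypot(c.x - pos[0], c.y - pos[1])
            back = math.hypot(c.x - home[0], c.y - home[1])
            if leg + back > remaining:
                continue  # visiting would strand the aircraft
            ratio = min(c.expected_bytes, free) / max(leg, 1.0)
            if ratio > best_ratio:
                best, best_ratio = c, ratio
        if best is None:
            break
        remaining -= math.hypot(best.x - pos[0], best.y - pos[1])
        take = min(best.expected_bytes, free)
        free -= take
        pos = (best.x, best.y)
        route.append((best.name, take))
        todo.remove(best)
    return route

clusters = [Cluster("A", 100, 0, 500), Cluster("B", 200, 0, 800),
            Cluster("C", 0, 1000, 10000)]
print(plan_route(clusters, home=(0, 0), range_m=600, buffer_bytes=1000))
```

In this toy case the data-rich cluster C is skipped because reaching it would exceed the range budget, which is the behavior the backbone-plus-side-sweep policy relies on.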

What the aircraft carries. A real flying gateway stack is boring on purpose: LoRa concentrator + SBC host + GNSS (for time) + dual radio (LTE/5G or Wi-Fi) for backhaul. In offline mode the gateway stores frames locally; in connected mode it tunnels to the LoRaWAN server. Your mobile app should expose gateway health (SNR histograms, packet loss, duty-cycle headroom) and provide “store-and-forward” guarantees so ecologists and rangers trust the pipeline (MDPI).
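The store-and-forward behavior described for offline mode can be sketched as a bounded queue that is drained in order once an uplink exists. This is a toy model: the `send` callback stands in for whatever backhaul the gateway really uses, and the capacity and frame contents are hypothetical.

```python
from collections import deque

class StoreAndForward:
    """Bounded FIFO for frames received while the backhaul is down."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()
        self.dropped = 0  # counted, never silent, so the app can report loss

    def receive(self, frame):
        """Store a frame; refuse (and count) it if the buffer is full."""
        if len(self.queue) >= self.capacity:
            self.dropped += 1
            return False
        self.queue.append(frame)
        return True

    def flush(self, send):
        """Forward frames in arrival order; stop at the first failed send."""
        sent = 0
        while self.queue:
            if not send(self.queue[0]):
                break  # keep the frame for the next attempt
            self.queue.popleft()
            sent += 1
        return sent

buf = StoreAndForward(capacity=2)
for frame in ("a", "b", "c"):
    buf.receive(frame)
delivered = []
print(buf.flush(lambda f: False))                    # uplink down
print(buf.flush(lambda f: delivered.append(f) or True))  # uplink restored
```

A frame is removed from the queue only after `send` reports success, which is the property that lets the app promise delivery without duplicating gateway logic in the UI.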

3) Pop-up connectivity for wildfire operations: LTE/5G and tethered mesh

When a fire hits and towers go down, airborne cells and tethered relays give incident commanders coverage in minutes—not hours. AT&T’s Flying COW® tests showed 5G service from a drone at ~450 ft can cover ~10 sq mi; in U.S. incidents the same concept rides on FirstNet for public-safety traffic during wildfires. For longer hauls, tethered systems (power + fiber/copper over the line) hold station for tens of hours, hoisting cameras or radios to 50–90 m and acting as secure, high-bandwidth relays that don’t need constant battery swaps. Fire agencies increasingly field tethered platforms (e.g., Fotokite Sigma, Elistair Orion/HL) exactly for this persistent overwatch and comms-relay role (commercialuavnews.com, KEYE, shephardmedia.com, FOTOKITE).

Why tethers matter at the fireline. The tether is a power umbilical and a hardline for data, which reduces jamming risk and simplifies spectrum deconfliction near lots of radios. Vendors and integrators document wildfire and refinery-fire deployments where a single mast-level drone stabilized comms and ISR for hours. Recent technical reviews (2025) catalog these capabilities and common payload stacks; national deployments (e.g., Greece) underline their wildfire relevance (Heliguy™, Unmanned Systems Technology).

What the A-Bots.com app actually does in this stack

A-Bots.com builds the field-grade mobile app and services that make the flying edge usable by foresters and incident commanders:

- DTN-aware flight planner. Generate mule routes from sensor heatmaps, time windows, and endurance; visualize expected retrieval versus state-of-charge; auto-replan under gusts or link loss.

- Multi-radio orchestration. Configure LoRa parameters (spreading factor, bandwidth) per polygon; switch the gateway between “harvest” and “backhaul” modes; expose SNR, PER, and ADR behavior in plain English.

- WORM audit + offline-first. Log every harvested frame with GNSS time and location; sign logs locally; sync when back in range—no dropped accountability, even off-grid.

- Fire-ops comms module. One-tap presets to bring up a tethered relay, lay mesh overlays on topo maps, and pin ICS markers (Div A, Branch, Safety Zone).

- Interops. Northbound connectors to LoRaWAN servers, Dryad-type wildfire systems, and CAD/AVL used by public-safety teams.

This is IoT app development tuned for canopies and crisis: sensors, drones, and people stitched together with software that still works when the network doesn’t.

Field notes you can trust

- UAV-gateway & data-mule patterns are well studied across WSN/IoT literature; modern variants use LoRaWAN and hybrid BLE+DTN to keep sensor power budgets tiny while UAVs ferry the bytes (PMC, MDPI).

- Tethered relays provide persistent comms: published vendor specs and trade coverage report station times of tens of hours and 50–90 m antenna heights, exactly what wildfire scenes need for a stable bubble (shephardmedia.com).

- Public-safety cellular from the air is not theoretical; Flying COWs have been tested and deployed for disaster recovery and wildfire response on FirstNet (commercialuavnews.com).

Bioacoustic & Hyperspectral Guardians: Ultra-Early Detection of Pests, Stress & Wildlife Impacts

Forests rarely tell you something is wrong until it’s visibly wrong. By the time crowns brown or bark sloughs, you’re late. The way out is to listen and see before symptoms go obvious: drones that capture hyperspectral signatures of pre-visual stress while running bioacoustic listening for chainsaws, gunshots, bats, and birds. The two modalities—light and sound—cover each other’s blind spots and create a field-hard early-warning lattice that ecologists and rangers can actually act on.

1) Seeing the invisible: red-edge shifts, water stress and “green-attack” pests

Hyperspectral payloads (VNIR/SWIR) pick up subtle changes—chlorophyll breakdown, water content, leaf chemistry—days to weeks before RGB imagery does. Research shows that for tree stress and pest “green-attacks” (e.g., Ips typographus in spruce), red-edge bands and NIR-related indices are often sufficient to separate healthy from early-infested individuals; full hyperspectral improves margin and robustness at stand scale. Recent studies confirm early detection with UAV multispectral/hyperspectral imagery, emphasizing red-edge-centric features and NIR indices (NDVI/BNDVI) for individual-tree discrimination.

A 2025 synthesis and follow-on work reinforce that UAV-borne hyperspectral enhances bark-beetle detection especially in the first phases of attack—exactly when you still have management options. Methodologically, teams fuse LiDAR-derived canopy height to localize individual crowns, then compute stress indices per crown to prevent understory clutter from skewing results.

Even when the cause isn’t insects, the physics holds. Controlled trials demonstrate that canopy stress (including herbicide-induced as a proxy) is detectable with UAV-mounted RedEdge and Headwall-class sensors, moving from qualitative “discoloration classes” to quantitative, repeatable thresholds. Emerging workflows evaluate existing hyperspectral indices against classification performance for “new or emerging stress” and keep only those that generalize.

In practice: your flight app tiles crowns, computes a compact feature vector, e.g., $[\Delta\mathrm{RE}, \mathrm{NDVI}, \mathrm{NDWI}, \mathrm{PRI}]$, and scores an anomaly

$$A = \alpha\,\Delta\mathrm{RE} + \beta\,(1-\mathrm{NDVI}) + \gamma\,(1-\mathrm{NDWI}) + \delta\,(1-\mathrm{PRI}),$$

with species- and season-specific weights. Crowns whose $A$ exceeds a rolling, stand-level baseline are flagged for ground truth or immediate sanitation.
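A small sketch of the per-crown anomaly score and flagging step follows. The weights are placeholders, and the "rolling, stand-level baseline" is approximated here by a robust statistic of the current stand (median plus a multiple of the median absolute deviation); that choice is an assumption for illustration, not the method of any cited study.

```python
from statistics import median

# Hypothetical weights for one species and season.
WEIGHTS = {"alpha": 0.4, "beta": 0.3, "gamma": 0.2, "delta": 0.1}

def anomaly(d_re, ndvi, ndwi, pri, w=WEIGHTS):
    """Anomaly score A from the four per-crown features."""
    return (w["alpha"] * d_re
            + w["beta"] * (1 - ndvi)
            + w["gamma"] * (1 - ndwi)
            + w["delta"] * (1 - pri))

def flag_crowns(scores, k=5.0):
    """Crown ids whose score exceeds median + k * MAD of the stand."""
    values = list(scores.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    return sorted(cid for cid, a in scores.items() if a > med + k * mad)

stand = {"c1": 0.20, "c2": 0.22, "c3": 0.21, "c4": 0.19, "c5": 0.60}
print(flag_crowns(stand))
```

A median-based baseline is used because a single badly stressed crown would inflate a mean-and-standard-deviation baseline enough to hide itself in small stands.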

2) Listening for trouble: wildlife, poachers and power-tools

Bioacoustics complements spectra. In remote forests, persistent listening picks up what imagery can’t: activity of bats and birds (biodiversity proxies), illegal logging (chainsaws), gunshots, vehicles, even human chatter at odd hours. Field systems like Rainforest Connection prove that acoustic ML can deliver real-time alerts for chainsaws and gunshots and even detect precursors—human scouts—before the first cut.

Putting ears on drones unlocks mobile listening. Methodological work shows quadcopters can carry audible and ultrasound recorders to survey birds and bats; with careful airframe choice and mic placement, you minimize rotor noise and avoid biasing behavior. Fresh comparisons of drone thermal imagery + ultrasonic recordings vs. human counts report strong correlations for bat emergence, while miniaturization studies indicate that careful platform selection eliminates detectable disturbance. Translation: you can inventory wildlife with less intrusion, then route ranger patrols based on real activity.

In practice: the flight computer runs an on-edge classifier for “threat” events (chainsaw, gunshot) and species-specific acoustic templates. Only short embeddings or event snippets are stored to save bandwidth and protect privacy; full-fidelity audio is optional and governed by policy.

3) Fusing light + sound into an operational early-warning lattice

One sensor gives you signals; two give you cross-validation. A practical, field-ready loop looks like this:

- Patrol mode (listening + scouting): a quiet quadcopter or tethered platform records audio over trails and edges while a small multispectral block checks for fresh stress. Detections of chainsaws or gunshots become priority taskings for ground teams. RFCx-style acoustic logic can run on towers or trees; drones extend coverage to new pockets, carry fresh batteries, or harvest data from off-grid nodes (rfcx.org).

- Survey mode (crown-level stress): high-overlap, multi-band sorties tile crowns; the app computes anomaly scores, ranks high-risk clusters, and suggests ground plots. Early bark-beetle flags trigger trap placement or sanitation felling before red crowns appear.

- Incident mode (wildfire start): acoustic/thermal isn’t your only sentinel—gas-sensing nodes on trees alert to smoldering fires within minutes over LoRaWAN; a companion drone (e.g., Dryad’s Silvaguard concept) auto-launches to geo-confirm with IR video. Drones also stand up emergency mesh/LTE bubbles for crews.

4) What the A-Bots.com app does differently

This is where a purpose-built mobile stack matters. A-Bots.com (your partner for IoT app development) builds the field client and backend so rangers, ecologists, and wildfire teams can use the tech without babysitting it:

- Mission templates for “Patrol / Survey / Incident,” each with sensor presets (gain, sampling rate, band sets), wildlife-safe routes, and battery/weather gates.

- On-edge inference for hyperspectral anomaly scoring and acoustic event detection; store embeddings, not raw feeds, unless policy says otherwise.

- Crown registry & replay: LiDAR-aided crown tiling, per-crown history of stress scores, traps, and interventions—so you can prove that action preceded visible decline.

- Alert hygiene: deduplicate acoustics (chainsaw vs. river), add confidence and time-to-action SLAs, and thread alerts into ranger tasking.

- Offline-first data integrity: everything signs locally and syncs to an append-only ledger when the drone or crew comes back into coverage.
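One narrow reading of alert hygiene is collapsing repeated detections of the same event into a single alert. The sketch below merges alerts that share a label and fall within an assumed time window and radius of an already open alert; the thresholds, field names, and planar coordinates are illustrative, and separating a chainsaw from river noise would remain the classifier's job.

```python
import math

def dedupe_alerts(alerts, window_s=120, radius_m=300):
    """Merge same-label alerts that are close in time and space.

    Each alert is a dict with keys: label, t (seconds), x, y (meters).
    """
    kept = []
    for alert in sorted(alerts, key=lambda a: a["t"]):
        match = None
        for k in kept:
            close_in_time = alert["t"] - k["last_t"] <= window_s
            close_in_space = math.hypot(alert["x"] - k["x"],
                                        alert["y"] - k["y"]) <= radius_m
            if k["label"] == alert["label"] and close_in_time and close_in_space:
                match = k
                break
        if match:
            match["count"] += 1
            match["last_t"] = alert["t"]  # extend the open alert
        else:
            kept.append({**alert, "last_t": alert["t"], "count": 1})
    return kept

raw = [
    {"label": "chainsaw", "t": 0, "x": 0, "y": 0},
    {"label": "gunshot", "t": 30, "x": 50, "y": 0},
    {"label": "chainsaw", "t": 60, "x": 20, "y": 10},
    {"label": "chainsaw", "t": 500, "x": 0, "y": 0},
]
merged = dedupe_alerts(raw)
print([(m["label"], m["count"]) for m in merged])
```

Keeping a count on the merged alert preserves evidence of persistence, which can feed the confidence and time-to-action fields mentioned in the list above.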

5) Guardrails: ethics, wildlife safety & reproducibility

Bioacoustic and spectral surveillance can be powerful, and sensitive. Your app should implement wildlife-safe flight envelopes (altitude over roosts, stand-off over colonies), audible and ultrasonic quieting, and privacy filters that discard human speech by default. For science-grade credibility, every model decision is reproducible: versioned indices, calibration panels per flight, and ground-plot links that make your map more than a pretty picture. Recent bat-survey research shows disturbance can be minimized or eliminated with the right platform and procedure; bake those procedures into mission templates.

Why this matters now

- Early is everything: Red-edge/NIR signals and crown-level anomaly scores push decisions weeks earlier than RGB alone (ScienceDirect, Frontiers).

- Sound carries intent: Chainsaws, gunshots, and scouting footsteps can be caught in time to deter, not just document (ScienceDirect, Hitachi Global).

- From alert to action: Tree-mounted wildfire sensors already trigger drones to verify ignition and guide crews with IR video in near-real time.


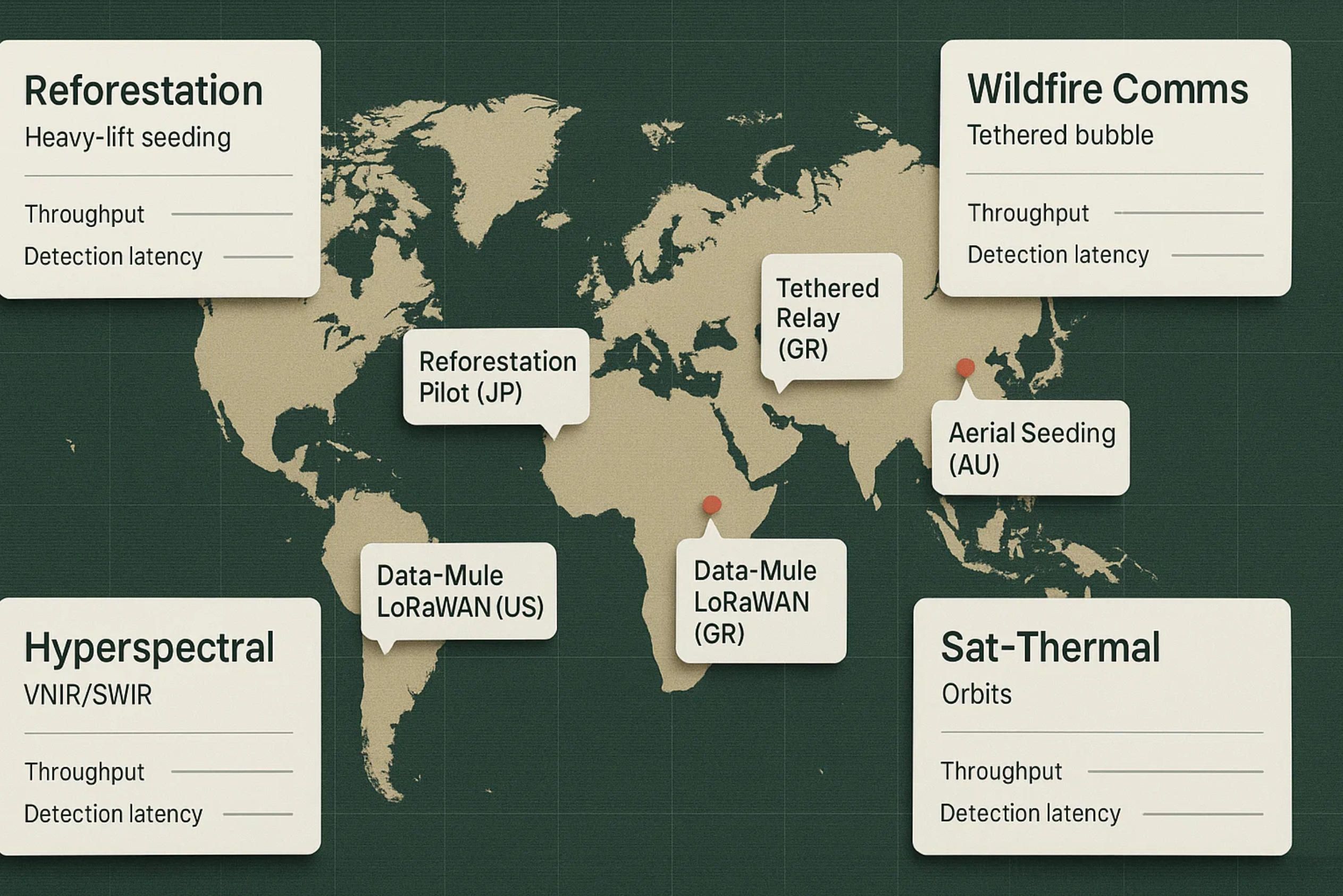

Notable pilots & vendors

Reforestation & smart seedpods

- Dendra Systems — large-payload aerial seeding with full traceability; the company cites up to ~700 kg of seed per drone per day and multi-species mixes, with AI mapping and post-drop monitoring built into the platform (dendra.io).

- Flash Forest (Canada) — government-backed pilots after wildfires using seed pods, automation and ML; recognized by Natural Resources Canada and profiled widely for scaling up in severe burn scars (Reasons to be Cheerful).

- AirSeed (Australia) — heavy-lift drones firing biodegradable seed pods; public claims range from ~40,000 pods/day per drone in field media to much higher figures on the company site, underscoring how throughput depends on terrain, pod mass, and mission profile (ABC, airseedtech.com).

- Marut Drones — “Seedcopter” (India) — CSR-driven aerial seeding service designed for remote, steep terrain; active commercial rollouts since 2023–24 (marutdrones.com).

- National Forest Foundation pilots (U.S.) — small-area drone seeding trials framed as complementary to hand planting; useful proof-of-concept for agencies (nationalforests.org).

- Kosovo pilot (SLK + Project 02) — seed-dropping drones distributing clay/mineral seedballs to counter illegal logging impacts; 1 ha in ~2 hours reported (Reuters).

- Japan trial stories (Kumamoto) — multiple media posts report ~80% sprouting for AI-guided, LiDAR-mapped capsule seeding after wildfires; treat as promising but unverified until peer-reviewed or agency-audited results land (greenitaly.net).

Wildfire connectivity, sensing & response

- Dryad Networks — Silvanet + Silvaguard — solar LoRaWAN gas sensors for minute-scale smoldering fire detection; in 2025 Dryad unveiled an AI drone (“Silvaguard”) that auto-launches on alerts to provide IR/optical confirmation. Ideal for pairing with UAV data-mule routes (Dryad).

- AT&T / FirstNet — Flying COW® — tethered “cell-on-wings” coverage used in U.S. wildfire incidents; tested 5G bubbles ~10 sq mi from ~450 ft AGL and deployed in California fires in 2025.

- Elistair & Fotokite (tethered drones) — persistent 50–90 m air-masts for comms and overwatch; 2025 contract to equip Greece’s fire service with 13 tethered systems highlights the wildfire use case (Elistair | Tethered Drone Company, FOTOKITE).

- Pano AI (cameras) & OroraTech (thermal satellites) — complementary fixed and orbital sentinels feeding into early-warning stacks that drones can verify on demand; 2025 saw Pano AI deployments with public feeds in Washington State and new funding, while OroraTech expanded a dedicated thermal constellation (FOREST dnr.wa.gov, The Wall Street Journal, OroraTech).

Bioacoustics & spectral payloads

- Rainforest Connection (RFCx) — real-time acoustic ML for chainsaws/gunshots and biodiversity; works as a fixed “listening” layer that UAVs can extend or service in data-mule mode (rfcx.org).

- AudioMoth / Wildlife Acoustics — widely used, affordable recorders for bat/bird surveys; pair with quiet airframes or tethered stations to minimize rotor noise in bioacoustic missions (openacousticdevices).

- Headwall, Specim, AgEagle/MicaSense — UAV-class hyperspectral (VNIR/SWIR) and multispectral sensors for pre-visual stress and pest detection: Headwall Hyperspec (250–2500 nm) with turnkey UAV packages; Specim AFX10/17 (VNIR/NIR) all-in-one gimbals; MicaSense Altum-PT & RedEdge-P for rugged, repeatable indices (Headwall Photonics).

LiDAR & forest mapping staples

- DJI Zenmuse L2 / YellowScan — dominant choices for canopy-height models, structure, and terrain under canopy; recent case studies and tech notes emphasize vegetation penetration and safety at higher AGLs. These sensors underpin micro-site scoring for seeding and precision monitoring (DJI, Heliguy™, YellowScan).

Where A-Bots.com fits

Across these stacks, the missing piece is operational software: offline-first field apps that plan missions from prescriptions, sync sensor data, run on-edge inference (acoustic + spectral), and keep a WORM audit for regulators and funders. That’s exactly where A-Bots.com comes in as an IoT app development partner for custom drone and edge workflows—sensor to decision, even when the forest has no signal.

✅ Hashtags

#ForestryDrones

#Reforestation

#LiDAR

#LoRaWAN

#UAV

#WildfireTech

#Bioacoustics

#Hyperspectral

#Seedpods

#Mycorrhiza

#DataMules

#EmergencyMesh

#TetheredDrones

#ForestHealth

#EarlyWarning

#FieldApps

#ABotsCom

Copyright © Alpha Systems LTD All rights reserved.
