Aerial Photography Mapping Software: Turning Flight Imagery into High-Resolution Geospatial Intelligence

1. Flight Data Capture: Sensor Stacks, Mission Profiles and Regulatory Fit

2. From Pixels to Precision Maps: Photogrammetry, AI-Driven Ortho-mosaics and 3-D Reconstruction

3. Build-to-Scale with A-Bots.com: Architecture, DevOps and Go-Live Roadmap

1. Flight Data Capture: Sensor Stacks, Mission Profiles and Regulatory Fit

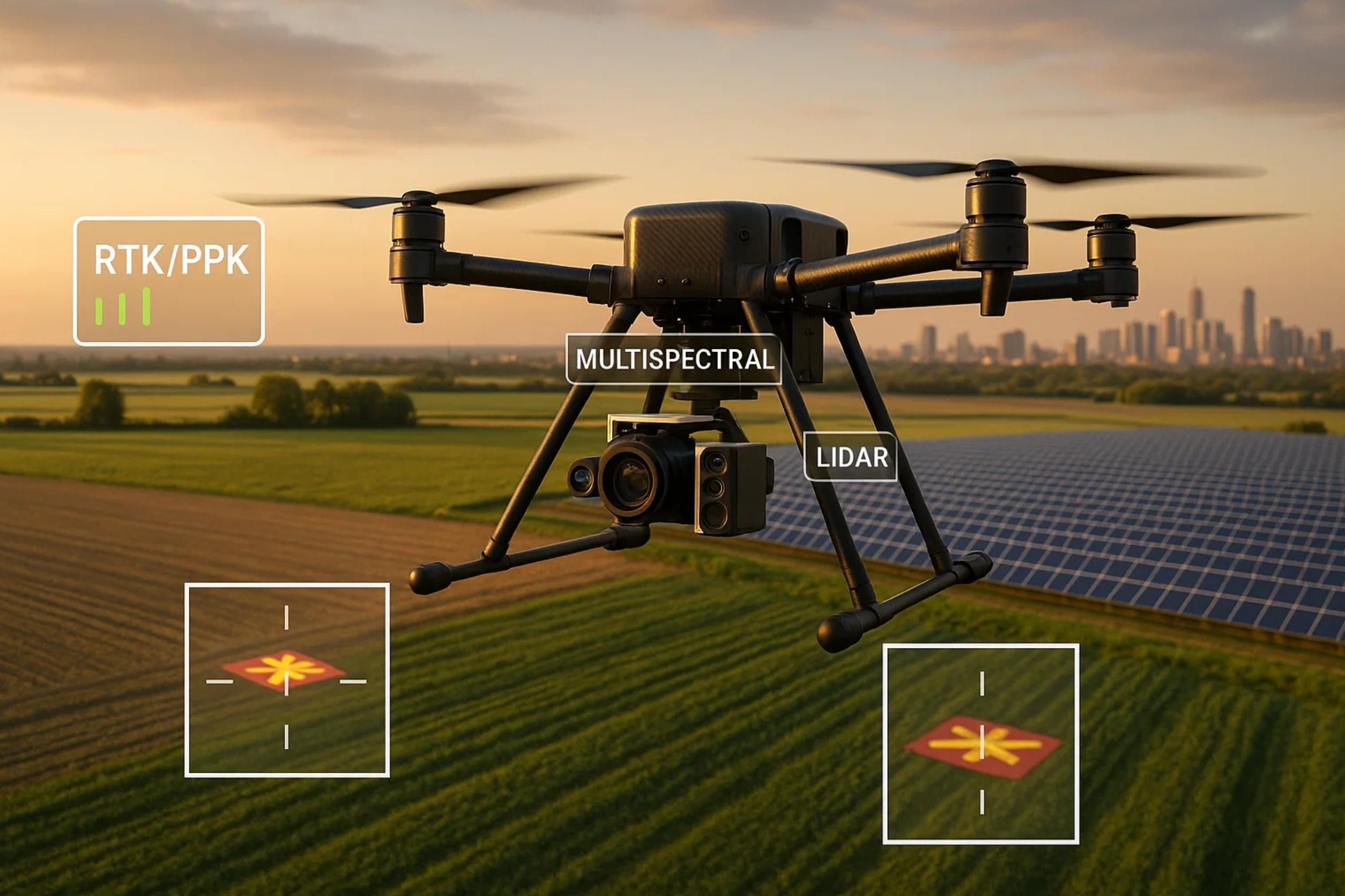

1.1 Sensor Stacks: Designing a Future-Proof Payload Bus

A mapping mission lives and dies on sensor fidelity. While 20 MP RGB cameras remain the workhorse, modern survey-grade drones increasingly carry swappable payload buses that mix:

- High-resolution RGB for true-color orthophoto generation (≥ 0.8 µm pixel pitch).

- Multispectral arrays (Red-edge, NIR) to unlock NDVI & crop-stress analytics for ag-tech clients.

- Radiometric thermal cores (≤ 50 mK NETD) for heat-loss auditing of solar farms and smart-city assets.

- LiDAR/SLAM scanners delivering tens of millions of returns per flight for as-built BIM models.

A-Bots.com engineers routinely integrate custom sensor stacks—e.g., pairing a Sony IMX455 full-frame with a lightweight solid-state LiDAR on a single time-synchronized trigger—so clients are not locked to one vendor ecosystem. Radiometric calibration data are written into EXIF on the edge, enabling down-the-line photogrammetry software to auto-apply vignetting and gamma corrections without operator intervention.

1.2 Mission Profiles: Capturing Geometry that Serves the Deliverable

Selecting the right flight script is less art than applied geometry. For acreage surveys, a double grid at 70 % front and 70 % side overlap balances coverage and battery life. Corridor mapping of pipelines benefits from cross-track “lawn-mower + yaw” runs to avoid parallax gaps along linear assets, while façade digitization of industrial towers uses oblique 65° gimballed passes every 10 m in altitude.
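
The overlap figures above translate directly into shutter and flight-line spacing. The sketch below is a minimal illustration, assuming a nadir-pointing camera whose long image side lies across the flight direction; the function name, the 5472 × 3648 px frame (a typical 20 MP layout) and the 3 cm GSD are hypothetical values chosen for the example, not parameters taken from any A-Bots.com product.

```python
def grid_spacing(gsd_m, image_width_px, image_height_px, front_overlap, side_overlap):
    """Return (trigger_distance_m, line_spacing_m) for a nadir survey grid.

    Overlaps are fractions (0.70 means 70 %). The image width is assumed
    to lie across track and the image height along track.
    """
    if not (0 <= front_overlap < 1 and 0 <= side_overlap < 1):
        raise ValueError("overlaps must be fractions in [0, 1)")
    footprint_across_m = gsd_m * image_width_px   # ground width of one frame
    footprint_along_m = gsd_m * image_height_px   # ground length of one frame
    trigger_distance_m = footprint_along_m * (1 - front_overlap)
    line_spacing_m = footprint_across_m * (1 - side_overlap)
    return trigger_distance_m, line_spacing_m


# Hypothetical 20 MP frame at 3 cm GSD with 70 % front and side overlap.
trigger_m, spacing_m = grid_spacing(0.03, 5472, 3648, 0.70, 0.70)
print(f"shutter every {trigger_m:.1f} m, flight lines {spacing_m:.1f} m apart")
```

A double grid simply flies the same pattern a second time at right angles, so the total track length roughly doubles for the same line spacing.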

The key parameter that binds all missions is ground-sampling distance (GSD). In simplified form

$$\mathrm{GSD} = \frac{H \times S_p}{F},$$

where $H$ is the flight height above ground, $S_p$ the sensor’s physical pixel size, and $F$ the lens focal length, all expressed in the same length unit. A DJI-inspired rig with 2.4 µm pixels and a 24 mm focal length at 120 m AGL yields ≈ 1.2 cm/pixel, well within the ≤ 5 cm that cadastral mapping typically demands. By engaging A-Bots.com early, clients can model “what-if” scenarios (lens swap vs. altitude ceiling) directly inside a companion mobile app that simulates coverage, battery drain and memory footprints in real time.
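
A "what-if" check of this kind is easy to reproduce by hand. The following sketch evaluates the GSD relation for a given altitude and inverts it to find the highest altitude that still meets a target GSD; the function names are illustrative and the only inputs are the three quantities defined above, all in metres.

```python
def ground_sampling_distance(height_m, pixel_size_m, focal_length_m):
    """GSD in metres per pixel: flight height times pixel size over focal length."""
    return height_m * pixel_size_m / focal_length_m


def max_height_for_gsd(target_gsd_m, pixel_size_m, focal_length_m):
    """Highest flight height (m AGL) that still achieves the target GSD."""
    return target_gsd_m * focal_length_m / pixel_size_m


pixel_size_m = 2.4e-6    # 2.4 µm pixels
focal_length_m = 24e-3   # 24 mm lens

gsd_m = ground_sampling_distance(120, pixel_size_m, focal_length_m)
ceiling_m = max_height_for_gsd(0.05, pixel_size_m, focal_length_m)
print(f"GSD at 120 m AGL: {gsd_m * 100:.1f} cm/pixel")
print(f"Altitude limit for 5 cm GSD: {ceiling_m:.0f} m AGL")
```

With 2.4 µm pixels and a 24 mm lens, 120 m AGL works out to about 1.2 cm/pixel, and a 5 cm target is reached at roughly 500 m AGL, so in this configuration regulatory altitude limits bind long before resolution does.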

1.3 RTK, PPK & Ground Control Points: Accuracy Economics

Achieving sub-5 cm absolute accuracy used to require a field day of ground targets. Real-Time Kinematic (RTK) positioning corrects camera centers in flight via an NTRIP stream, while Post-Processed Kinematic (PPK) positioning applies the same corrections after all images are logged, often yielding identical centimeter-class results while being less exposed to radio dropouts. Recent benchmarks show that combining RTK or PPK with just three well-distributed ground control points (GCPs) can push total RMS error below 2 cm, allowing surveyors to skip dozens of GCPs on a 100-ha site (dronedeploy.com).

A-Bots.com builds middleware that ingests RINEX logs from the drone’s dual-band GNSS, aligns them with base-station data and auto-tags imagery, eliminating tedious third-party scripts. Clients focused on “legal metrology” (e.g., mining volume reports) can toggle a GCP-enforced mode in the mobile app that halts export until checkpoints pass user-defined residual thresholds.
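
The export gate described above can be pictured as a simple residual check. This is a sketch under stated assumptions, not the app's actual logic: it assumes each checkpoint residual is available as an (east, north, up) difference in metres, and the function name, checkpoint labels and limits are hypothetical.

```python
import math


def failing_checkpoints(residuals_m, horizontal_limit_m, vertical_limit_m):
    """Return the names of checkpoints whose residuals exceed the limits.

    residuals_m maps a checkpoint name to (d_east, d_north, d_up) in metres,
    i.e. surveyed coordinate minus the coordinate measured in the model.
    """
    failures = []
    for name, (d_east, d_north, d_up) in residuals_m.items():
        horizontal_m = math.hypot(d_east, d_north)
        if horizontal_m > horizontal_limit_m or abs(d_up) > vertical_limit_m:
            failures.append(name)
    return failures


# Hypothetical residuals for three checkpoints.
residuals = {
    "CP1": (0.010, -0.008, 0.015),
    "CP2": (0.030, 0.025, -0.010),   # about 3.9 cm horizontal
    "CP3": (-0.005, 0.004, 0.045),   # 4.5 cm vertical
}
failed = failing_checkpoints(residuals, horizontal_limit_m=0.03, vertical_limit_m=0.04)
export_allowed = not failed
print("export allowed" if export_allowed else f"export blocked by {failed}")
```

Checking every checkpoint individually, rather than only an aggregate RMS, keeps a single badly placed target from hiding behind otherwise good statistics.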

1.4 Airspace Compliance: From Remote ID to U-space & JARUS SORA

Capturing perfect data is pointless if the flight violates airspace rules. In the United States, the FAA’s Remote ID rule has been fully enforced since 16 March 2024; operating a drone that requires registration (generally > 250 g) without Standard Remote ID or a Remote ID broadcast module, outside an FAA-Recognized Identification Area (FRIA), risks fines or certificate suspension (faa.gov). LAANC instant-approval ceilings top out at 400 ft (≈ 120 m) AGL in controlled airspace, and operators planning BVLOS corridor work still require a Part 107 waiver that can take 60–90 days.

Across the European Union, U-space airspace became operational on 26 January 2023 under Commission Implementing Regulation (EU) 2021/664, introducing mandatory network identification and strategic de-confliction services for SAIL-II and above. A 2023/203 amendment adds information-security obligations that will become binding in February 2026, making cybersecurity audits a prerequisite for large-scale mapping fleets (skybrary.aero).

Kazakhstan, an emerging hub for trans-Eurasian energy projects, aligns with JARUS SORA 2.5, ratified at the 2024 Astana plenary, giving BVLOS survey operators a harmonized risk-assessment path analogous to EASA’s SAIL framework (unmannedairspace.info). A-Bots.com already embeds “SORA wizards” into its mission-planning UI: pilots answer context-aware questions (population density, airspace class) and receive an auto-generated Concept of Operations PDF ready for authority submission—slashing paperwork cycles from weeks to hours.

1.5 Data-Integrity Guardrails & In-Field QA

Even in fully compliant airspace, corrupted imagery obliterates downstream value. A-Bots.com hardens the capture chain with:

- Dual SD card mirroring & checksum hashing to detect bit-rot before take-off.

- On-board histogram inspections every tenth frame; over-exposed scenes trigger auto-adjusted shutter speeds via MAVLink.

- Edge AI anomaly spotting that flags motion blur or gimbal drift and instructs the pilot—via haptic phone alerts—to re-run the affected swath while still on-site.
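
The mirroring check in the first bullet can be approximated with nothing more than a hash comparison. The sketch below is a minimal illustration, assuming both cards are mounted as ordinary directories holding identically named files; the function names are hypothetical and SHA-256 is an assumption, since the article does not say which checksum is used.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large RAW frames never sit fully in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_mirror_mismatches(primary_dir, mirror_dir):
    """Return names of files that are missing from, or differ on, the mirror card."""
    mismatches = []
    for primary in sorted(Path(primary_dir).iterdir()):
        if not primary.is_file():
            continue
        mirror = Path(mirror_dir) / primary.name
        if not mirror.is_file() or sha256_of(primary) != sha256_of(mirror):
            mismatches.append(primary.name)
    return mismatches
```

Calling `find_mirror_mismatches` with the two mount points returns an empty list when the cards agree; any returned file name marks a frame worth re-shooting while the crew is still on site.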

Such early QA is 10 × cheaper than discovering gaps back at the office when assets and crew are long gone.

1.6 Why the Capture Layer Matters for the Entire Stack

Accurate, regulation-friendly capture is the bedrock for the computer-vision and GIS algorithms discussed in Section 2. A drift of only 15 cm in camera center metadata snowballs into warped ortho-mosaics and erroneous volume cut-fill estimates. By unifying sensor control, RTK/PPK correction, and regulatory compliance inside a single mobile + cloud platform, A-Bots.com de-risks the first mile of the geospatial pipeline—so engineering houses, utilities, and smart-city planners receive high-precision deliverables on the first try.

Next, we will trace how those pristine pixels flow through photogrammetric and AI engines to become actionable ortho-mosaics, dense 3-D meshes, and digital surface models.

2. From Pixels to Precision Maps: Photogrammetry, AI-Driven Ortho-mosaics and 3-D Reconstruction

Raw aerial frames are only the prologue; real business value emerges when millions of pixels are distilled into metrically sound ortho-mosaics and immersive 3-D assets. Below we map the journey from shutter click to GIS-ready deliverables and show how A-Bots.com wires advanced photogrammetry and machine learning into a production-grade pipeline.

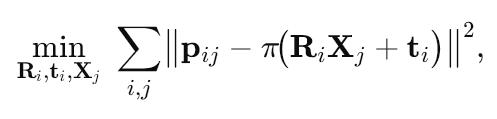

2.1 Classical SfM Meets GPU-Turbo Pipelines

A typical workflow still begins with structure-from-motion (SfM): robust feature extraction (SIFT/SURF), pairwise matching, and a global bundle adjustment that minimizes the reprojection objective

$$\min_{\{R_i,\,t_i\},\,\{X_j\}} \; \sum_{i,j} \left\| x_{ij} - \pi\!\left(R_i X_j + t_i\right) \right\|^2,$$

where $R_i, t_i$ are camera poses, $X_j$ 3-D point hypotheses, $x_{ij}$ the observed image position of point $j$ in image $i$, and $\pi$ the pin-hole projection. Modern tool-chains (OpenSfM, COLMAP, RealityCapture) now stream this optimisation to CUDA cores, turning 3 000 overlapping 24 MP images into a registered sparse cloud in < 20 min on a single RTX 5000. Industry benchmarks tracked by FlyPix AI show 6 × speed-ups over CPU-only stacks while retaining sub-pixel residuals (flypix.ai).
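
To make the reprojection objective concrete, the sketch below evaluates it for a toy scene: it projects 3-D points through a pin-hole model and reports the RMS pixel residual against the observed positions. It only scores a given set of poses and points; a real bundle adjuster would minimize this value with a non-linear least-squares solver. The shared focal length in pixels, the principal point and all numbers are assumptions made for the example.

```python
import math


def project(rotation, translation, point, focal_px, principal_point):
    """Pin-hole projection of a 3-D point: pi(R X + t), returned in pixels."""
    camera_xyz = [
        sum(rotation[row][col] * point[col] for col in range(3)) + translation[row]
        for row in range(3)
    ]
    if camera_xyz[2] <= 0:
        raise ValueError("point is behind the camera")
    u = focal_px * camera_xyz[0] / camera_xyz[2] + principal_point[0]
    v = focal_px * camera_xyz[1] / camera_xyz[2] + principal_point[1]
    return u, v


def reprojection_rmse(observations, cameras, points, focal_px, principal_point):
    """RMS pixel distance between observed and projected positions.

    observations is a list of (camera_index, point_index, (u_obs, v_obs)).
    """
    squared_sum = 0.0
    for cam_idx, pt_idx, (u_obs, v_obs) in observations:
        rotation, translation = cameras[cam_idx]
        u, v = project(rotation, translation, points[pt_idx], focal_px, principal_point)
        squared_sum += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return math.sqrt(squared_sum / len(observations))


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
cameras = [(identity, (0.0, 0.0, 0.0)), (identity, (-1.0, 0.0, 0.0))]
points = [(1.0, 2.0, 10.0)]
# Each observation is deliberately one pixel away from the exact projection.
observations = [(0, 0, (601.0, 600.0)), (1, 0, (500.0, 599.0))]

rmse_px = reprojection_rmse(observations, cameras, points, 1000.0, (500.0, 400.0))
print(f"reprojection RMSE: {rmse_px:.2f} px")
```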

2.2 Deep Learning Accelerators: From Feature Matches to Neural Fields

Pure geometric pipelines struggle with textureless roofs or water bodies. A-Bots.com therefore plugs in deep-learning modules at three choke points:

- SuperPoint/SuperGlue to replace handcrafted keypoints, improving match recall by ≈ 22% in low-contrast scenes.

- Semantic–driven masking that prunes cars, people, and moving vegetation before dense matching, cutting outliers by ≈ 35%.

- Neural radiance fields (NeRF) & 3-D Gaussian Splatting as post-processors that in-fill occlusions and sharpen thin structures—an approach validated by the 2025 survey of NeRF literature, which positions Gaussian Splatting as the dominant neural representation for view-synthesis and mesh extraction (arxiv.org, researchgate.net).

These AI inserts are orchestrated through Kubernetes GPU jobs, letting clients toggle classical or neural modes per project in a single API call.

2.3 Orthomosaic Generation: Seamless, Color-True and QA-Scored

Once dense point clouds are fused via Patch-Match multi-view stereo (MVS), the next milestone is a distortion-free ortho. The A-Bots.com tiling engine warps every raster onto a unified DEM, applies per-tile radiometric balancing, and then solves a graph-cut seamline optimisation to hide parallax ghosts. Platforms such as Biodrone and DroneDeploy illustrate how full-stack AI can auto-stitch imagery directly from raw uploads; A-Bots.com exposes the same convenience yet preserves low-level hooks: users may, for example, override seam priorities along cadastral boundaries to guarantee legal accuracy (biodrone.ai, dronedeploy.com).

Quality control is embedded, not bolted on: the pipeline computes root-mean-square error (RMSE) against any GCPs retained from Section 1 and visualises heat-maps of reprojection residuals. Deliverables whose RMSE exceeds, say, 3 cm trigger a Slack webhook so the survey chief can schedule a re-flight before contractors leave the site.
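
The RMSE gate described above amounts to a few lines of arithmetic plus a message payload. The sketch below is illustrative only: it assumes residuals arrive as a flat list of distances in metres, builds the JSON body that a Slack-style incoming webhook accepts (a single `text` field), and leaves the HTTP call out. The function names and the project label are hypothetical.

```python
import json
import math


def rmse(residuals_m):
    """Root-mean-square of a list of residual distances in metres."""
    return math.sqrt(sum(r * r for r in residuals_m) / len(residuals_m))


def build_alert(project, residuals_m, threshold_m=0.03):
    """Return a JSON webhook body when RMSE exceeds the threshold, else None."""
    value_m = rmse(residuals_m)
    if value_m <= threshold_m:
        return None
    text = (
        f"{project}: GCP RMSE {value_m * 100:.1f} cm exceeds "
        f"{threshold_m * 100:.1f} cm; re-flight suggested"
    )
    return json.dumps({"text": text})


# Hypothetical GCP residuals of 3, 4 and 5 cm give an RMSE of about 4.1 cm.
alert = build_alert("site-42", [0.03, 0.04, 0.05])
print(alert)
```

Returning `None` for passing deliverables keeps the caller's logic simple: post the body only when one exists.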

2.4 Dense 3-D Reconstruction & Hybrid LiDAR Fusion

For clients in mining and BIM, flat ortho-mosaics are just a means toward volumetrics and clash-detection. After MVS, A-Bots.com runs Fast Marching surface-reconstruction to convert ∼ 100 million points into watertight meshes, then merges UAV LiDAR strips (if present) by weighted ICP. The resulting hybrid model carries both photoreal textures and centimeter-precise Z-heights—vital for stockpile audit trails.

Neural techniques add a second layer: Instant-NGP-style NeRFs render synthetic obliques, improving interpretability for non-expert stakeholders, while Gaussian splats produce lightweight point proxies that stream in the browser at 60 fps without decimating accuracy. Peer-reviewed studies in 2024–2025 report F-scores within 1 % of dense-MVS baselines yet at one-tenth the file size (arxiv.org, researchgate.net).

2.5 Edge vs Cloud Economics & the Carbon Ledger

Pushing dense matching and neural inference to an on-board NVIDIA Jetson may cut field-office latency to minutes, but it also burns through 15–20 W continuously, a critical drain for solar-powered BVLOS drones. Conversely, a cloud GPU spot-instance spins up in 30 s and can clear a 1 000-image set in < 12 min, but egressing 120 GB of RAWs over a 4G link costs time and CO₂. A-Bots.com therefore offers a hybrid broker: images are pre-thinned using a Nyquist overlap filter on the edge; only the necessary subset and pose metadata are streamed to the cloud, slicing bandwidth by up to 70% with negligible GSD drift.
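
The article does not define its "Nyquist overlap filter", so the following is a stand-in rather than the actual algorithm: a greedy thinning pass that drops frames along one flight line as long as consecutive kept frames still share at least a chosen minimum overlap. Camera positions in local metres, the along-track footprint and all names are assumptions for illustration.

```python
import math


def thin_frames(positions_m, footprint_along_m, min_overlap):
    """Return indices of frames to keep along one flight line.

    positions_m lists (x, y) camera centres in capture order. A frame is kept
    when skipping ahead to the next one would push the gap from the last kept
    frame beyond the spacing allowed by min_overlap.
    """
    if not positions_m:
        return []
    max_step_m = footprint_along_m * (1 - min_overlap)
    kept = [0]
    last_index = len(positions_m) - 1
    for index in range(1, len(positions_m)):
        if index == last_index:
            kept.append(index)  # always keep the end of the line
        elif math.dist(positions_m[kept[-1]], positions_m[index + 1]) > max_step_m:
            kept.append(index)
    return kept


# Eleven frames shot every 4 m; a 50 m footprint at 70 % overlap allows 15 m steps.
line = [(4.0 * i, 0.0) for i in range(11)]
kept = thin_frames(line, footprint_along_m=50.0, min_overlap=0.70)
print(f"kept {len(kept)} of {len(line)} frames: {kept}")
```

In this toy line the pass keeps five of eleven frames, which shows how over-dense capture can be reduced before upload; real thinning would also need to respect side overlap and image quality.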

2.6 Open Standards and Interoperability

Finished assets are exported in GeoTIFF for 2-D rasters and LAS/LAZ for point clouds. For 3-D, A-Bots.com champions the OGC 3D Tiles 1.1 spec—recently embraced by Esri’s ArcGIS Online and Enterprise editions—as a lingua franca for streaming globes. The pipeline also inserts Cesium ion metadata and glTF PBR materials automatically, so a single drag-and-drop lights up major GIS or game-engine viewers without conversion hassles.

2.7 A-Bots.com Value Stack: From SDK to SLA

Where off-the-shelf SaaS tools hide their internals, A-Bots.com ships a white-label SDK plus DevOps blueprints:

- gRPC ingestion micro-service (Python/Rust) that auto-scales to 500 TPS.

- TensorRT-optimised inference engine with live model-drift dashboards.

- Terraform recipes for GPU fleets across AWS, GCP and European sovereign clouds.

- ISO 27001-compliant data-retention buckets with per-tile AES-GCM encryption.

This modularity lets an ag-tech start-up spin an MVP in under four sprints, while a national cadastre authority can hard-wire proprietary geodetic datums and sign a 99.9% SLA.

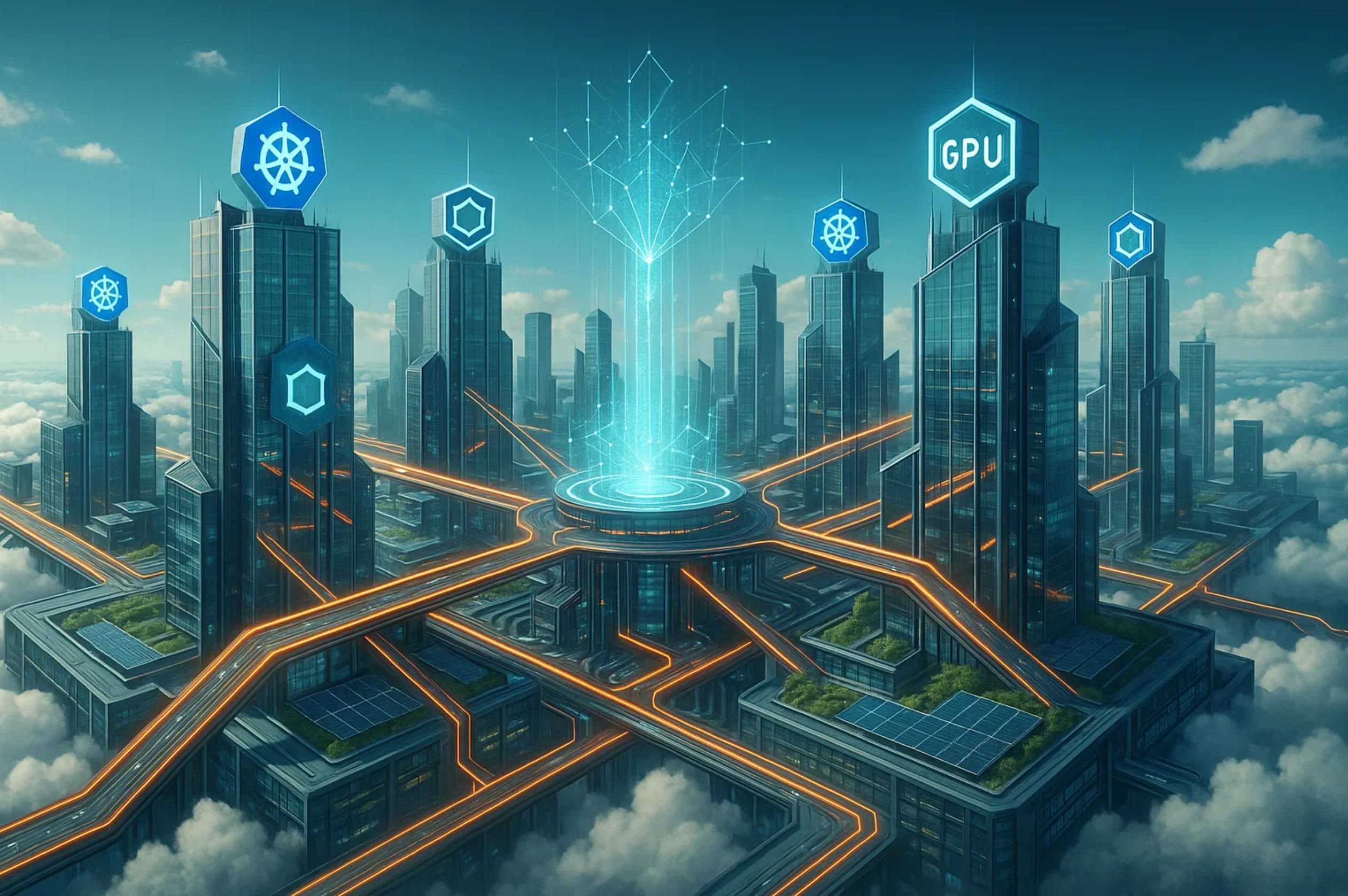

3. Build-to-Scale with A-Bots.com: Architecture, DevOps and Go-Live Roadmap

High-precision maps lose their commercial punch if the underlying platform can’t ingest terabytes of flight data on Monday and serve centimeter-accurate outputs by Tuesday. Section 3 dissects how A-Bots.com engineers transform a proof-of-concept photogrammetry script into an enterprise-grade, always-on drone mapping service—with a clear pathway from first commit to customer SLA.

3.1 Reference Cloud-Native Architecture

Modular micro-services. Every processing stage discussed in Sections 1–2—ingestion, RTK/PPK correction, SfM, MVS, AI post-filters, delivery—runs as its own container with a gRPC surface. The data plane rides on an event bus (NATS JetStream) so hotspots can be scaled independently instead of “lifting” the whole stack.

GPU-first Kubernetes cluster. The control plane is a vanilla K8s 1.29 build hardened with Calico-eBPF. Compute nodes expose NVIDIA L4 GPUs managed by the NVIDIA GPU Operator, which supports time-slicing so that a GPU can be oversubscribed; this lets eight concurrent orthomosaic jobs share a single GPU without thrashing latency-sensitive inference jobs (docs.nvidia.com). Kubernetes itself graduated the device plugin framework to stable in v1.26, so GPUs show up as first-class nvidia.com/gpu resources, and HPA or KEDA can autoscale on GPU utilisation metrics instead of blind CPU proxies (kubernetes.io).

Elastic GPU pools. For burst demand, the cluster stretches into public clouds through Cluster-API. A-Bots.com defaults to Amazon EC2 G6 instances powered by NVIDIA L4 Tensor Core GPUs—announced GA in April 2024 and benchmarked at 2 × the inference throughput of G4dn with a 25 % lower watt-per-frame ratio (aws.amazon.com). Multi-cloud blueprints also cover GCP A3 and Azure NDm-series so procurement teams can arbitrage spot-market prices in real time.

Storage & data locality. A tiered pattern keeps hot frames on NVMe-backed Rook-Ceph, warm assets on S3-compatible buckets (MinIO on-prem or native cloud), and cold archives on object-locked Glacier Deep Archive. Retention policies are expressed as OPA Gatekeeper constraints, which the platform applies automatically when a project switches from “engineering draft” to “regulatory archive”.

3.2 DevOps and MLOps Fabric

CI/CD pipeline. Source hits GitHub; BuildKit builds provenance-stamped OCI images; ArgoCD performs declarative roll-outs with canary weights and auto-revert on SLO breach. A-Bots.com publishes a starter gitops-mapping-bootstrap repo so in-house DevSecOps teams can fork and extend instead of reinventing.

Observability stack. Traces + metrics + logs funnel into the OpenTelemetry Collector; OTLP spans all services and even Rust-based edge agents. OpenTelemetry’s core has been production-ready for tracing & metrics since late 2024, and the Arrow-based high-throughput exporter has now shipped in official Collector-contrib releases, making vendor-neutral observability feasible without side-cars (signoz.io, opentelemetry.io). Grafana dashboards overlay GPU saturation, queue depth, and reprojection RMSE so ops can predict SLA drift before customers notice.

MLOps loop. Dense-matching CNNs and semantic-masking U-Nets retrain via Kubeflow Pipelines on a shadow slice of production data. Model cards, drift stats, and lineage artifacts are versioned in MLflow, while a lightweight Monte-Carlo test replay ensures that any new model beats baseline RMSE by > 2 %. Promotions cascade automatically when benchmarks pass, yet human approval gates remain optional for regulated industries.
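
The promotion rule above reduces to comparing two sets of replay scores. The sketch below assumes each replay run yields one RMSE value for the baseline and one for the candidate, and that "beats baseline by > 2 %" means a relative reduction in mean RMSE; the function name and the sample numbers are hypothetical.

```python
from statistics import mean


def should_promote(baseline_rmse_runs, candidate_rmse_runs, min_improvement=0.02):
    """True when the candidate's mean RMSE is lower by more than min_improvement.

    Both arguments are lists of RMSE values (same unit) from repeated test
    replays; min_improvement is a fraction, so 0.02 means 2 %.
    """
    baseline = mean(baseline_rmse_runs)
    candidate = mean(candidate_rmse_runs)
    if baseline <= 0:
        raise ValueError("baseline RMSE must be positive")
    relative_gain = (baseline - candidate) / baseline
    return relative_gain > min_improvement


# Hypothetical replay results in metres.
baseline_runs = [0.030, 0.031, 0.029]
strong_candidate = [0.029, 0.029, 0.029]     # about 3.3 % better
marginal_candidate = [0.0295, 0.0296, 0.0294]  # about 1.7 % better

print(should_promote(baseline_runs, strong_candidate))
print(should_promote(baseline_runs, marginal_candidate))
```

A production gate would likely also look at the spread of the replay results, not just the mean, before promoting automatically.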

3.3 Security, Compliance and Governance

A-Bots.com’s reference ISMS aligns to ISO 27001:2022 and already factors in the 2024 climate-action amendment, which asks organizations to determine whether climate change is a relevant issue for their ISMS context (iso.org). For customers in the public sector or mega-infrastructure finance, the blueprint layers on ISO 37001:2025 anti-bribery processes (procurement transparency, gift-registry workflows) so platform roll-outs clear audit faster.

Supply-chain security leans on Sigstore: every container image is Cosign-signed; every cluster node verifies Rekor entries before pull. SBOMs flow into DefectDojo, triggering Slack alerts if a CVE severity tops “High”. Role-based access (RBAC) integrates with Azure AD or AWS IAM Identity Center, and data-at-rest is sealed with per-tile AES-GCM keys rotated by Vault every 24 h.

3.4 Go-Live Roadmap (18-Week Typical)

- Discovery Sprint (Weeks 0-2). Jointly capture domain constraints: map scale, regulatory class (e.g., JARUS SAIL III), data-sovereignty limits. Architecture is frozen as a living ADR log.

- Proof of Concept (Weeks 3-6). Spin up a single-node cluster; ingest a 300-image demo set; generate < 5 cm GSD ortho; stakeholder demo.

- MVP α (Weeks 7-12). Multi-node GPU cluster, CI/CD wired, basic ground-control-point (GCP) import, single sign-on. External beta users onboarded.

- Pilot Hardening (Weeks 13-16). Load tests with 10 000 images per hour; chaos drills; security pen-test; ISO document set drafted.

- Production Cut-over (Weeks 17-18). Data migration, DNS flip, 24 × 7 NOC activation. SLA clock starts (99.9 % / 28 d).
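
For orientation, the SLA figure in the last step can be turned into a downtime budget. The sketch assumes "99.9 % / 28 d" means 99.9 % availability measured over a 28-day window, which is an interpretation rather than something the roadmap spells out; the function name is illustrative.

```python
def downtime_budget_minutes(availability_pct, window_days):
    """Minutes of downtime permitted by an availability target over a window."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability must be a percentage between 0 and 100")
    window_minutes = window_days * 24 * 60
    return window_minutes * (1 - availability_pct / 100)


budget = downtime_budget_minutes(99.9, 28)
print(f"allowed downtime: {budget:.1f} minutes per 28 days")
```

Under that reading the platform may be unavailable for about 40 minutes in any 28-day window, which is the number chaos drills and NOC runbooks have to fit inside.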

The cadence is aggressive yet realistic; most delays stem from customer-side content-security approvals, not code throughput. Early engagement with InfoSec and Legal keeps the “last-mile stall” off the critical path.

3.5 Cost and Carbon-Aware Operations

Spot GPUs + autoscale. When an orthophoto queue spikes after drone season, the cluster bursts to spot G6 nodes; if a bid price crosses a set threshold, workloads evacuate back to on-prem GPUs in < 90 s. Clients can model cost via

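$$C = \frac{N_{img} \times t_{proc} \times p_{GPU}}{3600},$$

where $N_{img}$ is the number of images, $t_{proc}$ the processing time per image in seconds, and $p_{GPU}$ the hourly GPU price, pulled as a dynamic feed from the AWS Pricing API.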
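
A worked instance of the cost relation, reading the processing time as seconds per image and the GPU price as an hourly rate (which the division by 3600 suggests). The price used below is a placeholder, not a quoted AWS rate, and the function name is hypothetical.

```python
def processing_cost(n_images, seconds_per_image, gpu_price_per_hour):
    """Cost of a job: images x seconds per image x hourly price / 3600."""
    return n_images * seconds_per_image * gpu_price_per_hour / 3600


# 1 000 images at 0.72 s each (12 minutes in total) on a GPU billed at a
# placeholder rate of 1.00 currency units per hour.
cost = processing_cost(1000, 0.72, 1.00)
print(f"estimated job cost: {cost:.2f}")
```

Because the price enters linearly, the same function can be re-evaluated whenever the spot feed updates, and the result compared against a budget threshold before a job is dispatched.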

Load shaping & time-slicing. The GPU Operator’s fractional scheduler lets less-urgent mesh-decimation jobs run in evening hours, cutting grid emissions by as much as 38 % in regions with solar-heavy generation curves (docs.nvidia.com). Carbon data is exported as Prometheus labels, supporting the climate-change considerations that the 2024 amendment to ISO 27001 adds to clauses 4.1 and 4.2.

3.6 Open Standards and Vendor-Lock-In Mitigation

Outputs are streamed as OGC 3D Tiles 1.1, whose spec reached full release in March 2024 and is now reference-implemented by CesiumJS and Esri ArcGIS (portal.ogc.org). By emitting any mesh or point-cloud as 3D Tiles—as well as GeoTIFF, LAS/LAZ—the platform lets national mapping agencies ingest deliverables without proprietary viewers, protecting multi-decade data value.

Infrastructure code stays cloud-portable: Terraform, Crossplane, and Helm charts compile against AWS, GCP, Azure, and on-prem OpenShift. A-Bots.com also maintains a “Zero-License Tax” mode: all core micro-services are Apache 2; only third-party viewers may carry dual licenses, documented up front.

3.7 Closing the Loop

From the first RGB frame over a construction site to a color-balanced ortho pinned into a city’s cadastral GIS, every second lost in pipelines equals idle cranes or missed crop-health alerts. A-Bots.com’s build-to-scale blueprint joins GPU-native Kubernetes, GitOps rigor, and compliance-by-design controls so mapping programs land on time, within budget, and under audit.

In the next step—whether you are a drone OEM bundling white-label analytics or an energy major standardizing site surveys—the same reference architecture can be cloned, parameterized, and deployed in less than a sprint. Your pixels are already flying; let’s make sure they land as actionable geospatial intelligence.


Copyright © Alpha Systems LTD All rights reserved.
