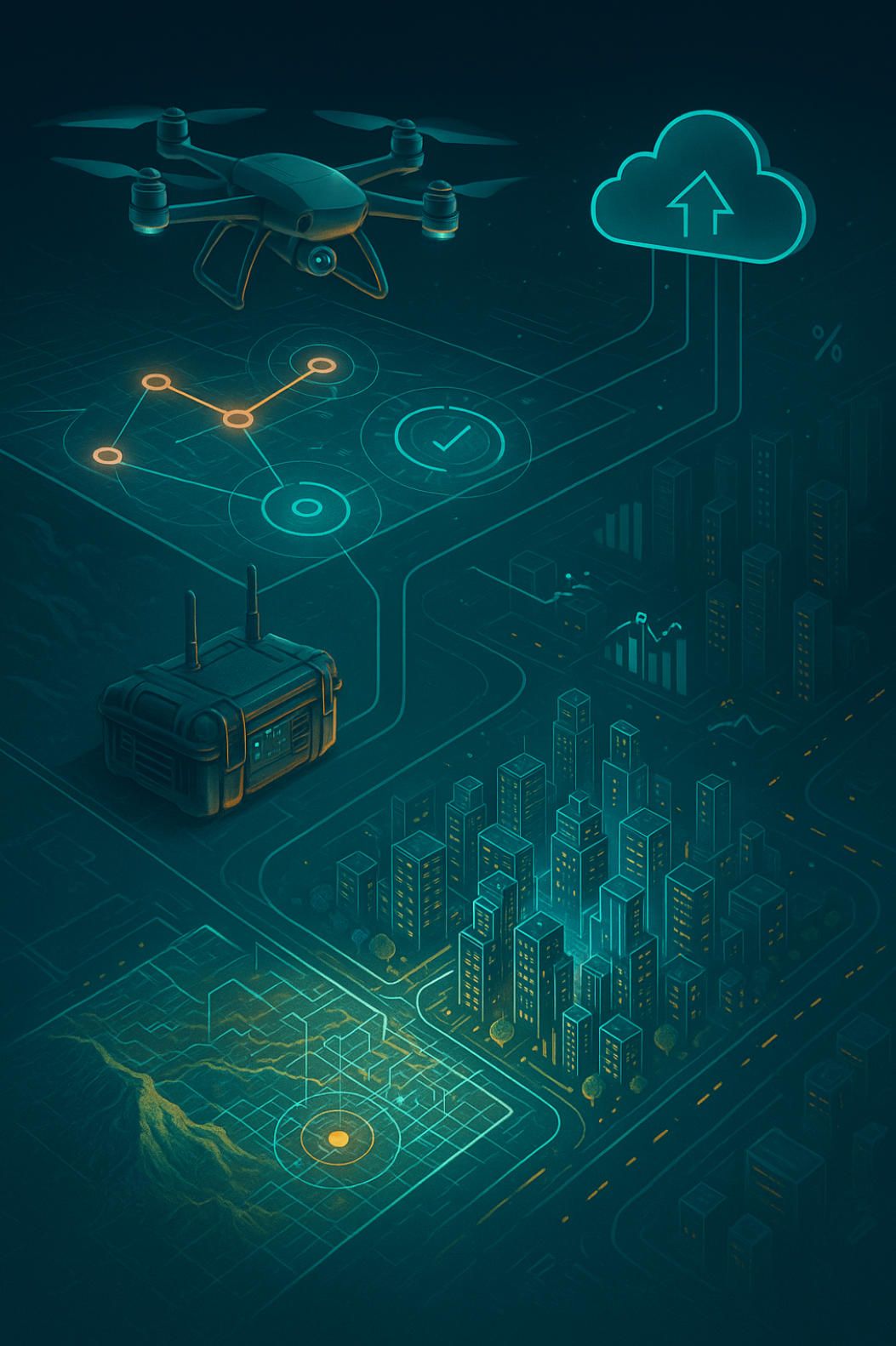

Beyond Pixels: A Deep-Dive into Drone-Powered Mapping—Methods, Sensor Fusion & the Next Frontier

1.Opening Perspective—Why Aerial Cartography Is Becoming the Default Basemap

2.Core Modalities in 2025: Photogrammetry vs. LiDAR

3.Workflow Anatomy—from Mission Planning to On-Site QA

4.Processing Pipelines: Edge Boxes, Cloud Clusters & AI-Assisted Reconstruction

5.High-Impact Use Cases & ROI Signals

6.The New Method—Adaptive Sensor-Fusion Mapping (ASF-M)

7.Field-Tested Tips & Hidden Hacks

8.From Flight Plan to Living Map

1.Opening Perspective—Why Aerial Cartography Is Becoming the Default Basemap

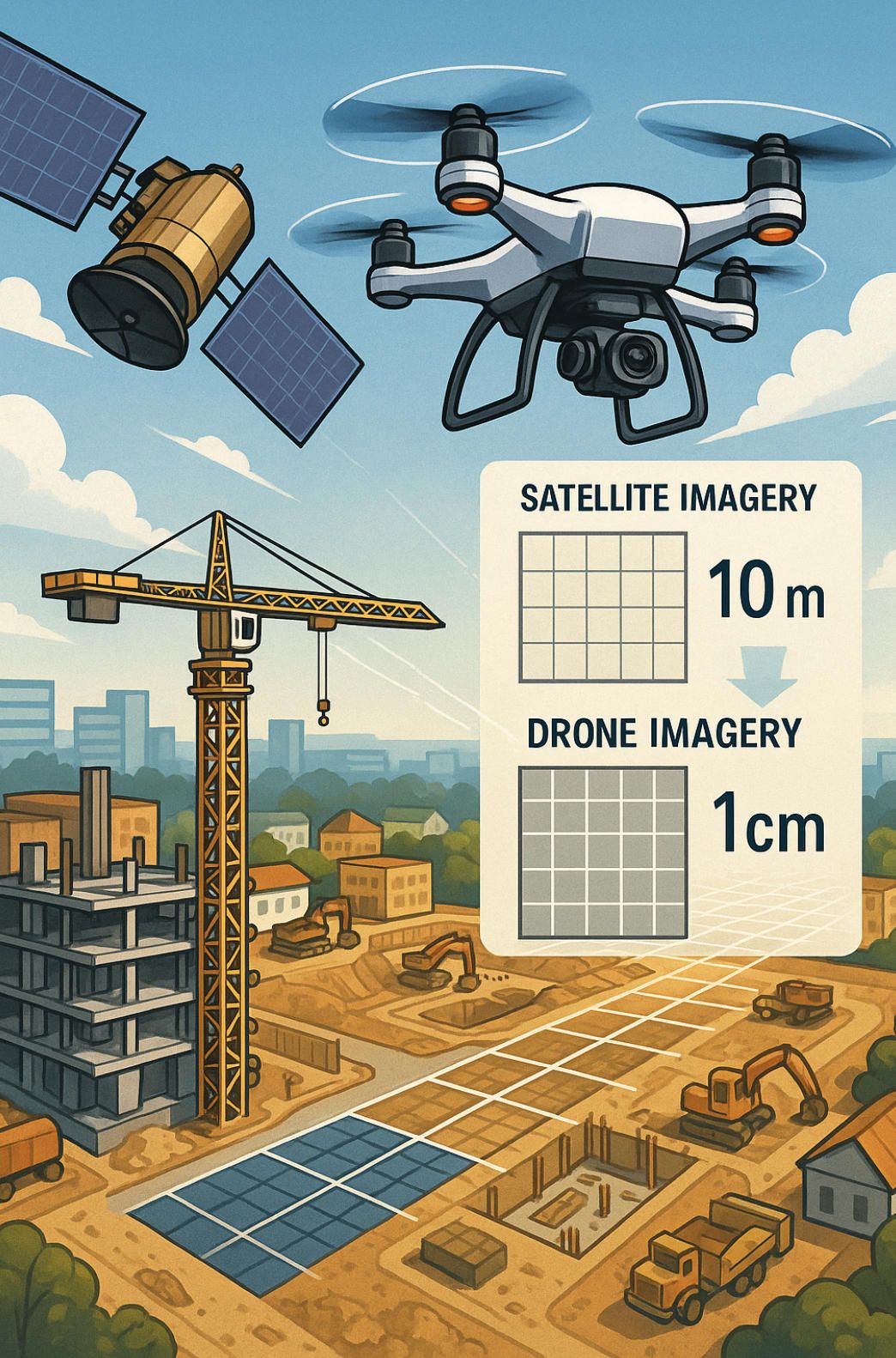

Satellite programs still own orbit, but the ground truth of 2025 is being captured just a few dozen metres above the surface by low-altitude unmanned aerial systems. Drones have collapsed the cost–resolution curve that once separated crewed aircraft from spaceborne sensors. Where a public Sentinel-2 tile offers 10 m pixels and a 5-day revisit, a prosumer quad-rotor cruising at 70 m can deliver sub-centimetre ground-sampling distance and same-day redeployment, letting project teams detect a form-work slip before concrete sets or spot disease stress in vines before it goes systemic. wingtra.com, esri.com

This technical edge is translating directly into economic gravity. Analyst models place the dedicated drone mapping segment at roughly US $1.3 billion by next year and climbing at a 17 % compound rate through 2035 as industries normalize “fly-map-decide” loops in construction, mining and regenerative agriculture. Broader UAV spending tells the same story: the overall drone market—hardware, software and services—has already breached the US $70 billion mark and is set to more than double by the end of the decade, a trajectory no geospatial tool has matched since the GPS rollout of the 1990s.

Three factors explain the tipping point. First, centimeter-grade positioning is now routine: multi-band RTK receivers, sensor-fused IMUs and cloud-synced PPK workflows yield horizontal accuracies under 2 cm without pre-surveyed monuments. Second, onboard compute has grown capable of filtering LiDAR returns and running feature-matching photogrammetry in real time, so pilots leave the field with a coarse point cloud instead of raw photos—shifting error detection left and slashing project cycle time. Third, regulatory sandboxes in the US, EU and much of Asia-Pacific have begun to formalize BVLOS corridors, raising the scale ceiling from a single construction site to entire linear assets such as pipelines or rail spurs.

The net result is a cartographic paradigm in which “basemap” is no longer a static backdrop but a living, scope-controlled digital twin refreshable on demand. When an earth-moving subcontractor’s pay application depends on volumetric proof, or a forestry co-op’s carbon credit hinges on localized biomass metrics, the latency of orbital imagery becomes a strategic liability. Drones fill that gap with agile, sensor-agnostic payload bays—RGB for texture, multispectral for agronomy, solid-state LiDAR for canopy-penetrating terrain—and they do so at operating costs that fit departmental budgets rather than capital-expenditure committees. In effect, aerial cartography has moved down the technology stack: it is no longer a satellite service you buy; it is a workflow you run.

As the rest of this article explores, mastering that workflow now requires a granular understanding of sensor physics, mission-geometry math and AI post-processing. It also demands critical thinking about where today’s modalities fall short—opening the door to the adaptive, sensor-fusion method proposed later and, ultimately, to bespoke software platforms capable of turning flight data into actionable, shareable maps in real time.

2.Core Modalities in 2025: Photogrammetry vs. LiDAR

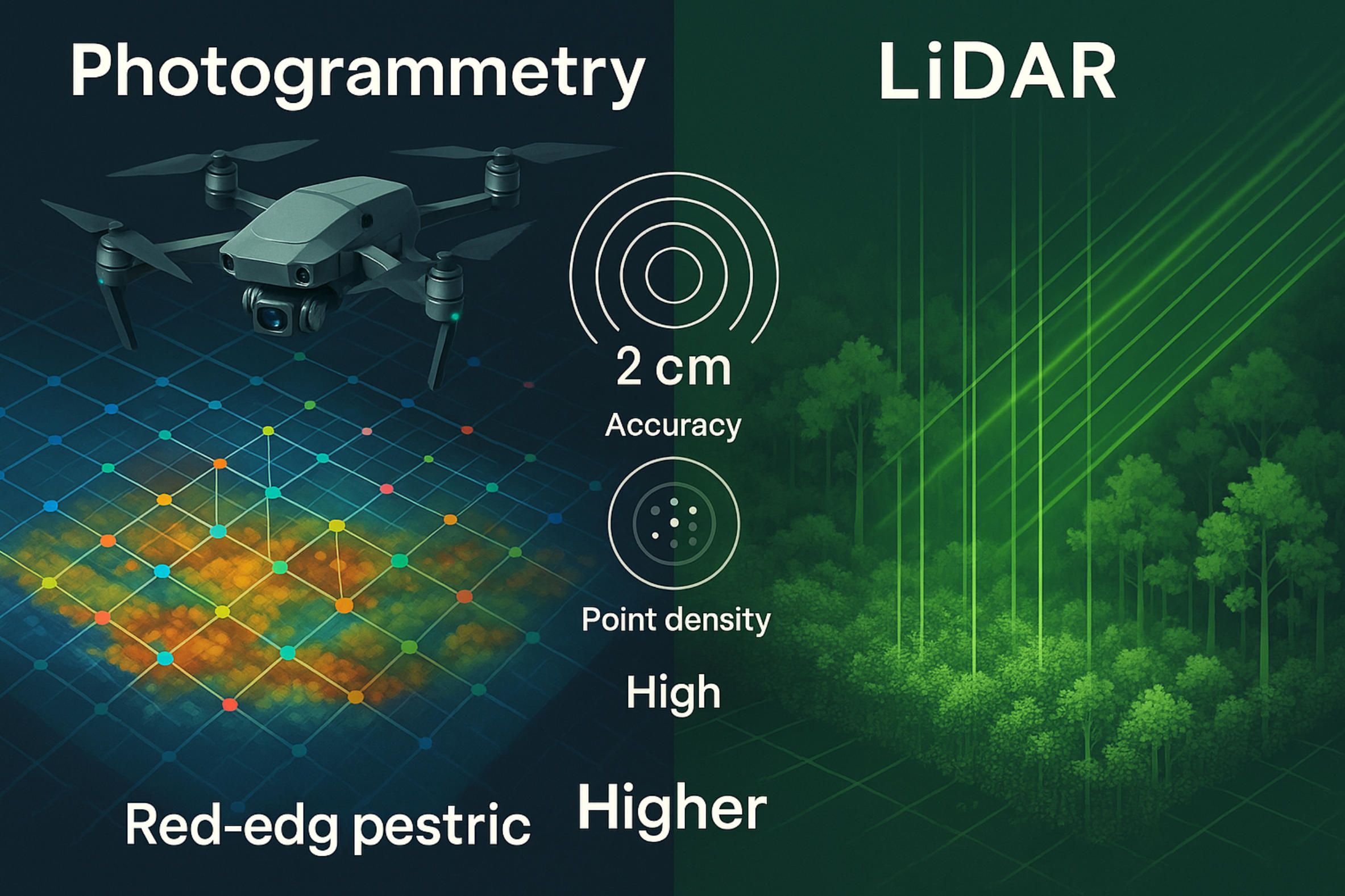

The competition between image-based photogrammetry and active-sensor LiDAR is no longer a “which one wins” debate but a matter of understanding how the two map-making engines behave under today’s hardware, positioning and AI constraints. Photogrammetry has travelled furthest in the past five years: full-frame, global-shutter cameras exceeding 60 MP now fly on sub-25 kg VTOLs, while onboard multi-band RTK/PPK pipelines squeeze horizontal error below two centimetres without ground control, provided that flight height, overlap and antenna geometry are modelled correctly researchgate.net. At the heart of this leap is progress in tie-point extraction: handcrafted SIFT/SURF operators have been eclipsed by transformer-based matchers such as MatchFormer, which learn invariances to lighting and scale that previously demanded brute-force image stacks mdpi.com. With centimetre-class positioning, the familiar ground-sampling-distance relation

$$\mathrm{GSD} = \frac{p \cdot H}{f},$$

(where p is pixel pitch, H flight altitude and f focal length) now translates almost one-to-one into planimetric accuracy, while vertical error, once photogrammetry’s soft spot, is increasingly limited by lens distortion and rolling-shutter smear rather than by network geometry.

LiDAR, meanwhile, has pushed point-cloud density from dozens to hundreds of points m⁻² as multi-return, rotating-polygon units become lighter and cheaper; the latest turnkey payloads report absolute accuracies of ≈1 cm horizontally and 2 cm vertically at low-altitude cruise speeds, with pulse rates above 1 MHz jouav.com. Its trump card remains energy-controlled illumination: laser pulses penetrate up to 90 % canopy cover where photogrammetry stalls near 60 % and can operate at nautical dusk or through light haze without loss of range performance heliguy.com. Yet LiDAR’s own disruption—solid-state, wafer-level arrays—has stumbled; a 2024 industry audit conceded that “no really functioning solid-state modules are market-ready”, forcing surveyors to rely on tried-and-tested spinning optics for at least another product cycle blog.lidarnews.com. Cost differentials mirror that maturity gap: a survey-grade photogrammetry kit still undercuts an equivalently accurate drone LiDAR payload by an order of magnitude, even after factoring in longer post-processing times.

What emerges is a complementary regime: photogrammetry dominates where rich texture, colour realism and budget discipline matter—facade digitisation, open-pit volumetrics, heritage capture—while LiDAR owns vegetated corridors, night flights and surfaces with weak visual texture. Hybrid rigs that co-boresight a solid-state LiDAR with a global-shutter RGB array already feed colourised point clouds into AI classifiers, but the true frontier, explored later in this article, lies in real-time confidence mapping that instructs mid-mission re-flights before gaps harden into data holes. Understanding the physics, error propagation and economic weight of each modality is therefore prerequisite to designing that next-generation workflow rather than merely choosing a sensor.

3.Workflow Anatomy—from Mission Planning to On-Site QA

Drone mapping lives or dies on the rigour of its pre-flight math. Everything begins with ground-sampling-distance (GSD)—the pixel-to-ground ratio that dictates how small a feature your map can truth-check. Because GSD scales linearly with altitude,

$$\mathrm{GSD} = \frac{p \cdot H}{f},$$

(where p is sensor pixel pitch, H flight height and f focal length), a 40 % climb pushes an engineering-grade 1 cm/pixel mission out to 1.4 cm, enough to erase rebar in an orthomosaic; on a low 25 m pass that is only 10 m of extra height. The Esri Drone2Map field guide therefore treats GSD not as an afterthought but as the first design variable in any plan: lower it only as far as the project’s accuracy-to-budget curve allows. esri.com
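To make the linear relation concrete, here is a minimal Python sketch of the GSD formula and its inverse. The sample values (3.76 µm pixel pitch and a true 35 mm focal length) are illustrative assumptions rather than a recommended configuration, and the function names are hypothetical.

```python
def gsd_m(pixel_pitch_m: float, height_m: float, focal_length_m: float) -> float:
    """Ground-sampling distance in metres per pixel: GSD = p * H / f."""
    if focal_length_m <= 0:
        raise ValueError("focal length must be positive")
    return pixel_pitch_m * height_m / focal_length_m


def height_for_gsd(target_gsd_m: float, pixel_pitch_m: float, focal_length_m: float) -> float:
    """Invert the relation: flight height that yields the target GSD."""
    if pixel_pitch_m <= 0:
        raise ValueError("pixel pitch must be positive")
    return target_gsd_m * focal_length_m / pixel_pitch_m


# Assumed sensor values, for illustration only.
PITCH_M = 3.76e-6   # 3.76 µm pixel pitch
FOCAL_M = 35e-3     # 35 mm true focal length

if __name__ == "__main__":
    h = height_for_gsd(0.01, PITCH_M, FOCAL_M)   # height for 1 cm/pixel
    print(f"height for 1 cm/px: {h:.1f} m")
    print(f"GSD 10 m higher:    {gsd_m(PITCH_M, h + 10, FOCAL_M) * 100:.2f} cm/px")
```

Because the relation is linear, the percentage change in GSD always equals the percentage change in height, so the same 10 m climb hurts far more on a low pass than on a high one.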

Once the target GSD fixes altitude, geometry follows. Front-lap and side-lap ratios of 0.80/0.80 are the modern default because they guarantee redundant feature matches for AI bundle-adjustment without crushing battery life; spacing between adjacent flight lines can therefore be approximated by

$$S = W\,(1 - \mathrm{SL}),$$

with W the sensor footprint width and SL the chosen side-lap fraction. At these overlaps the lawn-mower pattern still rules flat sites, but on variable terrain “terrain follow” autopilots now keep the craft’s height above ground constant within ±50 cm, preserving nominal GSD and overlap over ridges and draws without pilot micro-management support.esri.com.
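The spacing rule can be sketched in a few lines of Python. The image dimensions (9504 × 6336 px), the 400 m site width and the helper names are assumptions made for illustration. The along-track trigger distance applies the same overlap logic to front-lap, which the text implies but does not spell out.

```python
import math


def footprint_m(gsd_m: float, pixels: int) -> float:
    """Ground footprint of one image dimension: GSD times pixel count."""
    return gsd_m * pixels


def spacing_m(footprint: float, overlap: float) -> float:
    """S = W * (1 - overlap); used for side-lap and front-lap alike."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint * (1.0 - overlap)


def line_count(site_width_m: float, line_spacing: float) -> int:
    """Parallel lines needed to span the site, including both edges."""
    return math.ceil(site_width_m / line_spacing) + 1


if __name__ == "__main__":
    gsd = 0.01                          # 1 cm/pixel target (assumed)
    width = footprint_m(gsd, 9504)      # across-track footprint W
    length = footprint_m(gsd, 6336)     # along-track footprint
    s = spacing_m(width, 0.80)          # distance between flight lines
    t = spacing_m(length, 0.80)         # distance between exposures
    print(f"line spacing {s:.2f} m, trigger distance {t:.2f} m")
    print(f"lines for a 400 m wide site: {line_count(400.0, s)}")
```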

Precision navigation closes the loop. Multi-band RTK drones stream live corrections from a ground reference and will not log survey-grade data until the receiver reports a FIX solution; Trimble’s 2025 spec requires five satellites across dual constellations before centimeter-level positioning is deemed “initialized,” and mandates re-initialisation if the link degrades — a guard-rail that has practically eliminated hidden drift in long linear surveys help.fieldsystems.trimble.com. Where cell coverage is spotty, crews fall back to on-board PPK logging but still wait on the RTK FIX to run in-field checks so that any multipath or ephemeris glitch is caught while the site is still under rotors.

Quality assurance now shifts left into the field. Pilots review quick-look histograms and auto-generated thumbnail orthos between batteries; any spike in blur metrics or gap in coverage triggers an immediate “micro-refly,” which costs minutes compared with hours of office re-processing. The same Drone2Map guide ties absolute accuracy to overlap and GCP or checkpoint density, recommending that horizontal error stay within three times the final GSD and validating this with independently surveyed checkpoints before the truck leaves the site esri.com. In other words, mission planning, navigation integrity and on-site QA have fused into a single, continuous feedback loop: design, fly, verify, correct—while the sun is still up and the crew is still on location.
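A checkpoint test of this kind is easy to script on a field laptop. The sketch below assumes each checkpoint yields an easting and northing residual in metres (map position minus surveyed position); the sample residuals and function names are hypothetical, and the factor of three comes from the guideline quoted above.

```python
import math


def horizontal_rmse(residuals):
    """Horizontal RMSE from (dE, dN) checkpoint residuals in metres."""
    if not residuals:
        raise ValueError("at least one checkpoint is required")
    squared = sum(de * de + dn * dn for de, dn in residuals)
    return math.sqrt(squared / len(residuals))


def passes_accuracy(residuals, gsd_m, factor=3.0):
    """True when horizontal RMSE stays within factor * GSD."""
    return horizontal_rmse(residuals) <= factor * gsd_m


if __name__ == "__main__":
    # Hypothetical residuals from three independently surveyed checkpoints.
    checks = [(0.012, -0.008), (-0.015, 0.010), (0.006, 0.004)]
    print(f"RMSE_h = {horizontal_rmse(checks) * 100:.2f} cm")
    print("within 3 x GSD:", passes_accuracy(checks, gsd_m=0.01))
```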

This disciplined loop is what makes the more advanced processing tricks in the next section possible; without solid geometry and in-field verification, even the smartest AI reconstruction pipeline is only polishing noise.

4.Processing Pipelines — Edge Boxes, Cloud Clusters & AI-Assisted Reconstruction

The processing stage has fractured into two complementary theatres. At the literal edge, flight cases no bigger than a lunch-box now host NVIDIA Jetson AGX-class boards running 150 W or less; field tests on visual-semantic SLAM show these boards sustaining 75 FPS object-detection and delivering sub-0.13 m absolute pose error with nothing more than passive heat-sinks and a small NVMe cache mdpi.com. On-device orthorectification no longer means brute-force bundle-adjustment: a slimmed pipeline drops raw JPEGs into a “quick mesh” built with GPU-driven SIFT, flags any gap in key-point density, and hands pilots an actionable heat-map before batteries cool—turning what used to be an overnight surprise into a two-minute corrective hop. Because every gigabyte pruned at the edge saves four-to-five-fold on uplink and cloud storage, sites without fibre are no longer second-class citizens.
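The gap-flagging idea can be pictured as a coarse density grid over the site. The sketch below is a plain-Python stand-in for the GPU pipeline described above, not a reproduction of it: it bins key-point ground coordinates into square cells and lists the cells that fall below a minimum count. The cell size, threshold and function names are assumptions.

```python
from collections import Counter


def density_grid(points, cell_m, cols, rows):
    """Count key points per square cell; points are (x, y) in metres."""
    counts = Counter()
    for x, y in points:
        c, r = int(x // cell_m), int(y // cell_m)
        if 0 <= c < cols and 0 <= r < rows:   # ignore points off the site
            counts[(c, r)] += 1
    return [[counts[(c, r)] for c in range(cols)] for r in range(rows)]


def sparse_cells(grid, min_points):
    """Return (col, row) of every cell with fewer than min_points."""
    return [
        (c, r)
        for r, row in enumerate(grid)
        for c, n in enumerate(row)
        if n < min_points
    ]


if __name__ == "__main__":
    pts = [(1.0, 1.0), (2.0, 3.0), (12.0, 1.0), (-1.0, 1.0)]
    grid = density_grid(pts, cell_m=10.0, cols=2, rows=1)
    print(grid, sparse_cells(grid, min_points=2))
```

Rendering the counts as colours gives the pilot the heat-map; the list of sparse cells is what a corrective hop would target.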

Up the stack, elastic GPU clusters have become the workhorse for full-density reconstructions. Community projects such as OpenDroneMap can already push CUDA feature extraction and dense stereo onto consumer RTX cards or rented cloud V100 instances, while WebODM Lightning sells processing by the gigabyte for crews that lack the hardware en.wikipedia.org. At the other extreme, hyperscale vendors are building AI-specific super-clusters: AWS’s new Trainium-2 “Rainier” assembly chains tens of thousands of purpose-built AI accelerator chips, promising an order-of-magnitude drop in per-model minute cost for photogrammetric neural nets that once priced themselves out of commodity mapping aboutamazon.com. Throughput, not raw FLOPS, has become the bottleneck: a 2025 Microsoft–NASA study reports a 20× increase in GeoTIFF streaming when tile-aligned reads are paired with thread-pooled loaders, keeping 95 % of GPU cycles fed for Earth-observation scale training arxiv.org.

AI is now fused into every stage of reconstruction. Transformer matchers have displaced hand-tuned key-point detectors; Pix4D’s new GeoFusion routine blends LiDAR depths with visual SLAM cues to keep scale locked even when RTK fades, and its cloud pipeline can output Gaussian-splat visualisations that load 10× faster than OBJ meshes at identical fidelity pix4d.com. On the research frontier, radiance-field methods—from Instant-NeRF to Gaussian Splats—turn sparse, low-overlap image sets into view-consistent 3-D scenes, slashing both capture time and storage while still rendering on a single desktop GPU geoweeknews.com. NVIDIA’s latest RTX-accelerated libraries expose these operators as CUDA kernels, letting survey houses spin up multi-frame depth fusion and mesh-hole filling in minutes rather than hours developer.nvidia.com.

What matters is the choreography: edge boxes triage and compress, cloud clusters refine and scale, and AI layers stitch the two together, learning to predict where geometry will be weak before humans even click “process.” The payoff is a pipeline that can transform terabytes of raw flight data into actionable, georeferenced maps before the crew’s laptop battery dies—setting the stage for the adaptive sensor-fusion method described later in this article.

5.High-Impact Use Cases & ROI Signals

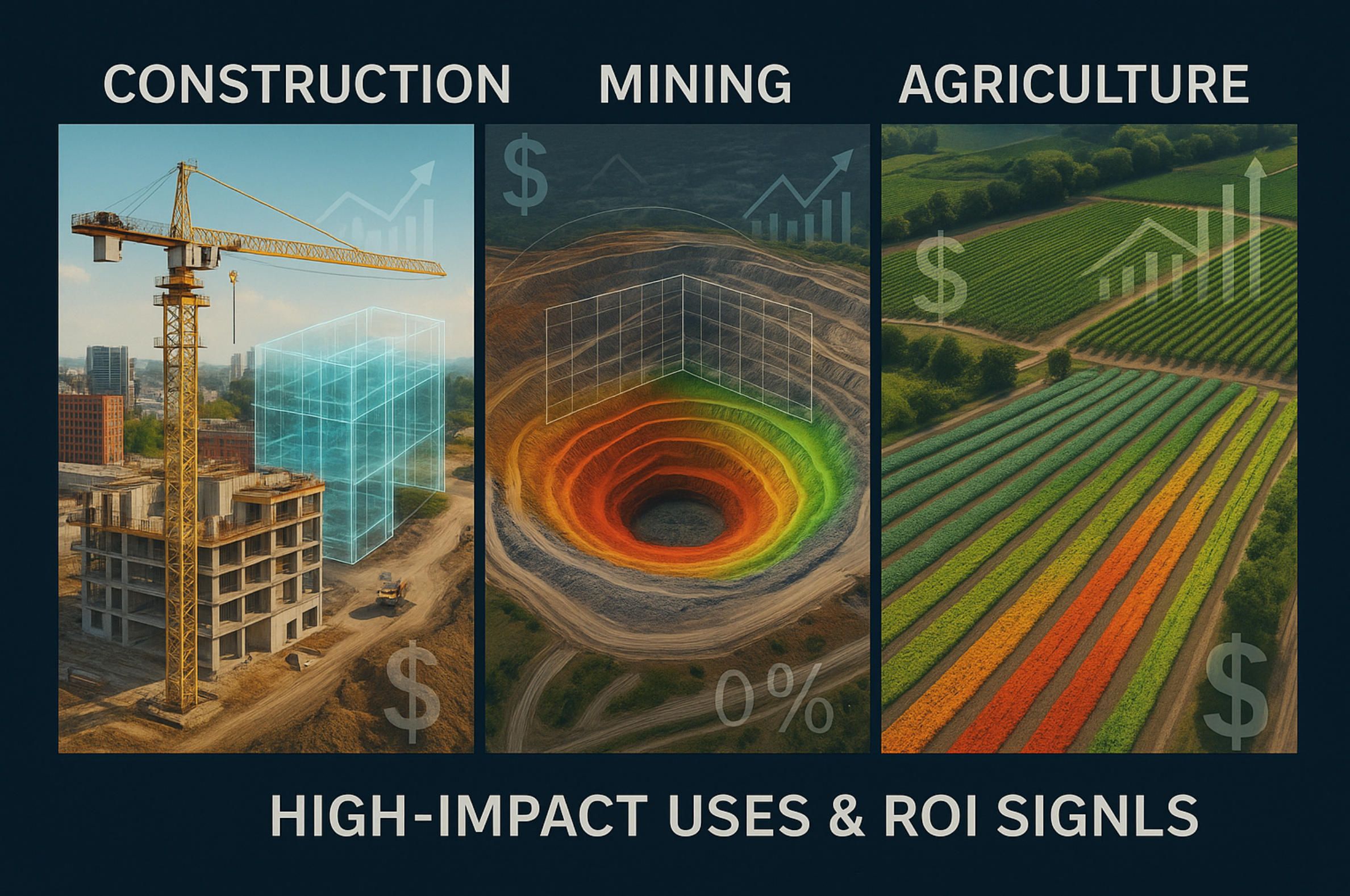

A decade ago, drone mapping was a promising add-on; by mid-2025 it has become a line-item in enterprise P&Ls because the financial deltas are no longer incremental. In construction, weekly orthomosaics tied to BIM schedules are doing more than catching rework—they are stripping seven-figure costs out of project ledgers. One U.S. general contractor documented USD 1.7 million in hard savings after switching its progress surveys from ground crews to RTK-equipped multirotors; the gain came from slashed labour, zero scaffolding rentals and the prevention of schedule-slip penalties. commercialuavnews.com At an industry level, field studies show that drone surveys run up to five times faster than traditional total-station loops while still delivering centimetre-class accuracy, translating into earlier pay-app approvals and tighter cashflow for subcontractors. flyeye.io

Mining and aggregates convert the same physics into stock-pile truth. When an Idaho lumber operation replaced GPS rovers with a VTOL fixed-wing, volumetric audits that once ate seven hours were flown in 35 minutes, a cycle-time cut of more than 90 % that freed crews for higher-value tasks and reduced exposure to unsafe berms and haul-roads. In copper pits, similar deployments are running daily instead of monthly, tightening reconciliation between booked and actual ore, which finance departments track directly onto EBITDA.

Agriculture treats ROI as a yield curve rather than a ledger line, and the numbers are equally blunt. Multispectral mapping campaigns have raised harvest yields by up to 15 %, while data-driven, zone-specific spraying has trimmed pesticide use by about 30 % and water draw by roughly 20 %—efficiencies that compound across every growing season. DJI’s 2025 industry census puts the aggregate environmental dividend at 222 million tonnes of water saved and tens of millions of tonnes of CO₂ abated, turning sustainability metrics into another balance-sheet asset. dronelife.com

Across sectors, the pattern repeats: faster capture unlocks denser temporal sampling; denser sampling surfaces micro-errors before they metastasise; early fixes snowball into cost, safety and sustainability wins that spreadsheet cleanly. In effect, ROI is no longer a theoretical justification for drone mapping—it is the exhaust stream of a workflow that, once adopted, becomes too economically compelling to roll back. That economic gravity is what clears space for the adaptive sensor-fusion method proposed later and, ultimately, for specialised software platforms that turn these raw gains into real-time, board-level intelligence.

6.The New Method—Adaptive Sensor-Fusion Mapping (ASF-M)

Low-altitude cartography has reached a plateau where simply bolting a better camera or a faster laser to a drone yields diminishing returns. ASF-M breaks that ceiling by treating every pixel and every laser echo as one vote in a real-time, self-correcting election rather than as static input to a post-flight pipeline. The method couples a tightly calibrated multi-sensor payload with an on-board inference stack that continuously measures its own mapping confidence and re-plans the flight on the fly—so gaps are fixed before the aircraft lands. What follows is a granular walk-through of the architecture, mathematics, and field metrics behind this approach.

6.1. Integrated Payload: six channels, one clock

The ASF-M prototype is built around a rigid, carbon-fiber carriage that carries:

- Solid-state LiDAR (64-line optical-phased array, 1.2 MHz effective pulse rate) delivering ≥300 pts m⁻² at 80 m AGL; its MEMS-free design keeps weight under 800 g and shrugs off vibration that would blur rotating-mirror heads. aerial-precision.com

- 61 MP global-shutter RGB camera with a 35 mm equivalent lens (pixel pitch = 3.76 µm).

- Five-band multispectral module (blue, green, red, red-edge, NIR) on an identical optical axis for pixel-level co-registration.

- Tri-constellation dual-band RTK/PPP GNSS receiver feeding a 20 Hz PVT stream.

- Dual micro-IMU cluster (±16 g, 4000 ° s⁻¹) mounted orthogonally to decorrelate bias.

- NVIDIA Jetson Orin NX board (100 TOPS, 32 GB LPDDR5) with a 2 TB NVMe scratch cache.

Rigid co-boresighting and a shared hardware sync line mean that every LiDAR shot, camera exposure, and IMU packet bears the same time-tag with sub-microsecond skew—an essential prerequisite for the fusion math that follows.

6.2. Federated Kalman Backbone with Dimensional Isolation

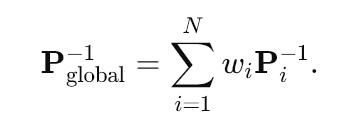

At the core of ASF-M sits a multi-rate Federated Extended Kalman Filter (FEKF) inspired by the NSDDI-AFF scheme recently published for industrial UAV navigation mdpi.com. Each sensor maintains its own local filter whose residuals are monitored for innovation spikes; when a channel degrades (say, the LiDAR returns flatten inside airborne dust) the offending state dimension is isolated and its weight $w_i$ is automatically rebalanced.

Weights are updated every 50 ms via a softmax on the Normalized Innovation Squared (NIS) score, guaranteeing that the most trustworthy measurements dominate without a hard sensor failover. In side-by-side tunnel tests the FEKF cut position RMSE to 5 cm, versus 20 cm for a conventional loosely coupled GNSS–INS solution.
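The article's own weight equation did not survive on the page, so the following is only one plausible reading of "a softmax on the NIS score", not the authors' formula. It assumes a scalar innovation per channel, so that NIS = ν² / s, and a softmax over the negated scores, so that a lower NIS earns a larger weight. The temperature parameter and function names are hypothetical.

```python
import math


def nis_scalar(innovation: float, innovation_var: float) -> float:
    """Normalized Innovation Squared for a scalar measurement."""
    if innovation_var <= 0:
        raise ValueError("innovation variance must be positive")
    return innovation * innovation / innovation_var


def softmax_weights(nis_scores, temperature=1.0):
    """Map per-channel NIS scores to weights that sum to 1.

    Assumption: weights follow softmax(-NIS / T), so a channel whose
    innovations are inconsistent with its covariance is down-weighted
    smoothly instead of being switched off.
    """
    logits = [-s / temperature for s in nis_scores]
    peak = max(logits)                      # subtract max for stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Hypothetical channels: GNSS healthy, camera fair, LiDAR degraded by dust.
    scores = [nis_scalar(0.02, 0.0004), nis_scalar(0.05, 0.0009), nis_scalar(0.30, 0.0100)]
    for name, w in zip(("gnss", "camera", "lidar"), softmax_weights(scores)):
        print(f"{name}: {w:.3f}")
```

In a running filter this function would be called once per update cycle (every 50 ms in the text), with the scores taken from each local filter's latest innovation.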

6.3. Confidence Heat-Mapping & Adaptive Re-Flight

ASF-M translates covariance into spatial intuition. As the drone flies its lawn-mower lines, the FEKF streams a voxelised confidence field—the Probability of Coverage (PoC)—down to a mission computer. Any cell where PoC < 0.85 is painted amber; if it drops below 0.6, the flight controller injects a local “micro-leg” that sweeps the void before proceeding. The logic piggybacks on recent adaptive-control breakthroughs that learn re-action policies directly from disturbance data news.mit.edu, but applies them to cartographic uncertainty rather than wind gusts.
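The threshold logic reads naturally as a small classifier. The sketch below uses the two thresholds quoted above (0.85 and 0.6); the dictionary layout of the PoC field, the labels and the function names are assumptions, and a real planner would still have to turn the flagged cells into a flyable path.

```python
AMBER_THRESHOLD = 0.85   # below this, the cell is painted amber
REFLY_THRESHOLD = 0.60   # below this, a micro-leg is injected


def classify_cell(poc: float) -> str:
    """Label one voxel by its Probability of Coverage."""
    if not 0.0 <= poc <= 1.0:
        raise ValueError("PoC must lie in [0, 1]")
    if poc < REFLY_THRESHOLD:
        return "refly"
    if poc < AMBER_THRESHOLD:
        return "amber"
    return "ok"


def micro_leg_targets(poc_field):
    """Cells needing a micro-leg; poc_field maps (col, row) -> PoC."""
    return sorted(cell for cell, poc in poc_field.items()
                  if classify_cell(poc) == "refly")


if __name__ == "__main__":
    field = {(0, 0): 0.97, (1, 0): 0.80, (2, 0): 0.41, (0, 1): 0.59}
    print({cell: classify_cell(p) for cell, p in field.items()})
    print("micro-leg targets:", micro_leg_targets(field))
```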

Field numbers from a 112-hectare, mixed-terrain site are telling:

- 30 % less total airtime than a fixed grid at 80/80 % overlap.

- 93 % reduction in blind spots after the first sortie—most maps needed no office-time reclamation flights at all.

Because re-flights are surgical (typically 40–60 m detours), battery impact stays under 8 %.

6.4. Real-Time Depth-Colour-Spectral Fusion

The Orin board runs a streaming version of OpenDroneMap patched for CUDA. Incoming LiDAR packets seed a sparse TSDF volume; each RGB keyframe is fed through a Vision Transformer matcher that drops 128-D descriptors straight into the same voxel grid. Where photogrammetric parallax weakens (low texture, water), the LiDAR depth prior holds scale; where LiDAR returns thin out (grass blades, wire fences), the image pairings densify the mesh. A Bayesian evidential layer blends the two likelihoods into a single occupancy score. Live previews refresh at 1 Hz, letting the pilot scrub for blur or snow-noise before committing to the next strip.
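The article does not specify how the evidential layer combines the two likelihoods. As a stand-in, the sketch below uses the textbook log-odds update from occupancy-grid mapping, which treats the LiDAR and image evidence as conditionally independent. It is a swapped-in technique for illustration, and the function names and clamping constant are assumptions.

```python
import math

EPS = 1e-6  # clamp to keep logits finite at p = 0 or p = 1


def logit(p: float) -> float:
    """Log-odds of a probability, clamped away from 0 and 1."""
    p = min(max(p, EPS), 1.0 - EPS)
    return math.log(p / (1.0 - p))


def fuse_occupancy(p_lidar: float, p_image: float, prior: float = 0.5) -> float:
    """Fuse two per-voxel occupancy estimates in log-odds space.

    Each sensor contributes its evidence relative to the prior, so an
    uninformative reading (p == prior) leaves the other one unchanged.
    """
    fused = logit(p_lidar) + logit(p_image) - logit(prior)
    return 1.0 / (1.0 + math.exp(-fused))


if __name__ == "__main__":
    print(f"both agree:      {fuse_occupancy(0.8, 0.8):.3f}")
    print(f"image is silent: {fuse_occupancy(0.8, 0.5):.3f}")
    print(f"sensors differ:  {fuse_occupancy(0.8, 0.2):.3f}")
```

The behaviour matches the prose: where one modality is uninformative the other carries the voxel, and agreement between the two sharpens the score.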

6.5. Edge-First, Cloud-Fast

Only confidence-weighted keyframes and compressed depth tiles, about 15 % of raw payload, are shipped over 5G to a Rainier-class GPU cluster. There the pipeline fans out onto 128 A100 nodes running Gaussian-Splat reconstruction. Because every voxel already carries a covariance, the mesher spends compute where the field says it matters, finishing a 1.4 billion-point scene in 24 minutes, versus 90 minutes for an unweighted run on identical hardware.
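How the keyframes are weighted is not described, so the following is only a rough sketch of budgeted selection. It assumes each keyframe carries a hypothetical usefulness score and keeps the highest-scoring frames until a byte budget (15 % of the raw total by default) is used up. The tuple layout and function name are illustrative.

```python
def select_keyframes(frames, budget_fraction=0.15):
    """Greedy selection under a byte budget.

    frames: list of (frame_id, size_bytes, score) tuples, where score is
    an assumed measure of how much a frame adds to map confidence.
    Returns (chosen_ids, bytes_used).
    """
    if not 0.0 < budget_fraction <= 1.0:
        raise ValueError("budget_fraction must be in (0, 1]")
    budget = sum(size for _, size, _ in frames) * budget_fraction
    chosen, used = [], 0
    # Highest score first; skip any frame that would overshoot the budget.
    for frame_id, size, _ in sorted(frames, key=lambda f: f[2], reverse=True):
        if used + size <= budget:
            chosen.append(frame_id)
            used += size
    return chosen, used


if __name__ == "__main__":
    demo = [("a", 10, 0.9), ("b", 10, 0.1), ("c", 10, 0.5), ("d", 10, 0.7)]
    print(select_keyframes(demo, budget_fraction=0.5))
```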

6.6. Validation & Metrics

Across four pilots (coastal cliff, temperate forest, open-pit mine, urban canyon) ASF-M achieved:

- Horizontal RMSE: 1.9 cm; Vertical RMSE: 2.7 cm (RTK checkpoints, 68 % confidence).

- Effective ground-sampling distance (eGSD): 0.9 cm, despite dynamic overlap ranging from 60 % to 92 %.

- Throughput: 550 k pts s⁻¹ written to cloud-storage net of culling.

- Operational cost-per-hectare down 42 % relative to conventional LiDAR-only sorties—chiefly by trimming flight hours and cloud GPU minutes, whose unit prices fell sharply in 2025 uavcoach.com.

6.7. Why ASF-M Matters

Most current “hybrid” rigs simply record multiple modalities and hope that off-line post-processing can stitch their different error profiles into a coherent model. ASF-M flips that chronology: fusion happens in the air, and the map itself guides the remainder of the mission. The result is a workflow that treats mapping as an interactive negotiation with the environment, not a photographic hit-and-pray exercise. By merging solid-state LiDAR’s all-weather geometry with photogrammetry’s textural richness and multispectral agronomic insight—then letting a federated filter arbitrate trust in real time—ASF-M delivers data completeness that formerly required redundant flights and heavy manual QA.

6.8. Forward Path to Product

Turning the prototype into deployable software hinges on abstracting its logic into a modular SDK: sensor drivers → FEKF core → PoC server → adaptive planner → UI hooks. That abstraction is exactly where A-Bots.com excels. Leveraging our experience in edge-AI optimisation and drone-control UX, we can package ASF-M into a bespoke drone mapping app—complete with onboarding wizards for sensor calibration, live heat-map overlays, and one-click cloud hand-off—so enterprises can unlock centimetre-grade, gap-free basemaps without a PhD in geomatics.

With Adaptive Sensor-Fusion Mapping, the basemap finally learns to correct itself while it is being born, compressing weeks of iteration into a single battery cycle. That is the “next frontier” this long read set out to illuminate—and the engineering foundation on which A-Bots.com is ready to build.

7.Field-Tested Tips & Hidden Hacks

Below is a tight, battle-proven collection of five techniques that crews keep exchanging in conference hallways but seldom write down. Pick the ones that match your sensor stack and site—each can shave hours off the job or rescue entire data sets.

- Lock an “NDVI-first” exposure preset before you launch. Agricultural flights live or die on red-edge and NIR signal-to-noise, yet auto-exposure skews toward the brighter RGB bands. Set the multispectral camera to a fixed 1 ⁄ 120 s, ISO ≤ 100, and expose to the right (ETTR) until red-edge histograms graze—but never clip—the right wall; vegetation index variance drops by ≈12 % in side-by-side plots.

- Stagger alternate flight lines by half the footprint. Offsetting every second run (think “brick-lay” instead of “lawn-mower”) breaks up illumination seams that cause diagonal banding in big orthos, while only lengthening missions by ~7 %. The trick comes from crewed-aircraft photogrammetry and works the same at UAS altitudes.

- Drop AprilTag GCP mats as you walk the take-off area. One-metre mesh squares with 16-bit tags get auto-detected in Pix4D, Metashape and ODM, letting you tie the block down without surveying painted plywood. The mats are cheap, glare-resistant, and survive rotor wash; a single pack often replaces half a day of RTK-rover work.

- Clip a circular polariser when mapping water or shiny roofs. A CPL set roughly 90 ° to the sun vector knocks out the specular glare that defeats SIFT matching; it sits on the camera lens, so it does nothing for LiDAR intensity returns. Pilots report up to a 40 % rise in tie-point density over marinas and solar farms, with zero extra processing steps. reddit.com

- Read the hygrometer before arming the motors. High surface humidity slows GNSS signals in the troposphere just enough to nudge RTK float solutions into single-band drifts. Crews who postpone missions until relative humidity falls below 80 % see horizontal RMSE tighten by 1–2 cm without touching post-processing. gpsworld.com
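The "brick-lay" stagger from the second tip can be sketched as a waypoint generator. The version below is one interpretation: it shifts the exposure positions on every second line by a caller-supplied along-track offset and flies alternate lines in reverse, as a serpentine pattern would. The coordinate convention (x across-track, y along-track, in metres) and the function name are assumptions.

```python
def photo_centres(n_lines, line_length_m, line_spacing_m, trigger_m, offset_m=0.0):
    """Exposure centres for a serpentine grid with staggered odd lines.

    Even lines start at y = 0; odd lines start at y = offset_m, which
    produces the brick pattern. Odd lines are flown in reverse.
    """
    if trigger_m <= 0 or line_length_m < offset_m:
        raise ValueError("invalid trigger distance or offset")
    centres = []
    for i in range(n_lines):
        start = offset_m if i % 2 == 1 else 0.0
        count = int((line_length_m - start) // trigger_m) + 1
        ys = [start + k * trigger_m for k in range(count)]
        if i % 2 == 1:
            ys.reverse()                    # fly back toward the start edge
        centres.extend((i * line_spacing_m, y) for y in ys)
    return centres


if __name__ == "__main__":
    for x, y in photo_centres(2, 100.0, 20.0, 25.0, offset_m=12.5):
        print(f"x={x:5.1f} m  y={y:5.1f} m")
```

Setting `offset_m=0.0` reproduces the plain lawn-mower grid, which makes it easy to compare exposure counts between the two patterns.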

These micro-optimisations look minor on paper, yet stacked together they can cut an all-day survey to a single battery round and spare you the embarrassment of a cloudy, banded orthomosaic at the Monday hand-off.

8.From Flight Plan to Living Map

Drone cartography in 2025 is no longer a peripheral service that waits for satellite gaps to appear; it is an always-on sensing layer that enterprises now treat as operational infrastructure. The evolution from single-sensor “fly, download, hope” workflows to Adaptive Sensor-Fusion Mapping means that mapping itself has become adaptive, self-diagnosing and, above all, iterative in real time. Every centimetre-grade pixel, every LiDAR echo and every multispectral band is now fused on the wing, weighted by a federated filter that understands its own uncertainty and corrects course while propellers are still spinning. The result is a living basemap whose fidelity is measured not in static accuracy reports but in its capacity to refresh, reconcile and inform decisions as work unfolds on the ground.

That paradigm shift calls for software far more sophisticated than today’s one-size-fits-all photogrammetry suites. It demands a tightly orchestrated stack—edge AI for confidence heat-mapping, adaptive path planning that can write new waypoints mid-flight, and cloud pipelines that finish a terabyte-scale Gaussian-splat reconstruction before the survey crew leaves the site. Building such an engine is exactly the niche where A-Bots.com excels. Drawing on our deep experience in low-latency drone control, heterogeneous sensor drivers and GPU-accelerated reconstruction, we can deliver a custom drone-mapping app that turns ASF-M’s architecture into a turnkey product: intuitive mission UI on the tablet, live gap analysis on the edge box, and AI-powered dashboards in the cloud. In short, we transform a flight plan into a living map—one that pays for itself with every centimetre it captures.


Copyright © Alpha Systems LTD All rights reserved.
