Building AI Drone Software: Edge Models, UTM, and Remote Ops

1. Full Autonomy & DFR (Drone-as-First-Responder)

2. Drone-in-a-Box, Remote Operations & BVLOS

3. Fleet Management & “Operating Systems” for Drones

4. Open Developer Stack: PX4/ArduPilot + Companion Compute

5. Data Processing & AI Analytics

6. AI-Ready Payloads (Thermal / Dual-Spectrum)

7. C-UAS (AI Counter-Drone)

8. Industries where AI drone software is used

1. Full Autonomy & DFR (Drone-as-First-Responder)

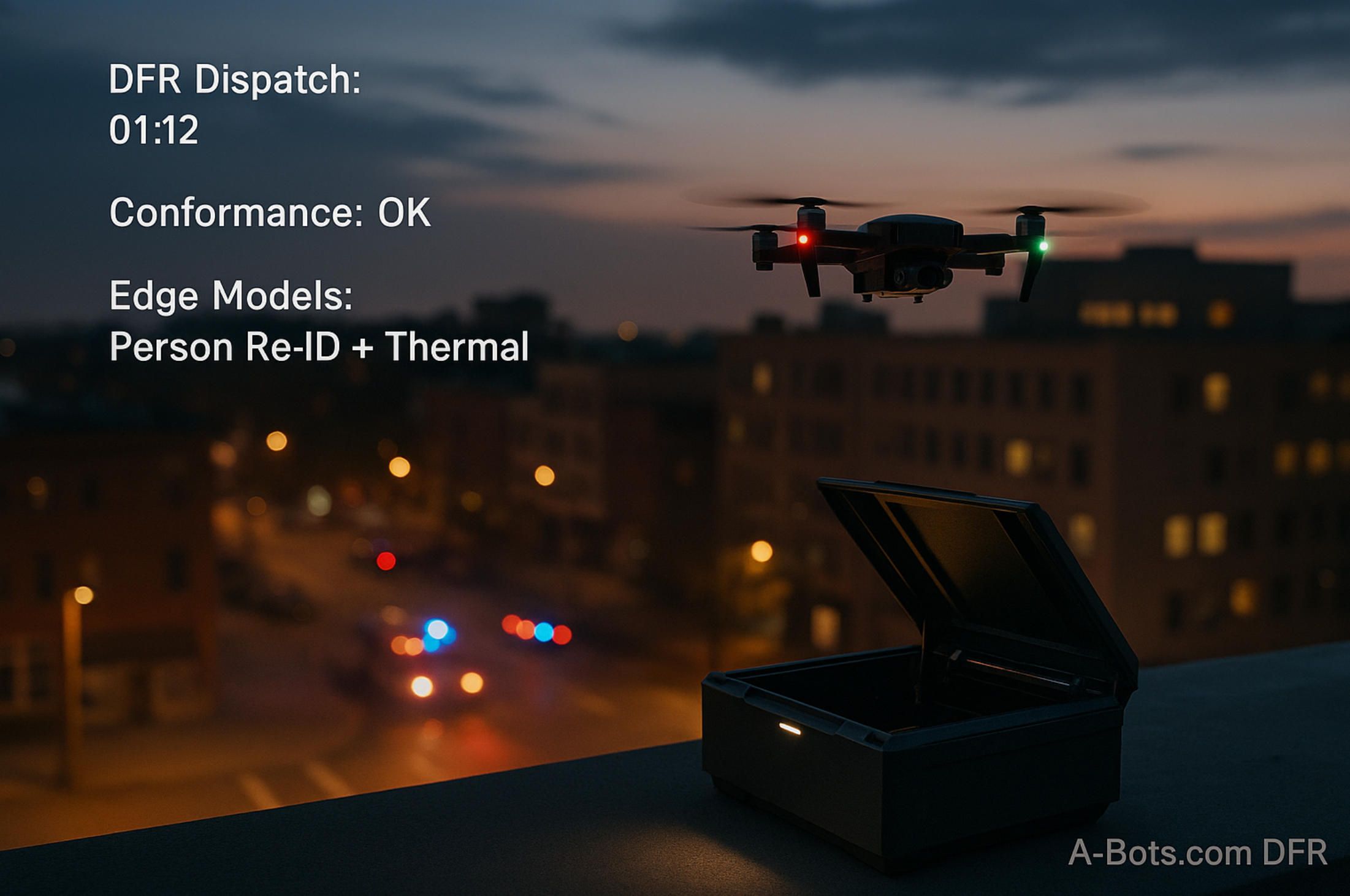

Drone-as-First-Responder (DFR) changes the math of response time: a rooftop box autonomously launches, navigates to the call, streams situational awareness, and returns to recharge—often before a patrol car arrives. What makes this practical is AI drone software that compresses “detect–decide–act” into seconds while respecting airspace and departmental policy.

In a well-run DFR program, the timeline is codified in software. The CAD event, GPS fix, and dispatch notes are ingested; a launch checklist is auto-validated; and the route is generated with live no-fly and weather overlays. AI drone software fuses these signals with historical incident patterns, selecting a path that balances speed and safety, then hands the mission to an autonomous flight executive that knows when to be aggressive and when to yield.

Under the hood, the autonomy stack is layered. Perception models detect wires, poles, façades, and moving actors; mapping builds a continuously updated occupancy grid; and planning negotiates corridors in three dimensions. Here AI drone software doesn’t just classify pixels—it estimates risk, predicts motion, and allocates buffer to keep the safety envelope intact at urban speeds.

Real cities are messy: GPS is multipathed, compass readings drift, and wind funnels between buildings. Robust DFR depends on multi-sensor odometry—visual-inertial SLAM, optical flow at the edge of the frame, and radar or depth when the sun is low. AI drone software must arbitrate these sources, downweighting outliers and raising confidence bars before committing to a maneuver in a GPS-denied alley.
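One way to picture this arbitration is an inverse-variance weighted average with a simple outlier gate. The sketch below is illustrative only: `fuse_estimates`, the median-based gate, and the `gate_m` threshold are assumptions made for this example, not the behavior of any particular autopilot or estimator.

```python
from statistics import median

def fuse_estimates(estimates, gate_m=5.0):
    """Fuse 1-D position estimates given as (value_m, variance_m2) pairs.

    Sources farther than gate_m from the median are dropped as outliers;
    the rest are combined by inverse-variance weighting.
    Returns (fused_value, fused_variance, sources_used).
    """
    if not estimates:
        raise ValueError("no estimates to fuse")
    center = median(value for value, _ in estimates)
    inliers = [(v, var) for v, var in estimates if abs(v - center) <= gate_m]
    if not inliers:
        # Sources disagree too much to commit to a maneuver.
        raise ValueError("sources disagree beyond the gate")
    weights = [1.0 / var for _, var in inliers]
    total = sum(weights)
    fused = sum(w * v for w, (v, _) in zip(weights, inliers)) / total
    return fused, 1.0 / total, len(inliers)

# Example: two odometry sources agree, a multipathed GPS fix does not.
fused, variance, used = fuse_estimates([(10.0, 1.0), (10.4, 1.0), (42.0, 4.0)])
```

Raising an error when no source survives the gate mirrors the idea of refusing to act until confidence recovers; a real system would more likely fall back to a hold behavior.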

Once on scene, the job shifts from navigation to comprehension. The gimbal selects a vantage point; thermal and RGB feeds are stitched; and targets are acquired, tracked, and re-acquired as they move behind cover. AI drone software can re-identify a subject after occlusion, stabilize on a vehicle at night, and auto-compose a broadcast-safe view that a remote pilot can approve with one click.

Autonomy without safety is a dead end. Geo-fences, strategic and tactical deconfliction, and hooks for detect-and-avoid sensors must be native, not bolted on. When the link degrades or the battery sags, the machine needs conservative behaviors: hover-and-query, reroute to a known safe loiter, or execute an instrumented return with continuous logging. AI drone software encodes these safety cases as policies and tests them with simulated edge failures before they ever fly for real.

Communications are engineered, not assumed. A resilient DFR link budget separates command-and-control from high-bitrate video; chooses between public LTE/5G, private 5G, or microwave; and proves failover with measurable packet loss and latency bounds. AI drone software prioritizes telemetry, re-encodes video on the fly, and adapts bitrate to preserve pilot authority even when bandwidth collapses.

DFR also lives or dies by data governance. Evidence-grade recordings require accurate timebases, signed metadata, and retention rules that match the jurisdiction. On-prem or sovereign-cloud deployments keep sensitive video out of third-party analytics backends. AI drone software enforces least-privilege access, redacts faces when policy demands, and maintains an audit trail from launch to archive.

Operational success is measurable. Median “eyes-on” time, time-to-first-decision, percentage of calls resolved without sending ground units, and complaint rate from residents under the flight path—all should trend in the right direction if the autonomy is doing its job. AI drone software can surface these metrics out-of-the-box, correlating them with model versions and weather so chiefs can justify the program with evidence, not anecdotes.

For teams that need to move from pilot projects to dependable service, the build approach matters as much as the algorithms. A-Bots.com focuses on productionizing AI drone software: aligning the autonomy stack with your concept of operations, integrating CAD/RTCC/records systems, and delivering on-prem workflows that your privacy counsel can sign off on. Because every city and utility is different, we tailor AI drone software to the constraints you actually face: airspace, rooftops, backhaul, and the policies that define acceptable risk.

Human factors often decide whether autonomy helps or hinders. Interfaces must show intent, not just telemetry—why the vehicle chose a corridor, how confident it is in a track, what it will do next if the operator stays silent. Good AI drone software keeps a human on the loop with reversible actions, guardrails against mode confusion, and a clear “big red button” that pauses autonomy without losing situational context.

Finally, DFR needs disciplined validation. Synthetic scenes, hardware-in-the-loop, and replay of past incidents make regressions visible and verify that upgrades don’t erode safety margins. With a proper CI/CD pipeline, model changes ride alongside flight-control versions and policy rules, with staged rollouts and rollbacks tied to metrics. When treated as a living system, AI drone software evolves from a cool demo into a 24/7 capability the city can trust.

2. Drone-in-a-Box, Remote Operations & BVLOS

A drone-in-a-box (DIAB) system is more than a weatherproof cabinet with a charger. It is a tightly coupled stack where the dock, aircraft, network, and command center behave like one machine. What unlocks that cohesion is AI drone software that treats the dock as a robotic subsystem—scheduling launches, validating preflight checks, and closing the loop from alert to landing without a person on the roof. In mature deployments, AI drone software compresses dispatch time to seconds and lifts sortie reliability by removing human bottlenecks from routine steps.

The dock must think, not just host. Precision landing uses fiducials, RTK, and visual servoing to pin the final centimeter while crosswinds and vortices from nearby structures try to push the aircraft off pad. Internal cameras verify gear state, door clearance, and foreign-object debris; anemometers and rain sensors feed thresholds; thermal control manages pack temperatures for both fast turnarounds and longevity. AI drone software fuses these signals into a single go/hold decision and records an evidence trail that ties every launch to concrete sensor readings.

Remote operations are the second half of the loop. A Remote Operations Center (ROC) supervises many docks, often across jurisdictions, with a pilot-to-aircraft ratio that increases as autonomy matures. The console is not a pretty video wall; it is an intent interface. AI drone software surfaces recommended actions—launch, hold, re-route, loiter, safe land—and explains the rationale in plain language so the human on the loop can accept or override confidently. For recurring patrols and inspections, AI drone software builds mission templates, schedules by daylight and curfew windows, and triggers ad-hoc sorties from external events.

Airspace is the third rail. Beyond Visual Line of Sight (BVLOS) depends on strategic and tactical deconfliction plus conformance monitoring during the flight. AI drone software integrates with UTM services for digital authorization, ingests NOTAMs and dynamic geofences, and broadcasts Remote ID while verifying that telemetry remains inside the declared envelope. Compliance scaffolding—ASTM F3411 Remote ID, ASTM F3548 UTM interfaces, EASA U-space constructs, and JARUS SORA safety objectives—shapes how plans are authored, monitored, and audited. When a plan deviates, AI drone software raises a conformance alert and evaluates safe responses in real time.

Links must be engineered for failure. Command-and-control is separated from video; each path has measurable service levels for latency, jitter, and packet loss. AI drone software implements QoS rules, adaptive bitrate, and preemptive keyframe insertion to preserve pilot authority during fades. Where reception is uneven, the system blends public 5G with private LTE or microwave, falling back to store-and-forward for non-critical payload data. The result is continuity of control even when the picture degrades.

Health is a first-class citizen. Battery gauges mislead when packs are cold and read optimistically when packs are new. Motors accumulate dust, bearings warm up, and props go slightly out of balance. AI drone software learns the “signature” of a healthy airframe (current draw at constant rpm, ESC temperatures versus ambient, vibration spectra) and predicts time-to-maintenance instead of waiting for a hard fault. Preflight, it runs built-in tests, validates GPS/IMU sanity, and aborts a launch if subcomponents disagree beyond policy tolerances.
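As a rough illustration of predicting time-to-maintenance, the sketch below fits a least-squares line to a health indicator (say, vibration RMS per flight hour) and extrapolates to a service limit. The function name, the linear-trend assumption, and the example numbers are all hypothetical; real degradation is rarely this tidy.

```python
def hours_until_limit(hours, readings, limit):
    """Extrapolate a linear trend in `readings` to the service `limit`.

    Returns the flight hours remaining after the last sample, 0.0 if the
    last reading is already at or over the limit, or None if the trend is
    flat or improving.
    """
    n = len(hours)
    if n < 2 or n != len(readings):
        raise ValueError("need at least two paired samples")
    mean_h = sum(hours) / n
    mean_r = sum(readings) / n
    sxx = sum((h - mean_h) ** 2 for h in hours)
    if sxx == 0:
        raise ValueError("samples must span more than one point in time")
    slope = sum((h - mean_h) * (r - mean_r) for h, r in zip(hours, readings)) / sxx
    intercept = mean_r - slope * mean_h
    if readings[-1] >= limit:
        return 0.0
    if slope <= 0:
        return None
    crossing = (limit - intercept) / slope
    return max(0.0, crossing - hours[-1])

# Vibration RMS creeping up by 0.02 per flight hour, limit at 2.0.
remaining = hours_until_limit([0, 10, 20, 30], [1.0, 1.2, 1.4, 1.6], limit=2.0)
```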

None of this matters if the risk model is shallow. BVLOS is a permission to manage risk, not to ignore it. Ground-risk buffers for people and roads, air-risk for low-flying helicopters or crop dusters, and geo-specific hazards like powerline corridors need explicit representation. AI drone software encodes these into fly/no-fly heatmaps and real-time keep-out volumes that shrink or expand with context. For detect-and-avoid, it fuses ADS-B In, networked observers, and vision or radar cues, choosing conservative maneuvers by default.

Weather deserves its own brain. A rooftop anemometer is not a microclimate model. Gusts shear around buildings; sea breezes and canyon winds create traps; convective pop-ups can exceed forecast norms. AI drone software cross-checks on-site sensors with mesoscale feeds, learns local patterns over months, and adapts go/no-go thresholds by route segment. When a squall line approaches, it compresses missions, shortens loiter, and plans a high-margin return before the wall of rain erases visual cues.

Security and compliance are not bolt-ons. Docks sit in parking lots, on roofs, at substations; they are tempting physical and network targets. AI drone software enforces secure boot on the aircraft and the dock controller, rotates credentials, signs logs, and ships tamper events to a SIEM. Video diffs and evidence clips are hashed and time-synchronized; retention and access rules are policy-driven and traceable. For jurisdictions with data-sovereignty constraints, on-prem runtimes and VPC peering keep footage and telemetry inside a defined boundary.

Operations scale when repeatability is visible. The system should answer: how many missions launched on schedule; how many were aborted by weather; how many were saved by crosswind-aware landing; how often crews intervened, and why. AI drone software turns these into runbooks: if headwind exceeds N at waypoint K, climb to M and add reserve R; if link margin dips below X for Y seconds, switch carrier and drop payload bitrate to Z. Over time, the fleet converges toward more uniform, predictable behavior and safer edges.
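A runbook rule such as "if link margin dips below X for Y seconds, switch carrier and drop payload bitrate to Z" can be written as a small debounced state machine. This is a minimal sketch: the `LinkRule` class, its field names, and the action dictionary are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class LinkRule:
    min_margin_db: float      # X: margin threshold
    hold_s: float             # Y: how long the dip must persist
    fallback_kbps: int        # Z: payload bitrate after the switch
    _below_since: Optional[float] = None

    def update(self, t_s, margin_db):
        """Feed one (time, margin) sample; return an action dict or None."""
        if margin_db >= self.min_margin_db:
            self._below_since = None          # dip ended, reset the timer
            return None
        if self._below_since is None:
            self._below_since = t_s           # dip just started
        if t_s - self._below_since >= self.hold_s:
            return {"action": "switch_carrier", "video_kbps": self.fallback_kbps}
        return None

rule = LinkRule(min_margin_db=6.0, hold_s=2.0, fallback_kbps=800)
```

The debounce matters: a single bad sample should not flip carriers, but a sustained dip should.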

Two patterns dominate deployments. The first is “campus mesh”: dozens of small docks across large facilities—ports, refineries, logistics yards—where short-range flights create a fabric of awareness. The second is “regional spokes”: larger docks with longer-range aircraft covering rural utilities or pipelines. AI drone software copes with both by abstracting docks and aircraft into interchangeable resources, scheduling by capability (thermal/RGB, wind limits, battery health) rather than by tail number, and by keeping spare capacity for surge events.

BVLOS unlocks the real economics. When one ROC can supervise tens or hundreds of autonomous sorties per shift, the cost per kilometer of surveillance falls below manned alternatives. But it only works when the stack is honest about uncertainty and instrumented for proof. AI drone software that explains its choices, logs its evidence, and learns from misses is the difference between a pilot project and critical infrastructure. In short, DIAB is an operations discipline expressed in AI drone software, and BVLOS is the regulatory permission that such AI drone software earns with evidence.

3. Fleet Management & “Operating Systems” for Drones

At scale, a fleet is not just many aircraft—it is a living distributed system. The “operating system” for that system is AI drone software that abstracts hardware diversity, enforces policy, schedules missions, curates data, and proves compliance. In practice, an effective platform behaves like Kubernetes for flying robots: it reconciles desired state (patrols, inspections, DFR readiness) with observed state (weather, link margin, battery health) and keeps the fleet converging toward safe, repeatable outcomes. Without this layer, autonomy remains a collection of demos; with it, AI drone software becomes the backbone of operations.

A true control plane starts by modeling resources. Aircraft, docks, payloads, and networks are exposed as capabilities—wind tolerance, thermal resolution, endurance, uplink bandwidth—not as serial numbers. AI drone software then solves assignment and routing as an optimization problem subject to those capabilities and to policy constraints (curfews, privacy zones, crew duty limits). When a dock closes for maintenance or a cell sector degrades, the planner rebalances the schedule without human triage.
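To make "capabilities, not serial numbers" concrete, here is a small greedy matcher. It is a stand-in for the optimization described above, not an optimal solver, and the `Aircraft` and `Mission` fields and the 20% endurance reserve are assumptions chosen for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Aircraft:
    name: str
    wind_limit_ms: float
    endurance_min: float
    payloads: frozenset

@dataclass(frozen=True)
class Mission:
    name: str
    wind_ms: float
    duration_min: float
    payload: str

def assign(missions, fleet, reserve=1.2):
    """Longest mission first; each takes the least-capable aircraft that still fits."""
    free = list(fleet)
    plan = {}
    for m in sorted(missions, key=lambda m: m.duration_min, reverse=True):
        capable = [
            a for a in free
            if a.wind_limit_ms >= m.wind_ms
            and a.endurance_min >= m.duration_min * reserve
            and m.payload in a.payloads
        ]
        if not capable:
            plan[m.name] = None               # no resource fits; escalate
            continue
        best = min(capable, key=lambda a: a.endurance_min)
        plan[m.name] = best.name
        free.remove(best)
    return plan

fleet = [
    Aircraft("A", 12.0, 30.0, frozenset({"rgb"})),
    Aircraft("B", 15.0, 45.0, frozenset({"rgb", "thermal"})),
]
missions = [Mission("patrol", 8.0, 20.0, "rgb"), Mission("inspect", 10.0, 30.0, "thermal")]
plan = assign(missions, fleet)
```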

Identity is foundational. Each airframe, dock, and companion computer joins the fleet through attestations, certificates, and tamper-evident boot sequences. AI drone software treats identity like cryptographic plumbing: per-mission keys, mutual TLS, and short-lived tokens to minimize blast radius. For regulators who require traceability, identities bind to hull numbers and maintenance logs so that every second of telemetry and video is provably from the device it claims to be.

Change management is the next pillar. Airworthiness and autonomy both drift if updates are sloppy. Versioned artifacts—flight-control firmware, perception models, payload drivers—are promoted through stages with feature flags, canary rollouts, and automatic rollback on health regressions. AI drone software ties rollouts to objective gates: crash-free rate, link resilience, precision-landing variance, and battery thermal margins. A stalled metric halts the train and opens a diff rather than pushing risk into the sky.

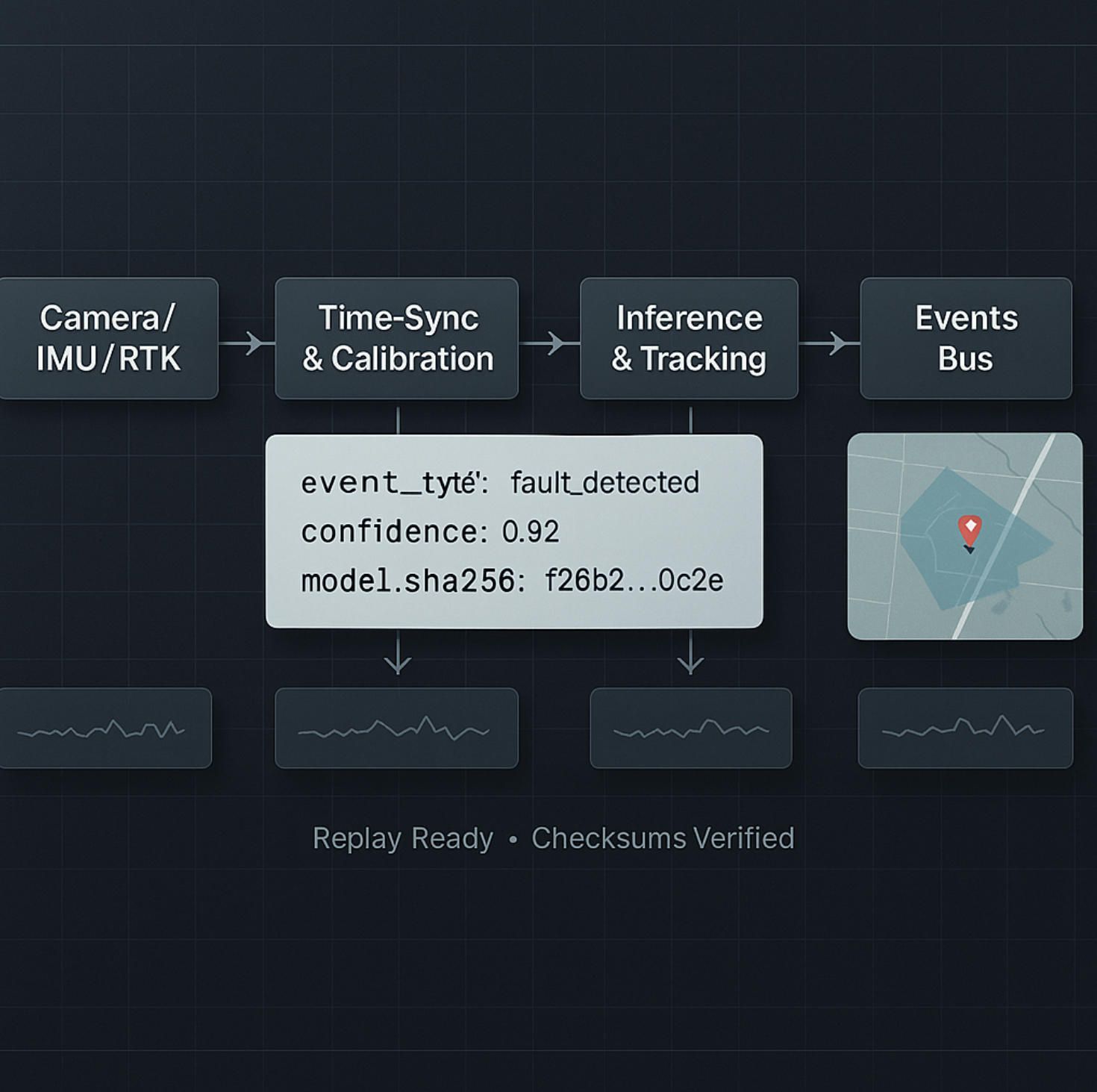

Observability must be first-class. Time-synchronized logs, metrics, and traces from aircraft, dock, and ground systems allow operators to see what the machine believed, not only what it did. AI drone software correlates model inferences with flight events—why the planner chose a corridor, when the tracker lost a subject, how the landing controller corrected for gusts—so engineers can reproduce incidents and auditors can trust reports. In a mature setup, anomalies generate tickets with enough context for root cause, not just alarms to be acknowledged.

Health management is where fleets earn longevity. Batteries lie and motors age. By learning the “healthy signature” of each platform—current draw at setpoints, ESC temperatures vs. ambient, vibration spectra—AI drone software predicts time-to-maintenance and schedules interventions before faults cascade. The same telemetry feeds policy: a pack beyond its cycle budget no longer gets long-range tasks; props with rising imbalance are barred from night missions above pedestrians.

Mission orchestration turns plans into reality. Recurring patrols, ad-hoc inspections, and DFR scrambles compete for finite assets. AI drone software understands priorities and preemption, reserving buffers for emergencies and shifting low-priority sorties to quieter windows. It also reasons about turnarounds: battery warm-up, RTK lock, payload calibration, and door cycles become variables in a schedule that aims for “eyes-on” within service-level agreements rather than best-effort estimates.

The data plane is a product of its own. Raw frames, thermal tiles, and LiDAR or radar bursts are useless if they are not indexed, labeled, and routed. AI drone software generates evidence-grade artifacts automatically—signed video, synchronized sensor packets, and event summaries—then pushes them to on-prem stores or sovereign clouds with lifecycle policies. Near-real-time clips feed operations, while full-resolution captures power training loops for perception models without leaking sensitive footage to third parties.

Edge AI adds another axis: models are now deployed, versioned, and governed like code. A model registry tracks provenance, datasets, and evaluation metrics. AI drone software performs shadow inference during flights to compare a candidate model against the currently active one, enabling A/B decisions grounded in precision/recall instead of gut feel. When a new model wins in the field, the platform promotes it gradually, with automatic rollback if false positives spike or inference latency threatens flight safety.
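A shadow-inference comparison can be reduced to precision and recall over the same labeled flights. The sketch below assumes detections are summarized as sets of item ids; `should_promote` and its `min_gain` threshold are hypothetical policy choices, not a prescribed gate.

```python
def precision_recall(predicted, truth):
    """Precision and recall for sets of item ids flagged as positive."""
    true_positives = len(predicted & truth)
    precision = true_positives / len(predicted) if predicted else 1.0
    recall = true_positives / len(truth) if truth else 1.0
    return precision, recall

def should_promote(active, candidate, truth, min_gain=0.02):
    """Promote only if recall improves by min_gain without losing precision."""
    p_active, r_active = precision_recall(active, truth)
    p_cand, r_cand = precision_recall(candidate, truth)
    return p_cand >= p_active and r_cand >= r_active + min_gain

truth = {1, 2, 3, 4}
active = {1, 2, 9}        # one false positive, two misses
candidate = {1, 2, 3}     # no false positives, one miss
decision = should_promote(active, candidate, truth)
```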

People and permissions decide whether scale is safe. Ground teams, ROC supervisors, analysts, and external investigators need different views with different powers. AI drone software encodes least privilege in role- and attribute-based access down to “who can scrub faces” and “who can export originals.” Every action—override a loiter, unlock a restricted geofence, shorten retention for a case—is logged with time, reason, and approver so the system remains defensible long after the mission.

Multi-tenancy and data sovereignty shape architecture choices. Municipal coalitions, utility subsidiaries, or global facility networks may share infrastructure but must not share data. AI drone software supports strict tenancy boundaries, customer-managed keys, and regional data residency so legal teams can greenlight deployments without exception clauses. When policy demands air-gapped sites, the same platform runs on-prem, syncing only metadata or not syncing at all.

Interoperability prevents lock-in. Fleets are rarely homogeneous; some aircraft speak MAVLink, others come with proprietary SDKs, and payloads evolve faster than airframes. AI drone software normalizes control and telemetry, encapsulating vendor specifics behind stable interfaces. That way, planners, safety policies, model orchestration, and evidence handling remain constant even as hardware is refreshed—an essential property for five- or ten-year programs.

Safety is a discipline, not a checkbox. Digital flight rules—lost-link behaviors, geofence hierarchies, DAA thresholds—are codified as policies and tested in simulation and hardware-in-the-loop before any field rollouts. AI drone software continuously checks conformance during missions and opens post-flight reports that align with regulatory frameworks. When incidents occur, the platform can replay every decision with the exact models and parameters that produced it, closing the loop between engineering, operations, and oversight.

Finally, the “operating system” earns its name when it fades into the background—reliable enough that crews forget it, transparent enough that auditors trust it, and flexible enough that programs can grow from a few docks to hundreds without a rewrite. This is where a partner matters. A-Bots.com helps organizations stand up this layer—reference architectures for heterogeneous fleets, model governance tied to mission risk, ROC consoles that explain intent—not by inventing yet another dashboard but by productionizing AI drone software into a resilient control plane your operations can grow into.

When fleets cross from dozens to hundreds of aircraft, incremental process fixes stop working. Only a cohesive AI drone software platform—part control plane, part data plane, part safety engine—keeps autonomy aligned with policy, economics, and human judgment. In that sense, “operating system” is not a metaphor; it is the quiet mechanism that turns many drones into one dependable service.

4. Open Developer Stack: PX4/ArduPilot + Companion Compute

An open stack is where AI drone software stops being a black box and becomes an engineering medium. With PX4 or ArduPilot on the flight controller and a companion computer on-board, you can shape perception, planning, and policy to your mission instead of reshaping your mission to a vendor’s roadmap. The result is not just lower cost; it’s architectural leverage: you own the loop from sensor to decision to actuation.

PX4 as a publish/subscribe kernel. PX4’s uORB message bus turns the autopilot into a real-time micro-kernel: estimators, controllers, and drivers exchange state as typed topics with deterministic timing. That separation matters when AI drone software needs to steer the vehicle without violating safety. You inject intent (velocity/pose setpoints, region-of-interest, gimbal targets) through Offboard control or mission items, while the inner loops (rate, attitude, position) stay hard-real-time on the flight controller. The bridge to the companion runs via MAVLink and/or the ROS 2 bridge (microRTPS in older releases, uXRCE-DDS from PX4 v1.14 onward), so your perception nodes can subscribe to estimator outputs (pose, wind, vibration) and publish guidance without fighting thread priorities.

ArduPilot as a mature “systems kit.” ArduPilot exposes a deep set of flight modes (GUIDED, LOITER, AUTO), rich mission scripting (Lua), and a conservative, field-proven EKF. For many industrial programs, that maturity is the feature: you can start with mission-planner workflows, layer AI drone software for detection/decision on the companion, and only then graduate to direct setpoints when you’re ready. The HAL abstraction across boards gives you freedom to pick a controller that matches your I/O, EMI, and form-factor constraints.

Companion compute is the brain you bring. Whether you choose Jetson Orin/AGX, Qualcomm QRB-class, or another edge SOC, the companion owns three responsibilities: (1) perception (RGB/thermal fusion, detection, tracking, segmentation), (2) decision (target selection, corridor choice, gimbal framing), and (3) integration (UTM/Remote ID, evidence logging, enterprise backends). Good AI drone software on the companion respects the safety boundary: it proposes actions in terms of setpoints and constraints, it never reimplements inner flight loops, and it degrades gracefully when sensors or models get uncertain.

Perception-to-action pattern. In an open stack, the critical pattern is deterministic plumbing from pixels to setpoints. A practical chain looks like this: camera → zero-copy capture → time-sync → inference → tracker → intent → MAVLink/microRTPS. Latency budgets are explicit (e.g., ≤ 80 ms camera-to-setpoint for obstacle slowdowns; ≤ 150 ms for gimbal ROI framing). This is where production AI drone software earns its keep: it pins each stage to measurable timing and provides backpressure and drop policies so a hot GPU doesn’t silently starve the control link.
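The backpressure and drop policy can be sketched with a bounded queue that evicts the oldest frame when full and refuses to hand out frames older than the latency budget. `FrameQueue` and its parameters are illustrative names; the 80 ms default simply echoes the example budget above.

```python
from collections import deque

class FrameQueue:
    """Bounded queue: drop-oldest on overflow, skip stale frames on read."""

    def __init__(self, maxlen=2, budget_ms=80.0):
        self._frames = deque(maxlen=maxlen)
        self.budget_ms = budget_ms
        self.dropped = 0

    def push(self, capture_ms, frame):
        if len(self._frames) == self._frames.maxlen:
            self.dropped += 1                 # deque evicts the oldest entry
        self._frames.append((capture_ms, frame))

    def pop_fresh(self, now_ms):
        """Return the newest frame within budget, or None if all are stale."""
        while self._frames:
            capture_ms, frame = self._frames.pop()    # newest first
            if now_ms - capture_ms <= self.budget_ms:
                self.dropped += len(self._frames)     # older frames are obsolete
                self._frames.clear()
                return frame
            self.dropped += 1
        return None

queue = FrameQueue(maxlen=2, budget_ms=80.0)
for stamp, name in [(0, "a"), (30, "b"), (60, "c")]:
    queue.push(stamp, name)
latest = queue.pop_fresh(now_ms=100)
```

Counting drops explicitly is the point: a pipeline that sheds load should say so in its metrics rather than hide it.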

Time is truth. If clocks drift, your “AI” is guessing. Use hardware timestamps on camera frames, compensate for rolling shutter, and discipline all clocks via PPS/PTP or flight-controller time sync. Then prove it: record synchronized logs (vision, IMU, estimator, setpoints) and replay them headless. Open stacks shine here—PX4/ArduPilot logs and ROS 2 bagfiles let you bisect bugs by line and time instead of by hunch.

Safety by contract, not by hope. A companion process should live behind a narrow contract: allowed message types, rate limits, and guardrails enforced on the autopilot side. If Offboard setpoints stall, the flight controller must revert to a safe mode (hold/RTL) without ambiguity. Codify geofence hierarchies and DAA hooks on the flight controller; let AI drone software supply constraints (keep-out volumes, target corridors) but never own the last word on safety-critical states.
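The contract can be modeled as a toy watchdog: setpoints that arrive too fast are rejected, and if the stream stalls the mode falls back to HOLD. This simulates the idea in plain Python and is not autopilot code; the class name, the timeout, and the rate limit are assumptions for illustration.

```python
class SetpointWatchdog:
    """Toy model of an autopilot-side contract for companion setpoints."""

    def __init__(self, timeout_s=0.5, min_interval_s=0.02):
        self.timeout_s = timeout_s
        self.min_interval_s = min_interval_s
        self._last_accepted = None

    def on_setpoint(self, t_s):
        """Accept a setpoint unless it violates the rate limit."""
        if (self._last_accepted is not None
                and t_s - self._last_accepted < self.min_interval_s):
            return False
        self._last_accepted = t_s
        return True

    def mode(self, t_s):
        """OFFBOARD while the stream is fresh, HOLD once it stalls."""
        if self._last_accepted is None or t_s - self._last_accepted > self.timeout_s:
            return "HOLD"
        return "OFFBOARD"

watchdog = SetpointWatchdog()
watchdog.on_setpoint(1.0)
state_fresh = watchdog.mode(1.3)
state_stalled = watchdog.mode(1.6)
```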

Offboard control that survives the real world. Minimalistic code can be robust if the contracts are explicit. For example, a velocity-setpoint loop that carries intent and safety:

    # Pseudocode: offboard velocity with safety heartbeats (MAVSDK-like).
    # All helpers (planner, health, policy, send_setpoint, ...) are placeholders.
    rate = 30  # Hz

    arm()
    send_setpoint(0)               # PX4 needs a setpoint stream before OFFBOARD
    set_mode("OFFBOARD")

    while mission_active:
        send_heartbeat()           # proves liveness to FCU
        if health.link_margin < L or health.ekf_conf < C:
            send_setpoint(0)       # graceful degrade: stop, then hold
            request_hover()
        else:
            v = planner.velocity()         # from perception/route
            v = limit(v, vmax_xy, vmax_z)  # policy caps
            send_setpoint(v)       # NED velocity + yaw rate
        sleep(1 / rate)

    if policy.allows:
        disarm()

This isn’t the place for Python in production hot loops; in real deployments we write the same logic in C++ with lock-free queues, but the pattern stands: explicit limits, continuous heartbeats, immediate degrade.

Model governance like code governance. Open stacks are where edge models can be upgraded without aircraft requalification—if you treat them like first-class artifacts. A model registry with versioned datasets, validation metrics, and hardware-in-the-loop gates lets AI drone software ship improvements safely. Shadow inference (run a new model beside the current one) during real missions yields truth data: precision/recall under your weather, background, and motion blur, not a lab’s.

Zero-copy video and deterministic pipelines. On Jetson-class hardware, use hardware capture → NVMM buffers → TensorRT/DeepStream to avoid wasting cycles on memory copies. If you must use GStreamer, cap negotiated formats to prevent hidden color-space conversions. Deterministic AI drone software prefers fixed-size ring buffers and bounded queues over “auto” anything—latency spikes are bugs, not accidents.

Simulation that actually predicts reality. SITL/HITL with Gazebo/Ignition is only useful if sensors are credible. Invest in camera plugins that reproduce your optics (FOV, distortion, exposure), rolling shutter, and motion blur. Feed the estimator with IMU noise that matches your board. Then replay field logs into the same pipeline. Open stacks make this cheap: you can run PX4/ArduPilot SITL, your ROS 2 graph, and your planner in CI and fail a build if a regression widens landing variance or raises CPU to a thermal throttle point.

UTM/Remote ID as software plumbing. Don’t bolt UTM on later. In an open stack, the companion advertises Remote ID, requests strategic authorization, and pushes live conformance checks to your ROC. AI drone software should shape flight plans to standards (ASTM/EASA constructs) and stream signed evidence so BVLOS reviews consume facts, not screenshots.

Known traps and how we avoid them.

- EKF resets + Offboard: if the estimator reinitializes, stale setpoints can be dangerous. We gate Offboard commands on estimator confidence and immediately request HOLD on drops.

- Thermal throttling at noon: we treat inference FPS as a resource to be scheduled; when SOC temperature rises, we degrade models (smaller input, INT8 path) before clocks throttle.

- Micro-outages on 5G: command/control and video travel on different QoS; AI drone software preserves setpoint cadence at the expense of bitrate and silently re-keys on brief carrier flips.

- MAVLink floods: rate-limit the companion; prioritize setpoint messages (e.g., SET_POSITION_TARGET_LOCAL_NED, SET_ATTITUDE_TARGET) over telemetry chatter; validate every external MAVLink source on the network before trusting it.
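The thermal-throttling item in the list above amounts to a degrade ladder: step down to cheaper inference profiles as the SoC warms up, before the clocks throttle on their own. The temperature bounds and profile fields below are made-up values for illustration; real limits depend on the module and its cooling.

```python
# Hypothetical ladder: (upper temperature bound in deg C, inference profile).
LADDER = [
    (70.0, {"input_px": 1280, "precision": "fp16"}),
    (80.0, {"input_px": 960, "precision": "fp16"}),
    (90.0, {"input_px": 640, "precision": "int8"}),
]

def pick_profile(soc_temp_c):
    """Return the first profile whose bound exceeds the temperature.

    Returns None above the last bound, meaning inference should pause.
    """
    for bound_c, profile in LADDER:
        if soc_temp_c < bound_c:
            return profile
    return None

cool = pick_profile(55.0)
hot = pick_profile(85.0)
```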

Why open beats closed for maintainability. Over a five-year program, you will change airframes, payloads, and carriers. An open PX4/ArduPilot + companion pattern quarantines that churn: drivers and estimators live on the FCU; perception lives on the companion; AI drone software glues them with stable interfaces. Replace a camera and you touch a driver and a node, not your safety case. Swap an airframe and you re-tune controllers, not your entire mission logic.

Where A-Bots.com helps without locking you in. Our role is to harden this pattern: ROS 2 graphs that won’t deadlock, Offboard loops that meet timing, model registries tied to mission risk, SITL/HITL benches wired into CI, and UTM/Remote ID plumbing that satisfies auditors. We ship source, docs, and repeatable builds (containerized toolchains, reproducible images) so your team can take ownership. In other words, we productionize open-stack AI drone software to the point where “custom” feels as dependable as off-the-shelf—only it actually fits your mission.

Open stacks don’t just democratize flight control; they discipline the entire autonomy loop. With PX4/ArduPilot guarding the safety envelope and a companion running AI drone software that is observable, governable, and time-honest, your system earns trust sortie by sortie—and scales without swapping foundations every time your mission evolves.

5. Data Processing & AI Analytics

If flight control keeps a drone safe, AI drone software makes its data useful. The difference between a “nice video” and a decision is a disciplined pipeline: deterministic ingest, calibrated transforms, reproducible analytics, and evidence you can defend. Treat the stack like a production data system that just happens to fly.

Deterministic ingest, or it didn’t happen. Every frame, IMU tick, GNSS/RTK fix, and payload sample enters the pipeline with hardware timestamps and a shared clock. AI drone software aligns these streams at millisecond granularity, compensates rolling shutter, and rejects late/out-of-order messages instead of silently reordering them. The result is analysis you can replay: when a crack was segmented, what pose the estimator believed, which gust hit the airframe. Without time discipline, “AI” becomes guesswork.
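Rejecting late and out-of-order messages, rather than reordering them, can be as small as a watermark check. This sketch assumes each message carries a hardware timestamp and an arrival time on the same clock; `IngestGate` and the 50 ms lateness bound are illustrative.

```python
class IngestGate:
    """Accept messages only if they are on time and strictly in order."""

    def __init__(self, max_lateness_ms=50):
        self.max_lateness_ms = max_lateness_ms
        self.watermark_ms = None    # timestamp of the last accepted message
        self.rejected = 0

    def accept(self, stamp_ms, arrival_ms):
        late = arrival_ms - stamp_ms > self.max_lateness_ms
        out_of_order = self.watermark_ms is not None and stamp_ms <= self.watermark_ms
        if late or out_of_order:
            self.rejected += 1      # count it; never silently reorder
            return False
        self.watermark_ms = stamp_ms
        return True

gate = IngestGate(max_lateness_ms=50)
results = [gate.accept(100, 110), gate.accept(90, 115), gate.accept(120, 200), gate.accept(130, 140)]
```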

Calibration before cleverness. Raw pixels lie. Lenses vignette, sensors drift, thermal cameras need non-uniformity correction (NUC), and emissivity assumptions can be wrong. AI drone software applies camera models (intrinsics/extrinsics), radiometric correction for thermal (emissivity, reflected apparent temperature, atmospheric transmittance), and color/response normalization so training and inference see stable inputs. On mixed RGB+LWIR payloads, it builds a fused rectified pair so detections in either spectrum land in the same world coordinates.
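For the thermal terms named above, a commonly used simplified measurement model treats the radiance reaching the camera as the sum of object emission, reflected ambient radiation, and atmospheric emission. The sketch below inverts that model for the object term. It is a textbook-style simplification with hypothetical names, not a vendor's calibration routine, and it leaves out the conversion between radiance and temperature.

```python
def object_radiance(w_total, emissivity, tau, w_reflected, w_atmosphere):
    """Solve the simplified measurement model for the object's own radiance.

    Model: w_total = e*tau*w_obj + (1 - e)*tau*w_reflected + (1 - tau)*w_atmosphere
    where e is emissivity and tau is atmospheric transmittance.
    """
    if not (0.0 < emissivity <= 1.0 and 0.0 < tau <= 1.0):
        raise ValueError("emissivity and tau must be in (0, 1]")
    reflected = (1.0 - emissivity) * tau * w_reflected
    atmosphere = (1.0 - tau) * w_atmosphere
    return (w_total - reflected - atmosphere) / (emissivity * tau)

# Round trip with arbitrary radiance units.
e, tau = 0.9, 0.95
measured = e * tau * 100.0 + (1 - e) * tau * 40.0 + (1 - tau) * 35.0
recovered = object_radiance(measured, e, tau, 40.0, 35.0)
```

The round trip shows why a wrong emissivity matters: the correction divides by it, so small errors in the assumption scale the whole result.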

Edge vs. cloud is an engineering choice, not a matter of faith. If a patrol needs a two-minute “time-to-first-decision,” the companion computer runs lightweight detectors, trackers, and change-detection at the edge; heavier map products (dense point clouds, meshes, orthos) move to the ROC or cluster after landing. AI drone software budgets latency explicitly: e.g., ≤ 120 ms for on-scene target re-acquire, ≤ 10 s for near-real-time heat-map overlays, hours for full photogrammetry. The pipeline degrades gracefully (smaller input sizes, INT8 paths, sparse sampling) rather than timing out.

From pixels to products: photogrammetry that survives reality. For mapping, the steps are standard but the guardrails aren’t: feature detection/matching, bundle adjustment, dense MVS, meshing, and orthomosaics. AI drone software rejects bad tie points near textureless water, enforces baseline-to-height ratios to keep parallax informative, and monitors reprojection error to fail fast on blurry sorties. RTK/PPK metadata ties everything to ground truth; when RTK drops, the system tags tiles with confidence so downstream apps don’t over-trust pretty pictures.
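The baseline-to-height guardrail follows from simple geometry for a nadir-pointing camera: the along-track footprint is height times sensor size over focal length, and the baseline between exposures is the non-overlapping share of that footprint. The function names and the 0.15 minimum ratio below are illustrative assumptions, not a standard threshold.

```python
def baseline_to_height(forward_overlap, sensor_along_mm, focal_mm):
    """B/H for a nadir camera; flight height cancels out of the ratio."""
    if not 0.0 <= forward_overlap < 1.0:
        raise ValueError("forward_overlap must be in [0, 1)")
    return (1.0 - forward_overlap) * sensor_along_mm / focal_mm

def parallax_ok(forward_overlap, sensor_along_mm, focal_mm, min_ratio=0.15):
    """Flag plans whose overlap is so high that parallax becomes uninformative."""
    return baseline_to_height(forward_overlap, sensor_along_mm, focal_mm) >= min_ratio

ratio = baseline_to_height(forward_overlap=0.8, sensor_along_mm=8.8, focal_mm=8.8)
```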

Thermal isn’t just “hot or not.” Temperature is a model with assumptions. Wind, sun angle, and surface finish change apparent readings. AI drone software captures on-scene references, applies window-by-window corrections, and outputs both radiometric maps and semantic layers (e.g., “insulator likely overheating,” “panel string under-performing”), with per-pixel confidence. For regulated workflows, it logs the correction chain so an investigator can recompute temperatures from raw RJPG/TIFF if challenged.

The analytics core: detectors, segmenters, change-detectors. In inspection and security, three primitives dominate. Detectors localize objects; segmenters isolate defects; change-detectors compare scenes across time. AI drone software runs ensembles tuned to environment: one model for corrosion on steel, another for spalling on concrete, a third for vegetation encroachment near lines. Trackers stitch detections into trajectories. Post-processors raise or lower thresholds based on context (rain on lens? low sun? lens flare?), and outputs are scored against mission-specific cost functions instead of generic mAP.

Uncertainty is a first-class signal. A confident wrong answer is more dangerous than “I don’t know.” AI drone software propagates predictive uncertainty (e.g., Monte Carlo dropout, temperature scaling) and observational uncertainty (blur, glare, occlusion) into downstream rules. A low-confidence crack near a safety threshold schedules a re-flight; a high-confidence person near a fence routes to a human now. This is operational AI, not leaderboard AI.
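
The two routing examples above can be written as a small rule. This is a minimal sketch under stated assumptions: the 0.9 cut-off, the `near_critical` flag, and the three outcome names are hypothetical, not values from a real deployment.

```python
def route_finding(confidence: float, near_critical: bool,
                  high_conf: float = 0.9) -> str:
    """Map a finding to an operational outcome using its confidence.

    near_critical: True when the finding sits close to a safety threshold
    or a protected boundary (how that is computed is out of scope here).
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if near_critical and confidence >= high_conf:
        return "escalate_to_human"   # confident and important: act now
    if near_critical:
        return "schedule_reflight"   # important but unsure: gather evidence
    return "log_only"                # not near a threshold: keep for trends
```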

Events, not files. Files are for archives; operations run on events. Every analytic produces typed events—{what, where, when, how_sure, evidence}—that land on a bus with schemas and retention. AI drone software routes them to live consoles (for action), case systems (for investigation), and data lakes (for training). A single detection becomes a work order only if policy agrees; otherwise it becomes another labeled example to improve the model.

    {
      "event_type": "defect.segmented",
      "asset_id": "pole_17N-482",
      "position_wgs84": [35.0849, -106.6504, 1720.3],
      "footprint": "polygon-geojson",
      "confidence": 0.87,
      "model": { "name": "seg_crack_v23", "sha256": "…" },
      "evidence": { "video": "s3://…/clip.mp4#t=101.2-104.5", "frame_ts": "2025-08-12T12:31:07.412Z" },
      "conformance": { "rid": "ok", "utm_plan": "ok" }
    }

A schema like this lets AI drone software power dashboards, trigger notifications, and—critically—rebuild the same decision later for audit.
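
As a rough illustration of "a detection becomes a work order only if policy agrees," the sketch below validates an event and picks its destinations. The required-field list mirrors the sample event; the sink names and the 0.8 threshold are assumptions made for the example.

```python
REQUIRED_FIELDS = {
    "event_type", "asset_id", "position_wgs84",
    "confidence", "model", "evidence",
}


def validate(event: dict) -> list:
    """Return a list of problems; an empty list means the event is usable."""
    problems = ["missing:" + key for key in sorted(REQUIRED_FIELDS - set(event))]
    conf = event.get("confidence")
    if not isinstance(conf, (int, float)) or not 0.0 <= conf <= 1.0:
        problems.append("confidence_out_of_range")
    return problems


def route(event: dict, work_order_min_conf: float = 0.8) -> list:
    """Pick sinks for an event (sink names are hypothetical)."""
    if validate(event):
        return ["dead_letter"]            # malformed events never reach operators
    sinks = ["data_lake"]                 # everything valid is kept for training
    if event["confidence"] >= work_order_min_conf:
        sinks += ["live_console", "case_system"]
    else:
        sinks.append("label_queue")       # becomes a labeled example instead
    return sinks
```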

Active learning closes the loop. The hard part isn’t training a model once; it’s improving it without wrecking precision. AI drone software prioritizes samples for review where disagreement is highest: model vs. model, model vs. heuristic, or model vs. human. Reviewers get tight tools: draw once, auto-propagate masks via optical flow, and accept/reject with keyboard-only shortcuts. New labels flow through a registry with dataset versioning, lineage, and bias checks before a retrain job runs.
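
Disagreement-first review can be sketched in a few lines. The example assumes each sample carries one score per source (models, heuristic, human) for the same class, and uses the score spread as the disagreement measure; both the field names and the choice of measure are illustrative.

```python
def disagreement(scores) -> float:
    """Spread between the most and least confident source for one sample."""
    return max(scores) - min(scores)


def prioritize(samples, k: int) -> list:
    """Return the k samples whose sources disagree the most.

    samples: iterable of dicts with an "id" and a non-empty "scores" list.
    """
    ranked = sorted(samples, key=lambda s: disagreement(s["scores"]), reverse=True)
    return ranked[:k]
```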

Change-detection that respects geometry. Comparing today’s mosaic to last quarter’s only works if the pipeline compensates for viewpoint, lens, and terrain. AI drone software registers tiles to a stable base map, masks shadows via sun position, and compares residuals after photometric normalization. For long linear assets, it tracks kilometer posts and creates “kilometer-by-kilometer” health indices that feed asset plans directly instead of living in a slideshow.

Compression with intent. Bandwidth and storage are budgets. AI drone software keeps two tiers: near-real-time clips for operators (GOPs tuned for low-latency seeking) and evidence-grade originals with checksums and timecode. For slow links, the edge sends thumbnails and sparse metadata now, bulk frames later. Downstream systems always know what’s “final” versus “preview,” which prevents accidental decisions on lossy proxies.

Joining vision with non-vision is where value pops. Thermal anomalies mean more when joined with SCADA tags; trespass patterns change when a shift ends; corrosion patterns correlate with wind-borne contaminants. AI drone software time-aligns these heterogeneous feeds and exposes simple queries: “Show me panels with >7% under-performance after 14:00 on days with gusts >20 kt,” or “Rank poles by combined vegetation risk and past outage proximity.”
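
The first query above reduces to a time-aligned filter once the feeds share a clock. The sketch assumes per-panel readings and a per-day maximum gust table already exist; the field names are hypothetical.

```python
from datetime import time


def flagged_panels(readings, daily_max_gust_kt) -> list:
    """Panels with >7% under-performance after 14:00 on days with gusts >20 kt.

    readings: iterable of dicts with "panel_id", "ts" (datetime), "underperf_pct".
    daily_max_gust_kt: dict mapping a date to that day's maximum gust in knots.
    """
    hits = set()
    for r in readings:
        ts = r["ts"]
        gusty_day = daily_max_gust_kt.get(ts.date(), 0.0) > 20.0
        if r["underperf_pct"] > 7.0 and ts.time() > time(14, 0) and gusty_day:
            hits.add(r["panel_id"])
    return sorted(hits)
```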

Quality gates, not vibes. Before analytics are trusted, products pass gates: coverage completeness (no gap wider than N meters), GSD within spec, reprojection RMSE below threshold, blur index under limit, and temperature confidence above minimum for thermal tasks. AI drone software refuses to publish maps or alerts that fail gates; it schedules re-flights or downgrades confidence rather than dumping questionable layers into production.
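
A gate check of this kind might look like the following. The gate names follow the list above; every numeric limit is a placeholder that a real program would take from its own spec.

```python
# Placeholder limits; real values come from the mission specification.
GATES = {
    "max_gap_m": 2.0,
    "max_gsd_cm": 3.0,
    "max_reproj_rmse_px": 1.0,
    "max_blur_index": 0.35,
    "min_temp_confidence": 0.8,
}


def failed_gates(product: dict, gates: dict = GATES) -> list:
    """Return the names of every gate the product fails."""
    failures = []
    if product["max_gap_m"] > gates["max_gap_m"]:
        failures.append("coverage_gap")
    if product["gsd_cm"] > gates["max_gsd_cm"]:
        failures.append("gsd")
    if product["reproj_rmse_px"] > gates["max_reproj_rmse_px"]:
        failures.append("reprojection")
    if product["blur_index"] > gates["max_blur_index"]:
        failures.append("blur")
    temp_conf = product.get("temp_confidence")  # present only for thermal tasks
    if temp_conf is not None and temp_conf < gates["min_temp_confidence"]:
        failures.append("temperature_confidence")
    return failures


def disposition(product: dict) -> str:
    """Publish only when every gate passes; otherwise ask for a re-flight."""
    return "publish" if not failed_gates(product) else "reflight"
```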

Privacy and policy in the data plane. Faces and license plates may need redaction; critical infrastructure may require on-prem processing. AI drone software runs redaction at the edge when policy demands, watermarks operator views vs. evidence copies, and uses customer-managed keys. Access is least-privilege: analysts can see masks and metadata; investigators can unlock originals with auditable approval.

From analytics to action, automatically. An alert that doesn’t change the world is noise. AI drone software binds events to actions: create a work order with location and evidence, update a digital twin, notify a patrol, or open an emergency channel. Each action confirms back—was a ticket closed, was a patrol dispatched—so future models learn what mattered.

Observability for models, not just machines. Pipelines export operational metrics (latency, throughput, backpressure) and analytic health (precision/recall by class, drift scores, false-positive cost). AI drone software compares live confusion matrices against baselines and automatically rolls back a model when failure modes spike—no midnight heroics required.
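
A rollback trigger can be as simple as comparing per-class recall against a stored baseline. This sketch assumes recall is already computed upstream; the 0.05 tolerated drop and the 200-sample minimum are illustrative defaults, not recommendations.

```python
def classes_regressed(baseline_recall: dict, live_recall: dict, live_counts: dict,
                      max_drop: float = 0.05, min_samples: int = 200) -> list:
    """Return classes whose live recall fell more than max_drop below baseline.

    Classes with too few live samples are skipped so noise does not
    trigger a rollback.
    """
    regressed = []
    for cls, base in baseline_recall.items():
        if live_counts.get(cls, 0) < min_samples:
            continue
        if base - live_recall.get(cls, 0.0) > max_drop:
            regressed.append(cls)
    return sorted(regressed)


def should_roll_back(baseline_recall, live_recall, live_counts) -> bool:
    """True when at least one well-sampled class has regressed."""
    return bool(classes_regressed(baseline_recall, live_recall, live_counts))
```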

Reproducibility in one command. Any map, clip, or alert should be rebuildable from raw inputs, exact code, and model signatures. AI drone software stamps artifacts with digests (code, config, weights) and stores replay recipes. When a regulator or customer asks “how did you know?”, you hit “replay,” not “we think.”
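
Stamping an artifact with digests needs nothing beyond the standard library. The sketch below hashes code, config, and weights separately and then derives one combined digest; the field names and the "recipe" key are assumptions for illustration.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 of a byte string."""
    return hashlib.sha256(data).hexdigest()


def stamp(code: bytes, config: dict, weights: bytes) -> dict:
    """Return per-input digests plus one combined digest for the artifact."""
    # Canonical JSON so that key order in the config does not change the hash.
    config_bytes = json.dumps(config, sort_keys=True, separators=(",", ":")).encode("utf-8")
    digests = {
        "code": sha256_hex(code),
        "config": sha256_hex(config_bytes),
        "weights": sha256_hex(weights),
    }
    combined = "".join(digests[key] for key in sorted(digests))
    return {**digests, "recipe": sha256_hex(combined.encode("ascii"))}
```

Two runs with the same inputs produce the same stamp, which is what lets a replay be compared against the original artifact.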

A word on formats and twins. Orthos and meshes are not endpoints; they are layers in systems that own work. AI drone software packages outputs for GIS and digital twins (Cloud-Optimized GeoTIFFs, 3D Tiles, vector layers) and aligns IDs with enterprise asset registries so analytics land on the right pole, string, valve, or perimeter segment.

Where A-Bots.com moves the needle. We don’t sell “magic AI.” We productionize it: ingestion that never drops time, calibration that stands up in court, analytic ensembles that reflect your assets, and event plumbing that closes tickets. When compliance or sovereignty is non-negotiable, we deploy AI drone software on-prem with the same observability and model governance you’d expect from the cloud—so your security team sleeps and your operations actually improve.

In short, good flying is table stakes. The advantage shows up when AI drone software turns telemetry into trusted products, products into ranked events, and events into actions that change outcomes—with clocks aligned, uncertainties tracked, and every step ready to be replayed tomorrow.

6.AI-Ready Payloads (Thermal / Dual-Spectrum)

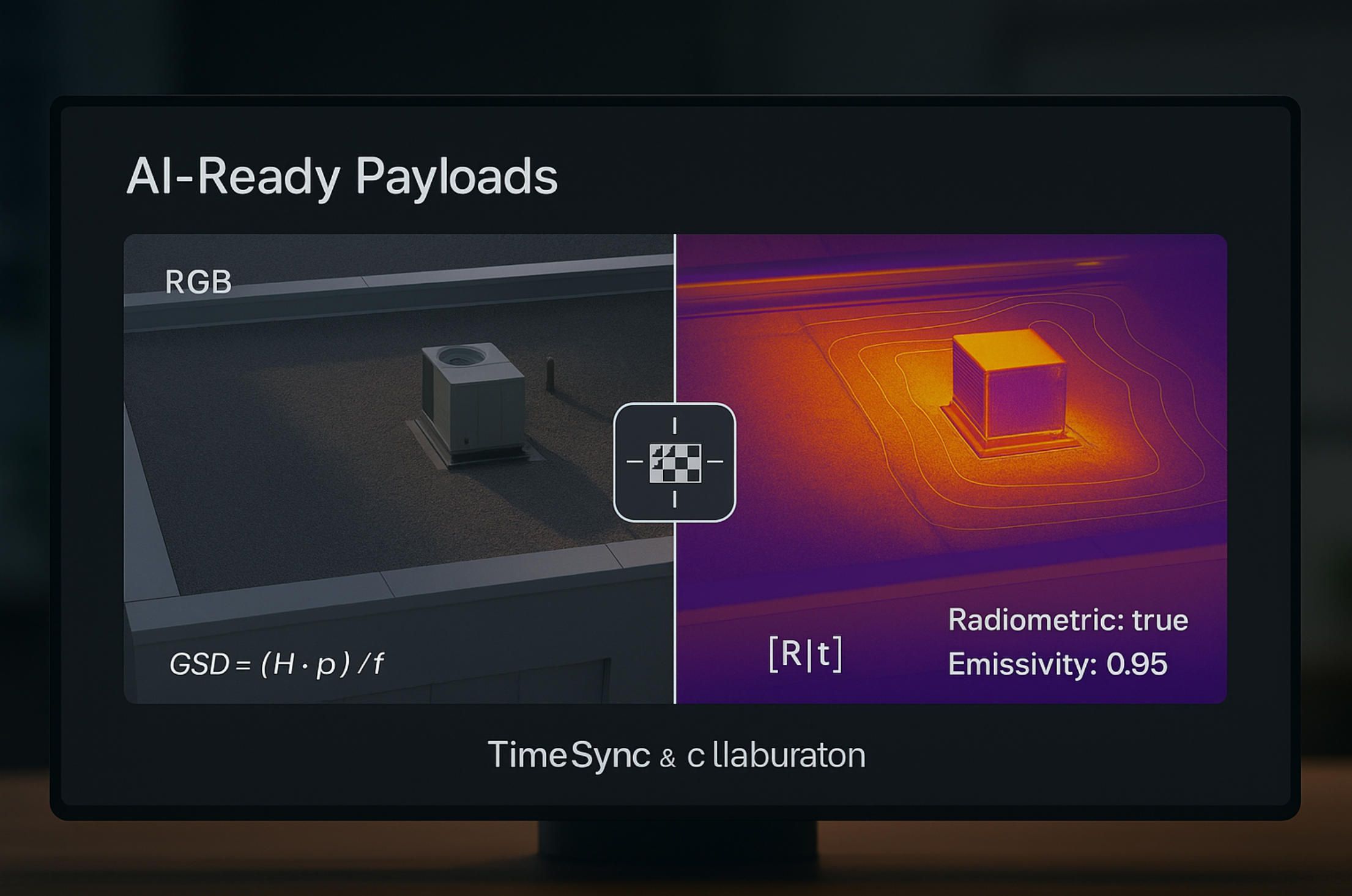

Sensors decide which problems you can solve; calibrations decide whether you solved them; and synchronization decides whether anyone believes you. “AI-ready” means the payload and the AI drone software behave like one instrument: optics, detectors, timing, and metadata are disciplined enough that models can turn photons into decisions without hand-waving.

Thermal is measurement, not a mood. LWIR cameras don’t see “heat,” they measure radiance through optics that add their own emission and attenuation. “Radiometric” payloads expose temperature per pixel with embedded calibration terms; non-radiometric streams do not. Before clever models, AI drone software applies the calibration chain—NUC (non-uniformity correction), emissivity, reflected apparent temperature, atmospheric transmittance—so a 4 °C delta is real, not a sun-glint illusion.

Dual-spectrum is a geometry problem. RGB and LWIR live in different optical paths with different distortion. Boresight matters: extrinsics [R∣t] between the RGB and thermal axes must be solved and kept stable. At long distance a homography can align planes; at inspection range you need depth to resolve parallax. Practical recipe: factory boresight with a heated checker, field verification on a high-contrast target, and continuous drift monitoring via feature reprojection error. The AI drone software then fuses streams early (channel-stacked tensors), mid-level (feature fusion), or late (decision-level), choosing the path that fits your compute and latency budget.
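
The point about homography versus depth can be made numeric with the standard two-camera disparity relation, shift ≈ f·B/(Z·p), measured against an alignment done at infinity. The 30 mm baseline between the RGB and thermal apertures is a hypothetical value; the focal length and pixel pitch reuse the thermal example from this section.

```python
def parallax_px(baseline_m: float, depth_m: float,
                focal_m: float, pixel_pitch_m: float) -> float:
    """Pixel shift between two parallel cameras for a point at depth_m,
    relative to an alignment that is exact at infinity."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return (focal_m * baseline_m) / (depth_m * pixel_pitch_m)


# Assumed 30 mm baseline, 19 mm lens, 12 um pitch.
near = parallax_px(0.03, 5.0, 19e-3, 12e-6)    # inspection range
far = parallax_px(0.03, 100.0, 19e-3, 12e-6)   # stand-off range
print(f"5 m: {near:.2f} px, 100 m: {far:.3f} px")
```

Under these assumptions the misalignment is several pixels at inspection range and sub-pixel at 100 m, which is why a fixed homography holds up only at distance.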

Know your IFOV before you promise detection. Ground sampling distance for a pinhole model is roughly

GSD≈(H⋅p)/f

where H is slant range, p is pixel pitch, and f is focal length (in consistent units). If your thermal core has p = 12 μm and an f = 19 mm lens at H = 50 m, GSD ≈ 31.6 mm/px. A 5 mm crack will not be “detected” by magic; you need closer range, a longer focal length, or a model that flags indirect symptoms. AI drone software enforces these physics as policy: it blocks analytics when GSD is out-of-spec instead of generating confident nonsense.
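
The same arithmetic can serve as a pre-flight check. The sketch uses the pinhole relation from the text; the requirement of two pixels across the smallest feature is an assumed rule of thumb, not a figure from this article.

```python
def gsd_m(range_m: float, pixel_pitch_m: float, focal_m: float) -> float:
    """Ground sampling distance in metres per pixel (pinhole model)."""
    return range_m * pixel_pitch_m / focal_m


def analytics_allowed(gsd: float, smallest_feature_m: float,
                      px_on_target: float = 2.0) -> bool:
    """Allow analytics only if the feature spans at least px_on_target pixels."""
    return gsd * px_on_target <= smallest_feature_m


def max_range_m(smallest_feature_m: float, pixel_pitch_m: float,
                focal_m: float, px_on_target: float = 2.0) -> float:
    """Longest slant range at which the feature still spans px_on_target pixels."""
    return smallest_feature_m * focal_m / (pixel_pitch_m * px_on_target)


gsd = gsd_m(50.0, 12e-6, 19e-3)
print(f"GSD at 50 m: {gsd * 1000:.1f} mm/px")
print("5 mm crack analyzable:", analytics_allowed(gsd, 0.005))
print(f"Max range for 5 mm: {max_range_m(0.005, 12e-6, 19e-3):.2f} m")
```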

Timing is truth. Payload clocks drift and rolling shutters smear. “AI-ready” means hardware time-stamps on frames, PPS/PTP discipline across flight controller and companion, and deterministic capture paths (zero-copy, fixed buffer depths). The AI drone software aligns RGB, thermal, IMU and gimbal pose at millisecond granularity so a segmentation mask corresponds to the pose the estimator actually believed, not a nearby one.

Optics matter more than slogans. Thermal lenses have f-numbers, MTF, and vignetting too. A “wider” lens may kill contrast at the spatial frequencies your task needs. Choose glass for the signal, not the spec sheet: e.g., sub-50 mK NETD helps only if your optics preserve it at the edge of the field, and only if the AI drone software compensates for vignetting before training and inference.

Radiometric video vs. radiometric stills. Many cores deliver radiometric data only for stills; video often arrives as 8-bit “appearance.” If your workflow requires time-series temperature (leak rate, cooling curve), confirm radiometric video or schedule high-rate still bursts and reconstruct a stream. The AI drone software must mark which frames are temperature-grounded and which are appearance-only, so downstream rules don’t compare apples and oranges.

NUC is a behavior, not a button. Shutter-based NUC steals frames; shutter-less NUC drifts more. Programmatic NUC tied to mission state prevents bad choices: run NUC on climb-out, mask it during critical tracking, and force it before a radiometric capture set. The AI drone software tracks drift (fixed-pattern residuals over flats) and requests NUC when thresholds trip, not when an operator remembers.
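
Programmatic NUC can be expressed as a small decision function. The rule order below (critical tracking defers everything, a pending radiometric capture forces a run) is one possible resolution of the conflicts, assumed for this sketch; the phase name and the drift metric are hypothetical.

```python
def nuc_action(phase: str, drift: float, drift_limit: float,
               tracking_critical: bool, radiometric_capture_next: bool) -> str:
    """Decide whether to run a non-uniformity correction right now.

    Returns "defer" (do not steal frames), "run", or "skip".
    """
    if tracking_critical:
        return "defer"                 # mask NUC while a track must not drop
    if radiometric_capture_next:
        return "run"                   # force NUC before a measurement set
    if phase == "climb_out":
        return "run"                   # cheap moment to correct
    if drift > drift_limit:
        return "run"                   # fixed-pattern residual tripped the limit
    return "skip"
```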

Fusion that respects failure modes. RGB guides edges and shape; thermal carries emissivity-dependent signal that ignores texture. When smoke, fog, or glare win, gate the fusion: down-weight RGB features, or switch to thermal-dominant models. When the glass sees reflections (common in LWIR near shiny surfaces), gate thermal and trust RGB contours. This is where AI drone software earns its keep: context-aware fusion policies keep false positives from exploding in marginal conditions.

Edge compute is not a blank check. Thermal arrays are smaller; super-resolution and cross-modal enhancement are tempting—and expensive. Budget them. A practical path is tiled inference with fixed latency: run RGB detector at full rate, run thermal-guided refinement at half-rate in regions of interest, and back off to INT8 when SoC temperature climbs. The AI drone software treats FPS as a resource to schedule, not a wish.

Gimbal handshake: mechanics meet math. Sub-pixel jitter will trash change-detection and radiometric statistics. Use a payload and gimbal combo that publishes stabilized attitude at camera-exposure time, not controller loop time. The AI drone software binds frame time, gimbal state, and vehicle pose into a single record so mosaics, isotherms, and event footprints land in the right place the first time.

Thermal pitfalls you must code around. Glass is a mirror in LWIR. Polished metals reflect the sky. Black paint isn’t “black”; emissivity varies with wavelength and angle. Wind and sun angle shift apparent temperature minutes apart. “AI-ready” means your AI drone software captures scene context (wind, insolation, angle of incidence), applies emissivity tables per material when known, and tags outputs with confidence that downstream systems respect.

Verification like you mean it. A payload that never sees a blackbody is a payload you’re guessing with. Field kits with two reference plates (hot/cold) let crews prove radiometric sanity daily. The AI drone software logs deltas against traceable references, writes them into artifact metadata, and refuses “evidence-grade” labels when drift exceeds policy.

Products, not pretty pictures. Operations don’t want frames; they want structured findings. An “AI-ready” payload exposes a manifest and a consistent event schema so analytics flow into action:

    {
      "payload_manifest": {
        "rgb": { "focal_mm": 24.0, "distortion": "brown5", "timestamp_source": "hardware" },
        "thermal": { "focal_mm": 19.0, "radiometric": true, "netd_mk": 45, "emissivity": 0.95 }
      },
      "finding": {
        "type": "overheat.anomaly",
        "position_wgs84": [41.8803, -87.6298, 186.2],
        "roi_polygon": "geojson",
        "temp_c": 78.4,
        "delta_c": 12.7,
        "confidence": 0.91,
        "model": { "name": "dual_fusion_v12", "sha256": "…" }
      }
    }

Downstream, that schema lets the ROC open a ticket with thresholds grounded in physics, not vibes.

When dual-spectrum isn’t enough. Some environments call for SWIR (through smoke/fog), NIR or true multispectral for vegetation/soiling, or even polarization for stress fields on glass. The pattern stays: characterize optics, solve geometry, synchronize clocks, and make the AI drone software carry calibration and confidence forward so decisions age well.

Thermal hygiene during flight. Dew, lens cap heat soak, and rapid cabin-to-ambient shifts ruin early frames. Build pre-launch warm-up and purge cycles into missions; detect fogging with contrast statistics; and block analytics until hygiene recovers. The AI drone software treats these as flight-phase policies, not operator folklore.

Edge-to-enterprise without loss of meaning. Radiometric TIFFs, COGs, and 3D tiles should carry per-tile confidence, GSD, and calibration hashes. When an analyst draws a box on a hot string, the backend can recompute temperatures from raw with the exact coefficients and the exact code that flew. That reproducibility is a property of your AI drone software, not a hope pinned on a vendor viewer.

In short, “AI-ready” is a contract between physics and computation. The payload delivers stable photons and honest metadata; the AI drone software delivers timing, calibration, fusion, and policy that respect reality. Get that contract right, and dual-spectrum becomes more than marketing—it becomes a measuring instrument that answers operational questions on the first sortie, and answers them the same way tomorrow.

7.C-UAS (AI Counter-Drone)

Counter-UAS is not one sensor or one box; it’s a disciplined pipeline that turns weak, noisy cues into defensible actions without creating new risks. Done right, AI drone software fuses RF, radar, EO/IR, acoustic, and network telemetry into a single, explainable track, then applies policy that is legal, proportional, and safe for people on the ground. The job is to detect early, classify correctly, track continuously, infer intent, and only then decide what to do—with every step time-stamped, reproducible, and auditable.

Sensing is plural by design.

RF receivers hear command links, telemetry bursts, Remote ID beacons, and protocol handshakes; compact radars provide range-Doppler for small cross-sections; EO/IR gimbals confirm visual class and payload hints; acoustic arrays localize with phase/time differences in close-in perimeters. “AI-ready” means each sensor is calibrated (frequency/phase, range gates, camera intrinsics/extrinsics), time-disciplined (PPS/PTP), and described in metadata the same way every time. AI drone software subscribes to all of them, aligns streams at millisecond granularity, and refuses to “auto-heal” gaps by guessing.

From blips to tracks.

Detectors fire; trackers make sense. Practical systems run multi-hypothesis or JPDA-style trackers over radar plots while visual trackers (KLT, correlation, or deep features) maintain lock across EO/IR frames. RF direction finding and TDOA contribute bearing/ellipse constraints. AI drone software carries per-track covariance and provenance (which sensor supported what), so an operator can see why the system believes what it believes—and a prosecutor can replay it later.

Classification that respects context.

A quadcopter is not a bird because its micro-Doppler is different; a plane is not a drone because its trajectory budget, RCS, and kinematics are different. Visual classifiers help, but the robust wins come from feature stacks: trajectory smoothness and loiter patterns, climb rates, acceleration spectra, RCS vs. aspect, RF fingerprinting (spectral shape, hopping behavior). AI drone software fuses these features and expresses results with uncertainty, not bravado, so downstream policy can scale response rather than flip a single “drone/not” bit.

Intent is a behavior, not a label.

Where is it headed? How quickly? How does it react to geofence boundaries or illumination? Does it loiter over a substation gate or approach a VIP ingress vector? AI drone software evaluates hypotheses (benign transit, curiosity, reconnaissance, hostile) against map semantics—keep-out volumes, runway finals, fuel yards—and promotes risk as evidence accumulates. False positives fall when you reason about behavior and place, not pixels alone.
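
"Promotes risk as evidence accumulates" maps naturally onto a Bayesian update over the four hypotheses named above. This is a sketch of that idea, not a description of any fielded system; the likelihood values in the usage lines are invented for illustration.

```python
HYPOTHESES = ("benign_transit", "curiosity", "reconnaissance", "hostile")


def update(prior: dict, likelihood: dict) -> dict:
    """One Bayes step: posterior is proportional to prior times likelihood.

    likelihood[h] is P(observed behavior | hypothesis h).
    """
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    if total <= 0.0:
        raise ValueError("observation has zero probability under every hypothesis")
    return {h: value / total for h, value in unnormalized.items()}


# Start undecided, then observe loitering over a keep-out volume.
belief = {h: 0.25 for h in HYPOTHESES}
loiter = {"benign_transit": 0.05, "curiosity": 0.30,
          "reconnaissance": 0.60, "hostile": 0.50}
belief = update(belief, loiter)
```

Each new behavior (approach vector, reaction to illumination) is another `update` call, so the risk shown to the operator always traces back to a list of observations.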

Remote ID and “friendlies.”

Not every UAV near your fence is a threat. Whitelists (house drones, training flights, partner operations) and Remote ID/UTM feeds prevent fratricide. AI drone software correlates tracks with declarative plans and beacons, flags conformance, and demands extra evidence before escalating on an identified, conforming aircraft. When declarations and observations diverge, the system raises a “conformance break,” not a panic.
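
A conformance check against a declared plan can be sketched as a corridor test. The example assumes both the track and the plan are already projected into a local metric frame (x, y in metres); the 30 m corridor half-width is a placeholder.

```python
import math


def dist_point_segment(p, a, b) -> float:
    """Shortest 2D distance from point p to the segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.hypot(px - ax, py - ay)
    # Clamp the projection so the closest point stays on the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def conformance(track_xy, plan_xy, corridor_m: float = 30.0) -> str:
    """Return "ok" if every track point lies within the corridor of the plan."""
    if not track_xy or len(plan_xy) < 2:
        raise ValueError("need at least one track point and two plan waypoints")
    legs = list(zip(plan_xy, plan_xy[1:]))
    worst = max(min(dist_point_segment(p, a, b) for a, b in legs) for p in track_xy)
    return "ok" if worst <= corridor_m else "conformance_break"
```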

Edge vs. network budgets.

Close-in breaches unfold in seconds; regional airspace surveillance can take minutes. AI drone software runs fast detectors and trackers at the edge (perimeter mast or mobile unit) and pushes heavier cross-site fusion and analytics to the ROC. Latency budgets are explicit: ≤ 200 ms for close-in track updates, ≤ 1 s for cross-sensor association, seconds for visual confirmation at long range. If links wobble, the edge keeps tracking and stores evidence; when bandwidth returns, the ROC receives the full trace with original timestamps.

Adversarial reality: spoof, jam, decoy.

Attackers probe. They spoof beacons, vary airframes, fly in low-glare twilight, and hug clutter. AI drone software designs for that: sensor disagreements raise suspicion (e.g., RF says “drone” with no kinematic support), classifier abstention is allowed, and the system prefers “I need more evidence” over confident mistakes. Model governance includes red-team datasets (twilight birds, waving flags, rooftop HVAC), adversarial augmentations, and drift monitors that roll back models when the false-alarm cost spikes.

Mitigation is policy, not impulse.

Only authorized entities can apply active countermeasures—and always within law and safety doctrine. Escalation ladders usually start with observe → illuminate/announce → coordinate with airspace services → physical barrier management. Where lawful, electronic or protocol-level effects are considered last, with pre-defined safety envelopes and abort rules. AI drone software encodes these ladders as machine-readable policy and proves that each step was justified by evidence at the time, not hindsight.

    {
      "c_uas_event": "escalation.proposed",
      "track_id": "trk-5A7C",
      "risk_score": 0.82,
      "evidence": {
        "radar_pd": 0.97, "rf_fingerprint": "match:classC",
        "eo_class": "quadcopter", "remote_id": "none",
        "behaviors": ["loiter", "vector_to_asset:substation_gate"]
      },
      "policy_path": ["observe","illuminate","notify_authority","hold"],
      "safety": { "no_overcrowd": true, "weather_ok": true },
      "audit": { "ts": "2025-08-14T10:21:33Z", "models": ["trk_v15","cls_mix_v7"] }
    }

A schema like this lets supervisors see the “why,” not just the “what,” and lets auditors replay exactly which AI drone software versions made the call.

Deconfliction and fail-safe.

Mitigation can’t endanger bystanders or manned traffic. Geofence hierarchies (hard, soft, advisory), minimum-risk maneuvers, and “abort on uncertainty” rules must be encoded—not implied. AI drone software checks NOTAMs, ADS-B, and local flight ops before approving any action and continuously reevaluates deconfliction; if uncertainty rises, it steps down the ladder rather than pressing on.

Evidence that ages well.

If it’s not reproducible, it’s not evidence. Every decision artifact—sensor snippets, tracks, classifier outputs, policy steps—carries hashes, timebases, and model signatures. AI drone software can rebuild the same track from raw I/Q data, radar plots, and frames with the exact weights and thresholds that ran that day. That turns “we think” into “we can show.”

Metrics that matter.

Probability of detection at range, false-alarm rates per hour by environment class, track continuity (ID switches per minute), time-to-classification at given ranges, and mean time-to-escalation under policy are the heartbeat of a C-UAS program. AI drone software reports them by model version, sensor config, and weather, so engineering and operations can tune without guesswork.
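
Two of these metrics are simple enough to pin down in code, which helps keep reports comparable across sites. The sketch assumes false alarms are already adjudicated and that track IDs are listed per frame for a single ground-truth target; both input conventions are assumptions.

```python
def false_alarms_per_hour(n_false: int, observed_s: float) -> float:
    """False-alarm rate normalized to one hour of observation."""
    if observed_s <= 0:
        raise ValueError("observation time must be positive")
    return n_false / (observed_s / 3600.0)


def id_switches(track_ids) -> int:
    """Count how often the assigned track ID changes for one real target."""
    ids = list(track_ids)
    return sum(1 for prev, cur in zip(ids, ids[1:]) if prev != cur)


def id_switches_per_minute(track_ids, duration_s: float) -> float:
    """Track-continuity metric: ID switches normalized to one minute."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return id_switches(track_ids) / (duration_s / 60.0)
```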

Test like you’ll fight.

SITL/HITL for trackers and classifiers; RF playback of captured emissions; radar target simulators; photoreal EO/IR with controlled motion and glare; and full “blue vs. red” exercises that measure system honesty under stress. AI drone software treats these benches as CI: if a change widens false positives in sunset conditions or reduces track continuity in clutter, the build doesn’t go to the perimeter.

Privacy and proportionality.

Perimeter cameras see neighborhoods; mics hear more than rotors. AI drone software enforces least-privilege access, on-device redaction for non-targets, and retention rules fit to jurisdiction. It also separates operator situational awareness from evidence copies—watermarked previews for the former, signed originals for the latter—so oversight can trust both.

Interoperability avoids dead ends.

C-UAS rarely lives alone. Integration with C2/TAK, PSAP/CAD, and site security systems turns detections into coordinated response. AI drone software publishes typed events to message buses, supports standard maps/overlays, and consumes UTM/Remote ID so agencies don’t have to swivel-chair between screens.

A word on “non-destructive first.”

The safest countermeasure is often to not create a new hazard. Lights, voice, patrol dispatch, and coordination with airspace services resolve many events. Where active interdiction is lawful and absolutely necessary, it is engineered with minimum-risk rules and real-time abort checks. AI drone software is the arbiter that refuses to escalate when evidence is thin or neighbors are too close.

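    # Python sketch: mitigation arbiter (policy-first, safety-first).
    # The threshold and all boolean inputs are supplied by site policy.
    def propose_steps(risk, thresh, conformance_break, no_friendly_match,
                      airspace_clear, crowd_low, authority_granted):
        """Return the escalation steps to propose to the operator."""
        if risk > thresh and conformance_break and no_friendly_match:
            steps = ["observe", "illuminate"]
            if airspace_clear and crowd_low and authority_granted:
                steps.append("notify_authority")
            else:
                steps.append("hold")
        else:
            steps = ["observe"]
        return steps

    # Operator sees rationale + evidence; every acceptance is logged.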

Where A-Bots.com helps without adding lock-in.

We don’t sell “magic boxes.” We productionize the layer that makes disparate sensors, models, and policies act like one system: time-honest fusion, behavior-level classification, policy codified as code, and replayable evidence. When mandates require on-prem, we deploy AI drone software behind your perimeter with the same observability and governance we’d expect in the cloud, and we integrate with your C2 and security tooling so detections become coordinated, lawful action.

Counter-UAS is a systems discipline. Sensors give you fragments; AI drone software turns them into tracks, tracks into intent, and intent into proportionate, legal response—leaving a defensible trail the whole way.

8.Industries Where AI Drone Software Is Used

Across industries, the pattern repeats: sensors turn the world into signals; AI drone software turns signals into events; operations turn events into work that changes outcomes. The details differ—compliance regimes, latency budgets, safety envelopes—but the engineering contract stays the same: time-honest data, calibrated analytics, policy-bound actions, and evidence that can be replayed tomorrow.

Energy & Utilities (T&D, Substations).

Grid owners need fewer truck rolls and fewer surprises. Routine patrols become “evidence runs” when AI drone software enforces GSD limits, fuses RGB+thermal for hotspot isolation, and scores vegetation encroachment against conductor sag and wind. Substation workflows depend on radiometric sanity (emissivity, NUC schedules) and asset semantics (breaker IDs, bay topology). Events are typed, not anecdotal—overheat.anomaly, hardware.missing, veg.encroach.risk—and flow straight into ESRI/SAP with location, confidence, and clips. The platform proves improvement with service metrics: fewer unplanned outages, shorter MTTR, and a rising ratio of planned to emergency work.

Oil & Gas (Pipelines, Refineries, Upstream Pads).

Methane and VOCs are compliance and reputation risks. Payloads vary—OGI, TDLAS, RGB/thermal—but the winning loop is the same: AI drone software binds concentration estimates to wind vectors and flight paths, quantifies leaks with uncertainty, and stamps each claim with the model and calibration chain. For pipelines, change-detection along ROWs highlights new dig marks and encroachments; for flare stacks, thermal signatures reveal combustion inefficiencies. Latency budgets split: near-real-time alerts to shift supervisors; full-physics quantification for monthly reports. The software never pretends a JPEG is a measurement; it marks what is radiometric and what is “appearance only.”

Solar & Wind (Utility-Scale Renewables).

PV strings underperform for boring reasons—soiling, cracked cells, wiring faults—and those reasons show up in heat signatures and power curves. AI drone software merges thermal tiles with SCADA, scores strings against ambient/wind, and produces ticketable defects with confidence deltas (delta_c, prob_fault). In wind, blade inspections generate meshes and semantic masks for leading-edge erosion, lightning strikes, and trailing-edge separation. Planned downtime is scarce; the platform schedules sorties against forecasted lulls, validates coverage completeness, and blocks publication if blur or reprojection error exceed gates. Optimization is measurable: fewer truck climbs per MWh, better capacity factors, safer maintenance windows.

Construction & AEC (Vertical & Heavy Civil).

Progress isn’t a slideshow; it’s a contract. AI drone software aligns orthos and 3D tiles to BIM, detects percent-complete on façades and roof packages, and computes stockpile volumes with confidence bounds. “Safety AI” flags edge exposure, missing guardrails, and housekeeping issues with enough context that a superintendent can act. The data plane speaks in RFIs and pay-apps: events map to line items, not folders, and each claim is traceable to georegistered evidence. Latency matters: live overlays for daily standups, curated packages for weekly owner meetings, and archival-grade deliverables once a milestone closes.

Public Safety & Municipal DFR.

Response time, not footage, saves minutes. City programs lean on AI drone software that compresses dispatch-to-launch, maintains conformance with airspace plans, and streams stabilized, policy-compliant views to command. Privacy is mandatory: on-prem runtimes, watermarked previews for operators, and access controls that separate situational awareness from evidence copies. The platform shifts from navigation to comprehension on-scene—target re-ID, thermal/RGB fusion, trajectory prediction—and logs every choice with timebases and model signatures so oversight sees fact, not folklore.

Transportation: Rail, Roads, Ports.

On rail corridors, ballast washouts, encroachments, and clearance violations must surface before a dispatcher hears about them. AI drone software fuses track geometry with detections, produces “slow order” proposals when risk crosses thresholds, and proves that a false alarm would have been cheaper than a miss. Bridge and culvert inspections become consistent because coverage, GSD, and lighting are policy, not preference. At ports, the same stack counts container stacks, checks restricted zones, and joins detections with access-control and berth schedules; outcomes are fewer bottlenecks and defensible security logs.

Telecom (Macro, Rooftop, Small Cell).

Shot lists used to be clipboards. Now AI drone software turns “take pictures” into intent: azimuth/tilt/roll capture plans, bracketed exposures, and mast segments sequenced to avoid glare and motion blur. The analytics layer reads labels, serials, and corrosion masks; integrates PIM/VSWR results from the NOC; and opens work orders when a bracket is misaligned or a mount point shows fatigue. A boring but crucial feature: the platform refuses a deliverable if the angle-of-incidence or GSD misses spec, sparing everyone a second visit.

Mining & Quarries.

Volumetry, haul road compliance, and highwall risk live or die by geometry. AI drone software enforces flight baselines and overlaps, computes volumes with uncertainty envelopes, and flags berms below policy height before a safety audit does. For blast planning, the system aligns historical benches, tracks creep, and ensures decisions reference meshes with known reprojection error. Operators get timely numbers; auditors get reproducibility without PDFs of screenshots.

Agriculture & Forestry.

Crop vigor is only one layer. AI drone software stitches multispectral orthos with sun-angle and wind metadata, deglints water, and produces indices that agronomists can trust. Counting plants, diagnosing nutrient stress, or spotting irrigation leaks turns into work orders in farm-management systems. Forestry uses the same stack differently: post-storm damage mapping, canopy gaps, pest signatures, and fuel-load metrics for prescribed burns. The clock runs slower than DFR, but the need for calibration and time-series discipline is higher.

Insurance & Property Claims.

After hail or wind, speed meets evidence. AI drone software plans roof runs keyed to slope, pitch, and material; classifies damage types with per-mask confidence; and emits a claim package that a human can rubber-stamp or reject with comments. Fairness matters: the system keeps abstention when glare, wetness, or obstructions kill certainty, and schedules re-flights instead of inflating confidence by wish. Carriers see fewer disputes because every pixel ties to a time-stamp, pose, and model signature.

Environmental Monitoring & Critical Infrastructure.

Wetlands, shorelines, levees, and dams demand time-consistent products, not one-off maps. AI drone software registers each new mosaic to a stable base, normalizes photometry, and computes change layers that regulators can accept. For pipelines over fragile terrain, the same engine scores rutting and erosion with geometry-aware thresholds. The policy layer assigns retention by regime, stores raw and cooked products with hashes, and answers “how did you know?” with a literal replay, not a memo.

Airports & Runways.

FOD, rubber build-up, light alignment, and wildlife pressure define runway health. AI drone software flies windows between movements, pushes FOD events with bounding boxes and size estimates, and integrates with NOTAM workflows so ops can close and reopen segments with auditable confidence. Latency budgets are harsh; the edge runs the first pass, the ROC refines after the strip is safe again.

Perimeter Security & Industrial Sites.

Factories, refineries, data centers, and warehouses depend on arrivals and exceptions. AI drone software supplies patrols as code—repeatable routes, lighting-aware gimbal cues, and redaction on-device when policy demands—and reduces operator load by promoting only behavior-level anomalies. The result is fewer “motion near fence” false positives and more “person loitering near gate with re-appearance after patrol pass” events that map directly to a response ladder.

Across all of these, the contracts are consistent. AI drone software enforces measurement before inference, uncertainty before bravado, and policy before action. It speaks the language of the business system it serves—work orders, slow orders, pay apps, claims, SCADA tags—so detections do not die in dashboards. It also leaves a defensible trail: synchronized logs, model versions, calibration hashes, and schema-stamped events that an auditor can replay without calling your best engineer at midnight.
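The ordering “measurement before inference, uncertainty before bravado, policy before action” can be read as a chain of gates. Here is a minimal sketch under stated assumptions: the `Detection` fields, the outcome strings, and the `event/1` schema tag are invented for the example, not taken from any particular product.

```python
from dataclasses import dataclass

SCHEMA_VERSION = "event/1"  # hypothetical schema tag stamped on every event


@dataclass(frozen=True)
class Detection:
    label: str
    confidence: float
    calibration_ok: bool  # did the sensor pass its calibration check?
    model_version: str


def decide(det: Detection, min_confidence: float, allowed_labels: set[str]) -> dict:
    """Apply the gates in order: measurement, then uncertainty, then policy."""
    if not det.calibration_ok:
        outcome = "rejected_uncalibrated"      # measurement before inference
    elif det.confidence < min_confidence:
        outcome = "abstained_low_confidence"   # uncertainty before bravado
    elif det.label not in allowed_labels:
        outcome = "blocked_by_policy"          # policy before action
    else:
        outcome = "work_order_requested"
    # Every outcome, including refusals, is emitted as a stamped event for replay.
    return {
        "schema": SCHEMA_VERSION,
        "model_version": det.model_version,
        "label": det.label,
        "confidence": det.confidence,
        "outcome": outcome,
    }
```

Emitting refusals as events, not just successes, is what lets an auditor see why the system stayed silent on a given frame.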

Where a partner helps is not in “magic AI,” but in the glue that makes the pieces act like one system. A-Bots.com productionizes that glue per industry, with connectors to your GIS/CMMS/SCADA/BIM, edge models tuned to your assets, and on-prem deployments when sovereignty or privacy is non-negotiable, so your AI drone software stops being a demo and starts paying off in the metrics that matter to you. To place an order, visit the IoT Application Development Services page.

✅ Hashtags

#AIDroneSoftware

#EdgeAI

#RemoteOps

#BVLOS

#UTM

#RemoteID

#DroneInABox

#DFR

#PX4

#ArduPilot

#Auterion

#ThermalImaging

#DualSpectrum

#CUAS

#DigitalTwin

#MLOps

#DroneAnalytics

#PublicSafety

#Utilities

#ConstructionTech

#Renewables

Copyright © Alpha Systems LTD All rights reserved.