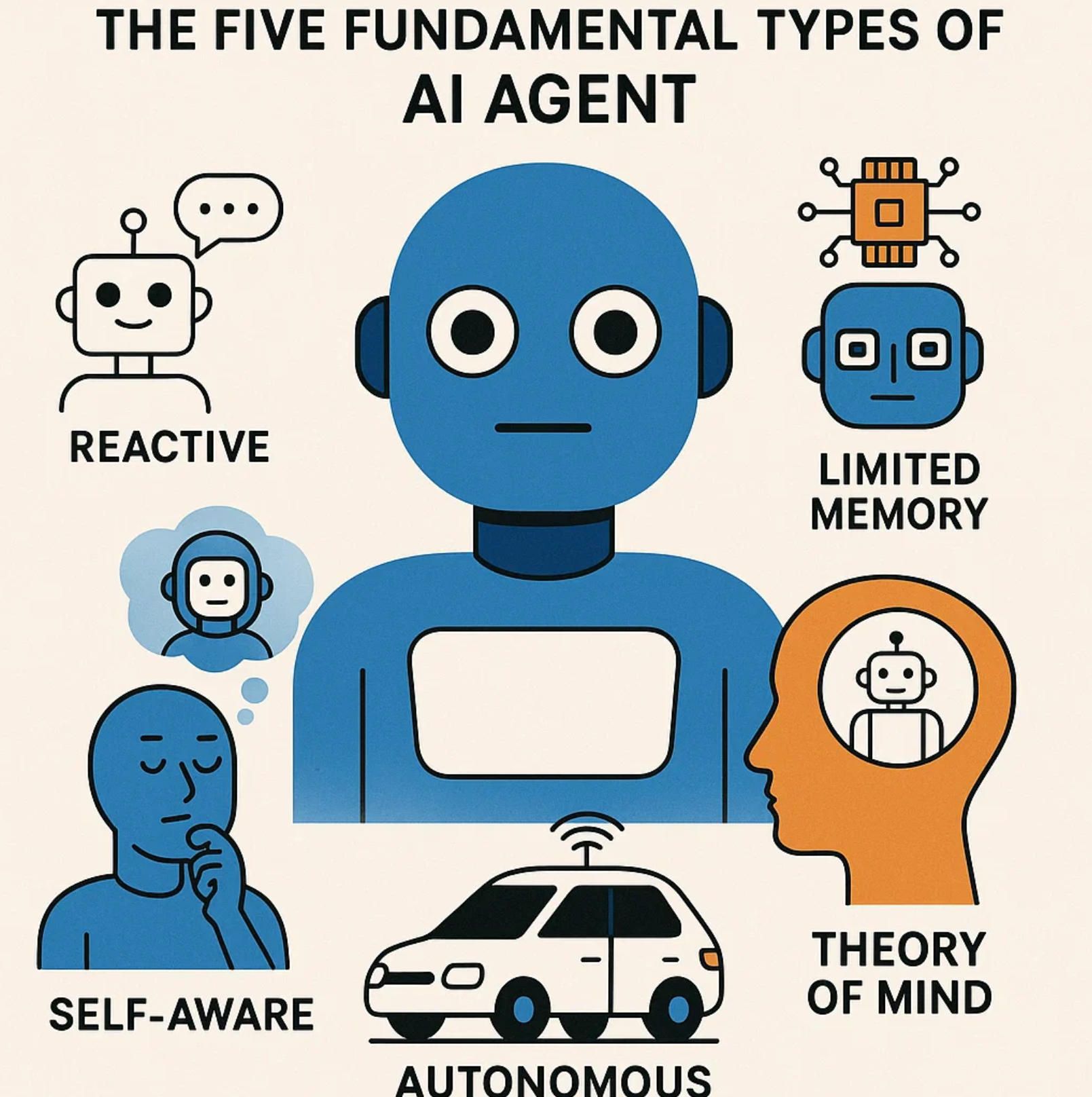

Beyond Chatbots: The Five Fundamental Types of AI Agent

Why “Types of AI Agent” Still Matters in 2025

Reactive Agents — Fast Reflexes, Zero Memory

Deliberative Agents — Model-Based Reasoning & Planning

Goal- & Utility-Based Agents — Rational Decision Makers

Learning Agents — Policy, Value & World-Model Adaptation

Multi-Agent & Hybrid Ecosystems — From Swarms to Hierarchies

Synthesis & Outlook: Blurring Lines Between Types

Why “Types of AI Agent” Still Matters in 2025

In the mid-2020s the AI conversation is dominated by spectacular demos: code-writing copilots, photoreal diffusion models, and large language models that can pass the bar exam. It is tempting to believe the old taxonomies—“reactive,” “deliberative,” “learning,” “multi-agent”—were useful only for the early decades of research when memory was measured in kilobytes and “intelligence” meant a chess program running on a workstation. Yet every engineering team that has tried to ship a production-grade autonomous system in the past two years quickly discovers that understanding the types of AI agent is more than intellectual archaeology; it is a survival skill.

Evolution Without Erasure

The lineage from symbolic expert systems to transformer-based agents is not a clean replacement but an accretive layering. Reactive architectures, born in subsumption robotics, survive in motor-control loops of surgical robots that need millisecond reflexes. Deliberative planners, once the realm of academic path-finding, orchestrate fleets of last-mile delivery bots whose routes reshape every few seconds under traffic constraints. Goal-based agents inject utility calculus into energy-grid dispatch, balancing carbon footprint against peak-load penalties. Learning agents, super-charged by self-supervised world models and reinforcement learning, now update policies in the field, sometimes at a scale where a bad update becomes dangerous. Finally, multi-agent swarms coordinate drone shows and warehouse pick-and-pack operations where no single brain can own global state.

Strip away the buzzwords and the same questions resurface:

- What does the agent know about its environment?

- How does it decide what to do next?

- Can it adapt without compromising safety or compliance?

- How does it coordinate with its peers—or choose not to?

The classical typology slices the problem space along exactly these axes. In 2025 the slicing is still valid; we have merely expanded the set of implementation tricks.

Complexity That Can’t Be Outsourced

Modern AI teams resemble micro-utilities: they ingest real-time data, convert it into intermediate representations, and emit actions that alter the world. When those actions are reversible—re-ranking a product page or tweaking an ad bid—classification errors are absorbed as cost. When the actions are physical—accelerating a forklift, adjusting insulin dosage, steering a drone—the cost is measured in lawsuits, carbon, even human life.

Product managers therefore demand explainable design intents long before the first line of code is written. A sentence like “We need a learning agent” implicitly commits the organisation to a continuous-data pipeline, an offline-to-online evaluation harness, rollback strategies for catastrophic forgetting, and a governance stack that can satisfy ISO/IEC 42001 auditors. A statement such as “We could get by with a reactive agent” signals a very different set of constraints: deterministic timing analysis, formally verified decision tables, explicit hardware watchdogs. The taxonomy acts as shorthand for an entire risk-reward envelope, enabling cross-functional stakeholders to converge on architectural scope without drowning in mathematical detail.

Invisible Interfaces, Visible Consequences

2024 was the year consumer LLM wrappers began calling themselves “agents.” They chain prompts, read emails, and draft conference slides. Yet if the wrapper lacks persistent state or the ability to learn from interaction, it is—strictly speaking—an unusually verbose reactive agent. The mislabel might be harmless in productivity tools, but in regulated domains confusion propagates downstream: procurement writes the wrong compliance clauses; safety engineers design tests that measure the wrong invariants. The result is delayed certification or, worse, approval for the wrong class of system.

Conversely, teams that wield the typology with precision create leverage. At a European freight-rail operator, a hybrid deliberative-learning dispatcher cut locomotive idle time by 19 % precisely because the designers isolated a symbolic planner from a policy-gradient optimiser. The planner guaranteed timetable feasibility; the optimiser harvested incremental efficiency inside that safe corridor. Without the clarity of “Which agent is which?” the project would have drowned in a monolithic neural net impossible to validate against railway regulations drafted in the 1970s.

The Horizon Is Hybrid

No production system of any scale deploys a single type of agent in isolation. An autonomous combine harvester layers reactive micro-behaviours for obstacle avoidance, deliberative path replanning when moisture sensors report unexpected soil compaction, and a learning layer that adjusts throttle to minimise diesel burn under shifting torque loads. The orchestration fabric gluing these layers together is itself a meta-agent, brokering state hand-offs and policing priority inversions.

Industry analysts may argue over whether the future belongs to end-to-end learning or symbolic reasoning 2.0; practitioners already know the answer is both, and often three or four types at once. What matters is the vocabulary to reason about trade-offs at design time, long before a Git commit turns an architecture diagram into technical debt.

A Lens for the Next Decade

The hardware roadmap—RISC-V edge accelerators, neuromorphic co-processors, non-volatile RAM—is making compute cheap in places that were once computational deserts: farm machinery, surgical implants, subsea drones. Each environment imposes unique latency ceilings, thermal budgets, and failure modes. Choosing the wrong agent class is not a rounding error but an existential threat to the project.

For AI engineers in 2025 the typology is thus neither historical footnote nor academic trivia. It is the map legend without which our sprawling, hybrid, mission-critical AI landscapes become unreadable. Throughout the following sections we will revisit the five fundamental types—reactive, deliberative, goal-/utility-based, learning, and multi-agent—test their boundaries, and trace real code paths where one hands off to another.

By the end, the lines between categories will blur, as they should. But the discipline of naming each agent’s core commitment will remain. It is the compass that keeps teams oriented while architectures grow, deadlines slip, and stakeholders multiply. And when you reach the concluding blueprint, you will see how A-Bots.com leverages that compass to build end-to-end AI agent applications that are not merely impressive in demos but sustainable, auditable, and shipped.

Reactive Agents — Fast Reflexes, Zero Memory

Origins and Core Design Principles

The archetype for a reactive agent was born in the late-1980s laboratory of Rodney Brooks, where thin layers of “sense-act” behaviors controlled insect-like robots with only an 8-bit microcontroller between sensor and servo. The guiding claim was audacious for its time: intelligence can emerge without any internal model of the world, provided the control loop is fast enough and the mapping from stimulus to action is well chosen. In practice, that mapping is encoded as a network of finite-state machines, lookup tables, or lightweight neural controllers that transform raw sensor voltages directly into actuation commands within microseconds. There is no memory of yesterday’s state, no anticipation of tomorrow’s goal—only the relentless present tense of real-time physics. The promise is extreme responsiveness and analyzable timing, a combination that still drives certification for aerospace, surgical, and industrial safety systems in 2025.

Modern Engineering Patterns

Although the philosophical debate over “representation” is largely settled (modern systems blend multiple agent types), the engineering grammar of reactive control has matured rather than vanished. A contemporary reactive stack typically begins with hard-real-time I/O on dedicated silicon: Hall-effect sensors feeding position counters, analog-to-digital converters sampling torque curves, or millimetre-wave radars delivering range profiles at 100 Hz. A microkernel or bare-metal scheduler reserves deterministic CPU slots so the controller can run its update law within a worst-case execution budget measured in tens of microseconds. Control logic is written in C99, Rust #![no_std], or Verilog, then checked with model checkers that verify the absence of deadline violations.

The control law itself often resembles an augmented finite-state automaton. Each arc is annotated with guard conditions such as “IR sensor < 5 cm” and actions like “reverse motor polarity for 20 ms.” To soften the brittleness inherent in pure deterministic rules, engineers inject low-pass filters, PID gains, or, in cutting-edge swarm robotics, tiny feed-forward convolutional networks pruned to run in under three kilobytes. But the governing principle remains: the world is its own best model, so anything slower than the plant dynamics is pushed up to a higher-level agent or discarded entirely.
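A minimal sketch of such a guard-and-action table follows, written in Python for readability rather than in the C, Rust, or Verilog a real reflex layer would use. Only the 5 cm guard and the 20 ms reversal come from the text; the bumper sensor, the `stop` and `cruise` actions, and all names are illustrative assumptions.

```python
from typing import NamedTuple

class Reading(NamedTuple):
    ir_distance_cm: float   # hypothetical infrared range sensor
    bumper_pressed: bool    # hypothetical contact switch

class Action(NamedTuple):
    command: str
    duration_ms: int

# Ordered (guard, action) pairs. The first matching guard wins, and
# nothing is remembered between calls: output depends only on the reading.
RULES = [
    (lambda r: r.bumper_pressed, Action("stop", 0)),
    (lambda r: r.ir_distance_cm < 5.0, Action("reverse_motor_polarity", 20)),
]
DEFAULT = Action("cruise", 0)

def react(reading: Reading) -> Action:
    """Map the current sensor reading directly to an action."""
    for guard, action in RULES:
        if guard(reading):
            return action
    return DEFAULT
```

Because the table is finite and stateless, every guard combination can be enumerated in a test, which is the property the verification argument later in this section relies on.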

Latency budgets illustrate the discipline. A pick-and-place robot tasked with lifting 4-kg battery cells allows no more than 250 µs between force-sensor interrupt and torque-vector update; a surgical stapler must adjust firing pressure within 80 µs to avoid tearing tissue; an autonomous racing drone demands 200 Hz attitude correction to survive a 9-g slalom. These numbers translate directly into SRAM footprints, stack depths, and even PCB trace lengths, binding the software architecture to the electromagnetics of copper and silicon.

Strengths, Limitations, and Role in Hybrid Stacks

The strength of a reactive agent is provability. Because behavior depends only on the current observation, formal verification can exhaustively explore all sensor-action pairs and certify bounded outcomes. That is why designers certifying against avionics standards such as DO-178C (airborne software) and DO-254 (airborne electronic hardware) tend to allocate the stall-recovery reflex of an eVTOL aircraft to a memoryless controller hard-wired in FPGA fabric; the deliberative flight planner may choose a scenic route, but the reactive layer prevents aerodynamic disaster if a sudden updraft slams the wing. The same logic governs insulin micro-pumps, industrial press brakes, and the yaw dampers of container-ship stabilizers.

Yet the very property that makes reactive agents safe also renders them myopic. With no temporal persistence they cannot reason over hidden state, predict delayed rewards, or weigh conflicting objectives. A warehouse AGV that relies solely on ultrasonic bumpers will spend the night nudging a fallen pallet instead of re-planning a detour; a high-frequency trading bot that treats every market tick independently collapses when liquidity evaporates for a few hundred milliseconds. Consequently, modern architectures embed the reactive agent as the basal reflex layer of a hierarchy. Above it sits a deliberative world-model that handles large-scale navigation or resource scheduling, and a learning agent that fine-tunes parameters over time.

The hand-off between layers is not trivial. Reactive loops demand cycle-level guarantees, while deliberative layers operate on millisecond to second horizons. Engineers therefore erect ring buffers, shared-memory mailboxes, or DDS topics with explicit age-of-information contracts. If the deliberative layer stalls, watchdog timers freeze its outputs and let the reactive controller drive the system to a safe halt. Conversely, when the environment transitions from “nominal” to “hazard,” interrupt lines pre-empt the planner and cede authority to the reflex layer within microseconds. Designing these arbitration schemes is as crucial to safety as writing the control code itself.
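The arbitration rule described above can be sketched as a single pure function. This is an illustration under stated assumptions, not a real-time implementation: the 0.2 s freshness limit, the `Setpoint` type, and the zero-velocity safe halt are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Setpoint:
    velocity: float   # velocity requested by the deliberative planner
    stamp_s: float    # time the planner produced it, in seconds

SAFE_HALT = 0.0       # assumed reflex fallback command
MAX_AGE_S = 0.2       # assumed age-of-information contract

def arbitrate(latest: Optional[Setpoint], now_s: float, hazard: bool) -> float:
    """Return the velocity the reflex layer should actually apply."""
    if hazard:
        return SAFE_HALT              # hazard pre-empts the planner
    if latest is None or now_s - latest.stamp_s > MAX_AGE_S:
        return SAFE_HALT              # planner stalled: watchdog behaviour
    return latest.velocity            # fresh setpoint: pass it through
```

Keeping the rule free of side effects means the stale-data and hazard branches can be checked exhaustively, independently of whichever transport (ring buffer, mailbox, DDS topic) delivers the setpoint.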

Case Snapshot: Sub-Sea Pipeline Inspection

Consider an autonomous ROV inspecting gas pipelines at 3 000 m depth. Visibility is near zero, and acoustic comms introduce seconds of latency. The vehicle therefore carries a three-tier stack: a reactive controller stabilizing yaw, pitch, and depth; a deliberative mapper building a SLAM representation from sonar scans; and a learning agent that predicts bio-fouling patterns to optimize inspection paths. During a sudden pressure drop, cavitation bubbles confuse the sonar and stall the mapper. Within 5 ms the reactive agent overrides thrust with a pre-programmed retreat maneuver, burning twelve percent extra power but preventing implosion. Post-mission logs show the learning layer later retrained on that anomaly, yet the vehicle’s survival hinged on the stateless reflex code written in fewer than 600 lines of Rust.

Looking Ahead

As neuromorphic accelerators miniaturize, some developers dream of replacing deterministic lookup tables with low-power spiking nets that learn on-device. Whether such nets remain “reactive” once they encode micro-memories is an open question, but the engineering mandate survives: first guarantee fast, bounded, observable behavior, then layer higher cognition atop it. In the next section we turn to Deliberative Agents, where memory, planning, and symbolic abstraction take center stage—and where the constraints of real-time reflexes become the scaffolding on which slower, smarter decisions are safely built.

Deliberative Agents — Model-Based Reasoning & Planning

From Maps to Models

Where a reactive agent lives entirely in the present tense, a deliberative agent insists on remembering the past and imagining the future. It maintains an explicit, often symbolic, representation of the environment—call it a map, a knowledge graph, or a hybrid voxel-and-vector world-model—and treats that internal structure as ground truth for logical inference. The result is behavior that appears purposeful: a Mars rover chooses a detour around a dune rather than plowing ahead; a warehouse AMR reschedules its route to avoid a freshly blocked aisle; a smart-grid dispatcher delays a turbine ramp-up because it forecasts a cloud bank sweeping across a solar farm thirty minutes from now.

This commitment to an internal model emerged around 1970 with A* search and STRIPS-style theorem-proving planners, and matured through the 1990s and early 2000s in the form of partial-order planners, D*, and D* Lite. In 2025 those algorithms remain core scaffolding (often re-implemented in CUDA or Rust for parallel execution), yet they are now wrapped in layers of learned heuristics that prune search space on the fly. The essential loop, however, has not changed: sense → update model → plan → act → loop. Every controller tick is divided between “thinking about the world” and “changing the world,” and the architecture rises or falls on how well it balances the two.

The Planning Pipeline

Deliberative reasoning begins with state estimation. Sensor fusion pipelines—EKF for continuous dynamics, factor-graph optimizers for SLAM, transformers for language observations—ingest raw data and output a coherent, timestamped belief about where things are and what they are doing. That belief feeds a goal recognizer that translates human intent (“reach sampling site B14 by 14:00 LST”) into a bounded set of terminal states. Finally, a search engine expands successor states, guided by cost functions that mingle physics (battery drain, wheel slip), economics (opportunity cost of idling), and governance (no-go zones, safety envelopes).
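The search stage can be illustrated with a compact A* planner on a grid. This is a sketch under simplifying assumptions: the world is a 4-connected grid, each cell's step cost stands in for the blended physics and economics terms, and governance constraints are modelled as `None` cells that can never be entered.

```python
import heapq

def a_star(grid, start, goal):
    """grid[r][c] is a step cost (>= 1) or None for a no-go cell.

    Returns (path, cost), or (None, inf) when the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible because every step costs >= 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]
    best_cost = {start: 0}
    parent = {start: None}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1], cost
        if cost > best_cost[cell]:
            continue  # stale queue entry, a cheaper route was found later
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] is not None:
                new_cost = cost + grid[nr][nc]
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    parent[nxt] = cell
                    heapq.heappush(frontier, (new_cost + h(nxt), new_cost, nxt))
    return None, float("inf")
```

For example, on `[[1, 1, 1], [1, None, 5], [1, 1, 1]]` the planner routes from the top-left to the bottom-right corner down the left column and along the bottom row, avoiding both the no-go cell and the expensive one.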

Modern planners rarely run as monoliths. Instead, they split across time scales: a strategic planner reasons in minutes or hours, a tactical planner refines the next few seconds of motion, and a micro-planner produces spline coefficients or torque commands that align with the reflex layer below. Between each tier lies a contract: latency budgets, fidelity of dynamics, and a risk profile. Break the contract and the hierarchy collapses, as a California delivery-bot startup learned when its high-level planner issued paths that violated curb-cut tolerances the micro-planner could not satisfy, resulting in dozens of stalled robots and an eight-figure recall.

Engineering Trade-Offs

The power of deliberation is foresight, but foresight is brittle under uncertainty. Every element in the model (terrain friction, market volatility, human intent) can drift. Engineers therefore embed model-update triggers tied to surprise thresholds. If the rover’s wheels slip 8 % more than predicted, the terrain mesh is rebuilt and the planner restarts; if a drug-discovery pipeline observes ligand affinities outside the prior, it reweights its QSAR graph before exploring new synthesis routes. Such updates are expensive, so designers ration them via anytime planning: an algorithm returns a feasible (though not optimal) plan under a real-time deadline, then refines it as slack permits, a technique popularized by Likhachev’s Anytime Dynamic A* (AD*) and now accelerated on edge GPUs.
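Both ideas, the surprise trigger and the deadline-bounded refinement, fit in a few lines. The sketch below is a generic anytime loop, not an implementation of Anytime Dynamic A*; the `improve` and `cost` callbacks and the injectable `clock` are hypothetical hooks introduced for illustration, and the 8 % default mirrors the wheel-slip example in the text.

```python
import time

def surprise_exceeded(predicted, observed, threshold=0.08):
    """True when `observed` exceeds `predicted` by more than `threshold` (relative)."""
    if predicted <= 0:
        return observed > 0
    return (observed - predicted) / predicted > threshold

def anytime_refine(plan, cost, improve, deadline_s, clock=time.monotonic):
    """Keep the best plan found so far until the deadline passes.

    `improve(plan)` returns a candidate plan or None when it has nothing
    left to try; `cost(plan)` scores a plan (lower is better).
    """
    best, best_cost = plan, cost(plan)
    while clock() < deadline_s:
        candidate = improve(best)
        if candidate is None:
            break
        candidate_cost = cost(candidate)
        if candidate_cost < best_cost:
            best, best_cost = candidate, candidate_cost
    return best
```

The caller always gets a feasible plan back, because the initial plan is returned unchanged if the deadline has already passed.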

Memory footprint is another constraint. A city-scale HD map for automated trucks consumes terabytes, impossible to house on a vehicle without aggressive tiling and compression. Companies solve this with semantic abstraction: the truck carries a coarse lane graph locally and streams micro-maps over 5G only when approaching complex junctions. The deliberative agent thus plays archivist, curator, and cartographer all at once, deciding which slice of reality deserves the scarce bytes of on-board memory.

Case Snapshot: Lunar Rover Route Generation

NASA’s upcoming Artemis robotic scouts illustrate deliberative control under punishing constraints. Because cycles of sunlight, and hence power, are deterministic, every meter driven into a shadowed crater must be plotted such that the rover returns to a sunlit safe-haven before battery voltage drops below survivable thresholds. The planner runs on an ARM-64 flight computer, leveraging a hybrid algorithm that blends A* (for global optimality) with Rapidly-Exploring Random Trees (for local non-holonomic feasibility). Dust deposition slowly alters traction coefficients, so the rover monitors wheel-motor current; a 12 % deviation from the predictive model triggers partial re-planning. Mission projections put the cost of each additional re-plan at two minutes of CPU and fifteen watt-hours: small on Earth, mission-critical on the Moon. Without such deliberation the rover would either crawl at glacial speeds or strand itself in darkness; with it, average traverse speed is projected to double while staying inside a provably safe energy margin.

The Open Horizon

Deliberative control is sometimes caricatured as old-school AI—anachronistic in the age of end-to-end neural policies—but that caricature misreads where the real risks lie. A self-driving tractor executing deep-RL policies can learn optimal throttle settings for varying soil moisture, yet when the field borders an unfenced irrigation canal the farmer demands a guarantee: The tractor must never enter that polygon, no matter what the reward gradient says. Deliberative maps and search yield such guarantees. They erect “virtual fences,” encode exhaustively audited constraints, and allow formal reachability analysis—none of which can be expressed through reward shaping alone with any legal certainty.
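A virtual fence of this kind can be sketched as a geometric veto placed between a learned policy and the actuators. The example assumes a flat 2-D field, a no-go polygon given as a vertex list, and a hypothetical `shield` wrapper; it checks waypoints only, so a production system would also have to test the path segment between waypoints.

```python
def inside(point, polygon):
    """Ray-casting point-in-polygon test; polygon is a list of (x, y) vertices."""
    x, y = point
    hit = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                hit = not hit
    return hit

def shield(proposed, current, no_go):
    """Veto a proposed waypoint that lies inside the no-go polygon.

    Returns `proposed` when it is allowed, otherwise holds `current`.
    """
    return current if inside(proposed, no_go) else proposed
```

The veto is independent of the reward function: whatever the policy proposes, a waypoint inside the polygon is never forwarded.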

What will change in the next decade is how deliberative agents acquire and refine their models. Neural radiance fields promise sub-centimeter reconstructions updated in near-real-time; differentiable physics engines let gradient-based planners reason directly about slosh dynamics in fuel tanks; language-conditioned world-models enable spacecraft to parse human-written procedures mid-flight. Each advance deepens the dialogue between perception, memory, and action, but it does not erase the categorical need for an agent whose first reflex is think before you move.

As the article unfolds we will see how these deliberative kernels interlock with learning layers and reflex loops, forming hybrid stacks that keep autonomous combines out of irrigation ditches, surgical robots inside sub-millimeter error envelopes, and orbital tugs on propellant-positive trajectories. The taxonomy of types of AI agent is not a museum catalogue—it is the blueprint by which engineers decide where to spend milliseconds, watts, and human trust. In the end-game synthesis you will find that the teams at A-Bots.com treat deliberative planning not as an optional add-on but as a first-class contract, ensuring the AI agents they ship can navigate not only terrain and traffic but regulation, liability, and the unforgiving edge cases of the real world.

Goal- & Utility-Based Agents — Rational Decision Makers

From Goals to Utilities: Why Rationality Still Matters

If a reactive agent survives by reflex and a deliberative agent survives by foresight, a goal- or utility-based agent survives by choosing. It is the branch of the types of AI agent taxonomy that formalises preference: each potential world state earns a numeric score, and the agent selects the action sequence whose expected score is highest. In practice that score can be a Boolean goal—mission accomplished or not—or a real-valued utility that mixes economy, safety, comfort, carbon, and dozens of domain-specific signals. The abstraction is durable because it collapses thousands of sensor readings into a single scalar that software can optimise, yet it stays interpretable enough for regulators and product owners to debate whether the scalar truly reflects human intent.
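The selection rule itself is short enough to write out. In this sketch each action is described by a hypothetical list of `(probability, utility)` outcomes; a Boolean goal is simply the special case where utilities are 0 or 1.

```python
def expected_utility(outcomes):
    """Sum of probability-weighted utilities for one action."""
    return sum(p * u for p, u in outcomes)

def best_action(model):
    """model maps each action to a list of (probability, utility) outcomes."""
    return max(model, key=lambda action: expected_utility(model[action]))
```

With `{"detour": [(1.0, 5.0)], "shortcut": [(0.9, 10.0), (0.1, -100.0)]}` the agent prefers the detour: the shortcut usually pays more, but its rare failure drags the expected score below the safe option.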

Engineering the Objective Lattice

Designing a rational agent begins with objective elicitation: stakeholders articulate what “good” means in concrete, measurable terms. An energy-grid operator may rank blackout avoidance over cost, while a ride-hail platform might trade passenger wait time against driver earnings and fleet electrification targets. Engineers then translate that ordinal ranking into a utility function that maps state variables to the real line. When objectives conflict, the mapping becomes a lattice: piece-wise weights change under operating modes, soft constraints add hinge-loss penalties, and risk terms bend the curve to penalise low-probability catastrophes harder than the mean-variance calculus of classical finance would.
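One way to picture such a lattice is a utility function whose weights depend on the operating mode and whose soft constraints enter as hinge penalties. Everything concrete below (the mode names, weights, state fields, and the five-minute wait limit) is an illustrative assumption, loosely modelled on the ride-hail example.

```python
def hinge(value, limit):
    """Zero while value <= limit, then grows linearly with the excess."""
    return max(0.0, value - limit)

# Hypothetical mode-dependent weights.
WEIGHTS = {
    "normal": {"cost": 1.0, "co2": 0.5, "wait_penalty": 2.0},
    "peak":   {"cost": 0.5, "co2": 0.5, "wait_penalty": 5.0},
}

def utility(state, mode="normal", wait_limit_min=5.0):
    """Higher is better: negated weighted cost plus a soft wait-time penalty."""
    w = WEIGHTS[mode]
    return -(w["cost"] * state["cost"]
             + w["co2"] * state["co2_kg"]
             + w["wait_penalty"] * hinge(state["wait_min"], wait_limit_min))
```

Keeping the weights in an explicit table is a deliberate choice: it is the part stakeholders can read, argue about, and sign off on.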

To keep that lattice tractable the agent needs an internal world model just rich enough to compute expected utilities. In low-noise environments a Markov Decision Process suffices; in partially observable domains the model upgrades to a POMDP, and in adversarial settings it embeds a game-theoretic opponent model. The model’s fidelity dictates the agent’s rationality ceiling: an autonomous excavator that ignores bucket-soil interaction dynamics will optimise itself straight into premature hydraulic failure no matter how elegant its reward curve.

Algorithms in the Field: Search, Optimisation, Simulation

Once the utility landscape is defined, computation begins. On-board planners for eVTOL aircraft rely on anytime and incremental heuristic search (variants of ARA* and LPA* running on embedded GPUs) to find feasible trajectories that clear no-fly cylinders while maximising energy reserve at landing. Warehouse fleet dispatchers solve a mixed-integer programme every fifteen seconds to assign pick tasks, re-weighting the objective coefficients live as traffic density or human worker proximity changes. Commodity-trading bots skip symbolic search altogether and instead treat the environment as a black-box simulator; they run thousands of parallel rollouts under stochastic price paths and pick the action whose Monte Carlo utility sits above the 95th percentile.
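The rollout approach can be sketched with a toy simulator. The text does not spell out how the percentile criterion is applied, so this example makes an assumption: each action is scored by a chosen percentile of its simulated return distribution, and the highest score wins. The random-walk price model, position sizes, and parameters are all hypothetical.

```python
import random

def rollout(action, rng, steps=20):
    """Toy simulator (assumption): hold `action` units through a random-walk price path."""
    pnl = 0.0
    for _ in range(steps):
        move = rng.gauss(0.05, 1.0)   # small upward drift, unit volatility
        pnl += action * move
    return pnl

def percentile(samples, q):
    """Nearest-rank style percentile, q in [0, 1]."""
    ordered = sorted(samples)
    idx = min(len(ordered) - 1, max(0, int(q * len(ordered))))
    return ordered[idx]

def choose(actions, n_rollouts=2000, q=0.05, seed=0):
    """Score each action by the q-th percentile of its simulated returns."""
    rng = random.Random(seed)
    scores = {
        a: percentile([rollout(a, rng) for _ in range(n_rollouts)], q)
        for a in actions
    }
    return max(scores, key=scores.get), scores
```

With a low percentile such as `q=0.05` the rule is risk-averse and prefers staying flat in this toy market; raising `q` rewards upside instead, which shows how strongly the chosen percentile shapes behaviour.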

Across all these implementations three engineering patterns recur. First, horizon slicing: long-range planners use coarse dynamics and broad utility terms, short-range planners refine detail over a shrinking horizon. Second, warm-starting with learned priors so the planner rarely begins from scratch; a neural network predicts near-optimal actions that seed the optimiser. Third, policy distillation for run-time speed: once an optimiser converges in the cloud, its result is compressed into a lightweight neural policy that runs at the edge in microseconds, handing authority back to the optimiser only when confidence drops below a calibrated threshold.
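The third pattern, a distilled policy that hands control back when unsure, reduces to a small dispatch rule. In this sketch the distilled network is replaced by a hypothetical lookup table that reports confidence 1.0 for states it has seen and 0.0 otherwise; the 0.9 threshold is likewise an assumption.

```python
def make_distilled_policy(table):
    """Toy stand-in for a distilled network: exact lookup, else zero confidence."""
    def policy(state):
        if state in table:
            return table[state], 1.0
        return None, 0.0
    return policy

def act(state, fast_policy, optimiser, min_confidence=0.9):
    """Use the fast policy unless its confidence falls below the threshold."""
    action, confidence = fast_policy(state)
    if confidence >= min_confidence:
        return action, "distilled"
    return optimiser(state), "optimiser"
```

Returning the source alongside the action makes it easy to log how often the slow path is taken, a useful signal for when the distilled policy needs refreshing.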

Case Snapshot: City-Scale Smart-Grid Dispatch

Consider a 2025 metropolitan micro-grid with rooftop solar, bidirectional EV chargers, and district-heating cogeneration. Operators specify a trio of goals: keep voltage within ±3 %, minimise marginal CO₂ per kWh, and shave peak tariffs. The utility-based agent converts that trio into a convex objective with soft penalties for voltage excursions and a time-varying carbon intensity coefficient tied to the upstream generation mix. Every five minutes it ingests weather radar, household demand forecasts, and energy-price futures, then solves a model-predictive control problem over a four-hour horizon. A second-tier optimiser embeds battery-health degradation curves so the cheap solution of draining every EV at 6 p.m. is ruled out as irrational once lifecycle costs are considered. Field data after twelve months shows 11 % lower CO₂, a 7 % reduction in peak tariffs, and—critically—a mean Time Above Voltage Limit of 0.04 %. Those numbers survive regulatory audit because the utility terms are explicit; inspectors can replay scenarios and verify that every control action maximised the stated function under the contemporaneous belief state.

Bridging Ambition and Accountability

The promise of goal- and utility-based control is alignment: the agent’s numeric objective crystallises human intent into code. The peril is that mis-specified utilities become paper-clip goals at industrial scale. Post-mortems of 2024 robo-taxi pile-ups revealed reward curves that paid too little for lateral comfort, encouraging lane-merge aggressiveness that annoyed drivers and, in one case, triggered an emergency-brake cascade. Modern design workflows therefore embed counter-factual audits: red teams craft adversarial scenarios, governance layers simulate their impact, and a human committee signs off on the updated coefficients.

Looking ahead, research on iterated utility functions—where the agent can negotiate or even rewrite its own goals under supervision—aims to reduce hand-tuning overhead. Meanwhile differential privacy and ESG reporting rules push utilities to log the marginal impact of each action on social externalities, demanding cryptographically verifiable diaries of every numeric trade-off the agent makes.

Within the hybrid stacks discussed across this article, rational agents often sit at the very top, emitting set-points to deliberative planners and safety envelopes to reflex loops. That stratification lets engineers prove properties at each layer: the reactive core keeps the robot upright, the deliberative core keeps it on the map, and the utility core keeps it useful. When A-Bots.com architects end-to-end AI agents for clients, the contract starts with utility elicitation workshops and ends with run-time dashboards where executives watch their objectives accrue in real time—an operational embodiment of rationality that turns boardroom strategy into lines of code and back again.

Learning Agents — Policy, Value & World-Model Adaptation

Plastic Control in a Moving World

Reactive, deliberative and utility-driven layers behave as if the environment were either static or at least slowly drifting; they rely on designers to recalibrate when reality outruns their assumptions. A learning agent reverses that workflow. It treats every timestep as an opportunity to rewrite itself, folding fresh observations into weights, tables or latent vectors so tomorrow’s policy is literally different code from today’s. In the contemporary types of AI agent taxonomy this is the category where adaptation is not an after-thought but the organising principle, and where the border between software and data all but disappears.

The rise of transformer self-supervision in 2022-2024 proved that gigantic offline corpora can endow models with a proto-world-model; yet when those models steer physical assets—forklifts, cardiac pumps, stratospheric balloons—they must continue learning on their own trajectories. Otherwise the gap between training distribution and operational edge-cases widens until the agent’s latent beliefs grow dangerously fictional. The ambition, then, is a controller that perceives, plans and simultaneously refits its internal machinery fast enough to remain calibrated yet slow enough to avoid thrashing into instability.

The Trinity: Policy, Value, Model

Modern learning agents are rarely end-to-end black boxes. Instead they separate three coupled estimators:

- Policy π(a | s) maps a state observation to an action proposal.

- Value V(s) predicts long-term return if that policy is obeyed.

- World-Model M(sₜ₊₁ | sₜ, aₜ) captures transition dynamics, optionally emitting latent rollouts to imagine futures that the robot has never physically visited.

In flight these elements update at radically different cadences. The policy might fine-tune every few hundred milliseconds from on-device gradients harvested in a replay buffer; the value head syncs less often, smoothing estimation noise; the world-model refits overnight in a cloud enclave where terabytes of trace logs and high-fidelity simulators allow back-propagation across seconds-long trajectories. The orchestration code that brokers these clocks is itself a micro-agent tasked with safeguarding temporal credit assignment: if a newly learnt policy spikes battery draw, the value learner must notice before the planner’s risk budget is violated.

Data Loops, Simulators & Safety Governors

No learning architecture can outrun the quality of its data loop. In aerial-delivery drones the loop begins with a photogrammetry-derived map, continues through IMU, GNSS and millimetre-wave radar streams, and ends in a ring-buffer with per-timestep labels: wind shear vectors, servo currents, customer satisfaction scores. A cross-device federation protocol merges hundreds of such buffers, differentially private by design, then spins up domain-randomised simulators that perturb aerodynamics, payload mass and sensor latency. Thousands of virtual sorties finish before dawn, generating synthetic future states that the real drone may never live to see—but the world-model learns them anyway.

Crucially, the updated policy cannot simply overwrite the live controller. A safety governor—often a shielded reactive layer or a decision-tree certified against ISO 26262—monitors the KL-divergence between old and new policies. If the delta exceeds a calibrated threshold the switch aborts, and the rover, robot or pump continues running the previous, provably safe reflex. In essence the learning agent is permitted to mutate only inside a verified sandbox shaped by the non-learning layers described earlier in this article.
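A KL-divergence gate of this kind can be sketched for tabular policies. The example assumes discrete action distributions stored per state, measures KL(old || new), and uses a hypothetical threshold of 0.05 nats; the direction of the divergence and the worst-case-over-states rule are design choices made for illustration, not details given in the text.

```python
import math

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions over the same actions."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)

def gate_update(old_policy, new_policy, max_kl=0.05):
    """Accept `new_policy` only if no state's action distribution moved too far.

    Policies map state -> list of action probabilities.
    Returns (policy_to_run, worst_kl).
    """
    worst = max(kl_divergence(old_policy[s], new_policy[s]) for s in old_policy)
    if worst <= max_kl:
        return new_policy, worst
    return old_policy, worst
```

Gating on the worst state rather than the average is the conservative choice: one sharply changed reflex is enough to block the switch.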

Case Snapshot: Adaptive Harvest Combines in Kazakhstan

A-Bots.com recently collaborated with an ag-machinery OEM operating in North Kazakhstan’s sharply variable black-soil steppes. The target platform was a 460-horsepower combine harvester whose header load, traction and grain-loss tolerances swing wildly with soil moisture and crop density. A purely deliberative route planner kept the machine on coverage paths, but fuel burn and kernel loss exceeded warranty budgets whenever rain followed midday heat spikes.

Engineers embedded a learning agent atop that planner. Its policy net adjusted reel speed and threshing-drum RPM; its value head forecast six-minute-ahead diesel cost plus an agronomically weighted loss penalty; its world-model fused lidar biomass depth with microwave ground-moisture estimates derived from Sentinel-1 passes and uplinked to the machine every five minutes. On foggy days the agent trusted the satellite prior more than its own laser altimetry; on clear, dusty evenings the weighting flipped.
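The text does not say how the weighting between sources was computed. One common way to obtain that kind of behaviour, shown here purely as an assumption, is inverse-variance fusion: each source reports an estimate with a variance, and noisier sources automatically receive less weight.

```python
def fuse(estimates):
    """Inverse-variance weighted mean of (value, variance) pairs.

    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    mean = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return mean, 1.0 / total
```

If fog inflates the lidar variance from 1.0 to 4.0 while the satellite-derived estimate stays at 1.0, the fused value moves toward the satellite figure without any explicit mode switch.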

Field trials across a 4 300-hectare test plot yielded a mean 8.7% fuel reduction and a 12.4% grain-loss drop relative to the OEM baseline. More tellingly, the policy’s covariance matrix shrank over harvest season—evidence that the agent was converging toward stable control laws under the site’s particular micro-climate. The reactive safety kernel never tripped once, confirming that the modification-gating logic kept exploration inside agronomically safe bounds.

Guarding Against Catastrophic Learning

The same plasticity that cuts diesel costs can amplify rare sensor glitches into self-reinforcing bias. In 2024 a prominent autonomous-tugboat operator reported a near-collision when its RL controller over-weighted radar speckles caused by sleet; the learnt policy veered sharply, and although the deliberative collision-avoidance layer saved the vessel, the incident spurred new mitigations against both runaway updates and catastrophic forgetting. Techniques now deployed include stochastic weight averaging, elastic weight consolidation, which penalises drift in parameters crucial to hard-learned safety reflexes, and counterfactual replay, in which adversarial critics test whether a proposed gradient would have increased historical accident likelihood.
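Elastic weight consolidation is the easiest of these to show concretely. The sketch below implements the standard quadratic penalty, half the strength times the Fisher-weighted squared distance from the anchored parameters, on plain Python lists. The parameter values, Fisher estimates and the strength of 100 are made-up numbers chosen only to show the effect.

```python
def ewc_penalty(params, anchor_params, fisher, strength):
    """Quadratic EWC penalty: 0.5 * strength * sum(F_i * (theta_i - anchor_i)^2)."""
    return 0.5 * strength * sum(
        f * (p - a) ** 2 for p, a, f in zip(params, anchor_params, fisher)
    )

def ewc_gradient(params, anchor_params, fisher, strength):
    """Gradient of the penalty, to be added to the task-loss gradient."""
    return [strength * f * (p - a) for p, a, f in zip(params, anchor_params, fisher)]

# Illustrative values only: the first parameter is assumed to matter most
# to a safety reflex (high Fisher information), the last not at all.
anchor = [1.0, -2.0, 0.5]
fisher = [10.0, 0.01, 0.0]
proposed = [1.2, -1.0, 3.0]

penalty = ewc_penalty(proposed, anchor, fisher, strength=100.0)
pull_back = ewc_gradient(proposed, anchor, fisher, strength=100.0)
print(penalty, pull_back)
```

The small move on the first parameter dominates the penalty, while the large move on the third costs nothing. That asymmetry is the point: parameters that matter to the reflex resist change, and the rest stay free to adapt.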

Where Learning Sits in the Stack

Within the hybrid architectures threading through this deep dive, learning agents operate as adaptive envelopes: they nudge parameters of deliberative planners, tune utility weights under shifting economics, or generate latent environment rollouts that accelerate search. They rarely control raw actuators for more than a handful of milliseconds without the reflex layer’s veto, yet their influence compounds over hours, days and seasons—precisely the scale where static controllers degrade.

The practical moral is clear. A project that declares “Let’s bolt on RL later” is planning a retrofit nightmare; conversely, a team that hands unconstrained exploration keys to a brand-new robot is courting a recall. Rational adaptation must be architected from day one, with telemetry hooks, simulator parity and regulatory audit trails baked into the DevOps pipeline.

In the next section, on Multi-Agent & Hybrid Ecosystems, we will see how many such learning entities coordinate (sometimes cooperating, sometimes competing) inside warehouses, road networks and orbital planes. But the lesson of this chapter endures: if an AI system must prosper in a world that changes faster than any requirements document, it needs a living core that can measure, value and rewrite itself while holding the rest of the stack, and human safety, absolutely sacred.

Multi-Agent & Hybrid Ecosystems — From Swarms to Hierarchies

The moment a single intelligent controller shares environment variables with another equally autonomous peer, the design space expands from the behaviour of one agent to the ecology of many. In 2025 that ecology ranges from centimetre-scale drone swarms painting night skies with RGB LEDs to continent-spanning energy markets where each megawatt-hour bidder is an algorithmic trader. The terminology—multi-agent system (MAS)—suggests homogeneity, yet the production reality is a hybrid quilt of reactive reflexes, deliberative planners, utility maximisers and on-line learners, each instantiated dozens, hundreds or thousands of times, then stitched together by communication fabric that can fail in unpredictable ways. Understanding how these disparate agent types coexist, compete and cooperate is the final—and often the hardest—chapter in the “types of AI agent” taxonomy.

Coordination Without a Central Brain

Early MAS research treated cooperation either as an elegant game-theoretic equilibrium or a loosely coupled black art. Commercial deployments forced a harder stance: coordination must be robust under lossy radios, variable latency and partial observability, without conceding real-time guarantees. The canonical technique is message-passing with explicit staleness budgets. Warehouse robots, for instance, broadcast position-velocity tuples at 60 Hz on Ultra-Wideband; if a packet ages past 120 ms, neighbours treat it as absent and fall back to purely reactive collision-avoidance. Similar age-of-information cut-offs govern smart-intersection traffic lights, where a stale packet that is still trusted could lock an arterial avenue into perpetual red.
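A staleness budget of this kind reduces to a timestamp comparison. The sketch below assumes synchronised clocks and a hypothetical `PeerState` record. The rule that one missing neighbour forces the fallback is also an assumption; a real fleet might degrade more gradually.

```python
from dataclasses import dataclass

STALENESS_BUDGET_S = 0.120  # the 120 ms cut-off mentioned in the text

@dataclass(frozen=True)
class PeerState:
    robot_id: str
    position: tuple   # (x, y) in metres
    velocity: tuple   # (vx, vy) in metres per second
    sent_at: float    # sender timestamp in seconds (assumes synchronised clocks)

def fresh_peers(latest_by_id, now, budget=STALENESS_BUDGET_S):
    """Keep only peer states whose age is within the staleness budget."""
    return {rid: s for rid, s in latest_by_id.items() if now - s.sent_at <= budget}

def choose_mode(expected_ids, latest_by_id, now):
    """Fall back to reactive avoidance if any expected neighbour is stale or missing."""
    fresh = fresh_peers(latest_by_id, now)
    missing = set(expected_ids) - set(fresh)
    return ("reactive_only" if missing else "cooperative"), fresh

now = 10.0
latest = {
    "A": PeerState("A", (1.0, 2.0), (0.5, 0.0), sent_at=9.95),  # 50 ms old
    "B": PeerState("B", (4.0, 2.0), (0.0, 0.3), sent_at=9.80),  # 200 ms old
}
mode, usable = choose_mode(["A", "B"], latest, now)
print(mode, sorted(usable))
```

Packet B is treated exactly as if it had never arrived, which is what keeps a delayed message from being mistaken for current truth.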

To scale beyond a few dozen peers, designers promote the swarm into layers. A local flock of aerial imagers runs consensus over magnetometer headings so formations stay tight in gusty wind; above that, a regional manager assigns coverage zones using an auction algorithm; at the top, a global planner reorders missions when cloud cover occludes a target. Each stratum speaks a different protocol—ROS 2 topics on the edge, gRPC between regional managers, and eventually persistent Kafka streams into the cloud. That stratification turns an otherwise intractable O(n²) message graph into near-linear traffic while preserving the illusion of fluid, global intent.
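The scaling claim is easy to check with a little arithmetic. The sketch below counts links under two simplifying assumptions that are not from the source: every peer in a flock talks to every other peer in that flock, and each flock keeps a single uplink to one regional manager. The flock size of ten is arbitrary.

```python
def all_to_all_links(n):
    """Undirected links when each of n peers talks to every other peer."""
    return n * (n - 1) // 2

def layered_links(n, flock_size):
    """Links when peers only mesh inside their flock, plus one uplink per flock."""
    full, rest = divmod(n, flock_size)
    sizes = [flock_size] * full + ([rest] if rest else [])
    local = sum(all_to_all_links(s) for s in sizes)
    uplinks = len(sizes)
    return local + uplinks

for n in (100, 1000, 2000):
    print(n, all_to_all_links(n), layered_links(n, flock_size=10))
```

Under these assumptions, 1,000 drones need 4,600 links instead of 499,500, and doubling the fleet to 2,000 doubles the layered count to 9,200 while the flat mesh roughly quadruples.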

Emergence, Incentives and Conflict Resolution

When agents optimise distinct utilities, cooperation cannot be taken for granted. Grid-tied home batteries chase the lowest tariff for the homeowner, yet the distribution operator values phase balance more than cents per kilowatt-hour. Left unchecked, thousands of “rational” batteries switch simultaneously at 19:00, destabilising voltage. Field engineers now interpose a virtual-market layer where each battery publishes bids that include a penalty for collective instability; a distributed solver clears the auction every four seconds, aligning micro-incentives with macro-stability.
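One way to picture the virtual-market layer is a clearing rule in which every accepted bid raises the price of instability for the next one. The sketch below is a centralised, greedy stand-in for the distributed solver the text mentions. The bid format, the linear penalty and all numbers are assumptions made for illustration.

```python
def clear_auction(bids, penalty_slope):
    """Greedy clearing with a congestion-style instability penalty.

    bids: list of (battery_id, power_kw, value_per_kw).
    penalty_slope: hypothetical cost per kW for each kW already committed,
    so the penalty grows as more batteries switch in the same interval.
    """
    accepted, committed_kw = [], 0.0
    for battery_id, power_kw, value_per_kw in sorted(
        bids, key=lambda b: b[2], reverse=True
    ):
        marginal_penalty = penalty_slope * (committed_kw + power_kw)
        if value_per_kw > marginal_penalty:
            accepted.append(battery_id)
            committed_kw += power_kw
    return accepted, committed_kw

bids = [("a", 5.0, 0.30), ("b", 5.0, 0.25), ("c", 5.0, 0.12), ("d", 5.0, 0.08)]
with_penalty = clear_auction(bids, penalty_slope=0.01)
without_penalty = clear_auction(bids, penalty_slope=0.0)
print(with_penalty, without_penalty)
```

With no penalty all four batteries switch at once; with it, only the two that value the switch most are cleared, and the others wait for a later round.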

The same principle governs fleets of last-mile delivery bots navigating pedestrian plazas. Each vehicle runs a deliberative route planner minimising travel time, but a curb-weight inequality emerges: heavier bots bully lighter ones to yield at intersections, degrading overall throughput. The fix is not better path-finding alone; it is a cooperative negotiation protocol that allocates right-of-way credits. Credits are minted by a central ledger but traded peer-to-peer over WebRTC; the emergent behaviour resembles fair traffic even under dropped packets, because the credit accounting persists offline and reconciles once connectivity returns.

Safety Cascades in Heterogeneous Stacks

Pure swarms (identical hardware, identical brains) remain rare outside research labs. Most industrial systems are heterogeneous hybrids: a combine harvester shares a field with moisture sensors, yield-mapping drones and an edge cluster predicting fuel-flow variance; a port automation suite orchestrates tugboats, quay cranes and autonomous trucks, each with radically different control horizons. Cross-platform safety is therefore orchestrated by policy waterfalls. At the leaf, every machine enforces its own reactive kill-switch. One level up, domain-specific safety policies (COLREGS for vessels, ISO 3691-4 for driverless industrial trucks) translate sensor data into no-go envelopes. At the canopy, a site-wide risk monitor ingests anonymised state streams and vetoes plans that push aggregate hazard above a threshold.

Such waterfalls demand formal responsibility segregation: the crane’s controller must guarantee that jerk never exceeds 3 m s⁻³, while the port-wide MAS must guarantee that no two paths cross with a predicted closest point of approach below 5 m. Proved together, the conjunction satisfies harbour-authority insurance auditors. Break either link and the proof collapses, as a 2024 European warehouse discovered when its upper-layer MAS allowed velocity profiles that technically honoured inter-robot distances yet violated individual wheel-slip constraints, leading to four derailments and a nine-day shutdown.
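The conjunction of a per-machine guarantee and a site-wide guarantee can be sketched as two independent checks that must both pass. This is a runtime check on sampled data, not a formal proof. It reads the jerk limit as metres per second cubed, and the function names and sample trajectories are invented for the example.

```python
import math

JERK_LIMIT = 3.0   # per-machine limit from the text, taken here as m/s^3
MIN_CPA_M = 5.0    # site-wide minimum closest point of approach, in metres

def max_abs_jerk(accels, dt):
    """Largest finite-difference jerk over a sampled acceleration profile."""
    return max(abs(b - a) / dt for a, b in zip(accels, accels[1:]))

def closest_approach(traj_a, traj_b):
    """Smallest distance between two trajectories sampled at the same instants."""
    return min(math.dist(p, q) for p, q in zip(traj_a, traj_b))

def plan_is_safe(accels, dt, traj_a, traj_b):
    """A plan passes only if the leaf check AND the site-wide check both hold."""
    leaf_ok = max_abs_jerk(accels, dt) <= JERK_LIMIT
    site_ok = closest_approach(traj_a, traj_b) >= MIN_CPA_M
    return leaf_ok and site_ok, {"jerk": leaf_ok, "separation": site_ok}

traj_a = [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0)]
traj_b = [(0.0, 20.0), (5.0, 12.0), (10.0, 7.0)]

smooth = plan_is_safe([0.0, 0.2, 0.4, 0.5], 0.1, traj_a, traj_b)
harsh = plan_is_safe([0.0, 0.5], 0.1, traj_a, traj_b)
print(smooth, harsh)
```

The second plan keeps its distance from the other machine yet still fails, which is the same shape of failure as the warehouse incident: the upper layer was satisfied while a per-machine constraint was not.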

Learning Together—Or Not

Multi-agent reinforcement learning (MARL) promises collective adaptation: warehouse fleets that discover emergent lane discipline, drone swarms that learn provably minimal communication graphs, synthetic-market traders that converge toward Nash prices. In practice MARL’s curse is combinatorial: joint action spaces explode as agents are added. Production systems mitigate this by factorising the policy, so that each agent learns only a local policy conditioned on a distilled global fingerprint (say, a two-dimensional congestion heat-map instead of precise peer coordinates). Gradient back-propagation flows through a value decomposition network hosted in the cloud; compressed policy deltas trickle back to the edge during charging cycles. Even then, rollout-generated variance can corrupt stability, so change-control is moderated by the same safety governors discussed in the previous section: divergent policies are throttled until exhaustive agent-in-the-loop simulation clears them for staged release.
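The appeal of value decomposition is that a joint value built as a sum of local values can be maximised by each agent on its own. The sketch below uses the additive form, with hand-written local Q-values standing in for whatever each agent's network would output given its observation and fingerprint. It then confirms that per-agent greedy choices match a brute-force search over the joint action space.

```python
from itertools import product

def q_total(local_qs, joint_action):
    """Additive decomposition: the joint value is the sum of each agent's local value."""
    return sum(q[a] for q, a in zip(local_qs, joint_action))

def decentralised_greedy(local_qs):
    """Each agent picks its own best action without seeing the others."""
    return tuple(max(range(len(q)), key=q.__getitem__) for q in local_qs)

def brute_force_greedy(local_qs):
    """Reference answer: search the full joint action space."""
    joint_space = product(*(range(len(q)) for q in local_qs))
    return max(joint_space, key=lambda ja: q_total(local_qs, ja))

# Illustrative local Q-values for three agents with three actions each.
local_qs = [
    [0.1, 0.9, 0.3],
    [0.5, 0.2, 0.4],
    [0.0, 0.1, 0.8],
]
local_choice = decentralised_greedy(local_qs)
joint_choice = brute_force_greedy(local_qs)
print(local_choice, joint_choice)
```

Three agents with three actions each need nine local evaluations instead of 27 joint ones, and the gap widens exponentially as the fleet grows.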

There remain domains where learning is deliberately compartmentalised. Air-traffic-control drones broadcast telemetry but do not update flight-control policies on the wing; certification bodies prohibit in-flight changes to model weights or to any code that affects envelope protection. Instead, learning occurs only in the simulator, and new policies are loaded during scheduled maintenance, preserving swarm coherence across thousands of aircraft.

Toward Planet-Scale Collectives

Edge-compute trends make it plausible that by 2030 tens of billions of devices will negotiate scarce bandwidth, energy and attention without central arbitration. Planet-scale MAS will therefore embed hierarchical abstractions borrowed from networking—cells, areas, autonomous systems—to localise optimisation while propagating only coarse-grained intents upward. Blockchain attestation may record irrevocable commitments (e.g., carbon ledger balances), while zero-knowledge proofs let agents validate peer compliance without leaking private state.

The conceptual breakthrough, visible already in pilot projects, is a recognition that no single agent type suffices. A drone’s reactive layer avoids a sudden gust, its deliberative planner replans around a new airspace NOTAM, its utility head weighs delivery ETA against battery depreciation, and a federated learner retrains the aerodynamics model overnight. The multi-agent whole is not a swarm of identical minds but a society of specialists, each bounded in authority yet unified by protocols, budgets and shared ontologies. Engineering that society is less about inventing new algorithms than about orchestrating old ones—binding timing contracts, security envelopes and governance processes into a system that scales faster than any human oversight committee could intervene.

In the article’s concluding synthesis we will trace exactly how those contracts crystallise into deployable blueprints—and why A-Bots.com begins every client engagement by mapping which agent type sits where in the hierarchy long before a single Docker container spins up on the factory floor.

Synthesis & Outlook: Blurring Lines Between Types

Spend long enough inside a real production system and the neat taxonomic borders we have just explored begin to fade. The reflex layer that once looked purely reactive now passes tiny gradients back into its gain tables; a deliberative planner that was supposed to rely on symbolic search quietly embeds a learned heuristic to meet latency budgets; a utility maximiser adjusts its coefficients through exposure to market drift; even the multi-agent communication fabric shapes micro-behaviours in ways no single designer predicted. By 2025 the question is no longer “Which type of AI agent should I choose?” but “How deeply will the types interpenetrate, and where must I enforce hard seams?”

The Era of Purpose-Built Hybrids

Regulatory pressures, edge-compute abundance, and user expectations for zero downtime are converging toward architectures that treat each agent class as a capability slice rather than a monolithic persona. A drone-delivery platform, for instance, lets its reactive attitude controller learn micro-trim offsets in real time but freezes its stall-protection logic behind FPGA gates; the global mission planner runs deliberative search yet delegates energy budgeting to a utility head that refreshes every cloud-to-edge sync; an overnight federated learner digests thousands of flights, distilling aerodynamics updates that trickle down as firmware patches. The resulting system is simultaneously reactive, deliberative, rational, adaptive and social, with each layer bound by explicit interface contracts on timing, authority and verifiability.

Interpretable Fluidity

The paradox is that fluid boundaries can coexist with stronger guarantees—if the seams are explicit. Formal methods teams now verify “safety envelopes” that span multiple agent behaviours, not just single modules. Explainability dashboards trace a control decision through three layers of logic and two peer negotiations, yet compress that lineage into a human-auditable narrative: gust detected → micro-trim adjusted → energy buffer dipped → route replanned. We are inching toward agents whose internal complexity is free to evolve so long as the story they tell the rest of the system remains stable.

Toolchains and Talent Shifts

Toolchains are adapting accordingly. Reactive code is increasingly written in Rust or HDL, not C; planners compile into GPU-bound kernels that expose differentiable hooks; utility functions ship with YAML manifest files so ops teams can hot-patch weights without a redeploy; learning pipelines embed synthetic data generation side-by-side with real logs, blurring training and simulation. Hiring patterns reflect the shift: robotics engineers now read game-theory papers, reinforcement-learning specialists learn model checking, and DevOps teams treat federated learning clusters as first-class CI targets.

Grand-Scale Convergence

Macro-trends push the convergence further. Edge ASICs with mixed-signal neuromorphic cores make it economical to run continual-learning policies at milliwatt budgets, so reactive loops can adapt without cloud round-trips. Meanwhile, international governance standards such as ISO/IEC 42001 demand traceability across the entire agent stack, forcing deliberative and learning layers to expose the same audit hooks. Combined, these pressures create what might be called an irreversible hybridisation: any subsystem that refuses to learn soon becomes brittle; any subsystem that learns without explicit boundaries is un-certifiable. Success lies in deliberately braiding the agent types together while preserving their unique disciplines.

Looking Five Years Ahead

By 2030 we will likely see regulatory test-beds where autonomous machines must demonstrate live personality swaps: a delivery rover that moves from quiet suburbia into a stadium crowd must downshift its exploratory learning rate, elevate its utility weight on pedestrian comfort, and widen its reactive obstacle buffer—all in seconds, with proofs that no invariant is broken. Such elasticity will be impossible without the layered vocabulary of types we have dissected here. What began in the 1980s as competing schools of thought is maturing into a syntax for system design, the grammatical rules by which engineers, auditors and algorithms negotiate responsibility in real time.
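A profile swap of this kind can be pictured as an all-or-nothing switch guarded by invariants. The sketch below is a runtime check rather than a proof, and every field name, bound and profile value in it is hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Profile:
    learning_rate: float       # exploratory learning rate
    comfort_weight: float      # utility weight on pedestrian comfort
    obstacle_buffer_m: float   # reactive obstacle buffer in metres

# Hypothetical invariants; real bounds would come from the safety case.
INVARIANTS = (
    lambda p: 0.0 <= p.learning_rate <= 0.01,
    lambda p: p.comfort_weight >= 0.0,
    lambda p: p.obstacle_buffer_m >= 0.5,
)

def swap_profile(current, candidate):
    """Switch to the candidate only if every invariant holds; otherwise keep current."""
    if all(check(candidate) for check in INVARIANTS):
        return candidate, True
    return current, False

suburb = Profile(learning_rate=0.005, comfort_weight=1.0, obstacle_buffer_m=0.8)
stadium = Profile(learning_rate=0.0005, comfort_weight=4.0, obstacle_buffer_m=1.5)
broken = Profile(learning_rate=0.05, comfort_weight=4.0, obstacle_buffer_m=0.2)

active, swapped = swap_profile(suburb, stadium)
still_active, rejected_swap = swap_profile(active, broken)
print(active, swapped, rejected_swap)
```

Because the profile is immutable and swapped as a whole, the rover never runs with a half-applied mix of suburban and stadium settings.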

The A-Bots.com Commitment

At A-Bots.com we start every engagement by mapping that grammar onto your domain. Which reflexes must remain immutable? Which planners can borrow a GPU when the cloud is near and fall back to heuristics when it is not? How should utility curves evolve as your business model pivots? Where must learning happen: in the device, in the lab, or in a federated collective that spans continents? We answer these questions with code, simulation, and provable contracts, delivering end-to-end AI agent applications that ship fast reflexes, deep foresight, rational trade-offs, adaptive intelligence and swarm-grade coordination, without forcing you to choose one at the expense of the others. In a world where the boundaries between agent types are blurring by design, that holistic engineering ethos is the difference between an impressive prototype and a system that endures.

Copyright © Alpha Systems LTD All rights reserved.
