Beyond theLevel.ai: What Level AI Tells Us About the Future of CX Intelligence and How A-Bots.com Can Build It for You

The Rise of Level AI: Market & Product Context

Under the Hood: Technical Anatomy of Level AI

Business Impact & Competitive Landscape

Beyond theLevel.ai: A-Bots.com’s Blueprint for a Custom CX-Intelligence Stack

Section I — The Rise of Level AI: Market & Product Context

1. A Perfect Storm for Conversation-Intelligence Platforms

By mid-2025 three currents had merged to yank contact-center AI from the hype cycle into the CFO’s line of sight. First, the cost of large-language-model (LLM) inference dropped roughly ten-fold between GPT-3.5 (2023) and the latest open-weight variants, making it economically feasible to analyse every call or chat instead of a 1-2 % sample. Second, regulators intensified scrutiny of how companies monitor customer interactions, forcing leaders to prove fair treatment at scale (GDPR Art. 22 in the EU, CPRA in California, ADR reform in Australia). Third—and numerically hardest to ignore—agent churn climbed to 30-45% annually, a figure now quoted by every major analyst house and trade publication (techrepublic.com). With so much money leaking from re-hiring and compliance fines, the market was primed for a platform that could listen to 100 % of conversations, score quality automatically, and surface Voice-of-the-Customer (VoC) themes without an army of human supervisors.

2. From Alexa Insight to $73 M in Venture Fuel

Level AI’s story starts in Mountain View in 2019, when former Amazon Alexa product lead Ashish Nagar left the voice-assistant team convinced that transformer models could do for contact-center workflows what Alexa had done for the smart home (techcrunch.com). The thesis resonated with investors: a $39.4 million Series C in July 2024 brought total funding to $73.1 million, vaulting the company into the break-out cohort alongside Observe.ai and Dialpad. Level AI’s positioning line, “the LLM-native customer-experience intelligence layer,” is more than marketing; it signals a ground-up rebuild of the analytics stack around transformers rather than bolting GPT onto legacy speech analytics.

3. What Exactly Does Level AI Ship?

At the core sits QA-GPT, a proprietary model that reads scorecard criteria expressed in plain English, applies them to 100 % of calls, chats, and emails, and claims near-human accuracy without manual phrase-training. Sitting beside it, Real-Time Agent Assist listens to the live transcript, retrieves answers from knowledge bases, auto-populates CRM fields, and lets supervisors jump into red-flag calls before they spiral. The same semantic engine powers VoC Insights, clustering emergent topics and sentiment shifts across every channel so product teams can spot bug-induced call spikes before Twitter does (thelevel.ai). Finally, a CX Intelligence Hub ties QA scores, VoC themes, CRM data, and workforce metrics into one executive dashboard, giving COOs a single pane of glass instead of half-a-dozen swivel-chair reports.

4. Early Proof Points: Logos, Integrations, Hiring

Level AI’s public case studies feature brands such as ezCater and Affirm, each reporting double-digit Net Promoter Score lifts and 30-45 % reductions in manual QA hours. Crucially, the platform plugs into existing ecosystems—Zendesk, Salesforce, Five9, Twilio, NetSuite, leading SSO providers—so buyers are not forced into a rip-and-replace gamble. LinkedIn shows headcount edging toward the upper end of the 51-200-employee band, with fresh hiring in applied research and EMEA go-to-market roles—classic “Series C scale-up” signals.

5. Strengths—and the Fault Lines That Matter to Buyers

Level AI wins deals on depth of LLM integration, breadth of channel coverage, and a focus on actionable QA rather than vanity sentiment scores. Yet competitor messaging reveals soft spots: Abstrakt, for example, publicly cites customer quotes of $185 per agent per month and positions itself as both cheaper and faster to deploy, claiming Level AI “never commits to an onboarding date” (abstrakt.ai). Highly regulated segments also balk at a pure-SaaS footprint that keeps data in Level AI’s cloud, opening space for partners who can deliver private-cloud or on-prem alternatives.

Why this matters for A-Bots.com.

The very gaps that frustrate prospects—opaque implementation timelines, price inflexibility, one-size-fits-all hosting—are exactly where a custom-build specialist shines. Section II will peel back Level AI’s architecture layer by layer—vector stores, RAG flows, latency budgets, compliance controls—and show how A-Bots.com can replicate the value while tailoring the stack to each client’s regulatory, budgetary, and domain constraints.

Section II — Under the Hood: Technical Anatomy of Level AI

Knowing what a platform does is only half the battle; understanding how it does it tells you whether you can—or should—build something similar. Below we dissect Level AI’s pipeline step-by-step and flag the architectural decisions that A-Bots.com would replicate, adapt, or redesign when delivering a bespoke Customer-Experience Intelligence stack.

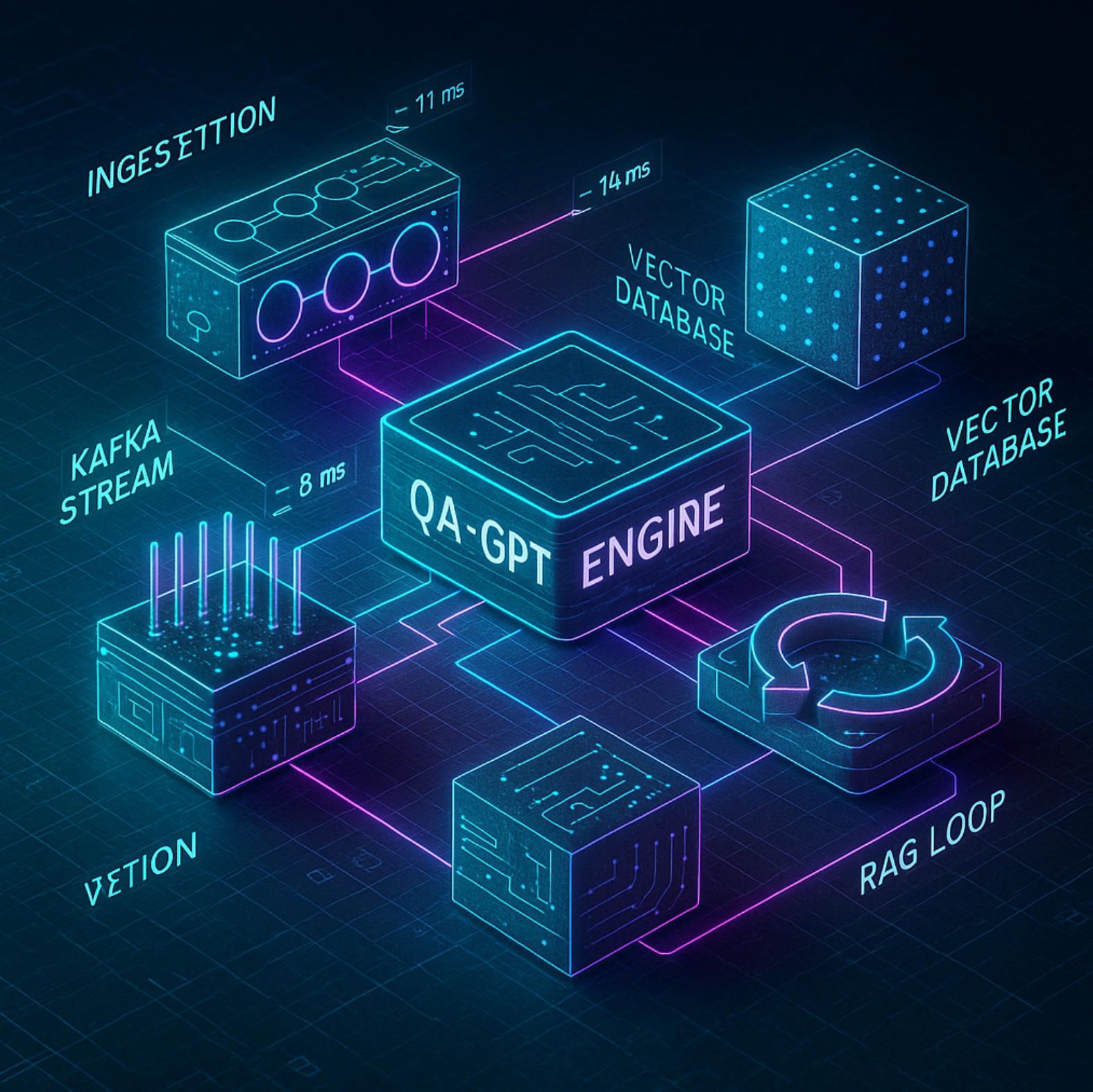

1. Ingestion & the Omnichannel “Raw Lake”

Everything starts with capture. Level AI ingests phone audio, chat transcripts, e-mails, and screen shares via native connectors for Zendesk, Salesforce, Five9, Twilio and other CCaaS/CRM systems. These connectors stream JSON event payloads (call legs, agent IDs, CRM metadata) into a partitioned object store (typically S3 or GCS). By persisting raw artefacts before any transformation, Level AI keeps a non-destructive audit trail that product, compliance, and data-science teams can replay if models or regulations change.

For a custom build, A-Bots.com normally prefixes this lake with a Kafka (or Pulsar) buffer to decouple ingestion spikes (Monday billing calls) from downstream GPU workloads. This also lets us fan-out to multiple consumers—ASR, analytics, redaction—without duplicating traffic.
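To make the raw-lake idea concrete, here is a minimal sketch of how one event could be given a partitioned object key and a byte-stable serialisation before anything transforms it. The key layout, the field names (`interaction_id`, `channel`, `ts`) and both helper functions are illustrative assumptions, not Level AI's or A-Bots.com's actual schema.

```python
import json
from datetime import datetime, timezone


def raw_lake_key(event: dict) -> str:
    """Build a partitioned object-store key for one raw event.

    Partitioning by channel, date and hour is an assumed layout that keeps
    replays of a single time window cheap to list.
    """
    ts = datetime.fromtimestamp(event["ts"], tz=timezone.utc)
    return (
        f"raw/channel={event['channel']}"
        f"/dt={ts:%Y-%m-%d}/hour={ts:%H}"
        f"/{event['interaction_id']}.json"
    )


def serialize(event: dict) -> bytes:
    """Serialise the untouched payload; sorted keys keep replays byte-stable."""
    return json.dumps(event, sort_keys=True).encode("utf-8")


# Hypothetical event as a connector might emit it.
event = {
    "interaction_id": "call-0001",
    "channel": "voice",
    "agent_id": "a-17",
    "ts": 1748854800,  # Unix seconds, 2025-06-02 09:00 UTC
}

key = raw_lake_key(event)
body = serialize(event)
```

In a real deployment the `key` and `body` pair would be handed to the object store client (S3, GCS or similar); that upload step is left out here so the sketch stays dependency-free.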

2. Speech-to-Text & Channel Normalisation

Voice traffic flows next through an Automatic Speech Recognition layer. Level AI’s marketing material is vendor-neutral, but benchmarks imply error rates on par with Deepgram Tier-1 or AWS Call Analytics. The audio is chunked into ~2 s segments so transcripts arrive as a token stream instead of a giant blob, enabling sub-second downstream inference for agent assist. Phone, chat, and e-mail transcripts are then pushed through a normaliser that unifies emoji, timestamps, and language-specific stop-words, ensuring the LLM sees a consistent token vocabulary.

A-Bots.com often adds a locale detector here so multilingual call centres (e.g. Spanish-English switchboards) automatically route each transcript into the matching ASR model and fine-tuned LLM.
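The ~2 s chunking step can be sketched in a few lines. This is a simplified illustration that assumes the audio is already decoded into a flat sequence of PCM samples; the function name and the 8 kHz telephony sample rate in the usage lines are assumptions for the example, not details from Level AI.

```python
from typing import Iterator, Sequence


def chunk_audio(
    samples: Sequence[int], sample_rate: int, chunk_seconds: float = 2.0
) -> Iterator[Sequence[int]]:
    """Yield consecutive fixed-length chunks; the final chunk may be shorter."""
    chunk_len = int(sample_rate * chunk_seconds)
    if chunk_len <= 0:
        raise ValueError("sample_rate * chunk_seconds must be at least one sample")
    for start in range(0, len(samples), chunk_len):
        yield samples[start:start + chunk_len]


# Usage: five seconds of silence at an assumed 8 kHz sample rate.
five_seconds = [0] * (8000 * 5)
chunk_lengths = [len(c) for c in chunk_audio(five_seconds, sample_rate=8000)]
```

Each chunk would then be handed to the ASR service as it is produced, which is what lets the transcript arrive as a stream rather than one blob at the end of the call.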

3. Domain-Fine-Tuned LLMs: QA-GPT & Friends

Level AI’s crown jewel is QA-GPT, a proprietary generative model fine-tuned on millions of contact-centre interactions. Unlike legacy “keyword scorecards,” QA-GPT consumes rubric prompts in plain English—“Did the agent verify the caller’s account using two factors?”—then scores every interaction against those criteria. Because the model embeds both the rubric and the conversation in the same semantic space, it can answer why a call failed and cite the offending utterance.

Real-time coach prompts run on a lighter sibling model distilled from QA-GPT. The lighter model trades some nuance for latency ≤ 300 ms, a hard ceiling beyond which live guidance feels laggy to agents.
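The rubric-in-prompt idea can be illustrated without any model at all: the interesting parts are how criteria and transcript are combined, and how the reply is validated before it reaches a scorecard. The prompt wording, the JSON reply shape and both function names below are assumptions made for this sketch; QA-GPT's real prompt format is not public.

```python
import json


def build_qa_prompt(rubric: list[str], turns: list[tuple[str, str]]) -> str:
    """Combine plain-English criteria and a transcript into one scoring prompt."""
    criteria = "\n".join(f"{i}. {text}" for i, text in enumerate(rubric, start=1))
    transcript = "\n".join(
        f"[{i}] {speaker}: {utterance}"
        for i, (speaker, utterance) in enumerate(turns)
    )
    return (
        "Score the conversation against each criterion.\n"
        "Reply with a JSON list of objects with keys "
        '"criterion", "passed" and "evidence_turn" (a turn index or null).\n\n'
        f"Criteria:\n{criteria}\n\nTranscript:\n{transcript}\n"
    )


def parse_qa_response(raw: str, rubric_size: int, turn_count: int) -> list[dict]:
    """Validate the model's JSON so malformed output never reaches a scorecard."""
    results = json.loads(raw)
    if len(results) != rubric_size:
        raise ValueError("expected one result per criterion")
    for item in results:
        if not isinstance(item["passed"], bool):
            raise ValueError("'passed' must be true or false")
        turn = item["evidence_turn"]
        if turn is not None and not 0 <= turn < turn_count:
            raise ValueError("evidence_turn points outside the transcript")
    return results


rubric = ["Did the agent verify the caller's account using two factors?"]
turns = [("agent", "Can I have your name?"), ("customer", "It's Dana.")]
prompt = build_qa_prompt(rubric, turns)
```

Requiring an `evidence_turn` index is one simple way to get the "cite the offending utterance" behaviour: a failed criterion must point at a real line of the transcript or the result is rejected.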

4. Retrieval-Augmented Generation & the Vector Store

When an agent says, “Can I cancel after 14 days?” the system retrieves company-specific policy snippets and lets the LLM draft an answer. Level AI accomplishes this with a classic RAG loop: the last few turns of dialogue form the query, which is embedded via an SBERT-style encoder; the embedding probes a vector database (Pinecone- or Weaviate-class) that holds KB passages and prior calls; top-K chunks are then stitched into the prompt sent to the generative model (blogs.nvidia.com). A cache layer stores frequent Q&A pairs so evergreen questions (“reset password”) skip vector search entirely, shaving ~120 ms.

In A-Bots.com projects we sometimes replace the external vector DB with Postgres/pgvector for mid-market budgets, or deploy Redis-Search in memory when sub-100 ms median latency is contractual.
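The retrieve-then-generate loop with a front cache can be sketched with the standard library alone. Two loud assumptions: a toy bag-of-words vector stands in for the SBERT-style encoder, and an in-memory list stands in for the vector database. The sample passages and the `generate` callable are hypothetical placeholders for a real knowledge base and LLM call.

```python
import math
import re
from collections import Counter
from typing import Callable


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an encoder model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def top_k(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Rank passages by similarity to the query and keep the best k."""
    q = embed(query)
    return sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)[:k]


_answer_cache: dict[str, str] = {}


def answer(
    query: str, passages: list[str], generate: Callable[[str], str], k: int = 2
) -> str:
    """Serve repeated questions from the cache; otherwise retrieve and generate."""
    key = " ".join(re.findall(r"[a-z0-9]+", query.lower()))
    if key in _answer_cache:
        return _answer_cache[key]
    context = "\n".join(top_k(query, passages, k))
    result = generate(f"Context:\n{context}\n\nQuestion: {query}\nAnswer:")
    _answer_cache[key] = result
    return result


# Hypothetical knowledge-base passages.
passages = [
    "Customers can cancel within 30 days for a full refund.",
    "Password resets are sent by email.",
    "Shipping takes 3 to 5 business days.",
]
best = top_k("Can I cancel after 14 days?", passages, k=1)
```

This sketch caches every answer, whereas the article describes caching only frequent questions; a production cache would also need expiry so that policy changes invalidate stale answers.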

5. Real-Time Agent Assist: Latency Budget Breakdown

Latency is the make-or-break KPI for live assist. Level AI’s public demos show ~600 ms door-to-door:

- 180 ms WebRTC audio packetisation and ASR chunk.

- 120 ms RAG fetch (or cache hit).

- 200 ms generation on a 13–17B-parameter model with 8-bit quantisation on A10G GPUs.

- 100 ms WebSocket delivery and UI render.

Hitting that number requires careful prompt templates (< 600 tokens) and streaming decode so the UI prints words as they materialise. A-Bots.com typically uses a prompt pre-processor that prunes system instructions to the top hashtags (#Policy, #Benefit, #Upsell) and back-fills the longer rationale only if the agent scrolls, reducing initial token count by ~30 %.
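The four stage allowances listed above sum to the quoted ~600 ms, which is easy to encode as a check that flags whichever stage overshoots. The stage keys and the `over_budget` helper are names invented for this sketch; only the millisecond figures come from the breakdown above.

```python
# Per-stage allowances in milliseconds, taken from the breakdown above.
BUDGET_MS = {
    "audio_and_asr": 180,
    "rag_fetch": 120,
    "generation": 200,
    "delivery_and_render": 100,
}

TOTAL_BUDGET_MS = sum(BUDGET_MS.values())  # 180 + 120 + 200 + 100


def over_budget(measured_ms: dict[str, float]) -> dict[str, float]:
    """Return each stage that exceeded its allowance, with the overshoot in ms."""
    return {
        stage: measured_ms[stage] - limit
        for stage, limit in BUDGET_MS.items()
        if measured_ms.get(stage, 0.0) > limit
    }


# Usage with hypothetical measurements from one request trace.
sample = {
    "audio_and_asr": 170,
    "rag_fetch": 150,
    "generation": 200,
    "delivery_and_render": 90,
}
violations = over_budget(sample)
```

Tracking the budget per stage rather than only end to end matters because a fast stage can mask a slow one: in the sample trace the total is 610 ms, but the only actionable finding is the 30 ms overshoot in retrieval.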

6. Security, Privacy & Data Residency

Level AI advertises full AES-256 encryption at rest, TLS 1.3 in transit, and RBAC with audit logs. The platform is SOC 2 Type II, GDPR, HIPAA, and PCI-DSS aligned, but remains a single-tenant SaaS; customers cannot bring their own cloud. That’s the friction point for highly regulated verticals—telecom, public sector, financial trading desks—that need data residency inside national borders or even on-prem.

A-Bots.com addresses this by containerising each microservice (ASR, embedding, LLM, vector DB) behind a service mesh like Istio. We can deploy the entire stack in a customer’s VPC or on-prem Kubernetes cluster, optionally tethering to a private-cloud compute enclave (Apple-style “Private Cloud Compute”) for bursty LLM workloads that exceed on-prem GPU capacity.

7. Observability: Why It Matters for Model Trust

Level AI exposes dashboards for false-positive QA flags and drift in VoC topic clusters, but the model weights remain a black box. We bake model observability hooks into every stage (latency traces, score-distribution histograms, prompt+response logging with PII masks) to satisfy both engineering SLOs and emerging AI-audit frameworks (NIST AI RMF, ISO/IEC 42001). These hooks make it far easier to diagnose whether a rising Auto-Fail rate is due to seasonal slang, new product SKUs, or an upstream ASR regression.
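One way to turn score-distribution histograms into a drift signal is the Population Stability Index (PSI), which compares the share of interactions per score bucket between a baseline window and the current one. PSI is a swapped-in technique chosen for this sketch; the article does not say which drift metric Level AI or A-Bots.com uses, and the bucket counts below are made up.

```python
import math


def proportions(counts: list[int], floor: float = 1e-6) -> list[float]:
    """Convert bucket counts to shares, floored so empty buckets do not divide by zero."""
    total = sum(counts)
    if total == 0:
        raise ValueError("histogram is empty")
    return [max(c / total, floor) for c in counts]


def psi(baseline_counts: list[int], current_counts: list[int]) -> float:
    """Population Stability Index between two histograms with identical buckets."""
    if len(baseline_counts) != len(current_counts):
        raise ValueError("histograms must use the same buckets")
    base = proportions(baseline_counts)
    cur = proportions(current_counts)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


# Hypothetical QA-score histograms (four score buckets).
last_month = [25, 25, 25, 25]
this_week = [10, 20, 30, 40]
drift = psi(last_month, this_week)
```

Rule-of-thumb alert thresholds for PSI are commonly quoted somewhere between 0.1 and 0.25; the right value for a given scorecard is an assumption to validate against historical data before wiring it to an alert.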

8. Blueprint Takeaways for A-Bots.com Clients

- Reuse what works, swap what constrains. The QA-GPT concept is sound; we would replicate the rubric-in-prompt trick but let clients choose between open weights (Mixtral 8x22B) or a closed API (OpenAI) depending on data-sovereignty rules.

- Latency is a UX feature. Sub-second live assist demands GPU budgeting, prompt streaming, and a no-ops cache. We size clusters via historical concurrency, not seat count.

- Privacy is not a bolt-on. From the raw lake to the vector store, data must carry row-level encryption tags so redaction is enforceable everywhere, not just in the UI.

- Observability equals survival. Drift happens; the only question is whether you see it before customers do. Telemetry hooks need to be a Day-0 requirement, not a post-launch patch.

In Section III we will zoom out to the business lens—ROI patterns, pricing levers, and how Level AI stacks up against Observe.ai, Abstrakt, Qualtrics and Zendesk Resolution Platform—before mapping a go-to-market calculus for enterprises deciding between buying Level AI and commissioning A-Bots.com to build a domain-tuned alternative.

Section III — Business Impact & Competitive Landscape

1. Why the CFO Cares: Hard-Dollar Levers behind Conversation-Intelligence

Generative-AI analytics are not a “nice-to-have reporting upgrade.” They attack four line-items that live in every contact-center P&L:

- Quality-Assurance payroll. Supervisors once listened to a 1–2 % sample of calls; an LLM scoring 100 % of interactions cuts manual QA hours by 70–90 %. Level AI markets this as “100 % Auto-QA” and positions it as the first head-count dividend (thelevel.ai).

- Compliance exposure. Flagging risky utterances in real time slashes post-facto remediation costs (regulators now ask for proof, not promises). A Level AI deployment at a top financial-services BPO claims to have saved “millions” in potential fines once VoC clustering surfaced mis-selling patterns early (thelevel.ai).

- Agent productivity & churn. Real-time RAG prompts shave 10–25 % off average handle time (AHT). That throughput uptick either absorbs volume spikes without hiring or frees budget for wage retention.

- Revenue lift via VoC analytics. When funnel leaks are spotted weeks sooner, product fixes or retention offers arrive while the customer still cares.

These four levers mean conversation-intelligence projects now get 12- to 18-month payback windows, a metric finance chiefs can benchmark against any SaaS roll-out.

2. Level AI’s Scorecard: Evidence from the Field

Because Level AI does not publish dollar figures in analyst decks, we triangulate from public case snippets and partner statements:

- An online retailer’s rollout reports double-digit NPS gains and QA coverage jumping from 3% to 100%.

- A contact-center provider for tier-one banks attributes a multi-million-dollar compliance saving to Level AI’s VoC anomaly detection—important because banks rarely allow vendor names in public releases.

Even without audited ROI tables, these directional wins echo what the broader market sees: ServiceFirst’s Observe.ai deployment booked a 77 % ROI and $3.7 M in incremental loan volume after automating QA and coaching loops (observe.ai). Level AI’s results land in the same zone but tilt more toward compliance and VoC than pure sales uplift.

3. The Pricing Reality Check

Seat-based SaaS may feel old-school, yet most vendors still quote per-agent stickers. A customer quote cited publicly by competitor Abstrakt puts Level AI at roughly $185 per agent per month, plus platform fees. That number is at the high end of the spectrum:

- Observe.ai rarely discloses list price publicly, but marketplace listings cluster around USD 75–90 per user (capterra.com).

- Abstrakt.ai goes on record promising to “beat Level AI 99.9 % of the time,” signalling a sub-$100 price point and faster onboarding.

- Zendesk Resolution Platform (launched March 2025) introduces outcome-based tiers that bundle AI seats into SLA guarantees—effectively masking per-user math behind resolution rates (zendesk.com).

Buyers therefore face a classic value-vs-budget fork: Level AI stakes a claim on deeper analytics and domain-specific LLMs, but competitors undercut on sticker price or flip to usage/outcome pricing to reduce CFO sticker shock.

4. Competitive Posture in Mid-2025

Observe.ai skews toward U.S. mid-market BPOs and positions “Real-Time + Post-Call on one license.” Rapid bookings growth (155 % YoY) tells us the land-and-expand strategy is working.

Abstrakt.ai competes on latency: sub-200 ms response guarantees and a marketing blitz around “onboard in weeks, not quarters.” Its lowest-friction installs win SMBs or green-field programs where integration depth is secondary.

Qualtrics approaches from the opposite flank—enterprise experience-management budgets. Its 2025 roadmap talks about “agentic AI as a coworker” embedded in the existing XM stack, blurring the line between customer analytics and employee feedback suites.

Zendesk weaponises its installed base of ticketing seats; the new Resolution Platform rolls generative AI, a knowledge-graph, and an Insights Hub into an integrated upsell. Flexible, outcome-linked pricing aims squarely at procurement objections Level AI still hears.

Strategic takeaway: Level AI owns the “deep LLM native” narrative but must defend against (1) lower-cost fast followers, and (2) suite vendors bundling “good-enough” AI into existing contracts.

5. Macro-Market Context — Rising Tide, Crowded Harbor

Analysts size the call-center-AI market at ~USD 2.1 B in 2024 with a CAGR near 19 % through 2034. That rising tide floats many boats; still, the spend is fragmenting: pure-play vendors, CCaaS suites, and custom builders are all angling for shares of the same AI budget. As usage-based LLM costs fall, differentiation shifts from raw model access to domain-tuned workflows, latency, and governance—areas custom development can excel at.

6. The Build-versus-Buy Moment—Where A-Bots.com Fits

Enterprises now wrestle with three constraints that off-the-shelf tools only partially solve:

- Data-Residency and Air-Gap Needs. SaaS vendors keep data in their cloud; public-sector or telco buyers sometimes cannot.

- Seasonal and Non-Standard Workloads. Seat-pricing penalises seasonal peaks (tax season, holiday retail) where concurrency, not head-count, drives cost.

- Model Governance. Board-level AI-risk policies increasingly demand auditability of prompts, embeddings, and inference chains—often impossible inside black-box SaaS.

A-Bots.com specialises in modular, RAG-centric architectures that replicate Level AI’s value props—100% Auto-QA, live agent assist, VoC analytics—while letting clients dictate cloud, on-prem, or hybrid topologies, swap open-weight or closed LLMs, and meter cost to actual concurrency.

Section IV will translate those capability gaps into a concrete blueprint and rollout roadmap, showing how A-Bots.com de-risks the journey from pilot to production without locking clients into price escalators or vendor data silos.

Section IV — Beyond theLevel.ai: A-Bots.com’s Blueprint for a Custom CX-Intelligence Stack

Everything theLevel.ai does can be decomposed, recombined, and—where necessary—improved. Below is the proven playbook A-Bots.com follows when an enterprise decides, “We need Level-class value, but on our own terms.” It is not an ivory-tower diagram; it is a sequence of deliverables, milestones, and governance checkpoints that have already shepherded other AI workloads from whiteboard to production.

1. Phase Zero: Strategic Fit & ROI Geometry

The engagement opens with a 12-day Discovery Sprint that answers three board-level questions:

- Where will value concentrate? Which of the four P&L levers highlighted in Section III—QA payroll, compliance exposure, agent productivity, or revenue lift—offers the fastest, clearest upside?

- Where does the data live and move? Voice, chat, and CRM metadata may sit in different clouds, on-prem racks, or CCaaS silos. Latency budgets and privacy constraints vary by channel.

- What is the tightest risk constraint? Data-residency laws, AI-audit requirements, or customer-experience SLAs usually define the narrowest passage the solution must navigate.

Discovery concludes with a single-page ROI brief that translates those answers into hard numbers—savings, cost of compute, change-management effort—reviewed and approved by Finance before a single container is built.

2. Phase One: Foundation Stack—“Plumb Once, Reuse Forever”

Weeks 1 – 6 are about plumbing, because nothing accelerates iteration like friction-free data flow. We deploy:

- Dual-mode Capture Adapters to ingest via CCaaS APIs or SIP packet capture when APIs are missing.

- An event-stream buffer (Kafka on KRaft) that shards traffic by channel, locale, and tenant, enabling “replay” for future model retraining.

- A zero-trust service mesh (Istio) that enforces mutual TLS and per-route RBAC; certificates rotate automatically via SPIFFE.

At this point the customer owns a hardened, vendor-agnostic substrate; swapping one ASR or LLM for another later becomes a Helm upgrade, not a forklift.

3. Phase Two: Intelligence Layer—Modular, Swap-Friendly Brains

Using the same ingestion backbone, we wire in three inference micro-services, each behind its own gRPC façade so components can evolve independently:

- Speech-to-Text: default is Whisper large-v3 on NVIDIA L4 GPUs; regulated clients can redirect to on-prem Deepgram, Google CCAI, or Kaldi.

- Domain-Tuned QA-GPT: open-weight models fine-tuned on ~50k labelled interactions, or prompt-wrapped GPT-4 where fine-tuning is restricted.

- RAG Assist: a distilled 3B-parameter model fed by pgvector or Pinecone; embedding models can swap (E5, MiniLM, GTE) with no API change.

Each service rolls out via canary releases: 1 % traffic, metrics check, then full cut-over—no hard downtime.
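The canary pattern (1 % of traffic, a metrics check, then full cut-over) needs a routing rule that is stable per interaction, so a call does not bounce between model versions mid-conversation. A minimal sketch, assuming traffic is split by hashing an interaction ID; in practice a service mesh would usually do the splitting, and both function names here are hypothetical.

```python
import hashlib


def in_canary(interaction_id: str, percent: float = 1.0) -> bool:
    """Deterministically place about `percent` % of interactions in the canary."""
    digest = hashlib.sha256(interaction_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < percent * 100


def next_traffic_percent(metrics_ok: bool) -> float:
    """After the canary window: cut over fully if checks passed, else roll back."""
    return 100.0 if metrics_ok else 0.0


# Usage: measure the canary share over a batch of synthetic interaction IDs.
ids = [f"call-{n}" for n in range(20_000)]
canary_share = sum(in_canary(i) for i in ids) / len(ids)
```

Hashing rather than random sampling means the same interaction always lands on the same side of the split, which keeps canary metrics comparable and makes incidents reproducible.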

4. Phase Three: Experience Layer—Where Humans Meet AI

- Agent Glass — a React panel that injects into any Chromium-based CCaaS desktop, streaming suggestions < 600 ms after customer speech. Agents watch answer cards materialise word-by-word; supervisors see live sentiment bars flash red on compliance triggers.

- Insight Portal — a Next.js web app with Elastic dashboards for VoC clusters, drift alerts, and audit-trail playback. Every filter or drill-down generates a shareable permalink, turning discoveries into artefacts ops teams can revisit.

Both UIs authenticate through the client’s IdP (OIDC/SAML), making team roll-outs a matter of permission toggles, not user copy-pasting.

5. Phase Four: Pilot, Expand, Automate

- Pilot (Weeks 12 – 16). 100 seats, one geography, one language. Success gates: QA labour hours drop ≥ 60%, AHT drops ≥ 10%, compliance false-negatives < 0.5%.

- Expansion (Weeks 17 – 24). Roll to remaining queues, languages, and channels. GPU cluster auto-scales for Monday spikes without paying for idle hardware mid-week.

- Automation (Month 7 +). QA-GPT triggers pipe into BPM systems: cancellations spawn save-offers, fraud phrases flag KYC review, mis-shipped orders open credit tickets. This is where ROI accelerates.
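The pilot success gates are simple enough to evaluate mechanically, which keeps the expand-or-stop decision out of the realm of opinion. The thresholds below come from the pilot bullet above; the `PilotMetrics` fields and the sample figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PilotMetrics:
    qa_hours_before: float
    qa_hours_after: float
    aht_before_s: float
    aht_after_s: float
    compliance_false_negative_rate: float  # fraction, e.g. 0.004 means 0.4 %


def pct_drop(before: float, after: float) -> float:
    """Percentage reduction from `before` to `after`."""
    return (before - after) / before * 100


def gate_results(m: PilotMetrics) -> dict[str, bool]:
    """Evaluate each gate: QA hours drop >= 60 %, AHT drop >= 10 %, false negatives < 0.5 %."""
    return {
        "qa_hours": pct_drop(m.qa_hours_before, m.qa_hours_after) >= 60,
        "aht": pct_drop(m.aht_before_s, m.aht_after_s) >= 10,
        "compliance": m.compliance_false_negative_rate < 0.005,
    }


# Usage with made-up pilot numbers.
pilot = PilotMetrics(
    qa_hours_before=1000, qa_hours_after=350,
    aht_before_s=420, aht_after_s=370,
    compliance_false_negative_rate=0.004,
)
results = gate_results(pilot)
ready_to_expand = all(results.values())
```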

6. Cost & Licensing Philosophy—Pay for Concurrency, Not Seats

A-Bots.com licenses the stack on concurrent-inference hours plus support SLA. Seasonal clients (tax prep, holiday retail) don’t pay December prices in April. GPU capacity can be client-owned, leased through a hyperscaler marketplace, or burst to A-Bots.com’s enclave when spikes exceed local hardware.
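The difference between the two billing models comes down to what gets multiplied. The sketch below interprets "concurrent-inference hours" as the sum of concurrent sessions across metered hours, which is an assumption about the metering, and every rate in the usage lines is a placeholder rather than an A-Bots.com or Level AI price.

```python
def seat_cost(seats: int, price_per_seat: float) -> float:
    """Seat licensing: pay for every named agent, busy or idle."""
    return seats * price_per_seat


def concurrency_cost(
    concurrent_sessions_by_hour: list[int], rate_per_session_hour: float
) -> float:
    """Concurrency licensing: pay for the session-hours actually consumed."""
    return sum(concurrent_sessions_by_hour) * rate_per_session_hour


# Placeholder inputs: a quiet period and a peak period for the same 100-seat team.
quiet_hours = [5, 8, 6, 4]
peak_hours = [60, 85, 90, 70]
rate = 0.5  # hypothetical price per concurrent session-hour

quiet_bill = concurrency_cost(quiet_hours, rate)
peak_bill = concurrency_cost(peak_hours, rate)
flat_bill = seat_cost(100, 185.0)
```

The seat bill is identical in both periods, while the concurrency bill tracks load; whether that is cheaper overall depends entirely on the real rate and traffic profile, which this sketch does not attempt to model.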

7. Governance & Lifespan Engineering

- Model Observability — prompts, embeddings, logits, and latency traces stream into OpenTelemetry; drift detection triggers PagerDuty alerts.

- Prompt Vault & Redaction — PII masker replaces names, account numbers, addresses before storage; security teams replay incidents without violating privacy.

- Regulatory Audit Packs — signed hash-chains link every QA-GPT decision to raw tokens and model digests, satisfying SOC 2 and upcoming ISO 42001 auditors.
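A hash chain is what makes an audit trail tamper-evident: each entry commits to the one before it, so editing any past decision breaks every later hash. A minimal sketch of the chaining and verification steps follows; the record fields are hypothetical, and the digital signature that a "signed" chain would add on top is deliberately left out.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash the previous entry's hash together with a canonical form of the record."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append(chain: list[dict], record: dict) -> None:
    """Add a record, linking it to the current tail of the chain."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})


def verify(chain: list[dict]) -> bool:
    """Recompute every link; any edited record or broken link fails verification."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True


# Usage with hypothetical QA decisions.
chain: list[dict] = []
append(chain, {"interaction": "call-0001", "criterion": 1, "passed": False})
append(chain, {"interaction": "call-0002", "criterion": 1, "passed": True})
intact = verify(chain)
```

Serialising with sorted keys and fixed separators matters here: without a canonical form, two logically identical records could hash differently and produce false tamper alarms.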

8. Future-Proofing Moves

With brains and plumbing decoupled, tomorrow’s upgrades are incremental: swap in a Mistral MoE encoder for richer embeddings, add an edge TinyML “whisperer” to pre-compress audio at branch offices, or chain a policy-tuned agent workflow that drafts refund e-mails in brand voice. Each enhancement is an overlay, not a rewrite.

9. Closing the Loop

Off-the-shelf vendors promise speed but sell uniformity—perfect if your call flow mirrors everyone else’s, painful when it doesn’t. A-Bots.com’s blueprint delivers Level-class intelligence without ceding data custody, pricing control, or roadmap sovereignty. Brains and plumbing stay decoupled, rollouts advance through measurable gates, and licensing respects the seasonal heartbeat of real-world operations. In short: you gain conversation-AI upside shaped to your own gravity, governed by your own rules, and paid for on your own terms—no algebra required.

✅ Hashtags

#LevelAI

#ConversationIntelligence

#CustomerExperience

#CXAnalytics

#AutoQA

#VoiceOfCustomer

#ContactCenterAI

#ABotsCom

#BuildVsBuy

#AICompliance

Copyright © Alpha Systems LTD All rights reserved.

Made with ❤️ by A-BOTS