
Unplugged Intelligence: A‑Bots.com Introduces a Solar‑Powered, Offline AI Agent for Everyone

Why the World Needs Offline AI Now

Inside the Agent: Hardware & Intelligence

Where It Changes Everything: 12 High‑Impact Domains

A‑Bots.com Mission & Next Steps

1. Why the World Needs Offline AI Now

Artificial intelligence may feel ubiquitous, yet roughly one‑third of humanity, about 2.6 billion people, remains disconnected from the Internet (ITU). These users live mostly in rural or low‑income regions where backhaul is patchy, power grids are unstable, or data plans are prohibitively expensive. Even in developed economies, wildfires, floods, geopolitical conflicts, or a simple base‑station outage can knock communities offline for hours or days. An AI assistant that works entirely on‑device, serves its interface to a nearby phone over local Wi‑Fi, and recharges from a power bank or a 10 W solar panel is therefore not a niche gadget: it is a resilience tool.

The Digital Divide Is Bigger Than We Think

While mobile broadband now covers 96 % of the world's population, coverage is not the same as usable Internet. ITU's 2024 Facts and Figures report counts 2.6 billion people still offline, and that number is falling only slowly. Offline AI sidesteps this divide: emergency medics in rural Africa can run triage models locally; agronomists on the Kazakh steppe can identify wheat rust without waiting for a satellite link; Arctic research teams can query a language model while storms black out their VSAT links.

Privacy & Sovereignty Pressures

Regulations such as the EU's GDPR and China's Data Security Law (DSL) impose strict data‑handling rules, making continuous cloud streaming risky or outright illegal in many settings. Enter edge AI: all sensor data is processed, embedded, and encrypted inside the device, so no raw video, biometrics, or proprietary CAD files ever leave the premises. This is mission‑critical for hospitals, R&D labs, or defence contractors, where leaking even a single frame could incur million‑dollar liabilities. A‑Bots.com's forthcoming agent stores its vector database on an encrypted NVMe drive and never calls home unless the user explicitly opts in.

Latency & Reliability for Time‑Critical Tasks

Cloud inference requests travel hundreds of kilometres to a data centre and back, and every extra network hop adds delay. Edge studies show that processing on local hardware can shave tens of milliseconds off each request, in some cases cutting round‑trip latency by up to 40 %. For collision‑avoidance drones, high‑frequency trading sensors, or augmented‑reality service manuals on a factory floor, those milliseconds can decide success or failure. Because the agent's compute sits centimetres from its sensors, decisions arrive in real time even when the nearest cell tower is overloaded (DZone).

Sustainability & Energy Efficiency

AI workloads already consume more electricity than many nations. Shifting inference from megawatt‑scale data centres to watt‑scale single‑board computers makes a measurable dent. A peer‑reviewed analysis of communication‑heavy workloads finds that edge execution can cut client‑side energy use by up to 55 %—before accounting for the savings from data‑centre cooling and long‑haul fibre. Power‑bank‑ready, solar‑charge‑friendly hardware compounds the benefit: a rural health clinic or disaster‑relief camp can run diagnostics all day off a shoebox‑sized photovoltaic mat instead of hauling diesel for a generator (ScienceDirect).

Cloud‑Centric Gadgets Still Miss the Mark

High‑profile AI wearables showcase the promise of voice‑first agents, yet they remain tethered to the cloud. Rabbit R1’s Large Action Model (LAM) spins up remote VMs for every task, leaving the device helpless when reception drops. The much‑publicised Humane AI Pin requires a US$24/month connectivity plan plus continuous cloud storage—hardly practical for field engineers inspecting wind turbines 40 km offshore. No mainstream device today blends full offline reasoning, ad‑hoc Wi‑Fi phone pairing, and renewable‑friendly power in a single package. That gap is precisely where A‑Bots.com steps in.

A Convergence of Market Signals

The demand side is equally clear. Analysts peg the edge‑AI hardware market at US$24.2 billion in 2024, rising to US$54.7 billion by 2029 (a 17.7 % CAGR) and outpacing the broader semiconductor sector. Silicon vendors have answered with NPUs that deliver trillions of operations per second at single‑digit watts, putting GPT‑grade language models within reach of palm‑sized boards. Couple that compute curve with the growth of the power‑bank (US$18.6 billion) and portable‑solar (US$577 million) markets, and the infrastructure for a truly autonomous assistant already exists.
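The growth rate in the market forecast above can be rechecked from its two endpoints. The minimal Python sketch below uses only the three figures quoted in this section; the helper names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Edge-AI hardware market figures quoted above, in US$ billions.
implied = cagr(24.2, 54.7, 2029 - 2024)
print(f"implied CAGR: {implied:.1%}")                       # implied CAGR: 17.7%
print(f"2029 at 17.7 %: {project(24.2, 0.177, 5):.1f} bn")  # 2029 at 17.7 %: 54.7 bn
```

Both directions agree, so the quoted endpoints and growth rate are internally consistent.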

The Takeaway

Offline AI is not a step backward; it’s the overdue completion of the edge‑computing promise. It secures data, saves energy, slashes latency, and—most critically—extends machine intelligence to the billions who can’t rely on perfect connectivity. In the next section we’ll peel back the aluminium lid on A‑Bots.com’s agent and show how we’re fusing mini‑computer ingenuity, quantised LLMs, and solar‑aware power design into a pocket‑sized companion built for a disconnected world.

2. Inside the Agent: Hardware & Intelligence

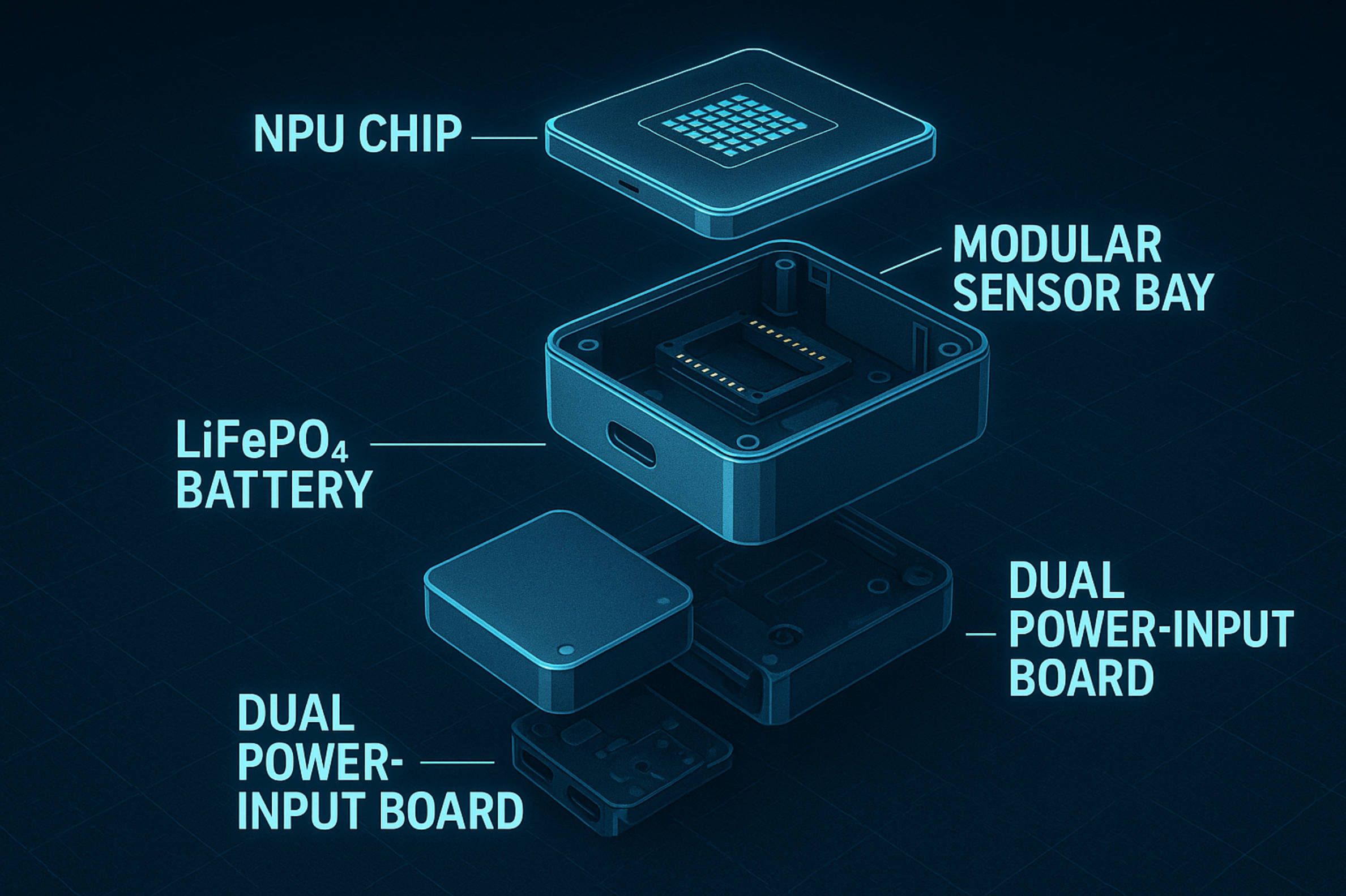

The prototype that A‑Bots.com will unveil later this year packs a complete offline AI workstation into a chassis scarcely larger than a deck of cards. Everything—from language reasoning to image recognition—runs locally, powered by a fanless single‑board computer we have tuned for watt‑scale efficiency without sacrificing the throughput needed for modern transformer models. Below is a closer look at each engineering layer and how it ladders up to reliable, privacy‑preserving intelligence in the field.

Compute Core

At the heart of the device sits a 6‑nm Arm SoC that integrates a quad‑core CPU cluster for general tasks and a neural‑processing unit (NPU) capable of 20 TOPS within a 5‑W thermal budget. The NPU's mixed‑precision pipelines accelerate the matrix multiplications that dominate transformer inference, letting us run an 8‑billion‑parameter language model in real time while the CPU remains mostly idle for orchestration. A‑Bots‑engineered firmware exposes fine‑grained power gating, so unused CPU cores or DSP blocks are parked when the workload shifts to the NPU, cutting idle draw to under 600 mW.

The board carries 16 GB of LPDDR5 memory paired with 128 GB of high‑endurance eMMC and an NVMe slot for expansion. Tuned memory‑controller scheduling keeps sustained bandwidth above 60 GB/s, which is critical for keeping per‑token latency low as the context window fills. For vision tasks the SoC hands frames to a second‑generation ISP, where hardware tone mapping and edge enhancement shrink data volume before inference, again saving cycles and power.
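The memory figures above can be sanity‑checked with back‑of‑the‑envelope arithmetic. The sketch below assumes decoding is memory‑bandwidth bound (a common rule of thumb for single‑user LLM inference) and ignores quantisation scales, the KV cache and activations, so its numbers are rough upper bounds rather than measurements of the prototype.

```python
GB = 1e9


def weight_bytes(n_params: float, bits_per_weight: float) -> float:
    """Storage for the weights alone (ignores scales, KV cache, activations)."""
    return n_params * bits_per_weight / 8


def decode_ceiling_tokens_per_s(bandwidth_bytes_per_s: float, bytes_per_token: float) -> float:
    """Memory-bound upper limit: each new token streams every weight once."""
    return bandwidth_bytes_per_s / bytes_per_token


fp16 = weight_bytes(8e9, 16)
int4 = weight_bytes(8e9, 4)
print(fp16 / GB, int4 / GB)                        # 16.0 4.0
print(decode_ceiling_tokens_per_s(60 * GB, int4))  # 15.0
```

At 16‑bit precision the weights alone would fill all 16 GB of LPDDR5, which is one reason the 4‑bit quantisation described under Software Stack matters; at 4 bits, a 60 GB/s memory system caps decoding at roughly 15 tokens per second under this simple model.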

Modular Sensor & I/O Fabric

Rather than locking users into a single sensor layout, the agent relies on a pogo‑pin mezzanine that supports hot‑swappable modules:

- Far‑field mic array – six MEMS microphones deliver beam‑formed audio for offline speech recognition up to five metres.

- Global‑shutter RGB camera – 8‑MP resolution, 120 fps burst for rapid motion analysis.

- Thermal sensor & ToF lidar – optional module for night‑time wildlife or security monitoring.
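Beam‑forming, mentioned for the far‑field mic array, is often built on delay‑and‑sum: shift each microphone's signal so that a sound from the chosen direction lines up across channels, then average, which reinforces that direction and attenuates others. The Python sketch below assumes a uniform linear array with hypothetical 4 cm spacing and 16 kHz sampling; the article does not describe the module's actual geometry or algorithm, and rounding to whole samples is a simplification (production beamformers usually apply fractional or frequency‑domain delays).

```python
import math
from typing import List

SPEED_OF_SOUND_M_S = 343.0  # in air at roughly 20 °C


def steering_delays(n_mics: int, spacing_m: float, angle_deg: float, sample_rate: int) -> List[int]:
    """Whole-sample arrival offsets of a plane wave from angle_deg
    (0 = broadside) across a uniform linear array, shifted to be >= 0."""
    tau = spacing_m * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND_M_S
    offsets = [round(i * tau * sample_rate) for i in range(n_mics)]
    base = min(offsets)
    return [d - base for d in offsets]


def delay_and_sum(channels: List[List[float]], delays: List[int]) -> List[float]:
    """Advance each channel by its delay so the wavefront lines up, then average."""
    length = min(len(ch) - d for ch, d in zip(channels, delays))
    return [sum(ch[n + d] for ch, d in zip(channels, delays)) / len(channels)
            for n in range(length)]


# Hypothetical geometry: six mics 4 cm apart, 16 kHz sampling, source at 30°.
delays = steering_delays(6, 0.04, 30.0, 16_000)
print(delays)  # [0, 1, 2, 3, 4, 5]
```

Steering toward a different direction only changes the delay list; the summation itself stays the same, which is why delay‑and‑sum is cheap enough for always‑on audio front‑ends.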

Peripheral expansion follows a managed CAN‑over‑USB profile: each module advertises its power envelope, so the power manager can throttle or shed loads when operating from solar alone. A‑Bots.com will open the module specification so third parties can build custom sensors—spectrometer heads for agronomists, air‑quality probes for environmental scientists, or mmWave radars for industrial safety inspectors.
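One way a power manager could act on the power envelopes that modules advertise is a priority‑ordered budget check. In this sketch the `Module` fields, wattages and priorities are hypothetical, and the greedy rule (admit modules in priority order while they fit, skipping any that would exceed the budget) is an illustrative assumption rather than the agent's documented policy.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Module:
    name: str
    watts: float   # power envelope the module advertises on attach
    priority: int  # lower number = more important, shed last


def plan_loads(modules: List[Module], budget_w: float) -> Tuple[List[str], List[str]]:
    """Keep modules in priority order while their summed draw fits the budget;
    anything that would exceed it is shed."""
    kept: List[str] = []
    shed: List[str] = []
    used = 0.0
    for m in sorted(modules, key=lambda m: m.priority):
        if used + m.watts <= budget_w:
            kept.append(m.name)
            used += m.watts
        else:
            shed.append(m.name)
    return kept, shed


modules = [
    Module("mic_array", 0.3, 0),
    Module("rgb_camera", 1.5, 1),
    Module("thermal_tof", 2.0, 2),
]
print(plan_loads(modules, budget_w=2.5))  # (['mic_array', 'rgb_camera'], ['thermal_tof'])
```

Re‑running the plan whenever the solar input changes gives a simple throttle: as the available budget falls, the lowest‑priority modules drop out first.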

Power Architecture

The agent treats off‑grid autonomy as a first‑class requirement. A dual‑input charge controller accepts either a standard 18‑W USB‑C PD supply or a 12‑V solar blanket, automatically applying maximum power‑point tracking (MPPT) when running on solar. An internal 9 800 mAh LiFePO₄ pack provides six to eight hours of continuous vision‑language operation; dock a 20 000 mAh power bank and uptime extends to two full work shifts. During low‑duty periods such as wake‑word monitoring, firmware drops the entire board into deep sleep at 60 mW, enabling multi‑day standby between charges.
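The runtime claims above can be cross‑checked with simple energy arithmetic. This sketch assumes the 9 800 mAh rating refers to 3.2 V nominal LiFePO₄ cells, that the power bank is rated at the usual 3.7 V cell voltage, and uses a hypothetical 85 % conversion efficiency and 4.5 W average draw; self‑discharge and temperature effects are ignored.

```python
def pack_energy_wh(capacity_mah: float, nominal_v: float) -> float:
    """Stored energy in watt-hours from rated capacity and nominal voltage."""
    return capacity_mah / 1000 * nominal_v


def runtime_h(energy_wh: float, avg_draw_w: float, efficiency: float = 1.0) -> float:
    """Hours of operation at a constant average draw."""
    return energy_wh * efficiency / avg_draw_w


internal = pack_energy_wh(9_800, 3.2)  # assumes 3.2 V nominal LiFePO4 cells
print(f"{internal:.1f} Wh")                                          # 31.4 Wh
print(f"{internal / 8:.1f}-{internal / 6:.1f} W average for 6-8 h")  # 3.9-5.2 W average for 6-8 h
print(f"{runtime_h(internal, 0.060) / 24:.0f} days at 60 mW")        # 22 days at 60 mW

bank = pack_energy_wh(20_000, 3.7)  # power banks are usually rated at 3.7 V
print(f"+{runtime_h(bank, 4.5, efficiency=0.85):.0f} h from the bank at 4.5 W")  # +14 h from the bank at 4.5 W
```

Under these assumptions the quoted six to eight hours implies an average draw of roughly 4 to 5 W, consistent with the 5‑W NPU budget, and the internal pack plus power bank comes to about 21 hours at 4.5 W, comfortably more than two eight‑hour shifts.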

Thermal engineering is equally aggressive: a graphite heat spreader and a finless aluminium shell absorb and dissipate burst loads without resorting to active fans. This silent design matters in clinical or wildlife settings, where fan noise would disturb patients or animals.

Connectivity & User Interface

The device runs its own encrypted dual‑band Wi‑Fi hotspot. On boot, it advertises a zero‑configuration service so any iOS or Android phone can attach via a progressive web app, mirroring the agent’s conversational UI and sensor dashboards. Because the entire model sits on the agent, the phone merely streams commands and receives structured responses—no heavy lifting drains the handset’s battery.
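Because the phone only sends commands and renders structured replies, the link can be as thin as a JSON request/response exchange. The message shape (`id`, `action`, `args`) and the handler names below are hypothetical; the article does not specify the agent's wire format or transport.

```python
import json
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any]], Dict[str, Any]]


def make_dispatcher(handlers: Dict[str, Handler]) -> Callable[[str], str]:
    """Return a function that maps one JSON command string to one JSON reply."""

    def dispatch(raw: str) -> str:
        try:
            msg = json.loads(raw)
        except json.JSONDecodeError:
            return json.dumps({"ok": False, "error": "malformed JSON"})
        if not isinstance(msg, dict) or "id" not in msg or not isinstance(msg.get("action"), str):
            return json.dumps({"ok": False, "error": "missing id or action"})
        handler = handlers.get(msg["action"])
        if handler is None:
            return json.dumps({"id": msg["id"], "ok": False,
                               "error": f"unknown action {msg['action']!r}"})
        args = msg.get("args") if isinstance(msg.get("args"), dict) else {}
        return json.dumps({"id": msg["id"], "ok": True, "result": handler(args)})

    return dispatch


# Hypothetical handlers; on a real device these would call local model services.
dispatch = make_dispatcher({
    "echo": lambda args: {"text": args.get("text", "")},
    "status": lambda args: {"power_source": "solar", "radios": "on"},
})
print(dispatch('{"id": 1, "action": "echo", "args": {"text": "hi"}}'))
```

Echoing the request `id` in every reply lets the phone match responses to commands even if several are in flight, while all heavy computation stays behind the handlers on the agent.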

When Internet is available, the agent can optionally tether through the phone for package updates or federated‑learning exchanges. A hardware privacy slider physically disconnects the radio module, guaranteeing air‑gap operation for secure locations. Visual feedback on the carbon‑black e‑ink status strip confirms whether radios are live, storage is encrypted, and power comes from battery or solar.

Software Stack & Model Optimisation

The OS is a minimal Linux build with real‑time extensions. We isolate critical services—LLM inference, vector database, speech pipeline—into container sandboxes orchestrated by a lightweight supervisor. Each container inherits seccomp and AppArmor profiles, shielding the system even if one component is compromised.

Language model

- 8 B parameters, 16k‑token context window, quantised to 4‑bit weights with per‑channel calibration.

- Rotary positional encoding (RoPE) encodes relative token positions directly in the attention queries and keys, which suits streaming inference over a cached key‑value history.

- A retrieval‑augmented layer indexes the local knowledge base; fine‑tuned adapters answer domain‑specific queries without reaching for the cloud.
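The 4‑bit, per‑channel scheme in the first bullet can be illustrated with plain symmetric round‑to‑nearest quantisation, where every output channel (row) gets its own scale. This is a simplified stand‑in: calibration methods used in practice also take activation statistics into account, and the agent's actual recipe is not described here.

```python
from typing import List, Tuple


def quantize_per_channel(weights: List[List[float]], bits: int = 4) -> Tuple[List[List[int]], List[float]]:
    """Symmetric round-to-nearest quantisation with one scale per output row."""
    qmax = 2 ** (bits - 1) - 1  # 7 for signed 4-bit
    q_rows: List[List[int]] = []
    scales: List[float] = []
    for row in weights:
        max_abs = max(abs(w) for w in row)
        scale = max_abs / qmax if max_abs > 0 else 1.0
        # Clamp to the signed range [-qmax - 1, qmax].
        q_rows.append([max(-qmax - 1, min(qmax, round(w / scale))) for w in row])
        scales.append(scale)
    return q_rows, scales


def dequantize(q_rows: List[List[int]], scales: List[float]) -> List[List[float]]:
    return [[q * s for q in row] for row, s in zip(q_rows, scales)]


W = [[0.7, -0.35, 0.1],    # large-magnitude channel
     [0.02, -0.01, 0.0]]   # small-magnitude channel
q, s = quantize_per_channel(W)
print(q)
print(dequantize(q, s))
```

Giving each row its own scale is what keeps small‑magnitude channels from collapsing: with a single shared scale of 0.1, every value in the second row would round to 0.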

Vision & audio models

- A 300M‑parameter ViT (vision transformer) handles object detection and OCR; an 80M‑parameter U‑Net variant processes thermal frames.

- Offline speech pipeline couples wake‑word spotting (< 150 µW continuous) with a Bayesian VAD front‑end that keeps false triggers below 0.3 %.
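A Bayesian VAD front‑end can take many forms; one minimal reading is a two‑state hidden Markov model (noise vs. speech) whose posterior is updated frame by frame from an energy feature. The Gaussian energy models, dB values and transition probability below are illustrative assumptions, not the agent's parameters, and nothing here reproduces the quoted false‑trigger rate.

```python
import math
from typing import List, Tuple

LOG_SQRT_2PI = 0.5 * math.log(2 * math.pi)


def log_gaussian(x: float, mean: float, std: float) -> float:
    return -0.5 * ((x - mean) / std) ** 2 - math.log(std) - LOG_SQRT_2PI


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def vad_posteriors(frame_db: List[float],
                   noise: Tuple[float, float] = (30.0, 5.0),
                   speech: Tuple[float, float] = (60.0, 8.0),
                   p_stay: float = 0.95,
                   prior_speech: float = 0.1) -> List[float]:
    """Forward filter of a two-state (noise/speech) HMM over frame energies.
    Returns P(speech | frames so far) for every frame."""
    p = prior_speech
    posteriors = []
    for x in frame_db:
        # Predict: the previous state tends to persist into this frame.
        pred = p * p_stay + (1 - p) * (1 - p_stay)
        # Update: prior log-odds plus this frame's log-likelihood ratio.
        logit = (math.log(pred) - math.log(1 - pred)
                 + log_gaussian(x, *speech) - log_gaussian(x, *noise))
        p = sigmoid(logit)
        posteriors.append(p)
    return posteriors


def vad_decisions(frame_db: List[float], threshold: float = 0.5) -> List[bool]:
    return [p > threshold for p in vad_posteriors(frame_db)]


print(vad_decisions([30, 31, 29, 62, 65, 60, 58, 30, 28]))
```

The persistence prior adds hysteresis: after a run of noise frames, an isolated frame whose likelihood ratio only mildly favours speech is not enough to flip the decision, which is one way such a front‑end keeps false triggers down.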

All models share a common tokeniser and embedder, cutting memory overhead and enabling multimodal fusion when tasks require joint reasoning—such as generating an English label for an image of plant leaf blight in the middle of a field with no signal.
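A shared embedding space is what makes the leaf‑blight example workable: if image and text encoders map into the same vector space, labelling an image reduces to finding the nearest text embedding, and the same cosine ranking also serves retrieval from the local knowledge base. The three‑dimensional vectors below are toy stand‑ins for real embeddings, and `best_label` is a hypothetical helper.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def best_label(image_vec: List[float], label_vecs: Dict[str, List[float]]) -> str:
    """Zero-shot labelling: pick the text label whose embedding is closest."""
    return max(label_vecs, key=lambda name: cosine(image_vec, label_vecs[name]))


# Toy 3-D embeddings standing in for the shared embedder's output.
labels = {
    "healthy leaf": [1.0, 0.0, 0.0],
    "leaf blight": [0.0, 1.0, 0.2],
    "aphid damage": [0.0, 0.0, 1.0],
}
print(best_label([0.1, 0.9, 0.3], labels))  # leaf blight
```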

Security & Resilience

Boot integrity starts with an on‑board secure element that stores the device certificate and signs verified boot chains. Full‑disk encryption guards user data; a second isolated enclave houses model files and activation keys. Users can wipe or regenerate keys via a pinhole reset—vital for humanitarian teams operating in high‑risk zones.

Updates are delivered as delta‑compressed packages, and only when the user enables connectivity. In hostile environments the agent can rotate cryptographic session keys every hour and switch its Wi‑Fi interface to channel hopping to foil passive surveillance.
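Hourly key rotation can be derived deterministically from a long‑term secret by feeding the current hour index into a key‑derivation function. The sketch implements HKDF (RFC 5869) with Python's standard `hmac` and `hashlib` modules; the `info` label, the placeholder master key and the one‑hour period are assumptions for illustration, and in a real design the master secret would stay inside the secure element.

```python
import hashlib
import hmac


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) extract-then-expand with HMAC-SHA256."""
    prk = hmac.new(salt or b"\x00" * 32, ikm, hashlib.sha256).digest()  # extract
    okm, block, counter = b"", b"", 1
    while len(okm) < length:                                            # expand
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def hourly_session_key(master_key: bytes, unix_time: int, period_s: int = 3600) -> bytes:
    """Derive the key for the current period; it changes every period_s seconds."""
    epoch = unix_time // period_s
    return hkdf_sha256(master_key, salt=b"", info=b"wifi-session|" + str(epoch).encode())


master = bytes(32)  # placeholder only; never use an all-zero key in practice
k1 = hourly_session_key(master, 1_700_000_000)
k2 = hourly_session_key(master, 1_700_000_000 + 60)
k3 = hourly_session_key(master, 1_700_000_000 + 3600)
print(k1 == k2, k1 == k3)  # True False
```

Deriving session keys from a fixed master key provides rotation but not forward secrecy: anyone who later extracts the master key can recompute past session keys. An ephemeral key exchange per period would be needed for that stronger property.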

Built for Longevity

A‑Bots.com’s design philosophy shuns planned obsolescence. The aluminium frame uses stainless threaded inserts so end‑users can swap batteries after 1 500 cycles; ports are gasketed to IP54. Component selection favours industrial‑temperature variants, ensuring operation from –20 °C winter dawns in Central Asia to 50 °C desert afternoons. Firmware images remain open for audit, and a published board‑support package allows research labs to repurpose the hardware long after the initial product cycle.

From Silicon to Situational Awareness

This tightly integrated hardware‑and‑software stack elevates the agent from a clever gadget to a mission‑grade tool. It can survive rough handling in disaster zones, sip power from a pocket‑sized solar panel, and still produce on‑device large‑language insights without betraying a single byte of private data to the cloud. In the next section we’ll explore exactly how such robustness unlocks tangible value across agriculture, healthcare, manufacturing, conservation, and beyond.

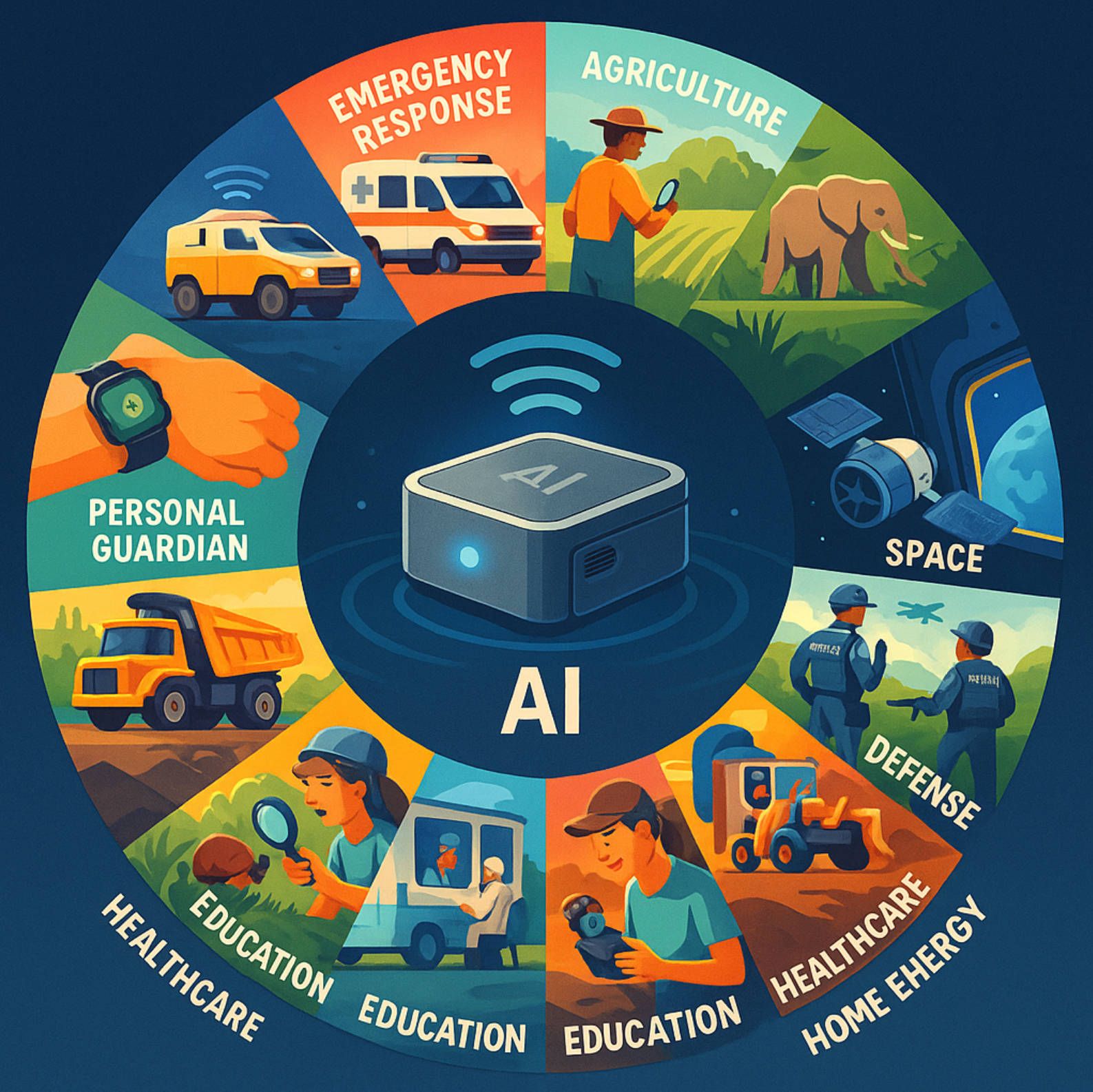

3. Where It Changes Everything: 12 High‑Impact Domains

The moment intelligence becomes untethered from the cloud it stops being a luxury add‑on and starts acting like electrical power or clean water—a primary enabler that reshapes how people live and work. Below, we dive deeply into a dozen arenas where A‑Bots.com’s offline, solar‑friendly agent transcends the limitations of conventional AI assistants and proves indispensable in everyday operations.

Emergency Response

When earthquakes topple cell towers and floods submerge fibre trunks, first responders must improvise with whatever technology they carried into the field. A backpack‑carried agent gives a rescue team medical triage, structural‑damage assessment, and multilingual translation without waiting for satellite uplinks. Medics speak symptoms into a lapel mic; the agent suggests differential diagnoses and dosage guidelines while logging vitals on an immutable ledger. Drone operators pipe thermal imagery directly into the same box, where a vision model outlines human silhouettes under rubble in real time. Because every inference runs locally, the workflow continues in tunnels or elevator shafts where GPS is blind and bandwidth is zero. The device's silence (no fans, no radio chatter) means it neither masks the faint sounds rescuers listen for during acoustic searches nor drains already scarce power reserves.
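An immutable ledger on a single offline device is, in practice, tamper‑evident rather than tamper‑proof. A common building block is a hash chain, in which each entry commits to the hash of the previous one, so editing a past record breaks every later link. The record fields below are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: Dict[str, Any]) -> str:
    body = json.dumps(record, sort_keys=True)  # canonical serialisation
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: List[Dict[str, Any]], record: Dict[str, Any]) -> None:
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": _digest(prev, record)})


def verify(log: List[Dict[str, Any]]) -> bool:
    """Recompute every link; an edited or reordered entry, or one removed
    from the middle, breaks the chain."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True


vitals: List[Dict[str, Any]] = []
append_entry(vitals, {"time": "10:02", "heart_rate": 112, "spo2": 94})
append_entry(vitals, {"time": "10:07", "heart_rate": 118, "spo2": 92})
print(verify(vitals))  # True
vitals[0]["record"]["heart_rate"] = 80
print(verify(vitals))  # False
```

Someone able to rewrite the whole chain, or simply drop its newest entries, would not be caught by `verify` alone, so a real design would also sign the latest hash, for example with a key held in the secure element described under Security & Resilience.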

Remote Agriculture

Farms located beyond reliable 4G footprints often rely on agronomists who visit only once per season. Plant stress spreads faster than that. Mounting the agent on an ATV or attaching it to a scouting drone transforms the daily walk of fields into a high‑resolution diagnostic sweep. Leaf‑scale imagery passes through convolutional and transformer layers that identify nutrient deficiencies, fungal blights, or aphid clusters and immediately plot corrective actions on an offline map. A‑Bots.com’s retrieval‑augmented language model contextualises the finding with local weather, soil telemetry, and market prices, recommending whether to spray, irrigate, or accept partial yield loss. Power comes from the tractor’s USB‑C port by day and a foldable solar mat at the equipment shed by night, keeping the farmer’s laptop free for accounting rather than inference workloads. The ripple effect is massive: reduced pesticide waste, timely interventions, and ultimately higher food security for regions that can least afford crop failures.

Wildlife Conservation

Anti‑poaching units trekking through savannah or rainforest depend on radio silence to avoid tipping off traffickers. A wrist‑mounted controller linked to an agent in the rucksack can analyse acoustic patterns for gunshots or vehicle engines, estimate direction, and vibrate a silent alert. Thermal cameras spot nocturnal movement; the onboard model distinguishes between elephant herds, antelope, and human intruders with infrared only—no visible flash, no cloud relay. Battery swaps are rare thanks to the agent’s intelligent power‑domain scaling that idles the vision core until a motion vector crosses a set threshold. For biologists, the same hardware logs biodiversity metrics: call signatures of bird species, migration timings, nesting success, all time‑stamped and geocoded for later syncing to central databases when the team returns to range headquarters.
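Idling the vision core until something moves implies a cheap motion gate in front of the expensive model. The sketch below uses simple frame differencing on tiny grayscale frames as a stand‑in for the motion vectors the text mentions (which an ISP or video encoder might supply); the threshold and frame format are assumptions.

```python
from typing import List

Frame = List[List[int]]  # small grayscale frame, pixel values 0-255


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel change between two equally sized frames."""
    total = sum(abs(p - q) for row_a, row_b in zip(a, b) for p, q in zip(row_a, row_b))
    return total / (len(a) * len(a[0]))


def frames_to_analyse(frames: List[Frame], threshold: float = 8.0) -> List[int]:
    """Indices of frames that changed enough to justify waking the vision model."""
    return [i for i in range(1, len(frames))
            if mean_abs_diff(frames[i - 1], frames[i]) > threshold]


still = [[20] * 4 for _ in range(4)]
intruder = [row[:] for row in still]
for r in (1, 2):
    for c in (1, 2):
        intruder[r][c] = 200  # a warm 2x2 blob enters the scene
print(frames_to_analyse([still, still, intruder, still]))  # [2, 3]
```

Only the flagged frames would reach the classifier, so the costly model runs for a small fraction of the night rather than continuously.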

Space & High‑Altitude Flight

CubeSats and stratospheric balloons face stringent downlink budgets; every bit they beam home costs dollars and time. Embedding our agent as an onboard analytics co‑processor lets missions pre‑filter raw sensor data. Spectrometer readings are compressed into salient features, cloud‑top images turned into storm‑evolution probabilities, and only the most valuable packets are queued for transmission. Inside crewed spacecraft, the device becomes an offline conversational assistant that references operating manuals, life‑support schematics, and medical guidelines, all stored locally to dodge any dependency on Deep Space Network scheduling. In high‑altitude drones surveying wildfires, the agent’s vision model detects flare‑ups and autonomously re‑tasks flight paths—crucial when latency to the ground station is measured in minutes, not seconds.
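Deciding which packets to queue under a fixed downlink budget is a 0/1 knapsack problem; a common lightweight heuristic is to rank packets by value per byte and fill the budget greedily. The packet names, sizes and value scores below are hypothetical, and greedy selection is an approximation rather than an optimal solver.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Packet:
    name: str
    size_bytes: int
    value: float  # mission-assigned importance score (hypothetical)


def select_for_downlink(packets: List[Packet], budget_bytes: int) -> List[str]:
    """Greedy 0/1 knapsack heuristic: highest value per byte first."""
    chosen: List[str] = []
    used = 0
    for p in sorted(packets, key=lambda p: p.value / p.size_bytes, reverse=True):
        if used + p.size_bytes <= budget_bytes:
            chosen.append(p.name)
            used += p.size_bytes
    return chosen


queue = [
    Packet("storm_probabilities", 2_000, 9.0),
    Packet("spectrometer_features", 5_000, 6.0),
    Packet("raw_cloud_image", 400_000, 10.0),
    Packet("housekeeping", 1_000, 2.0),
]
print(select_for_downlink(queue, budget_bytes=10_000))
# ['storm_probabilities', 'housekeeping', 'spectrometer_features']
```

The raw image carries the highest absolute score but the lowest value per byte, which is exactly the trade‑off on‑board pre‑filtering is meant to exploit.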

Mining & Oil Rigs

Underground mines and offshore platforms are notorious RF hellscapes: Wi‑Fi scatters off jagged rock faces, and salt mist corrodes antennae. The agent's mesh‑capable hotspot, however, can daisy‑chain through Ethernet‑tethered relay nodes and talk to plant equipment over industrial protocols such as Modbus TCP. Edge vibration analysis running on its NPU flags early bearing failures in conveyor belts; gas‑sensor arrays feed anomaly detectors that raise alarms before methane levels hit danger thresholds. If an evacuation ensues, an intrinsically safe battery pack keeps the unit lit, guiding crews along exit routes rendered on a low‑power e‑ink panel. Data remains within the mine's jurisdiction, which is critical in industries where proprietary ore‑body models or drilling logs are guarded trade secrets.
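Two classic time‑domain indicators of rolling‑bearing wear are RMS level and kurtosis: smooth running vibration has low kurtosis (about 3 for Gaussian‑like noise), while impacts from a damaged race produce sharp spikes that push kurtosis up. This sketch combines those two features with hypothetical thresholds; the article does not say which features the agent's NPU model actually uses.

```python
import math
from typing import List


def rms(x: List[float]) -> float:
    return math.sqrt(sum(v * v for v in x) / len(x))


def kurtosis(x: List[float]) -> float:
    """Non-excess kurtosis (fourth standardised moment); 3 for Gaussian noise."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    if m2 == 0:
        return 0.0  # constant signal: no impulsiveness to measure
    m4 = sum((v - mean) ** 4 for v in x) / n
    return m4 / (m2 * m2)


def bearing_alert(window: List[float], rms_limit: float, kurtosis_limit: float = 4.0) -> bool:
    """Flag a window whose overall level or impulsiveness exceeds its limit."""
    return rms(window) > rms_limit or kurtosis(window) > kurtosis_limit


# Synthetic accelerometer window: smooth 0.1 g vibration plus two sharp impacts.
smooth = [0.1 * math.sin(2 * math.pi * n / 20) for n in range(200)]
impacts = smooth[:]
impacts[50] = impacts[150] = 2.0
print(bearing_alert(smooth, rms_limit=0.5), bearing_alert(impacts, rms_limit=0.5))  # False True
```

In the synthetic example the overall RMS stays below its limit in both windows; only kurtosis reveals the impacts, which is why impulsiveness measures are useful for catching early‑stage bearing damage.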

Military & Peacekeeping

Electronic‑warfare scenarios often jam satellite navigation and spoof public networks. An offline AI analyst that consumes multispectral drone feeds, topographic data, and secure voice commands enables squads to maintain situational awareness without betraying their presence. For peacekeepers, the same platform can perform sentiment analysis on local radio chatter and flag escalation triggers in near real‑time. Hardware tamper switches and full‑disk encryption assure commanders that, if a device is lost, no sensitive doctrine or field intel leaks. Meanwhile, solar recharging removes the logistical bottleneck of battery resupply convoys, cutting both cost and risk in contested regions.

Maritime Shipping & Research Vessels

Cargo ships criss‑cross oceans under strict fuel budgets; satellite bandwidth is rationed for navigation and corporate e‑mail. An onboard agent augments bridge operations with predictive maintenance, scanning engine‑room sensor streams for vibration signatures that hint at cylinder scoring or lube‑oil dilution. On deck, a waterproof camera module feeds hull‑integrity models that detect rust blooms or ballast‑tank leaks. For oceanographers aboard survey vessels, offline marine‑life classifiers tag whale songs or map algal‑bloom boundaries without waiting for shore‑based data centres. All processing happens below deck, in a temperature‑controlled electronics bay powered by the vessel's own solar‑assisted micro‑grid.

Smart Manufacturing

Factories that fully embrace Industry 4.0 accumulate terabytes of high‑frequency sensor data per shift. Streaming it to the cloud is not only expensive, it exposes trade‑secret process variables. Mount the agent at each production cell: cameras inspect solder joints, microphones listen for gear backlash, and laser‑line scanners check part dimensions, all in situ. The language model ingests equipment manuals so technicians can query troubleshooting steps hands‑free. Because inference is confined to the shop floor, intellectual property stays behind the firewall, and latency for corrective actions drops below the cycle time of a robotic arm. Power loss triggers the device’s three‑second supercapacitor ramp‑down sequence, ensuring log integrity even during brownouts.

Outdoor STEM Education

Field trips often devolve into note‑taking exercises, with teachers later spending evenings transcribing observations. Hand each student group a compact agent: it runs a kid‑friendly conversational shell with curriculum‑aligned knowledge graphs stored locally. Children point a monocular camera at insects, mineral samples, or constellations; the agent identifies species, minerals, or star names and explains the underlying scientific principles in age‑appropriate language. No data connection means no distracting notifications, and parents can rest easy knowing pictures of their children never leave the device. A fold‑out solar leaf clipped to the back of a backpack keeps it running all weekend during camping expeditions.

Healthcare Outreach

Mobile clinics operating across sparsely populated regions carry ultrasound wands, blood‑glucose meters, and digital stethoscopes, but cloud AI consults are impractical there. Our agent accepts raw DICOM frames, stethoscope waveforms, or patient‑dialogue transcripts and performs risk scoring on‑device. Midwives in rural areas can classify foetal heart rate offline; community nurses translate treatment instructions into local dialects via the language model's embedded phrase tables. Battery life lasts an entire vaccination drive thanks to power gating that switches off the computer‑vision blocks during purely linguistic tasks. Encrypted patient records remain on the agent until crews return to a broadband‑equipped hospital, preserving confidentiality and chain of custody.

Personal Data Guardian

In an era of ubiquitous cameras and microphones, individuals increasingly crave digital autonomy. Clipped to a jacket pocket, the agent continuously transcribes conversations or captures lifelog snapshots, but everything is indexed and queried locally. Say “Show me the café where I met Anna last Tuesday,” and the device retrieves imagery and context in milliseconds, never uploading a byte to third‑party servers. It doubles as a privacy sentinel: when you press the mute button, the Wi‑Fi chip is physically disconnected, and a local recogniser lights an LED whenever it hears a wake word that would activate nearby smart speakers, offering a discreet warning that they may be listening.

Home Energy Resilience

With climate change amplifying grid instability, homeowners seek appliances that operate through outages. Docked on a kitchen counter, the agent orchestrates battery inverters, HVAC thermostats, and rooftop solar arrays without cloud APIs. When the mains drop, the device becomes the micro‑grid nerve centre, negotiating load‑shedding between refrigerator, freezer, and medical devices based on learned usage patterns. Because the algorithmic core requires mere watts, it runs happily off the same lithium‑iron‑phosphate bank that backs the refrigerator compressor, ensuring the control plane never dies before the payload. Family members still access voice assistance, calendar reminders, and recipe suggestions via a local Wi‑Fi link to their phones—valuable continuity when the neighbourhood is dark.

A Network of Possibilities, No Network Required

Across these twelve scenarios, a common thread emerges: critical work does not stop where bandwidth ends. By collapsing state‑of‑the‑art language, vision, and decision models into a palm‑sized, renewable‑powered computer, A‑Bots.com equips professionals, educators, explorers, and everyday families with a co‑pilot that respects privacy, survives rough handling, and never begs for a signal. The agent fades into the background, invisible yet deeply integrated, elevating outcomes from mine shafts to mountain peaks and from factory floors to kindergarten playgrounds. In the concluding section we connect this potential to A‑Bots.com's roadmap, culminating in the upcoming public prototype reveal and pre‑order window.

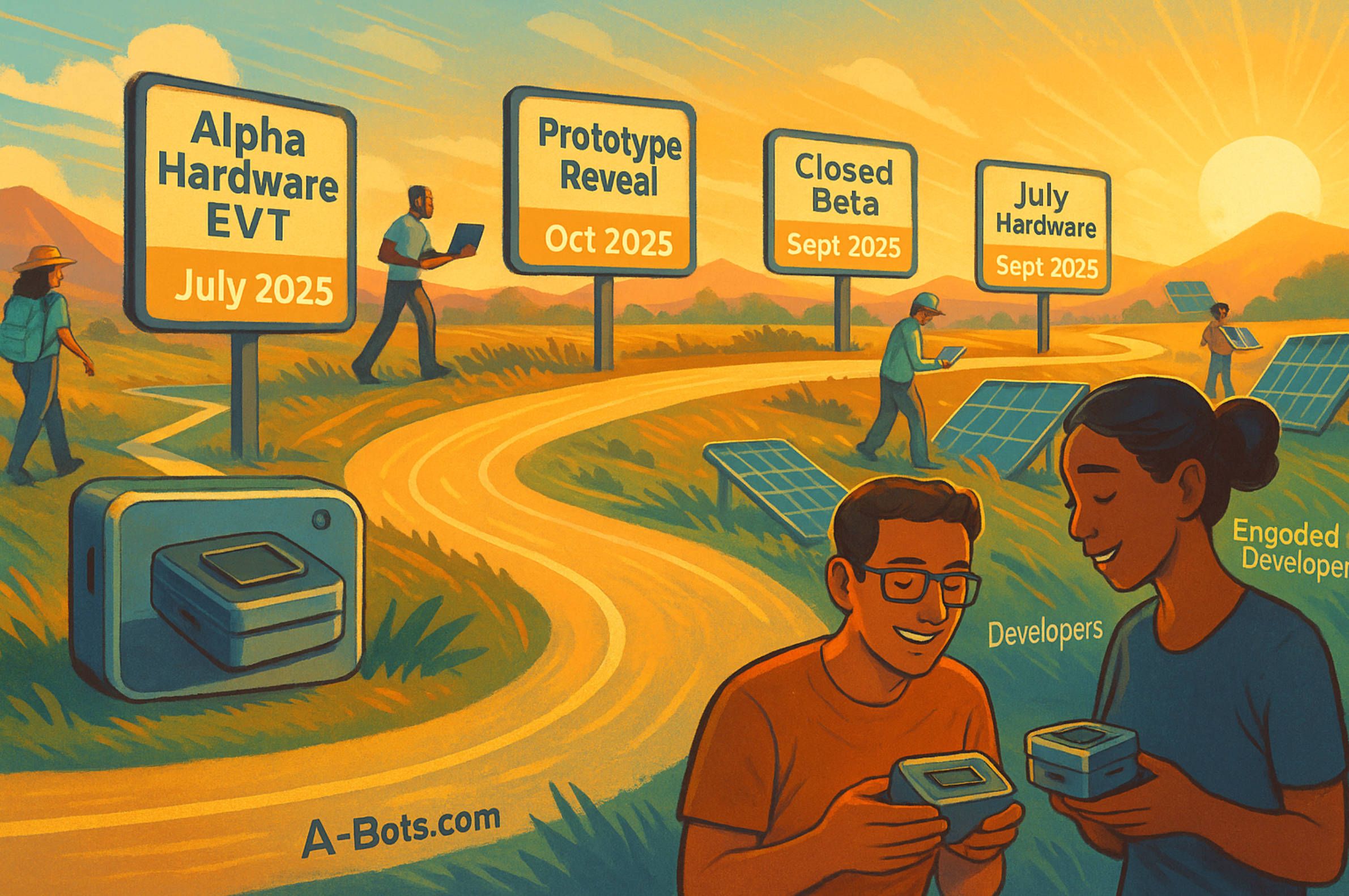

4. A‑Bots.com Mission & Next Steps

Why We Build

A‑Bots.com was founded on a simple conviction: intelligence should be as available as sunlight—everywhere, always, and owned by the people who use it. For a decade we have delivered custom mobile apps, industrial IoT stacks, and edge‑machine‑learning toolchains for clients who cannot afford downtime or data leakage. From predictive‑maintenance dashboards in wind farms to AR repair manuals for autonomous tractors, our work has one recurring theme: put the brains close to the problem. The offline agent is the logical culmination of that ethos—a pocket‑sized R&D lab that keeps working when the cloud clocks out.

What Sets Us Apart

Most headline wearables treat the cloud as an umbilical cord; pull the plug and functionality collapses. Our architecture flips the paradigm:

- Local First – Language, vision, and sensor fusion run entirely on device; connectivity is an optional bonus, not a crutch.

- Energy Agnostic – USB‑C, power bank, or a foldable solar sheet all drive the same workflows without user tweaks.

- Open Module Spec – Third parties can snap in new sensors or co‑processors, extending the agent’s lifespan beyond a single hardware cycle.

- Privacy by Design – Data never leaves the aluminium shell unless the owner explicitly green‑lights a transfer.

This uncompromising combination of autonomy, sustainability, and extensibility positions A‑Bots.com to lead a category that major incumbents have barely acknowledged.

Your Invitation

We are opening three pathways for early adopters:

- Field‑Tester Cohort – Apply if you run projects in remote healthcare, conservation, or crisis response. You’ll receive beta hardware, a direct Slack channel to our engineering leads, and the chance to shape feature priorities.

- Developer Program – Sign up for the SDK preview to port custom models or design new sensor modules. Participants appear in a featured partner directory at launch.

- Community Pre‑Order – Reserve a production unit with a refundable deposit and gain access to monthly behind‑the‑scenes webinars plus a private GitHub repo of design files.

The Next Signal

In the coming weeks we will publish a white‑paper detailing benchmark results, energy profiles, and security audits. If these updates matter to your mission—whether that mission is lifting crop yields in bandwidth deserts or safeguarding patient data in a rural clinic—join us. A‑Bots.com is not merely shipping hardware; we are building the connective tissue for a world where every person, drone, or sensor can think for itself. The signal is coming from the edge. Be ready when it reaches you.

✅ Hashtags

#OfflineAI

#EdgeAI

#SolarPowered

#OnDevice

#PrivacyTech

#EdgeComputing

#AIHardware

#ResilientTech

#ABots

#AIInnovation

Copyright © Alpha Systems LTD All rights reserved.
