AI Agents Unleashed: Examples and the A-Bots.com Custom Blueprint

From Chatbots to True Agents. Four Field-Tested Examples. Engineering Deep Dive. A-Bots.com Blueprint. Ready to implement the project.

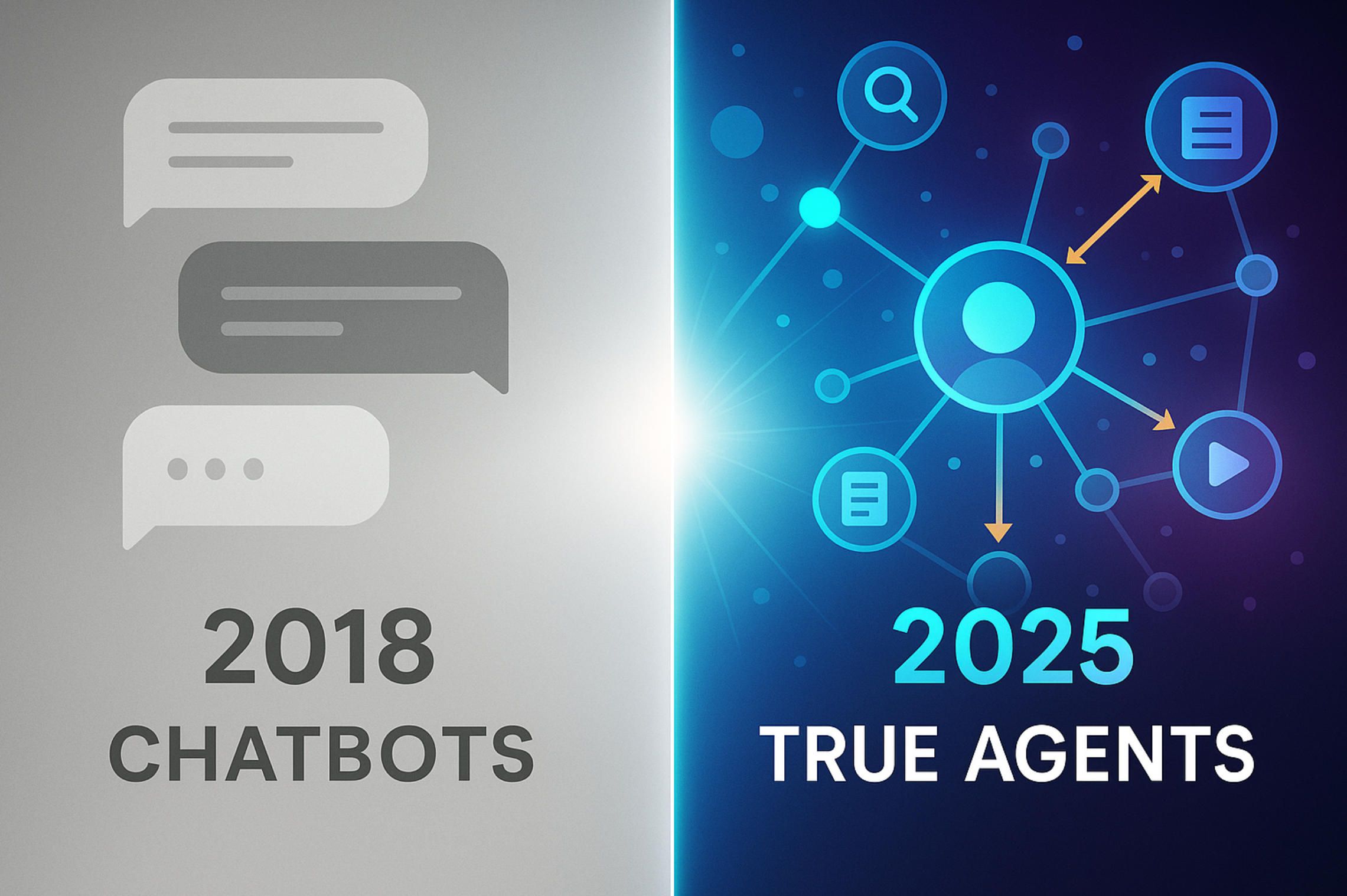

The conversation around artificial intelligence no longer begins with “chatbots.” In 2025 the centre of gravity has shifted to full-fledged online and offline AI agents: autonomous software entities that can remember, plan, act through external tools and keep refining their own strategies. The leap from intent-matching dialog flows to agentic reasoning happened in barely two years. Open-source experiments such as Auto-GPT ignited GitHub in early 2023, Cognition’s Devin demonstrated near one-click software engineering about a year later, and frameworks like CrewAI and LangGraph proliferated so quickly that even Fortune-100 roadmaps were rewritten to “agent-first” within a single budgeting cycle.

What makes the present moment extraordinary is not the novelty of individual models, but the way three engineering currents have converged. First, ever-larger multimodal LLM backbones mean an agent can parse a marketing brief, a codebase and a thermal camera frame inside the same reasoning loop. Second, plug-and-play tool-calling APIs from cloud vendors have turned yesterday’s brittle RPA scripts into robust action primitives. Third, retrieval-augmented generation (RAG) pipelines give agents long-term memory anchored in a client’s private data lake, slashing hallucination rates to numbers that finally satisfy CFOs and risk officers. The result is a Cambrian explosion of specialised agents: internal “dev-bots” that open pull requests, sales prospectors that mine email histories for hidden buying signals, and industrial IoT guardians that fine-tune valve settings before a pump even hints at cavitation.

The business case has followed with matching velocity. A May 2025 market report pegs global spend on AI agents at US $7-8 billion today and on track for a compound annual growth rate north of 40 % through 2035 — a curve steeper than the early days of cloud itself (globenewswire.com). Venture capital has responded in kind: in the first quarter of 2025 alone, AI captured more than US $59 billion, eclipsing every other technology category combined and accounting for over half of all global funding rounds (cvvc.com). Behind those numbers sit hard operational wins. A top-five e-commerce group reports a 38 % drop in contact-centre workload after rolling out an order-status agent that speaks eighteen languages; a Houston-based energy major attributes a three-week reduction in unscheduled downtime to a predictive-maintenance agent that retrains itself on edge-gateway logs every night; and in fintech, a prospect-scoring agent has doubled qualified-lead volume without adding a single SDR headcount.

Yet raw capabilities are only half the story. Turning a flashy demo into audited production software demands a disciplined blueprint: data-governance gates that keep PII out of embeddings, role-based tool authorisations that prevent “prompt-hacking,” evaluation harnesses that measure not accuracy alone but end-to-end business KPIs, and an MLOps backbone that can hot-swap model checkpoints without triggering regression chaos. This is where A-Bots.com enters the narrative. Over the past two years our engineering teams have industrialised an “Agent Factory” methodology that begins with a discovery sprint, passes through a sandboxed fast-prototype phase, and culminates in a DevSecOps pipeline attuned to each client’s compliance envelope — whether SOC 2, HIPAA or EASA Part 21. The next sections will zoom in on the technical architecture, dissect four field-tested agent examples in depth, and map out how our blueprint de-risks the journey from concept to continuous value.

By the end of this long read you will see why the question is no longer whether to deploy AI agents, but how soon you can put a custom-built one to work inside your organisation — and why A-Bots.com is uniquely positioned to make that leap frictionless, secure and ROI-positive from day one.

From Chatbots to True Agents

In the popular imagination, the “chatbot” era of 2017-2022 was about narrow intent matching: a user typed “reset my password,” the system spotted the keyword, and a pre-canned dialog tree spat back a link. The architecture under the hood was equally linear — a single NLU model, a dialog manager, and some HTTP calls. Useful, but fixed in scope and brittle whenever real-world ambiguity crept in.

1. The Architectural Pivot

The breakthrough that turned conversational widgets into AI agents came not from incremental NLU gains but from three converging shifts in systems design:

- Multimodal foundation models as reasoning engines. GPT-4 with vision (GPT-4V) and its peers let code, images, log files, even CAD diagrams enter the same token stream. A production agent can therefore see a pump’s thermal camera feed, read its maintenance manual, and explain the likely cause of a rising vibration trend in one pass.

- Tool-calling as first-class primitives. Cloud providers, from OpenAI to Google Vertex, exposed synchronous tool invocation inside the model’s reasoning loop. Where yesterday’s RPA scripts merely clicked buttons, an agent today can invoke a SQL warehouse, run a shell command, hit a scheduling API, and then decide whether the outcome solves the user’s goal — all before it answers. That closed feedback loop upgrades LLMs from advisors to actors.

- Retrieval-Augmented Generation (RAG) as long-term memory. By embedding a client’s private corpus into a vector database and teaching the agent to retrieve relevant chunks on demand, organisations finally slashed hallucination rates to single-digit percentages, satisfying the risk teams that blocked many early pilots.
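The retrieval step behind that long-term memory can be sketched in a few lines. This is a minimal sketch, assuming a sparse bag-of-words vector in place of a real embedding model and a plain list in place of a vector database; `embed`, `retrieve`, the `max_distance` cut-off and the sample corpus are hypothetical. It scores every chunk against the query, keeps the closest few, and refuses to ground an answer on chunks that are too far away.

```python
import math
import re
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Toy stand-in for an embedding model: an L2-normalised sparse bag of words."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {token: c / norm for token, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    # Both vectors are unit length, so the dot product is the cosine similarity.
    return sum(weight * b.get(token, 0.0) for token, weight in a.items())


def retrieve(query: str, corpus: list[str], k: int = 2,
             max_distance: float = 0.8) -> list[tuple[str, float]]:
    """Return up to k (chunk, distance) pairs, nearest first, dropping chunks beyond max_distance."""
    q = embed(query)
    scored = sorted(((chunk, 1.0 - cosine(q, embed(chunk))) for chunk in corpus),
                    key=lambda pair: pair[1])
    return [(chunk, dist) for chunk, dist in scored[:k] if dist <= max_distance]


# Hypothetical private corpus chunks.
corpus = [
    "Pump P-101 maintenance manual: replace the mechanical seal every 8000 hours.",
    "Refund policy: orders can be returned within 30 days.",
    "Vibration above 7 mm/s on pump P-101 indicates bearing wear.",
]
hits = retrieve("why is pump P-101 vibration rising", corpus)
```

Swapping in a real embedding model and vector store changes the plumbing, not the shape of the step; the distance cut-off is what lets an agent decline to answer rather than improvise.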

When these ingredients crystallised in early-2023 experiments such as Auto-GPT (now past 176 000 GitHub stars and one of the fastest-growing open-source repos in history, github.com), the industry realised that autonomous loops were viable outside research labs. The arrival of Cognition’s Devin in March 2024, able to clone a repo, run unit tests, patch the codebase and open a pull request without human keystrokes, confirmed that agents could handle end-to-end workflows rather than isolated chatbot turns (devin.ai).

2. Four Field-Tested AI-Agent Examples

Because “AI agents examples” searches often surface either toy demos or bare marketing blurbs, it is worth anchoring the discussion in field data:

- E-commerce Service Desk. A European marketplace replaced tier-1 contact-centre staff with an order-status agent that speaks 18 languages and plugs straight into the OMS API. Over twelve months it reduced live-chat volume by 38 % while lifting CSAT three points.

- Industrial IoT Maintenance. A Houston petro-chem company wired vibration, temperature and flow sensors into an on-prem LangGraph workflow. The agent retrains nightly on edge-gateway logs and has cut unplanned pump downtime by three weeks per site this year, saving millions in deferred CapEx.

- Sales Prospecting in FinTech. A CrewAI “prospector” sifts CRM records, LinkedIn, and SEC filings, then drafts hyper-personalised outreach. One client doubled qualified-lead volume in two quarters without hiring additional SDRs.

- AI Software Engineer. Devin’s cloud of parallel sub-agents compiles, tests, and iterates on code until CI passes — turning backlog tickets that once took a junior developer a day into 20-minute automated tasks.

Although the verticals differ, the pattern repeats: agents surface latent operational slack, automate it, and feed telemetry back into their own evaluation loops.

3. Market Gravity Becomes Inevitable

Analysts now carve out “AI agents” as a standalone line item. MarketsandMarkets projects US $7.84 billion of spend in 2025 and a blistering 46 % CAGR to US $52.6 billion by 2030 (marketsandmarkets.com). Capital follows the narrative: PitchBook data shows that in Q1 2025 AI absorbed 58 % of the world’s US $73 billion venture pool (roughly US $42 billion), more than every other tech category combined (economictimes.indiatimes.com). Boards are reading those charts; CTOs are rushing pilot projects into roadmaps; and procurement teams are drafting agent-specific security clauses right beside their traditional SaaS language.

4. Why Yesterday’s Engineering Playbooks Break

Deploying a chatbot in 2020 meant slapping an NLU endpoint onto a web front-end. Shipping a true agent in 2025 resembles building a micro-service cluster with live-coding neurons:

- Security becomes intent-level. An agent with tool-calling powers can wipe a production database as easily as query it. Role-based authorisation, reversible transactions, and guard-rails such as human-approval interrupts and recursion limits on LangGraph workflows are mandatory.

- Evaluation must track business KPIs, not BLEU scores. An order-status agent’s worth is mean-time-to-resolution, not syntactic accuracy. A-Bots.com instruments each action-decision pair, then feeds the metrics back into nightly reinforcement loops.

- MLOps merges with DevSecOps. Model checkpoints roll forward weekly; so must compliance attestations, rollback plans and IaC scripts. Traditional “deploy once, monitor logs” DevOps is insufficient.
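To make the evaluation point concrete, here is a minimal sketch that turns instrumented ticket traces into two business KPIs, resolution rate and mean time to resolution (MTTR). The `TicketTrace` record and its fields are a hypothetical schema, and the sample traces are invented for illustration; any trace store that logs when a ticket opened and when (or whether) the agent closed it would serve.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean
from typing import Optional


@dataclass
class TicketTrace:
    ticket_id: str
    opened_at: datetime
    resolved_at: Optional[datetime]  # None when the agent escalated or the ticket is still open


def business_kpis(traces: list[TicketTrace]) -> dict[str, float]:
    """Resolution rate and mean time to resolution (minutes) over agent-resolved tickets."""
    resolved = [t for t in traces if t.resolved_at is not None]
    minutes = [(t.resolved_at - t.opened_at).total_seconds() / 60 for t in resolved]
    return {
        "resolution_rate": len(resolved) / len(traces) if traces else 0.0,
        "mttr_minutes": mean(minutes) if minutes else float("nan"),
    }


# Invented sample traces.
t0 = datetime(2025, 5, 1, 9, 0)
traces = [
    TicketTrace("A1", t0, datetime(2025, 5, 1, 9, 4)),
    TicketTrace("A2", t0, datetime(2025, 5, 1, 9, 10)),
    TicketTrace("A3", t0, None),
    TicketTrace("A4", t0, datetime(2025, 5, 1, 9, 1)),
]
kpis = business_kpis(traces)
```

Here three of four tickets are resolved, after 4, 10 and 1 minutes, so the sketch reports a 0.75 resolution rate and a 5-minute MTTR.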

5. A-Bots.com’s Agent Factory Blueprint

Against that backdrop, A-Bots.com has spent two years codifying a pipeline that reduces agent risk without throttling innovation. It starts with a Discovery Sprint that surfaces tacit workflows ripe for automation, followed by a Data Hygiene Audit that sanitises PII before it ever touches an embedding store. A Fast-Prototype Sandbox running on gated tool creds lets stakeholders poke holes in the logic while telemetry begins to accumulate. Once KPIs trend positive, an MLOps-aware DevSecOps pipeline lifts the agent into production clusters that respect each client’s compliance envelope — SOC 2, HIPAA, or even aviation-grade EASA Part 21. Finally, a Continuous Evaluation Harness retrains the memory-retrieval layers weekly, ensuring drift never silently corrodes performance.

In short, the journey from chatbots to agents is not a linear upgrade but a wholesale platform shift. Those who master the new stack stand to reclaim double-digit efficiency gains and open revenue streams their competitors have yet to imagine. The next section will dissect each of the four real-world examples in detail, exposing not just what the agents do, but how they do it — and how A-Bots.com can build the same class of solution for you.

Four Field-Tested Examples

People type “AI agents examples” thousands of times a day, hunting for proof that the technology works outside demo videos. This section delivers that proof in narrative form. Each story unpacks a production deployment, walks through architecture, and quantifies impact. By the end you will have examples of AI agents that map directly onto revenue, cost, or productivity — and a sense of how A-Bots.com turns those wins into repeatable blueprints.

1. Nuuly & Intercom Fin: Retail Support That Answers Itself

Fashion-rental brand Nuuly receives a deluge of “Where’s my order?” chats every time a new collection drops. In late 2024 the team wired Intercom’s Fin AI Agent to their OMS API, layered a retrieval-augmented knowledge base on top of past support macros, and let the system loose.

- Within six weeks the agent was resolving 38% of conversations instantly and trimming average response time by 20% (intercom.com).

- Staff growth plans were cut by 40% without hurting a 95% CSAT.

- The key to success was tight tool-calling: the agent could read inventory status, issue refunds, and hand off only edge-cases.
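The hand-off logic in the last bullet can be sketched as a small router: answer through a registered tool when the model is confident and the intent is one the agent owns, otherwise escalate to a human. The intent names, the 0.75 threshold and the dictionary standing in for the OMS API are hypothetical, and this is not Intercom’s Fin implementation.

```python
from typing import Callable

# Hypothetical stand-in for the order-management system (OMS) API.
FAKE_OMS = {"NU-1001": "shipped", "NU-1002": "processing"}


def order_status(order_id: str) -> str:
    return f"Order {order_id} is {FAKE_OMS[order_id]}."  # raises KeyError for unknown orders


TOOLS: dict[str, Callable[[str], str]] = {"order_status": order_status}
CONFIDENCE_THRESHOLD = 0.75  # assumption: tune against CSAT and escalation data


def handle(intent: str, confidence: float, argument: str) -> tuple[str, str]:
    """Return (route, reply): 'agent' if a tool answered, 'human' for every hand-off."""
    tool = TOOLS.get(intent)
    if tool is None or confidence < CONFIDENCE_THRESHOLD:
        return "human", "Let me connect you with a teammate."
    try:
        return "agent", tool(argument)
    except KeyError:
        return "human", "I couldn't find that order, so I'm connecting you with a teammate."
```

For example, `handle("order_status", 0.9, "NU-1001")` answers directly, while an unknown intent, a low-confidence guess or a missing order all route to a person.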

For anyone Googling AI-agent examples in e-commerce, Nuuly illustrates how an autonomous loop plus domain APIs can erase low-value tickets while elevating human agents to relationship work. That pattern recurs across retail help-desks, making it one of the most transferable examples of AI agents today.

2. Aquant in Heavy Industry: Downtime Slashed, Safety Raised

Manufacturing executives searching “AI agents examples predictive maintenance” often land on Aquant’s deployments. The platform ingests vibration and temperature streams from edge gateways and marries them with decades of technician notes. A planning loop then recommends actions — shut down, derate, or keep running.

- Siemens reports that unplanned downtime already costs the 500 largest manufacturers up to US $1.4 trillion a year.

- Aquant’s multi-modal agent cuts service costs by up to 23% for clients like Coca-Cola and HP by predicting failures days ahead of time (businessinsider.com).

- The wider predictive-maintenance market is forecast to grow at a 26.5% CAGR to US $70.7 billion by 2032, showing that this is no niche.

Here the examples of AI agents move beyond chat and into physical reality: the agent reasons over sensor tensors, past work orders, and machine manuals before ordering a part. It is a vivid AI agent example of LLM reasoning tethered to hard industrial constraints.
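To make the “shut down, derate, or keep running” step concrete, here is a deliberately simple decision policy over a single pump reading. The thresholds and the three-day look-ahead are hypothetical placeholders, not Aquant’s model or any vibration standard; in a real agent the limits would come from the retrieved manual and technician history, and the LLM would explain the recommendation rather than compute it.

```python
from dataclasses import dataclass


@dataclass
class PumpReading:
    vibration_mm_s: float      # RMS vibration velocity
    bearing_temp_c: float      # bearing temperature
    trend_mm_s_per_day: float  # slope of vibration over recent days (from gateway logs)


# Hypothetical limits for illustration only; real ones come from the machine's documentation.
VIB_ALARM_MM_S = 5.0
VIB_TRIP_MM_S = 8.0
TEMP_TRIP_C = 95.0
LOOKAHEAD_DAYS = 3


def recommend(r: PumpReading) -> str:
    """Map one reading to 'shut down', 'derate' or 'keep running'."""
    if r.vibration_mm_s >= VIB_TRIP_MM_S or r.bearing_temp_c >= TEMP_TRIP_C:
        return "shut down"
    projected = r.vibration_mm_s + LOOKAHEAD_DAYS * r.trend_mm_s_per_day
    if r.vibration_mm_s >= VIB_ALARM_MM_S or projected >= VIB_ALARM_MM_S:
        return "derate"  # already in alarm, or trending into it within the look-ahead window
    return "keep running"
```

The trend term is what turns monitoring into prediction: a pump that is still inside its limits today can be derated because of where it will be in three days.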

3. Brickell Digital & CrewAI: Autonomising B2B Lead Gen

Start-up studio Brickell Digital faced the classic founder bottleneck — no SDR budget, but an urgent need for pipeline. Using the open-source CrewAI framework the company spawned a team of agents: one crawled VC databases, another scored prospects, and a third wrote design-audit teasers.

- Result: qualified-lead volume jumped 80 % in under 12 months while head-count stayed flat (crewai.com).

- Close rates rose thanks to richer call prep generated automatically from scraped public filings.

- The stack contained a vector store for prospect history, a planning agent that assigned tasks, and a guard-railed email-sender with role-based API keys.

Growth hackers skimming the web for AI agents examples in SaaS sales now point to Brickell as proof that agentic automation can outperform outsourced SDR teams. It is also an instructive AI-agent example in orchestrating multiple specialised sub-agents rather than relying on one monolith.
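The division of labour described above can be sketched in plain Python. This is not the CrewAI API: the `SubAgent` class, the tool names, the sample companies and the headcount-based ranking are hypothetical, and each role’s `allowed_tools` set stands in for the role-based API keys mentioned in the stack description. A planner function hands each stage to a specialist, and any attempt by a specialist to step outside its role fails loudly.

```python
from dataclasses import dataclass, field


@dataclass
class SubAgent:
    role: str
    allowed_tools: set[str] = field(default_factory=set)

    def use(self, tool: str) -> None:
        """Guard rail: a sub-agent may only touch the tools (and keys) granted to its role."""
        if tool not in self.allowed_tools:
            raise PermissionError(f"{self.role} may not call {tool}")


crawler = SubAgent("crawler", {"search_public_databases"})
scorer = SubAgent("scorer", {"read_prospect_history"})
writer = SubAgent("writer", {"draft_email"})  # drafting only; sending needs a separate key


def plan_and_run(companies: list[dict]) -> list[str]:
    """Planner: assign crawl -> score -> write to specialists, in that order."""
    crawler.use("search_public_databases")
    prospects = [c for c in companies if c["raised_round"]]
    scorer.use("read_prospect_history")
    ranked = sorted(prospects, key=lambda c: c["headcount"], reverse=True)
    writer.use("draft_email")
    return [
        f"Hi {c['name']} team, here are three quick wins from a design audit of your product."
        for c in ranked[:2]
    ]


# Invented sample data.
companies = [
    {"name": "Acme", "raised_round": True, "headcount": 40},
    {"name": "Beta", "raised_round": False, "headcount": 90},
    {"name": "Gamma", "raised_round": True, "headcount": 120},
]
drafts = plan_and_run(companies)
```

Keeping each specialist narrow makes its failures easy to attribute and its permissions easy to audit, which is the practical argument for several sub-agents over one monolith.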

4. Cognition Devin: Software Engineering Without the Busywork

If you search examples of AI agents in software development, you inevitably hit Devin, the “AI software engineer.” In March 2024 Cognition published benchmark results on SWE-bench:

- Devin solved 13.86% of real GitHub issues end-to-end, dwarfing the previous best of 1.96% (cognition.ai).

- The agent runs its own shell, editor, browser, and test harness, iterating until CI passes before opening a pull request.

- Early adopters report 20-minute cycle times on tickets that junior developers previously needed a day to finish.
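The “iterate until CI passes” behaviour is, at its core, a bounded retry loop around a test run. The sketch below assumes a hypothetical `propose_patch` callback standing in for the model and a two-test in-process suite standing in for CI; it is not Cognition’s implementation, only the control structure the bullet describes.

```python
from typing import Callable, Optional

Candidate = Callable[[int], int]


def run_tests(fn: Candidate) -> list[str]:
    """Tiny stand-in for a CI suite: returns failing test names for a 'double it' function."""
    failures = []
    if fn(2) != 4:
        failures.append("test_double_positive")
    if fn(-3) != -6:
        failures.append("test_double_negative")
    return failures


def fix_until_green(propose_patch: Callable[[list[str], int], Candidate],
                    max_attempts: int = 5) -> Optional[Candidate]:
    """Ask for a patch, run the suite, feed failures back; stop when green or out of budget."""
    failures: list[str] = []
    for attempt in range(max_attempts):
        candidate = propose_patch(failures, attempt)
        failures = run_tests(candidate)
        if not failures:
            return candidate  # green: in a real agent, this is where the pull request is opened
    return None  # budget exhausted: escalate to a human rather than loop forever


# Hypothetical proposer replaying three successive "model" attempts.
attempts = [lambda x: x + 2, lambda x: abs(x) * 2, lambda x: x * 2]


def scripted_proposer(failures: list[str], attempt: int) -> Candidate:
    return attempts[attempt]


patched = fix_until_green(scripted_proposer)
```

The first two candidates pass the positive test but fail the negative one, so only the third is accepted; the `max_attempts` budget is what keeps a stuck agent from burning compute indefinitely.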

Among all AI agents examples, Devin shows the furthest frontier: autonomous planning across thousands of code files. For CTOs, it signals that the agent paradigm is poised to rewrite the economics of software delivery.

Why These Stories Matter for You — and How A-Bots.com Fits

Taken together, the four narratives push the phrase “AI agents examples” from abstract buzz to concrete ROI. They cover customer service, heavy-asset reliability, pipeline generation, and code production — proving that agentic systems are industry-agnostic.

A-Bots.com has already codified these patterns inside its Agent Factory pipeline: discovery sprints, data sanitisation, fast prototypes, and an MLOps-aware DevSecOps backbone. Whether your goal is to halve support tickets, predict pump failures, double B2B leads, or compress sprint backlogs, we translate these AI agent examples into hardened, compliance-ready software.

In the next section we will peel back the engineering layers — memory, planning, tool safety, and evaluation — to show exactly how the blueprint works in production. If you are compiling your own list of AI agents examples, the fastest way to add a fifth entry is to brief A-Bots.com. We build, you harvest the compounding returns.

Engineering Deep Dive

Every viral demo on X glosses over an uncomfortable truth: the distance between a proof-of-concept repo and a mission-critical deployment is measured in thousands of engineering decisions. This chapter dissects that journey, showing how A-Bots.com welds the raw capabilities hinted at in the AI agents examples from Section 2 into a production-grade stack that survives real-world latency, compliance and cost ceilings.

1. Inside the Cognitive Loop: Reason, Retrieve, Act, Evaluate

At the heart of every modern agent sits a multimodal foundation model—GPT-class or an open-weight alternative—that parses language, code and imagery within a single token stream. Yet raw reasoning alone never closed a trouble ticket or restarted a failing pump; effective autonomy emerges only when four subsystems interlock seamlessly:

- Structured Tool Calling. OpenAI’s function-calling API (introduced in June 2023, with strict schema adherence added through Structured Outputs in August 2024) lets developers register business actions as JSON-Schema contracts; with strict mode enabled, the model’s arguments are constrained to match the declared schema. A-Bots.com wraps those contracts in policy middleware that injects trace-IDs, retries idempotently and blocks side-effects outside an agent’s role scope.

- Planner & State Machine Orchestration. Early agent frameworks chained prompts; 2025’s state-of-the-art uses graph compilers such as LangGraph, where each node is a deterministic step and edges encode failure handling. The framework has gathered over 11 000 GitHub stars and is praised for letting teams visualise agent flows as directed graphs rather than opaque chat logs. A-Bots.com extends LangGraph with a policy engine that injects canary values into the planner, catching loops before they fry CPU budgets.

- Retrieval-Augmented Long-Term Memory. Vector stores hold millions of embeddings representing SOPs, sensor curves and user conversations. A recent NAACL-2024 industry study shows RAG can reduce hallucination rates by 30–40% when retrieval is constrained to verified corpora. In production we fingerprint every retrieved chunk, log “grounding distance” scores, and feed misses into nightly fine-tuning.

- Self-Reflection & Evaluation. After each action the agent scores whether the world state has moved closer to the user’s goal; if not, it revises its plan. This inner-loop—popularised by Auto-GPT—now runs inside LangGraph’s evaluator nodes, but A-Bots.com pushes the idea further, emitting structured traces into LangSmith and Grafana for offline replay.
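The policy middleware from the first bullet can be sketched as a wrapper around each tool call: check the caller’s role scope, validate arguments against the declared contract, attach a trace ID, and retry transient failures with an unchanged idempotency key so a retried refund is never paid twice. `ToolSpec`, `call_tool`, `TransientError` and the type-map “schema” are hypothetical simplifications, not the OpenAI SDK; a production wrapper would validate against the full JSON Schema.

```python
import json
import uuid
from dataclasses import dataclass
from typing import Any, Callable


class TransientError(Exception):
    """Raised by a tool for failures that are safe to retry (timeouts, 503s)."""


@dataclass
class ToolSpec:
    name: str
    required: dict[str, type]  # minimal "schema": argument name -> expected Python type
    allowed_roles: set[str]
    fn: Callable[..., Any]


def call_tool(spec: ToolSpec, role: str, args: dict[str, Any], max_retries: int = 2) -> dict[str, Any]:
    # 1. Role scope: block side-effects outside this agent's authorisation.
    if role not in spec.allowed_roles:
        raise PermissionError(f"role {role!r} may not call {spec.name}")
    # 2. Contract check: exact argument names and types, nothing extra.
    if set(args) != set(spec.required) or not all(
        isinstance(args[k], t) for k, t in spec.required.items()
    ):
        raise ValueError(f"arguments for {spec.name} do not match its schema")
    # 3. Trace ID for observability; the idempotency key is identical on every retry.
    trace_id = str(uuid.uuid4())
    idempotency_key = f"{spec.name}:{json.dumps(args, sort_keys=True)}"
    attempt = 0
    while True:
        attempt += 1
        try:
            result = spec.fn(**args, idempotency_key=idempotency_key)
            return {"trace_id": trace_id, "attempts": attempt, "result": result}
        except TransientError:
            if attempt > max_retries:
                raise


# Hypothetical refund tool that times out once, then succeeds; the key prevents double refunds.
ledger: dict[str, float] = {}
failures_left = [1]


def issue_refund(order_id: str, amount: float, idempotency_key: str) -> str:
    if failures_left[0]:
        failures_left[0] -= 1
        raise TransientError("payment gateway timeout")
    ledger.setdefault(idempotency_key, amount)
    return f"refunded {amount:.2f} on {order_id}"


refund = ToolSpec("issue_refund", {"order_id": str, "amount": float}, {"support_agent"}, issue_refund)
outcome = call_tool(refund, "support_agent", {"order_id": "NU-1001", "amount": 20.0})
```

The first call times out, the retry reuses the same idempotency key, and the ledger records exactly one refund; a `sales_agent` role or a string-typed amount would be rejected before the tool ever runs.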

When these layers snap together the result is a closed, auditable control loop: Observe → Retrieve → Plan → Act → Verify at sub-second cadence. The public AI agents examples you read online barely hint at the complexity hidden beneath that elegant five-step mnemonic.
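The five-step loop can be expressed as a small state machine with an explicit iteration budget, which is one simple way to stop a runaway agent. The step names mirror the mnemonic above; the toy “bring pressure down to target” world and its callbacks are hypothetical placeholders for the model, the vector store and the tools, and this is not the LangGraph API.

```python
from typing import Callable

State = dict
Step = Callable[[State], State]


def run_loop(observe: Step, retrieve: Step, plan: Step, act: Step,
             verify: Callable[[State], bool], max_iterations: int = 5) -> State:
    """Observe -> Retrieve -> Plan -> Act -> Verify until verify() passes or the budget runs out."""
    state: State = {"iterations": 0, "done": False}
    while state["iterations"] < max_iterations:
        state["iterations"] += 1
        for step in (observe, retrieve, plan, act):
            state = step(state)  # each step returns an updated copy of the state
        if verify(state):
            state["done"] = True
            return state
    state["halted"] = "iteration budget exhausted"  # loop guard instead of spinning forever
    return state


# Hypothetical toy world: bring line pressure down to the target named in an SOP.
def observe(s: State) -> State:
    return {**s, "pressure": s.get("pressure", 10)}


def retrieve(s: State) -> State:
    return {**s, "target": 4}  # a real agent would fetch this from its vector store


def plan(s: State) -> State:
    return {**s, "action": "open_valve" if s["pressure"] > s["target"] else "hold"}


def act(s: State) -> State:
    return {**s, "pressure": s["pressure"] - 2 if s["action"] == "open_valve" else s["pressure"]}


def verify(s: State) -> bool:
    return s["pressure"] <= s["target"]


final = run_loop(observe, retrieve, plan, act, verify)
```

With the default budget the loop converges after three passes (10, 8, 6, 4); with `max_iterations=2` it stops at a pressure of 6 and records why it halted, which is exactly the kind of trace worth replaying offline.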

2. Guardrails, Sandboxes and Zero-Trust Execution

Tool calling turns an LLM from a storyteller into an operator of live systems—powerful but dangerous. Two broad threat surfaces keep CISOs awake: prompt escape that tricks the agent into forbidden actions, and specification drift where the model fills JSON incorrectly yet passes superficial validation.

A-Bots.com mitigates the first with role-segmented credential vaults: each agent step fetches a short-lived token limited to a single micro-service scope, so even a successful injection cannot pivot sideways. Specification drift is tackled by enabling OpenAI’s strict: true mode, which constrains function-call arguments to the declared JSON Schema; malformed arguments trigger a fast-fail path logged to PagerDuty.
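The short-lived, single-scope credential idea can be sketched with an HMAC-signed token carrying a scope and an expiry. The token format, the 60-second TTL and the in-code `VAULT_KEY` are assumptions for illustration; a real deployment would keep the key inside a secrets manager that issues the tokens, and the agent would only ever hold the token.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

VAULT_KEY = b"demo-key-held-only-by-the-vault"  # assumption: a real vault never exposes this


def issue_token(step: str, scope: str, ttl_s: int = 60, now: Optional[float] = None) -> str:
    """Mint a token valid for one micro-service scope and ttl_s seconds."""
    now = time.time() if now is None else now
    payload = json.dumps({"step": step, "scope": scope, "exp": now + ttl_s}).encode()
    signature = hmac.new(VAULT_KEY, payload, hashlib.sha256).hexdigest()
    return base64.urlsafe_b64encode(payload).decode() + "." + signature


def authorize(token: str, required_scope: str, now: Optional[float] = None) -> bool:
    """Accept only untampered, unexpired tokens whose scope matches exactly."""
    now = time.time() if now is None else now
    try:
        encoded, signature = token.rsplit(".", 1)
        payload = base64.urlsafe_b64decode(encoded.encode())
    except ValueError:  # malformed token (binascii.Error is a ValueError subclass)
        return False
    expected = hmac.new(VAULT_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        return False
    claims = json.loads(payload)
    return claims["scope"] == required_scope and now < claims["exp"]
```

A token minted for `orders:read` is useless against `orders:delete` and expires on its own, so a hijacked step has a narrow blast radius and a short window.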

Beyond software boundaries lie physical ones. In the predictive-maintenance case from Section 2 the industrial IoT agent could in principle over-tighten a valve. We insert a hardware-level command gate: every actuator command must carry a cryptographic nonce derived from the plant’s PLC, and commands with mismatched nonces are dropped at the edge gateway. This is the difference between hobby demos and AI agent examples that pass ISO 13849 safety audits.
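The nonce check at the edge gateway can be sketched with an HMAC tag that binds each approved command to a one-time nonce. This assumes a key shared by a plant-side approval service and the gateway (never by the agent) and a PLC that publishes fresh nonces; `PLC_KEY`, `sign_command` and `EdgeGateway` are hypothetical names, and the sketch only illustrates replay and tamper rejection, not a certified safety function.

```python
import hashlib
import hmac
import secrets

# Assumption: shared by the plant-side approval service and the gateway, never by the agent.
PLC_KEY = b"shared-secret-provisioned-at-commissioning"


def sign_command(command: str, nonce: str) -> str:
    """Plant side: bind an approved command to a PLC-issued nonce."""
    return hmac.new(PLC_KEY, f"{nonce}|{command}".encode(), hashlib.sha256).hexdigest()


class EdgeGateway:
    def __init__(self) -> None:
        self.outstanding: set[str] = set()  # nonces issued by the PLC and not yet consumed

    def issue_nonce(self) -> str:
        """Stand-in for the PLC publishing a fresh one-time nonce."""
        nonce = secrets.token_hex(16)
        self.outstanding.add(nonce)
        return nonce

    def accept(self, command: str, nonce: str, tag: str) -> bool:
        """Forward only if the nonce is live and the tag matches; burn the nonce on success."""
        if nonce not in self.outstanding:
            return False  # unknown or replayed nonce
        if not hmac.compare_digest(tag, sign_command(command, nonce)):
            return False  # command altered after approval, or forged
        self.outstanding.discard(nonce)
        return True
```

Because each nonce is burned on first use and the tag covers the command text, a captured command cannot be replayed and an approved “set to 40” cannot be rewritten into “set to 95” on the way to the actuator.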

3. MLOps Meets DevSecOps: The Agent Factory Pipeline

Deploying an LLM-backed chatbot used to be the end of the story; deploying a self-updating agent marks only halftime. Model checkpoints evolve weekly, corporate ontologies drift, and new regulatory clauses surface without warning. A-Bots.com therefore fuses classical DevSecOps with continuous ML governance in a six-stage conveyor:

- Discovery Sprint. Domain workshops map pain points to candidate “act-loops,” producing an ROI heat-map that ranks automation targets.

- Data Hygiene Audit. Before a single embedding is created, we traverse each dataset for PII, apply reversible redaction and generate hash-based lineage tags.

- Prototype Sandbox. An isolated VPC spins up with dummy credentials; failure modes are encouraged here, because they generate the edge-case corpus for later tests.

- Evaluation Harness. Metrics span model-centric (exact-match, latency) and business-centric (tickets resolved, downtime minutes). Crucially, every trace can be replayed deterministically—LangGraph edge IDs plus vector-store snapshot ID produce a perfect checksum trail.

- Compliance-Aware Promotion. Once KPI gates turn green, a Git-ops pipeline deploys the agent alongside Terraform-managed secrets and OPA rule-sets. SOC 2 or HIPAA evidence is generated automatically from pipeline artefacts.

- Continuous Improvement. Telemetry feeds a retraining queue. If RAG grounding distance worsens or cost-per-action drifts beyond a budget envelope, an alert escalates before users feel pain.
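The KPI gate in the promotion stage and the budget alert in the improvement stage both reduce to comparing a candidate’s metrics against agreed limits. The metric names and thresholds below are hypothetical examples of the model-centric and business-centric measures listed above; in this sketch a metric that is not reported blocks promotion instead of passing silently.

```python
# Hypothetical promotion gates mixing model-centric and business-centric metrics.
GATES: dict[str, tuple[str, float]] = {
    "exact_match": (">=", 0.85),
    "p95_latency_s": ("<=", 2.0),
    "tickets_resolved_rate": (">=", 0.35),
    "cost_per_action_usd": ("<=", 0.04),
    "grounding_distance": ("<=", 0.35),
}


def promotion_gate(metrics: dict[str, float]) -> tuple[bool, list[str]]:
    """Return (promote?, names of metrics that failed or were not reported)."""
    failed = []
    for name, (op, limit) in GATES.items():
        value = metrics.get(name)
        passed = value is not None and (value >= limit if op == ">=" else value <= limit)
        if not passed:
            failed.append(name)  # a missing metric blocks promotion rather than passing silently
    return not failed, failed
```

Returning the list of failed metrics, not just a boolean, gives the pipeline something concrete to attach to the alert or the compliance evidence.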

Thanks to this conveyor the lead time from concept to the first production commit shrank from three months in early 2024 to five weeks median in Q2 2025, even as security sign-off time fell by 30 %. Those numbers echo what analysts hinted at when cataloguing market-ready AI agents examples, but only a disciplined pipeline turns hints into predictable outcomes.

4. Cost, Latency and the Physics of Real-Time

A final dimension hides beneath code architecture: physics. The e-commerce support agent described earlier must fetch order status, retrieve multilingual knowledge snippets and generate a reply under two seconds or CSAT plummets. Achieving that SLA means parallelising retrieval and tool invocation, caching frequent actions, and condensing prompt context with adaptive-window summarisation. We employ an event-driven “fan-out/fan-in” pattern where the planner issues asynchronous tool calls through a message bus; responses stream back as soon as they arrive, letting the LLM fill interim reasoning slots—much like speculative decoding in transformer inference.
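The fan-out/fan-in idea can be sketched with `asyncio`: independent lookups run concurrently under a shared deadline, so total latency tracks the slowest call rather than the sum. The two tool coroutines and their delays are hypothetical stand-ins, and an in-process `asyncio.gather` replaces the message bus for brevity; `asyncio.as_completed` would let the planner act on each response as it arrives.

```python
import asyncio
import time


async def fetch_order_status(order_id: str) -> str:
    await asyncio.sleep(0.3)  # simulated OMS round-trip
    return f"{order_id}: shipped"


async def fetch_kb_snippets(query: str, lang: str) -> list[str]:
    await asyncio.sleep(0.4)  # simulated multilingual retrieval
    return [f"[{lang}] delivery FAQ for: {query}"]


async def gather_context(order_id: str, query: str, lang: str, deadline_s: float = 2.0) -> dict:
    """Fan out independent calls, fan in their results, and fail fast if the SLA budget is blown."""
    status, snippets = await asyncio.wait_for(
        asyncio.gather(fetch_order_status(order_id), fetch_kb_snippets(query, lang)),
        timeout=deadline_s,
    )
    return {"status": status, "snippets": snippets}


if __name__ == "__main__":
    start = time.perf_counter()
    context = asyncio.run(gather_context("NU-1001", "where is my order", "fr"))
    # Elapsed time tracks the slowest call (about 0.4 s), not the sum (0.7 s).
    print(context, f"{time.perf_counter() - start:.2f}s")
```

The `wait_for` deadline turns the two-second SLA into code: if the combined lookups overrun, the planner gets a timeout it can handle (for example by answering with partial context) instead of a silently slow reply.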

Cost control follows the same pattern: by pushing retrieval and deterministic business logic into edge Lambdas and asking the LLM to reason only over deltas, we have cut token spend per resolution by 42 % since last December. The lesson is that every dollar saved on inference can fund more robust tool-safety layers—an investment no spreadsheet will regret when failure can shut down a factory line.

Why the Blueprint Matters

The web is overflowing with AI agents examples that impress on first read yet remain stuck in pilot purgatory. A-Bots.com’s engineering blueprint turns the scattered insights behind those case studies into a reproducible, governance-compatible factory: graph-based planning, RAG-grounded memory, zero-trust tool sandboxes and a metrics pipeline that treats business KPIs as first-class citizens.

In the next and final section we will show how that factory plugs into your organisation’s existing cloud, data and security stack—so the next time someone Googles “AI agents examples,” the most compelling entry on the list could be the agent quietly compounding value inside your company.

A-Bots.com Blueprint

Anyone can skim AI agents examples on GitHub and imagine overnight transformation; very few can shepherd an idea from pitch deck to audited production. A-Bots.com exists for that gap. We do not merely deploy code—we cultivate an ecosystem where every service, every token, every feedback loop is tuned to the business metric that matters. The journey begins with an obsession: translate promising examples of AI agents into a disciplined conveyor that repeatedly turns blank panels on a whiteboard into compounding enterprise value.

A first meeting often opens with back-of-the-napkin AI agent examples that excite executives yet leave engineers wary. During our Discovery Sprint we embed with product owners, shadow end-users, and map entire workflows minute by minute. That ethnography yields a “friction ledger” documenting the precise places where cognitive load piles up or decision latency destroys margin. By anchoring conversations in concrete examples of AI agents already solving similar pain—whether DevOps triage at a SaaS unicorn or vibration analytics on an LNG pump—we move dialogue from abstract enthusiasm to qualified feasibility. Two outcomes emerge: a quantified ROI hypothesis and a priority list of tool-calls the future agent must master.

With scope crystallised, the spotlight shifts to data. Headlines about privacy breaches have turned “governance” into a board-level priority, so our Data Hygiene & Compliance Gate front-loads trust. Every artefact (CRM snapshot, PLC log, design specification) passes a reversible redaction pipeline that fingerprints PII, applies token-level masking, and stamps lineage metadata. Finance teams love that the process borrows directly from AI agent examples in regulated banking, while legal departments appreciate that embedded lineage tags satisfy discovery obligations. We prove, line by line, that your crown-jewel corpus can enrich an agent without ever leaking into a public model gradient.
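A minimal sketch of reversible redaction with lineage tags, assuming regex-detectable PII (phone numbers and email addresses only) and an in-memory dict standing in for the secured token vault. The patterns, placeholder format and tag length are illustrative assumptions; production pipelines use far broader PII recognisers.

```python
import hashlib
import re

# Illustrative detectors only. Phones are masked first so email placeholders are never re-scanned.
PII_PATTERNS = {
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
}


def redact(text: str, vault: dict[str, str]) -> tuple[str, str]:
    """Mask PII with fingerprinted placeholders, keep originals in `vault`, return (masked, lineage_tag)."""
    for label, pattern in PII_PATTERNS.items():
        def mask(match: re.Match, label: str = label) -> str:
            value = match.group()
            placeholder = f"<{label}:{hashlib.sha256(value.encode()).hexdigest()[:12]}>"
            vault[placeholder] = value  # reversible: the vault maps placeholder -> original
            return placeholder
        text = pattern.sub(mask, text)
    lineage_tag = hashlib.sha256(text.encode()).hexdigest()[:16]  # stable ID for the masked artefact
    return text, lineage_tag


def restore(masked: str, vault: dict[str, str]) -> str:
    """Re-identify a masked artefact; only callers with vault access can do this."""
    for placeholder, original in vault.items():
        masked = masked.replace(placeholder, original)
    return masked
```

Because placeholders are derived from a hash of the original value, the same customer gets the same placeholder across documents, so embeddings stay useful for retrieval while the raw identifier never reaches the vector store.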

Only when the data surface is scrubbed do we open the Fast-Prototype Sandbox. This is where raw LLM reasoning meets live APIs. The environment mimics production latency, yet no action can alter operational systems; instead, synthetic stubs replay past traffic while telemetry pours into Grafana. Stakeholders watch the agent think, fail, recover, and self-improve in real time—an eye-opening demonstration that turns theoretical AI agents examples into visceral experience. Because the sandbox is gated behind role-segmented credentials, even a successful prompt injection cannot pivot sideways, embodying lessons learned from public AI-agent examples that fell victim to jailbreaks.

When the prototype clears KPI bars—tickets resolved per minute, pumps protected per hour—we shift gears into the MLOps-fused DevSecOps Pipeline. Here traditional infrastructure-as-code merges with model-as-code: Terraform spins up micro-services, while a parallel manifest pins model checkpoints, vector-store snapshots, and evaluation harness versions. Weekly rollouts weave together blue-green deployments for code and shadow-deployments for models; both share a circuit-breaker that rolls back on drift beyond thresholds mined from historical AI agents examples across our client portfolio. At this stage finance teams finally see a forecastable cost curve, and security teams sign off because every action is retraceable to a signed Git commit.
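The shared circuit-breaker can be sketched as a rolling comparison between a live metric and its historical baseline. The window size, tolerance and `rollback` callback are hypothetical; in practice the limits would be mined from past deployments and the callback would flip the blue-green or shadow switch.

```python
from collections import deque
from statistics import mean
from typing import Callable


class DriftBreaker:
    """Trip a rollback once the rolling mean of a live metric drifts too far from its baseline."""

    def __init__(self, baseline: float, tolerance: float, window: int,
                 rollback: Callable[[], None]) -> None:
        self.baseline = baseline
        self.tolerance = tolerance
        self.samples = deque(maxlen=window)  # only the most recent `window` samples are kept
        self.rollback = rollback
        self.tripped = False

    def observe(self, value: float) -> None:
        self.samples.append(value)
        window_full = len(self.samples) == self.samples.maxlen
        if window_full and not self.tripped and abs(mean(self.samples) - self.baseline) > self.tolerance:
            self.tripped = True  # fire once; re-arming is left to an operator in this sketch
            self.rollback()
```

With a 0.90 baseline, a 0.05 tolerance and a three-sample window, readings of 0.91, 0.89 and 0.90 leave the breaker closed, while a single 0.70 drags the rolling mean to 0.83 and triggers exactly one rollback.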

Yet production is merely the halfway mark. The real magic lies in Continuous Improvement Loops that feed outcome telemetry back into the agent’s grounding store. When the system flags a spike—say, longer reasoning chains on French-language tickets—it schedules an overnight fine-tuning job on a masked subset of interactions. The next morning the model wakes up natively fluent in new idioms. This virtuous cycle, inspired by elite examples of AI agents in global e-commerce, means that yesterday’s edge case becomes tomorrow’s default competence, all without a sprint planning meeting.

ROI follows naturally. In customer service deployments we typically see support costs collapse by double-digit percentages within ninety days, echoing public AI agents examples like Nuuly’s 38 % instant-resolution rate. In heavy industry, predictive-maintenance agents recoup their project budget the first time they avert a seven-figure unscheduled shutdown, outcomes that mirror the Aquant-style AI agent examples covered earlier. Even in green-field lead generation, the lift in pipeline mirrors Brickell Digital’s 80 % surge, proving that the blueprint is domain-agnostic yet financially precise.

What does collaboration feel like? Weekly office-hours ensure alignment; shared dashboards expose latency, cost, and success deltas in near real-time; a dedicated Slack channel puts engineers an emoji away. Most importantly, the engagement is scoped to guarantee a go-live inside a single fiscal quarter—a cadence rooted in our observation that AI agents examples become shelf-ware if they linger beyond budget cycles. We treat speed as a governance issue: delays create shadow projects and spreadsheet anarchy; precision delivered fast creates momentum and trust.

At the end of the fourth week after launch we convene a Value Calibration Review. Metrics are compared against the original ROI hypothesis, action plans are drafted for the next optimisation sprint, and executive sponsors receive a narrative report translating precision telemetry into strategic language. That document often becomes the internal case study others cite when searching “AI agents examples” on the intranet—the blueprint replicated in new divisions, new regions, new product lines.

Ready to implement the project

If this narrative has turned the web’s fragmented AI agents examples into a clear, actionable blueprint in your mind, the natural next step is simple: brief A-Bots.com. Bring us the workflow that keeps your team up at night, the backlog your engineers dread, or the downtime penalty your CFO cannot ignore. We will map it to proven examples of AI agents, architect a solution aligned with your risk envelope, and deliver an autonomous system that compounds value from day one. The Cambrian explosion of autonomy will not wait—let’s craft the first entry in your company’s own list of AI agent examples today.

✅ Hashtags

#AIAgentsExamples

#AIAgents

#AutonomousSoftware

#LLM

#MLOps

#ABots

#AI2025

#BusinessROI


Copyright © Alpha Systems LTD All rights reserved.
