Counter-Drone (C-UAV) Visual Tracking & Trajectory Prediction — What’s Already Working and How to Build It (Research Brief)

1.Problem framing: onboard perception for a chaser drone

2.Sensors that actually help (and why)

3.On-board compute & avionics that can carry it

4.Datasets & benchmarks specific to tiny-UAV tracking

5.What works today (algorithms that survive on the edge)

6.A production-grade perception stack (A-Bots.com blueprint)

7.Known pitfalls & how we mitigate them

8.Evaluation metrics & fielding

9.Where A-Bots.com fits


1.Problem Framing: Onboard Perception for a Chaser Drone

A counter-UAV interceptor does not merely “see” an intruder; it must hold the target under difficult conditions and feed a guidance loop with a reliable short-horizon state estimate. We define the onboard perception problem as three tightly coupled tasks running on the chaser drone:

- Detect a small, fast, often low-contrast UAV at long range under ego-motion and clutter;

- Track it through scale changes, partial occlusions, and abrupt maneuvers;

- Predict its near-future motion (0.5–2.0 s horizon) with quantified uncertainty to drive guidance and control.

Recent benchmarks confirm why this is hard: tiny-UAV tracking in complex scenes is far from “solved,” with state-of-the-art single-object trackers achieving only ~36% accuracy on a new thermal Anti-UAV benchmark, and long-standing anti-UAV challenges still stressing detection and tracking in real-world conditions. This makes a rigorous problem setup and sensor-algorithm co-design essential. arXiv, CVF Open Access

1.1 Engagement geometry and coordinate frames

Let $W$ be a world frame, $B$ the interceptor body frame, and $C$ a camera (or gimbal) frame. The intruder’s position and velocity are $p_t, v_t$; the interceptor’s are $p_o, v_o$. Perception works in relative coordinates:

$$r = p_t - p_o, \qquad v = v_t - v_o.$$

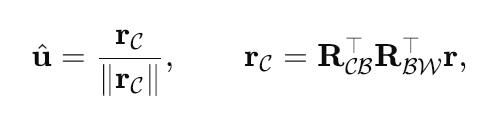

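Cameras provide bearing to the target. Define the direction of the line-of-sight (LOS) in the camera frame as

$$\hat{u}_C = R_{CB}\, R_{BW}\, \frac{r}{\lVert r \rVert},$$

where $R_{BW}$ is the interceptor attitude (taken here as the world-to-body rotation) and $R_{CB}$ accounts for the gimbal/camera orientation. The image measurement $z = [\theta, \phi]^\top$ (azimuth/elevation) is a nonlinear function $z = h(r, \text{attitude}) + \eta$.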
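To make the bearing measurement concrete, here is a minimal sketch of how azimuth and elevation fall out of the relative position. The axis conventions are our assumptions, not something the text above fixes: `R_BW` rotates world to body, `R_CB` rotates body to camera, and the camera frame is x-forward, y-right, z-down. The function name `los_angles` is hypothetical.

```python
import numpy as np

def los_angles(r_world, R_BW, R_CB):
    """Return (azimuth, elevation) in radians of the LOS in the camera frame.

    Assumed conventions (illustrative only): R_BW is world -> body,
    R_CB is body -> camera, camera axes are x-forward, y-right, z-down.
    """
    r_cam = R_CB @ R_BW @ np.asarray(r_world, dtype=float)
    u = r_cam / np.linalg.norm(r_cam)                     # unit LOS vector
    azimuth = np.arctan2(u[1], u[0])                      # positive to the right
    elevation = np.arctan2(-u[2], np.hypot(u[0], u[1]))   # positive upward
    return float(azimuth), float(elevation)

# Interceptor yawed 90 degrees: a target along world +y sits dead ahead.
R_yaw90 = np.array([[0.0, 1.0, 0.0],
                    [-1.0, 0.0, 0.0],
                    [0.0, 0.0, 1.0]])
az, el = los_angles([0.0, 150.0, 0.0], R_yaw90, np.eye(3))
print(f"azimuth={az:.3f} rad, elevation={el:.3f} rad")
```

Note that range drops out at the normalization step, which is exactly why bearings alone leave range unobserved.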

Bearing-only observability. With bearing measurements alone, the 3-D range is not directly observed; accurate range (and thus scale) becomes observable only if the observer or the target performs suitable maneuvers. Classic bearings-only target motion analysis (TMA) results formalize these observability conditions and motivate deliberate interceptor maneuvers (e.g., gentle weaving or helical arcs) while tracking. In practice, we make range observable either by motion (interceptor maneuvers) or by adding a lightweight ranging sensor (e.g., mmWave radar or LiDAR). semanticscholar.org, the University of Bath's research portal

1.2 Sensors in scope (and why they’re combined)

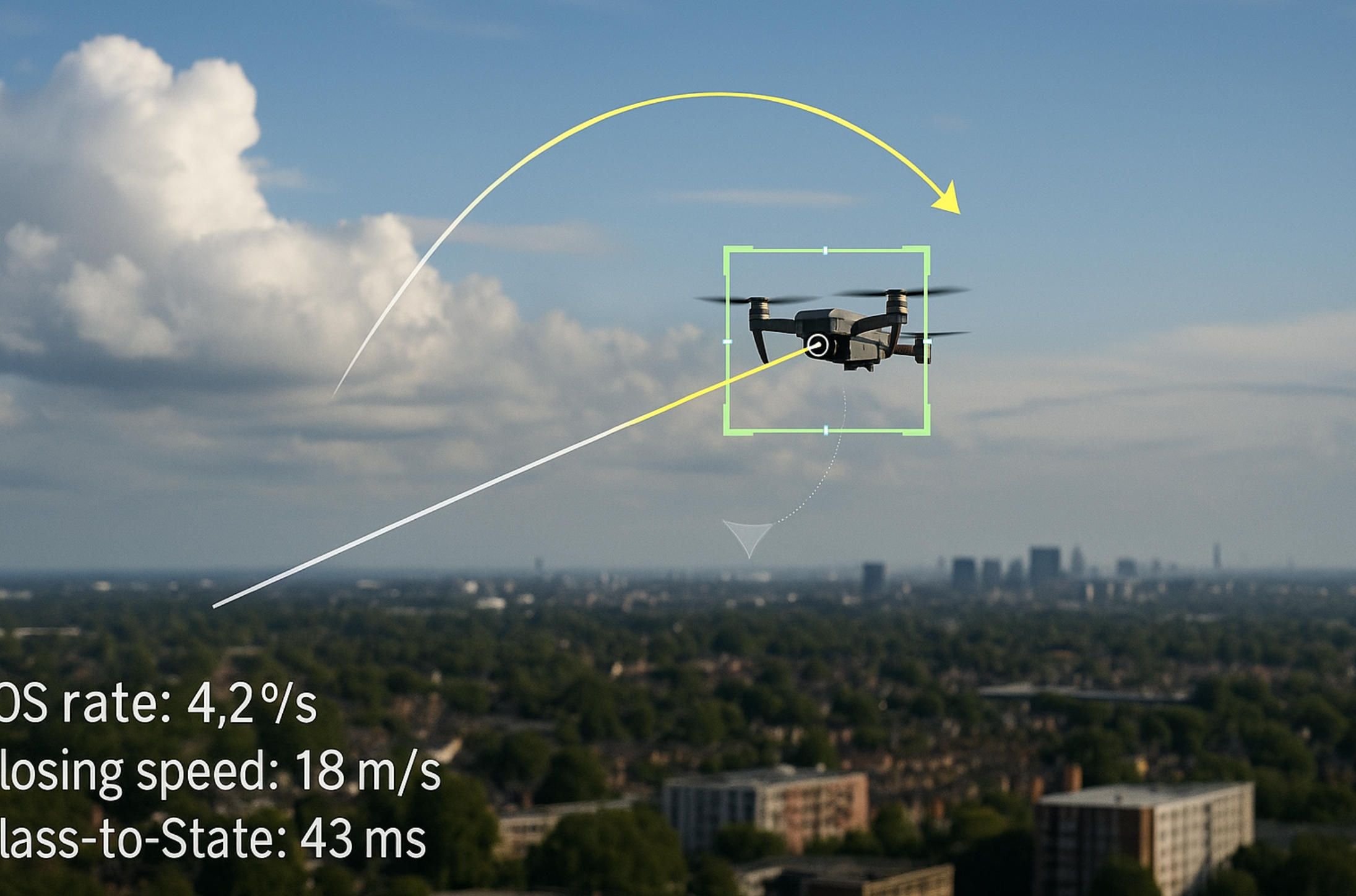

Perception is vision-first, but robust chasers are deliberately multimodal:

- EO (RGB) global-shutter on a stabilized gimbal for daytime appearance cues and long-focal-length coverage; this is where modern transformer-based single-object trackers and tracking-by-detection pipelines excel. ecva.net, arXiv

- Thermal LWIR for dusk/night/back-lighting; new anti-UAV thermal benchmarks specifically target tiny drones and show significant headroom, justifying RGB-T fusion from the outset. arXiv

- Event cameras (DVS) mitigate motion blur and latency during high-G chases and low-illumination scenes; visible-event benchmarks document the complementarity of events and frames. arXiv, GitHub

- Compact mmWave radar (e.g., TI IWR6843) provides metric range and radial velocity (Doppler) independent of lighting and texture, turning a fragile bearing-only problem into a well-conditioned fusion problem. Weight and power budgets fit small UAVs. Texas Instruments, DigiKey

- LiDAR is optional for precise short-range geometry. Acoustic and RF (AoA and signature) cues are useful for cueing and disambiguation but are rarely primary servo inputs on a rotorcraft due to self-noise and evasive RF tactics. Public RF and acoustic datasets nonetheless demonstrate feasibility as secondary modalities. ScienceDirect, ResearchGate, Hugging Face, PMC

We will detail sensor packages and fusion later; here we identify them because the problem framing assumes at least bearings + inertial ego-motion, and benefits materially from an auxiliary range/Doppler measurement.

1.3 Motion and state estimation model

Perception outputs a target relative state $x = [r, v, a]^\top$ (often with a turn-rate parameter for coordinated-turn models). The dynamics are modeled as constant velocity (CV), constant turn rate and velocity (CTRV), or constant turn rate and acceleration (CTRA), depending on target class; an Interacting Multiple Model (IMM) filter switches among them. The measurement model fuses:

- LOS angles from EO/thermal/event cameras;

- Range and radial velocity when available (mmWave/LiDAR);

- Ego-motion from a visual-inertial odometry (VIO) stack such as ORB-SLAM3 or VINS-Mono to stabilize the camera and de-rotate images under flight dynamics.

VIO is mature enough to run on embedded boards and is widely benchmarked on aerial datasets; it is the practical path to low-drift ego-motion and view stabilization on the interceptor. arXiv, rpg.ifi.uzh.ch

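A minimal EKF-style update for bearings plus optional range, written here in the standard innovation form with $\rho = \lVert r \rVert$ for the scalar range, $P$ for the state covariance, $H = \partial h / \partial x$, and $\Sigma_\eta$ for the measurement-noise covariance, is:

$$
z = \begin{bmatrix} \theta \\ \phi \\ \rho \end{bmatrix} = h(x) + \eta, \qquad
K = P H^\top \left(H P H^\top + \Sigma_\eta\right)^{-1}, \qquad
x \leftarrow x + K\,\bigl(z - h(x)\bigr), \qquad
P \leftarrow (I - K H)\,P .
$$

The $\rho$ row of $z$, $h$, and $H$ is dropped when no range measurement is available; a UKF replaces the Jacobian with sigma points but keeps the same structure.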

When range is missing, deliberate maneuvering of the observer restores range observability and limits covariance blow-up; when radar/LiDAR supplies range (and Doppler), the filter rapidly collapses range uncertainty and improves short-horizon prediction. semanticscholar.org
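The effect of an auxiliary range measurement is easy to see numerically. The sketch below is a generic EKF measurement update, not the production filter. It assumes a six-element state (relative position and velocity), the x-forward, y-right, z-down angle convention, a numerical Jacobian for brevity, and illustrative noise values. All function names are hypothetical.

```python
import numpy as np

def h(x, with_range):
    """Measurement model: azimuth, elevation and (optionally) range."""
    px, py, pz = x[:3]
    z = [np.arctan2(py, px), np.arctan2(-pz, np.hypot(px, py))]
    if with_range:
        z.append(np.linalg.norm(x[:3]))
    return np.array(z)

def jacobian(x, with_range, eps=1e-6):
    """Central-difference Jacobian of h (keeps the sketch short)."""
    n_meas = 3 if with_range else 2
    H = np.zeros((n_meas, x.size))
    for i in range(x.size):
        dx = np.zeros(x.size)
        dx[i] = eps
        H[:, i] = (h(x + dx, with_range) - h(x - dx, with_range)) / (2 * eps)
    return H

def ekf_update(x, P, z, noise_cov):
    """One EKF measurement update; a 3-element z means range is present."""
    with_range = len(z) == 3
    H = jacobian(x, with_range)
    innovation = z - h(x, with_range)
    # Wrap the two angular residuals into [-pi, pi).
    innovation[:2] = (innovation[:2] + np.pi) % (2 * np.pi) - np.pi
    S = H @ P @ H.T + noise_cov
    K = P @ H.T @ np.linalg.inv(S)
    return x + K @ innovation, (np.eye(x.size) - K @ H) @ P

# Prior: right bearing, wrong range (truth is 1.5x farther along the same LOS).
x0 = np.array([200.0, 10.0, -20.0, 0.0, 0.0, 0.0])
P0 = np.diag([50.0**2] * 3 + [10.0**2] * 3)
truth = 1.5 * x0
u = x0[:3] / np.linalg.norm(x0[:3])   # radial (LOS) direction

x_b, P_b = ekf_update(x0, P0, h(truth, False), np.diag([1e-6, 1e-6]))
x_r, P_r = ekf_update(x0, P0, h(truth, True), np.diag([1e-6, 1e-6, 1.0]))
print("radial variance, bearings only:", u @ P_b[:3, :3] @ u)
print("radial variance, with range:   ", u @ P_r[:3, :3] @ u)
```

With bearings only, the variance along the LOS stays essentially at its prior value. A single range measurement collapses it, which is the behavior the paragraph above describes.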

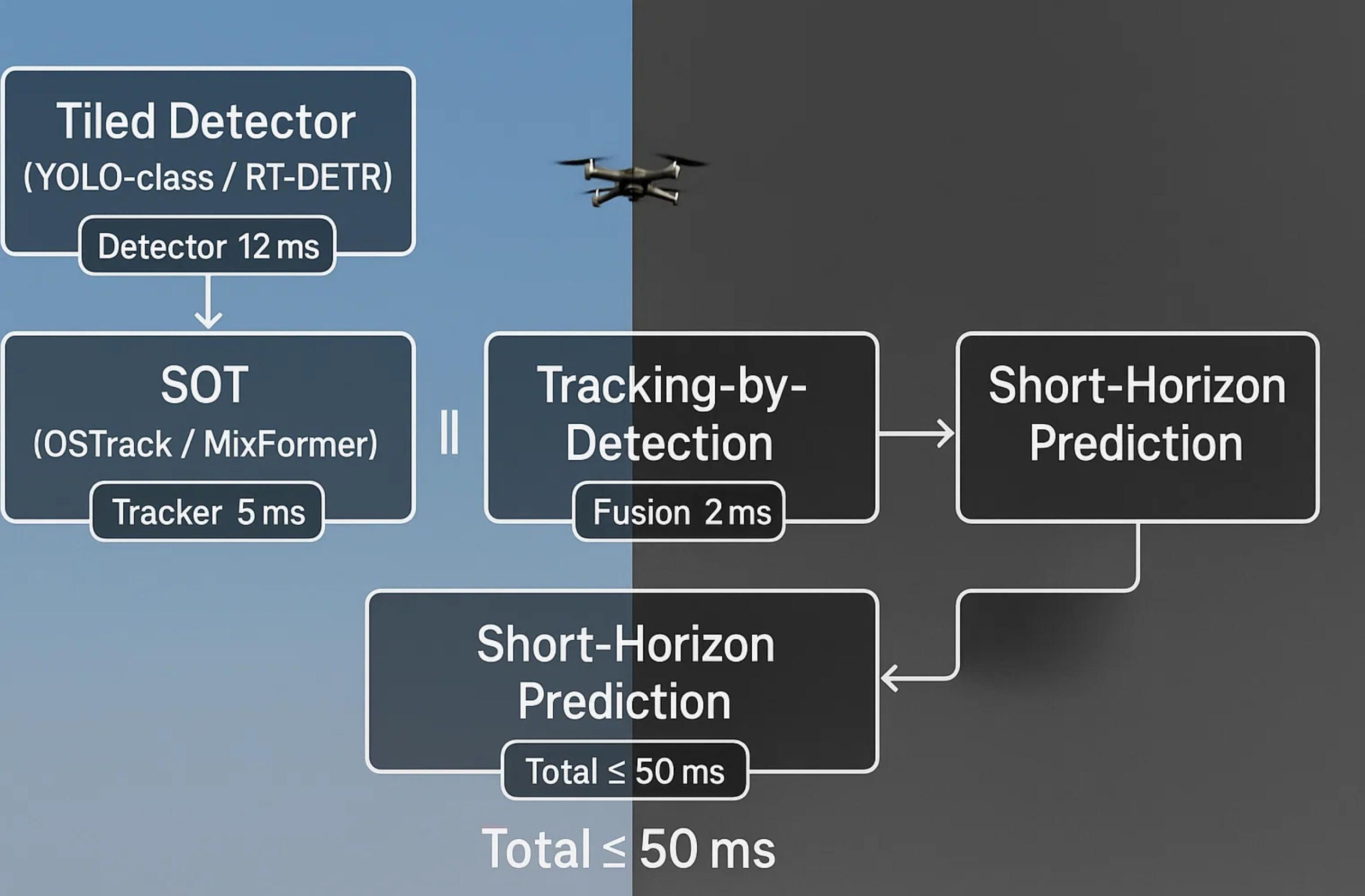

1.4 Detection–tracking–prediction loop (what the guidance actually needs)

Detection initializes and re-acquires the track. For tiny targets, small-object-aware detectors (tiling, FPN/BiFPN, SR pre-nets) supply seeds.

Tracking maintains lock with either (i) template-based SOT (e.g., OSTrack, MixFormer) or (ii) tracking-by-detection (e.g., ByteTrack) for robustness to drift and distractors. Both families are proven on modern benchmarks and can be quantized for embedded deployment. ecva.net, arXiv

Prediction feeds flight control with a short-horizon trajectory and uncertainty. A pragmatic baseline is IMM-CTRV/CTRA with a constant-turn parameter; learning-based motion heads may refine turn-rate or acceleration but should be bounded by the physics model.
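As an illustration of the physics baseline, the following sketch rolls a planar CTRV model forward over the 0.5 to 2.0 s horizon. It is a single-model predictor under stated assumptions (planar motion, constant speed and turn rate, no process noise), not an IMM. The state layout and function names are hypothetical.

```python
import math

def ctrv_predict(x, y, heading, speed, turn_rate, dt):
    """Propagate a planar constant-turn-rate-and-velocity state by dt seconds."""
    if abs(turn_rate) < 1e-6:
        # Straight-line limit avoids dividing by a near-zero turn rate.
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
    else:
        new_heading = heading + turn_rate * dt
        radius = speed / turn_rate
        x += radius * (math.sin(new_heading) - math.sin(heading))
        y += radius * (math.cos(heading) - math.cos(new_heading))
    return x, y, heading + turn_rate * dt, speed, turn_rate

def rollout(state, horizon_s=2.0, step_s=0.5):
    """Return predicted states at each step up to the horizon."""
    steps = int(round(horizon_s / step_s))
    out = []
    for _ in range(steps):
        state = ctrv_predict(*state, step_s)
        out.append(state)
    return out

# Target at the origin, heading along +x at 20 m/s, turning at 0.5 rad/s.
for k, s in enumerate(rollout((0.0, 0.0, 0.0, 20.0, 0.5)), start=1):
    print(f"t={0.5 * k:.1f}s  x={s[0]:.1f} m  y={s[1]:.1f} m")
```

A learned motion head, if used, would adjust `turn_rate` or add an acceleration term, while the rollout itself stays bounded by this kinematic model.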

Interface to guidance. Proportional Navigation (PN) and its variants still dominate uncooperative aerial intercepts: commanded lateral acceleration scales with LOS angular rate and closing speed, $a_n = N V_c \dot{\lambda}$ (planar, with navigation constant $N$, closing speed $V_c$, and LOS rate $\dot{\lambda}$), with widely used 3-D vector forms. Filtering supplies $\dot{\lambda}$ with covariance; guidance consumes it at high rate. secwww.jhuapl.edu, Wiki
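A small worked example shows how the filter output maps to a PN command. The sketch uses one common 3-D vector form of true PN. It assumes `r` and `v` are the relative position and velocity (target minus interceptor) in a common frame, and that `nav_constant=3.0` is merely a typical illustrative value. The function name is hypothetical.

```python
import numpy as np

def pn_acceleration(r, v, nav_constant=3.0):
    """True-PN lateral acceleration command from relative position/velocity.

    r, v: target-minus-interceptor position (m) and velocity (m/s).
    Returns an acceleration vector normal to the LOS (m/s^2).
    """
    r = np.asarray(r, dtype=float)
    v = np.asarray(v, dtype=float)
    rng = np.linalg.norm(r)
    los = r / rng                               # unit LOS vector
    closing_speed = -np.dot(r, v) / rng         # V_c > 0 when closing
    los_rate = np.cross(r, v) / rng**2          # LOS angular-rate vector
    return nav_constant * closing_speed * np.cross(los_rate, los)

# 1 km ahead, closing at 50 m/s, target drifting 5 m/s to the side.
a_cmd = pn_acceleration([1000.0, 0.0, 0.0], [-50.0, 5.0, 0.0])
print(a_cmd)
```

In the planar case this reduces to $N V_c \dot{\lambda}$. Here $\dot{\lambda} = 5/1000 = 0.005$ rad/s, so the command is $3 \times 50 \times 0.005 = 0.75$ m/s² toward the side the LOS is rotating.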

1.5 Latency, throughput, and compute envelope

The perception loop must remain low-latency and thermally sustainable on a small airframe while ingesting multiple sensors. Two practical embedded baselines for this class of workload are:

- NVIDIA Jetson Orin NX running JetPack 6.2/6.2.1 “Super Mode” for higher sustained TOPS and memory bandwidth (INT8 TensorRT, multi-camera I/O).

- Qualcomm QRB5165 / Flight RB5 with heterogeneous CPU/GPU/DSP/NPU and up to seven concurrent cameras through the Spectra ISP, with ROS 2 support.

Both platforms are field-proven for multi-camera AI inference and VIO; choice is typically driven by power, I/O, and software stack preferences. NVIDIA Docs, Qualcomm, Qualcomm Documentation

1.6 Failure modes we must design around

- Tiny, low-contrast targets against sky or clutter: mitigate via tiling, motion-guided ROIs, and thermal fusion; recognize that even SOTA trackers underperform on thermal tiny-UAV datasets, so redundancy and re-acquire logic are non-negotiable. arXiv

- Ego-motion and gimbal dynamics: stabilize with VIO and tightly time-sync IMU↔camera; de-rotate images to keep search windows small. arXiv

- Range ambiguity: add mmWave/LiDAR or enforce observability via maneuver; propagate range uncertainty into guidance to avoid aggressive commands during scale drift. Texas Instruments

- Look-alikes (birds/balloons) & distractors: fuse short-term kinematics (wingbeat signatures, quasi-ballistic vs. wind-driven drift) with appearance cues and RF/acoustic hints where available. Hugging Face, PMC

1.7 What “success” means for the perception module

We evaluate not only classic tracker scores but engagement-relevant metrics:

- Time-to-first-lock and mean lock duration under platform motion;

- Time-to-reacquire after occlusion/contrast loss;

- End-to-end latency (sensor → state to guidance);

- HOTA/IDF1 for tracking-by-detection trials, success/precision for SOT;

- Closed-loop performance: PN accuracy given measured $\dot{\lambda}$, miss distance statistics, and energy usage per minute of lock (for thermal limits). Modern anti-UAV and drone-vision benchmarks are appropriate starting points but should be extended with flight profiles that include back-lighting, gusts, and GPS-degraded turns. CVF Open Access, docs.ultralytics.com

Why this framing matters

This framing forces us to treat detection, tracking, state estimation, and guidance as a single control-quality pipeline with explicit assumptions about sensing and observability. It also anchors practical design choices: RGB-T as the default, mmWave (or LiDAR) when the platform can afford it, VIO-stabilized vision, and a filter that outputs LOS rate and short-horizon predictions suitable for PN or IBVS-style guidance.

A-Bots.com builds onboard C-UAV perception this way because it scales from dev kits to fieldable airframes while staying honest about tiny-target reality shown by current benchmarks.

2.Sensors That Actually Help (and Why)

An interceptor’s perception stack should be vision-first and rigorously multimodal. Each modality earns its place by fixing a specific failure mode—tiny targets at long range, low light, ego-motion, range ambiguity, or distractors. Below we outline what to fly and why it matters to tracking and short-horizon prediction.

2.1 Daylight backbone: EO (RGB) on a stabilized gimbal

For daytime engagements, a global-shutter RGB camera on a 2-axis or 3-axis gimbal is the workhorse. Global shutter avoids geometric distortion during rapid motion that can poison data association; rolling shutter artifacts (skew, “jello”) are well-documented in fast scenes, whereas global shutter reads the entire sensor simultaneously and preserves geometry for tracking and pose estimation. Oxford Instruments, Teledyne Vision Solutions, e-con Systems

Why it helps tracking. High-frequency, low-latency EO video feeds both single-object trackers (e.g., OSTrack/MixFormer-style) and tracking-by-detection loops. Long focal lengths (e.g., 25–75 mm equivalent) maintain pixel footprint on small, distant targets; the gimbal reduces search-window growth by stabilizing the region of interest. If budgets allow, fieldable UAV EO/IR gimbals with integrated LRFs exist in Group-1/2 SWaP, giving you stabilized, zoomable optics designed for target tracking. Unmanned Systems Technology, Defense Advancement

Design notes.

- Prefer global shutter and fast exposure to limit blur.

- Use a narrow FOV for acquisition at range, then widen it (zoom out) as the target closes so the target does not overfill the frame and lose its surrounding context.

- Timestamp camera and IMU tightly; feed VIO to de-rotate frames before tracking.
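The pixel-footprint argument can be checked with simple pinhole arithmetic. The sketch below uses the physical focal length and pixel pitch rather than a 35 mm-equivalent figure. The example numbers (0.3 m target, 50 mm lens, 3.45 µm pixels) are illustrative assumptions, not recommendations from this brief.

```python
def pixels_on_target(target_size_m, range_m, focal_length_mm, pixel_pitch_um):
    """Approximate target extent in pixels under a pinhole model."""
    focal_m = focal_length_mm * 1e-3
    pitch_m = pixel_pitch_um * 1e-6
    return focal_m * target_size_m / (range_m * pitch_m)

def max_range_for_pixels(target_size_m, min_pixels, focal_length_mm, pixel_pitch_um):
    """Range at which the target shrinks to min_pixels across."""
    focal_m = focal_length_mm * 1e-3
    pitch_m = pixel_pitch_um * 1e-6
    return focal_m * target_size_m / (min_pixels * pitch_m)

px = pixels_on_target(0.3, 500.0, 50.0, 3.45)
reach = max_range_for_pixels(0.3, 10.0, 50.0, 3.45)
print(f"{px:.1f} px across at 500 m; 10 px floor reached at {reach:.0f} m")
```

Under these assumptions a 0.3 m drone at 500 m spans under 9 pixels, which is why focal length, tiling, and stabilization all matter before any tracker gets a usable template.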

2.2 Night and back-lighting: Thermal LWIR (RGB-T fusion)

Tiny UAVs are notoriously hard to track at dusk, at night, in haze, and against sunlit clutter. A compact LWIR core (e.g., 640 × 512 @ 60 Hz) complements EO and enables RGB-T fusion. The 2025 CST Anti-UAV thermal SOT benchmark, built specifically for tiny drones in complex scenes, shows state-of-the-art trackers reaching only ~36% on its main score, a sober reminder that thermal tracking is still challenging but essential to cover the hardest conditions. arXiv

Why it helps tracking. Thermal gives high target salience when visual contrast collapses, improving persistence across illumination changes and smoke. Off-the-shelf LWIR cores like FLIR Boson provide 640×512 sensors with multiple lens options and 60 Hz video in SWaP-friendly modules. oem.flir.com

Design notes.

- Calibrate EO↔LWIR for mid-level fusion (shared ROI and motion priors).

- Run thermal at half-resolution if compute is tight; fuse confidences to avoid over-trusting thermal in hot backgrounds.

2.3 Microsecond motion sensing: Event cameras (DVS)

Event cameras report per-pixel brightness changes asynchronously with microsecond-scale latency and >120 dB dynamic range—two properties that matter precisely when a chaser and target perform high-G maneuvers or when glare/back-lighting would saturate a frame camera. Surveys and vendor specs consistently place modern devices (e.g., Prophesee, iniVation) in the sub-millisecond latency, 120 dB+ HDR regime. rpg.ifi.uzh.ch, iniVation

Why it helps tracking. DVS excels at motion cues with little or no blur, stabilizing lock during aggressive turns and enabling very tight control loops; prior robotics work demonstrated millisecond-class perception with event sensors under fast dynamics. Current 2025 work also targets drone detection specifically with events.

Design notes.

- Pair events with standard frames (hybrid sensors or coaxial cameras) to recover texture/appearance when needed.

- Budget a denoising front-end; event noise and annotation scarcity are active research problems.

2.4 Fixing range ambiguity: compact mmWave radar (60–64 GHz)

Vision alone gives bearings; range is poorly observable unless the interceptor or target maneuvers. A palm-sized FMCW mmWave module (e.g., TI IWR6843) provides metric range and radial velocity (Doppler) at low power and weight, with integrated MIMO in a single chip. Specs include operation in the 60–64 GHz band, multiple TX/RX channels, and built-in chirp engines suitable for embedded platforms—exactly what you need to collapse range uncertainty inside an EKF/UKF and de-alias closing speed for PN guidance. Texas Instruments

Why it helps tracking. Feeding even a 10–20 Hz range/Doppler into a bearings-only filter stabilizes the short-horizon prediction that guidance needs, especially at night or over low-texture backgrounds.

Design notes.

- Mount away from high-EMI sources; validate antenna FOV overlap with EO/LWIR to simplify data association.

- Use radar to cue vision when SNR is poor, and to confirm re-acquisition after occlusions.

2.5 3-D geometry at short ranges: LiDAR (solid-state)

Solid-state LiDAR contributes direct range and local shape independent of lighting. A 2024–2025 literature stream specifically reviews LiDAR-based drone detection and tracking, including scanning mechanisms and deep-learning pipelines over point clouds—useful both for close-in state estimation and for bird/balloon disambiguation via 3-D motion. ResearchGate, MDPI

Trade-offs. Onboard LiDAR adds mass and power; point density on a small drone at long range is sparse. It shines for terminal guidance (tens of meters), obstacle avoidance, and as a confidence sensor when EO/LWIR are degraded.

2.6 Acoustic arrays: useful cue, tough onboard

Arrays of MEMS microphones can estimate bearing to a rotor signature; research demonstrates onboard phased arrays with rotor-noise cancellation and beamforming. In practice, self-noise on a multirotor limits range, but late-fusion of an acoustic bearing with EO/LWIR increases robustness in low-altitude, low-wind scenarios. MDPI, PMC

2.7 RF sniffers: opportunistic angle-of-arrival (AoA)

When the intruder is emitting (control/video links), SDR-based AoA with switched-beam or MUSIC-style processing can deliver few-degree bearing accuracy in compact hardware—useful for cueing and for confirming that a visual target is in fact a drone on a live link. Recent 2024–2025 studies report <5° average error for OFDM/CW sources with lightweight arrays; just don’t rely on RF for servo if the opponent goes radio-silent or hops aggressively. MDPI, ScienceDirect, ResearchGate

2.8 What not to over-index on: consumer ToF depth

Active Time-of-Flight depth cameras are great indoors, but sunlight and limited non-ambiguity range make most consumer-class ToF unreliable for long-range outdoor tracking. Multiple studies note background-light sensitivity and limited range (often <10 m) unless specialist illumination and optics are used—constraints mismatched to air-to-air intercepts. MDPI, ResearchGate

2.9 A pragmatic sensor package (baseline vs. upgrades)

Baseline for a chaser UAV

- EO RGB, global shutter, stabilized gimbal, narrow-to-medium FOV

- LWIR thermal 640×512 @ 60 Hz

- IMU + precise timestamps (for VIO and de-rotation)

- Optional mmWave radar for range/Doppler fusion when SWaP allows

This baseline covers daytime/dusk/night and fixes the biggest estimator weakness—range. It also aligns with what current anti-UAV benchmarks show: thermal and small-object tracking remain tough, so redundancy and re-acquisition matter. arXiv

Upgrades for specific theaters

- Event camera for high-G chases and glare (microsecond latency, 120 dB HDR).

- LiDAR for terminal-phase 3-D lock and obstacle awareness.

- Acoustic + RF AoA as low-cost cueing channels for low-altitude urban missions.

Why this mix works

Each sensor closes a specific gap: EO for appearance, LWIR for low light, event for latency and blur, mmWave for depth and closing speed, LiDAR for terminal geometry, acoustic/RF for cueing. In combination, they deliver stable LOS angles + range/Doppler at rates and latencies compatible with an EKF/UKF + PN guidance loop—what an interceptor actually needs to hold a tiny, maneuvering target.

A-Bots.com typically integrates RGB-T + mmWave on Jetson/Qualcomm-class edge compute, adds events/LiDAR when mission rules justify SWaP, and ships a ROS 2 graph with time-synchronized topics for detector → tracker → fusion → predictor → guidance.

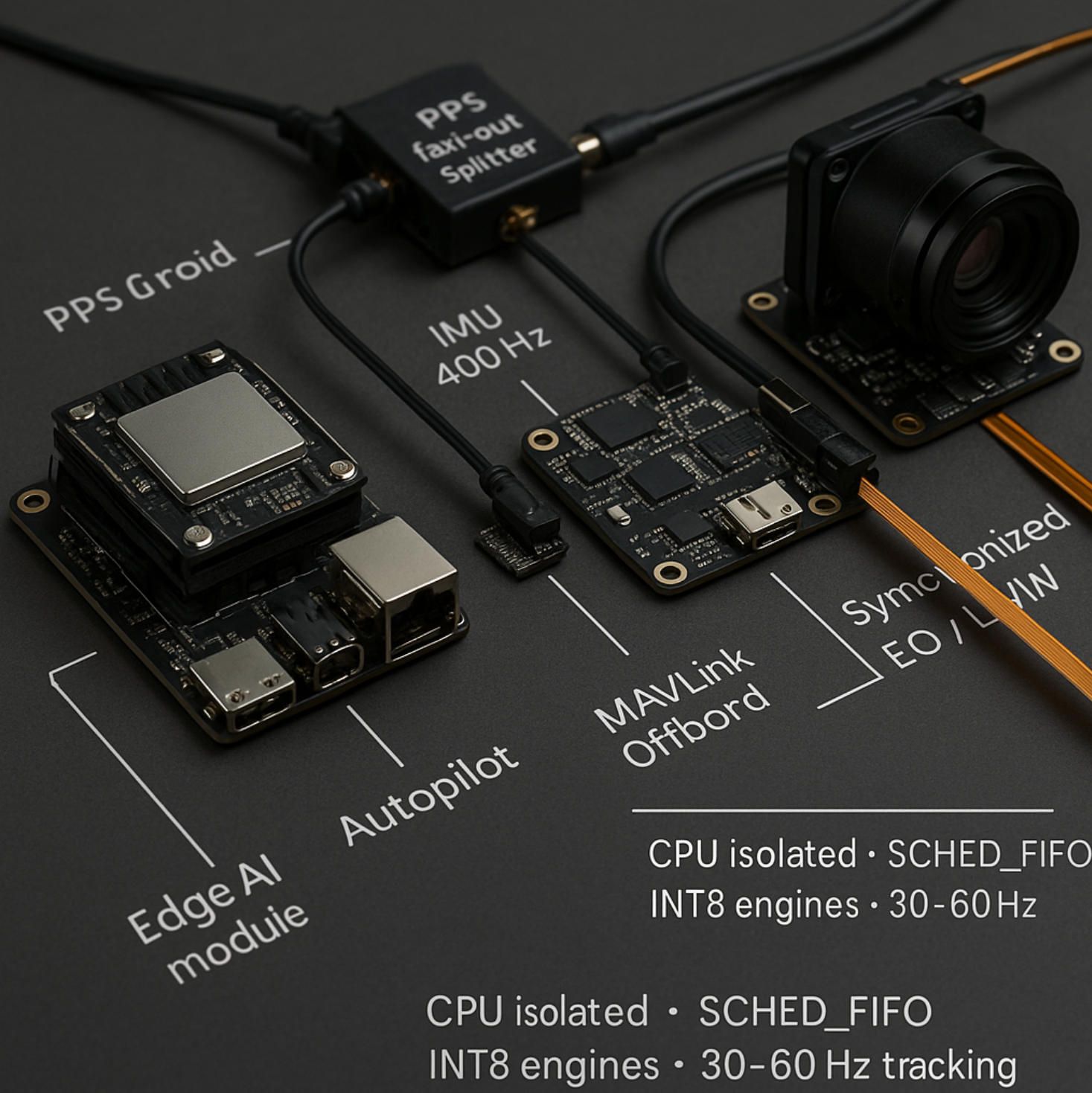

3.On-Board Compute & Avionics That Can Carry It

A chaser drone’s perception loop is ruthless about latency and determinism: multi-sensor ingest → detection → tracking → fusion → short-horizon prediction must close in tens of milliseconds while the airframe is vibrating, throttling, and EMI-noisy. Two proven embedded paths can carry this: NVIDIA Jetson Orin NX/Nano and Qualcomm QRB5165 (Flight RB5)—both fieldable on Group-1/2 UAVs, both with mature vision I/O and toolchains.

3.1 Compute platforms that actually sustain multi-camera perception

Jetson Orin NX/Nano (JetPack 6.2/6.2.1). With JetPack 6.2 “Super Mode,” Orin NX/Nano unlock higher sustained AI throughput (Jetson Linux 36.4.x), yielding up to ~70% TOPS uplift and higher memory bandwidth—headroom that matters when you run detector + tracker + VIO concurrently. Power is software-configurable; Orin NX 16 GB supports 10/15/25 W operation (and vendor boards expose 40 W “MAXN_Super” envelopes for short bursts during thermal validation). CUDA/TensorRT/cuDNN and VPI/OFA cover the acceleration stack.

Qualcomm QRB5165 / Flight RB5. A heterogeneous SoC (CPU/GPU/DSP/NPU) with 15 TOPS AI engine and support for up to 7 cameras on the flight reference design. The RB5 ecosystem ships Linux, ROS 2, and Qualcomm’s Neural Processing SDK, making it a practical low-power alternative where sustained heat flux is the limiter. Qualcomm, linuxgizmos.com, thundercomm.com

Why these two? Each handles multiple MIPI CSI cameras at 30–60 Hz, supports INT8 engines, and has well-trod carrier boards. If your mission is night-heavy and you need RGB-T + mmWave fusion at 40–60 Hz, pick Orin NX; if your envelope is power-starved or you want dense camera fan-out with excellent radios (5G/Wi-Fi 6) on a reference stack, RB5 is compelling. Connect Tech Inc., files.seeedstudio.com, ModalAI, Inc.

3.2 Sensor I/O, time sync, and clocks (what makes fusion trustworthy)

Camera buses. Prefer MIPI CSI over USB for deterministic latency and lower jitter; plan for 6–8 lanes aggregate on the companion (e.g., Orin NX carriers expose 8 lanes, often “up to 4 cameras / 8 via virtual channels”). Thermal cores typically provide parallel/video or CSI bridges; mmWave radar arrives over SPI/UART/CAN-FD.

Time synchronization. Sub-millisecond alignment across EO, LWIR, IMU, and radar is the difference between a stable IMM and a diverging predictor. Use hardware triggers and PPS where possible; on Ethernet sensors, use IEEE-1588 PTP (software PTP on Jetson is supported; some variants lack NIC hardware timestamping). Target < 500 µs skew camera↔IMU; validate with frame-to-IMU residuals and checkerboard flashes. RS Online, NVIDIA Developer Forums, Things Embedded, Teledyne Vision Solutions
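One way to sanity-check camera-to-IMU alignment in logs is to cross-correlate the gyro rate with a rotation rate derived from the images. The sketch below assumes both signals have already been resampled to a common rate, and that an image-derived rate (for example from optical flow) is available. Its resolution is one sample period, so at 1 kHz it only flags gross offsets. Verifying a sub-500 µs target would need sub-sample interpolation or hardware timestamps. The function name is hypothetical.

```python
import numpy as np

def estimate_offset_s(gyro_rate, visual_rate, sample_rate_hz):
    """Estimate how much the visual signal lags the gyro signal, in seconds.

    Both inputs are 1-D arrays sampled at sample_rate_hz. A positive result
    means the camera-derived signal is delayed relative to the IMU.
    """
    g = np.asarray(gyro_rate, dtype=float)
    c = np.asarray(visual_rate, dtype=float)
    g = g - g.mean()
    c = c - c.mean()
    xcorr = np.correlate(c, g, mode="full")
    lag_samples = int(np.argmax(xcorr)) - (len(g) - 1)
    return lag_samples / sample_rate_hz

# Synthetic check: the "visual" signal is the gyro signal delayed by 3 samples.
rng = np.random.default_rng(0)
base = rng.standard_normal(2010)
gyro = base[10:2010]
visual = base[7:2007]
offset = estimate_offset_s(gyro, visual, 1000.0)
print(f"estimated camera lag: {offset * 1e3:.1f} ms")
```

If the estimated lag is non-zero at this resolution, the timestamps are already outside a 500 µs budget and the trigger or driver path needs attention before any filter tuning.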

ROS 2 and QoS. Keep DDS QoS explicit (reliable vs best-effort, history depth) and profile executor jitter with PREEMPT_RT. Academic and industrial evaluations show ROS 2 latency and jitter depend heavily on QoS, message sizes, and CPU isolation—measure end-to-end, don’t guess. SpringerOpen, docs.ros.org

3.3 Real-time budgeting (numbers you can live by)

A pragmatic closed-loop budget for air-to-air tracking on embedded:

- Ingest & preproc (demosaic, undistort, de-rotate via VIO): 4–8 ms with VPI/ISP;

- Detector (tiny-object tuned, INT8 @ 960–1280 px side): 8–16 ms;

- Tracker (SOT or tracking-by-detection association): 3–8 ms;

- Fusion (EKF/UKF + IMM) with mmWave range/Doppler when available: 1–3 ms;

- Publish PN-ready state/LOS-rate to guidance: < 1 ms.

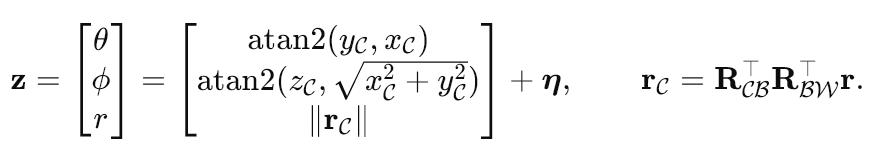

Aim for ≤ 35–50 ms glass-to-state at 30–40 Hz; if you push to 60 Hz tracking, lower the detector cadence: run the tracker every frame and the detector only every N frames. Use HW blocks (NVIDIA VPI/OFA) for optical-flow-assisted stabilization where available. NVIDIA Docs
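Summing the per-stage figures makes the headroom explicit. The sketch below simply adds the ranges listed above, treating "< 1 ms" as 0 to 1 ms, and shows how running the detector every N frames lowers the average compute per frame. It assumes the stages run sequentially and leaves out sensor exposure and readout, which the list does not cover.

```python
# (best, worst) per-stage latency in milliseconds, taken from the budget above.
STAGES_MS = {
    "ingest_preproc": (4, 8),
    "detector": (8, 16),
    "tracker": (3, 8),
    "fusion": (1, 3),
    "publish": (0, 1),
}

best_ms = sum(lo for lo, _ in STAGES_MS.values())
worst_ms = sum(hi for _, hi in STAGES_MS.values())

def mean_worst_case_ms(detector_every_n):
    """Average worst-case compute per frame when the detector runs every N frames."""
    detector_worst = STAGES_MS["detector"][1]
    others_worst = worst_ms - detector_worst
    return others_worst + detector_worst / detector_every_n

print(f"sequential budget: {best_ms}-{worst_ms} ms")
print(f"fits 50 ms target: {worst_ms <= 50}, fits 35 ms target: {worst_ms <= 35}")
print(f"detector every 4th frame: {mean_worst_case_ms(4):.1f} ms average worst case")
```

The worst-case sum lands just above the 35 ms end of the target, so the tighter goal only holds if at least one stage stays below its upper bound or the detector cadence is reduced.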

3.4 Avionics integration: flight stack, links, and control authority

Companion ↔ Autopilot. The canonical pattern is Pixhawk-class autopilot (PX4/ArduPilot) + companion computer over MAVLink (serial/Ethernet). PX4’s Offboard mode or ArduPilot’s guided interfaces let the companion command position/velocity/attitude setpoints at 20–100 Hz using the fused track state; the autopilot keeps the inner-loop stabilization, your code supplies guidance. docs.px4.io, ArduPilot.org

Routing and logging. Autopilots can route MAVLink and stream dataflash logs to the companion; use this to co-log estimator and control telemetry with perception outputs for flight test analysis. ArduPilot.org

micro-ROS/uXRCE-DDS. If you want ROS 2 topics on the flight controller side, PX4 speaks micro-ROS/uXRCE-DDS; keep an eye on scheduling and memory ceilings. docs.px4.io

3.5 Thermal, power, and EMI (the unglamorous issues that kill demos)

Thermals. Orin NX/Nano carriers document MAXN / MAXN_Super envelopes and case-temperature limits; treat vendor power tables as optimistic and leave 15–20% thermal margin for worst-case sun/wind. Heatsink-plus-ducted-air over the module and an isolated radar mount prevent recirculation and coupling. Validate with an external thermocouple during sustained chase-profiles. NVIDIA Developer

Power. Budget brownout events during high-G and radio TX bursts. Orin NX nominal modes are 10/15/25 W (8 GB variant 10/15/20 W); RB5 modules run materially lower for the same computer-vision load if you offload to the NPU/Hexagon DSP. Use separate rails (and LC filters) for cameras and radios to avoid image snow and DDS bursts under TX.

EMI. mmWave (60–64 GHz) sensors and high-rate CSI pairs don’t like unplanned grounds; maintain short returns, star ground to the carrier, and isolate radar antennas from high-speed digital traces. (TI’s IWR6843/6843AOP datasheets list CAN-FD/SPI/UART interfaces and chirp engines—plan your wiring and shielding accordingly.) Texas Instruments

3.6 Software topology: keeping the loop deterministic

- Two-tier scheduling. Run detector/tracker/fusion in a SCHED_FIFO process set with CPU isolation; push logging/telemetry/compression to best-effort threads. Avoid dynamic allocations in the hot path; ROSCon guidance is still right—pre-allocate, avoid blocking primitives and I/O on the RT path. roscon.ros.org

- QoS discipline. Best-effort, keep-last(1) for high-rate images; reliable, depth≥5 for state topics to guidance; bounded message sizes. Map these choices to executor and RMW that you’ve profiled. SpringerOpen

- Optical-flow assists. Use VPI/OFA where available to de-rotate frames and shrink search windows (saving tracker compute and improving lock during high-yaw turns).

3.7 Build & field ops: what makes it maintainable

- Over-the-air (OTA) with rollback and per-node feature flags (detector cadence, fusion gains) so you can tune at the range without SSH gymnastics.

- Golden flight scenarios (back-lighting, gusts, GPS-degraded) scripted as Offboard missions; log glass-to-state latency, time-to-lock, time-to-reacquire. docs.px4.io

- Dataset taps. Record cropped ROIs and fused states for post-hoc hard-negative mining. Keep an eye on ROS 2 serialization overhead at high frame rates (it’s measurable); profile and prune. ResearchGate

What we typically ship (quietly A-Bots.com)

A-Bots.com delivers a ROS 2 graph pinned to your airframe: RGB-T ingest (CSI) with tight timestamps, mmWave radar over SPI/UART, a quantized detector (INT8 TensorRT on Orin or NPU on RB5), OSTrack/ByteTrack lock-on, EKF/IMM fusion (bearing + optional range/Doppler), and a guidance bridge that publishes PN-ready state to PX4/ArduPilot Offboard at 50–100 Hz. We hard-cap glass-to-state, validate PTP or PPS timing, and hand over flight-test scripts plus co-logging to make regression clear and boring.


4.Datasets & Benchmarks Specific to Tiny-UAV Tracking

Tiny-UAV tracking is not a generic “object tracking” problem: the target is small, fast, and often low-contrast, while the camera itself is moving. You need datasets that reflect exactly this regime (RGB/TIR, long standoffs, clutter, occlusions) plus sound evaluation protocols. Here is a practical map of what to train on, how to evaluate, and where the remaining gaps are.

4.1 Anti-UAV (RGB/TIR, multi-task benchmark)

What it is. Anti-UAV is the de facto community benchmark for discovering, detecting, and tracking UAVs “in the wild” across RGB and thermal IR video, with explicit handling of disappearances/occlusions (invisibility flags). The official repo documents the multi-task setup and modalities; the core paper reports 300+ paired videos and >580k boxes. Follow-up work often reports results on the related thermal-only Anti-UAV410 set instead. This is the most relevant general anti-UAV set you can start from. GitHub, ResearchGate

Why it matters. It is the only widely used benchmark that makes RGB↔TIR a first-class citizen for anti-drone perception, so it’s ideal for RGB-T fusion pipelines and for stress-testing re-acquisition after the target disappears behind clutter.

4.2 CST Anti-UAV (2025): Thermal SOT for tiny drones in complex scenes

What it is. A new thermal-infrared SOT dataset targeted squarely at our use case: tiny UAVs in complex scenes. It contains 220 sequences and >240k high-quality annotations with comprehensive frame-level attributes (occlusion types, background clutter, etc.). Critically, authors show that state-of-the-art trackers hit only ~35.92% state accuracy here, far below results on earlier Anti-UAV subsets (e.g., ~67.69% on Anti-UAV410), underlining how hard real tiny-target thermal tracking is. Use it to benchmark night/dusk scenarios and to justify RGB-T fusion in production.

4.3 Drone-vs-Bird detection challenge (WOSDETC/AVSS)

What it is. A long-running challenge/dataset focused on discriminating drones from birds at range—i.e., the most common false-positive in air-to-air surveillance. The series started in 2017 and has been updated across editions; the 2021 installment offered 77 training sequences and tougher test sets, with both static and moving cameras. Use it for hard-negative mining and classifier heads that filter look-alikes.

Why it matters. Even a perfect tracker is useless if your detector keeps confusing gulls with quadcopters. This dataset improves the “is it a drone?” judgment that gates your tracking loop.

4.4 General aerial benchmarks for pretraining and small-object robustness

- VisDrone. Large-scale drone imagery for detection/tracking with >2.6M annotations, 288 videos and 10k+ images—great for teaching detectors and trackers to live with tiny objects, clutter, and ego-motion before you fine-tune on anti-UAV specifics.

- UAVDT (ECCV’18). ~80k frames with annotations for DET/SOT/MOT, curated from ~10 hours of aerial video; still valuable for motion, scale change, and urban clutter pretraining.

- DOTA / ODAI. Not drone-centric, but the oriented box labeling on massive aerial imagery helps detectors cope with arbitrary orientations and tiny targets. Useful as a supplementary pretrain.

4.5 Event-vision: EV-Flying (2025)

If you plan to add a DVS/event sensor, EV-Flying contributes an event-based dataset with annotated birds, insects, and drones (boxes + identities). It’s tailor-made to study microsecond-latency tracking under high-speed motion and extreme HDR—exactly where events shine.

4.6 Evaluation protocols that actually tell you something

Single-Object Tracking (SOT).

Use OTB/LaSOT-style precision & success (center-error, IoU AUC) to remain comparable with mainstream trackers. For short-term tracking with built-in resets, include VOT metrics, especially EAO (Expected Average Overlap) as the primary score. These are standard and well-explained in LaSOT and VOT papers. CVF Open Access
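For reference, the two OTB-style scores are simple to compute from per-frame boxes. This is a minimal sketch, not a replacement for the official toolkits. It assumes boxes as `(x, y, w, h)`, 21 evenly spaced IoU thresholds, and the conventional 20-pixel center-error threshold, which is generous for tiny targets and worth tightening in your own reports.

```python
import math

def iou(a, b):
    """Intersection-over-union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0

def success_auc(pred, gt, n_thresholds=21):
    """Area under the success curve: mean fraction of frames with IoU > t."""
    ious = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (n_thresholds - 1) for i in range(n_thresholds)]
    rates = [sum(v > t for v in ious) / len(ious) for t in thresholds]
    return sum(rates) / len(rates)

def precision_at(pred, gt, max_center_error_px=20.0):
    """Fraction of frames whose center error is within the pixel threshold."""
    hits = 0
    for (px, py, pw, ph), (gx, gy, gw, gh) in zip(pred, gt):
        err = math.hypot((px + pw / 2) - (gx + gw / 2), (py + ph / 2) - (gy + gh / 2))
        hits += err <= max_center_error_px
    return hits / len(gt)

gt_boxes = [(100, 100, 12, 10), (104, 101, 12, 10), (109, 103, 12, 10)]
pred_boxes = [(101, 100, 12, 10), (110, 101, 12, 10), (140, 103, 12, 10)]
print("success AUC:", round(success_auc(pred_boxes, gt_boxes), 3))
print("precision@20px:", round(precision_at(pred_boxes, gt_boxes), 3))
```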

Tracking-by-Detection (MOT).

Report MOTA, IDF1 (identity consistency), and HOTA (balanced detection/association/localization). HOTA is the current best single number to compare trackers; use the TrackEval reference implementation. For tiny-UAVs, IDF1 often reveals identity fragility during long-range re-acquires.

Engagement-level KPIs.

Augment the above with time-to-first-lock, mean lock duration, time-to-reacquire, and glass-to-state latency—these reflect what guidance actually “feels.” (Benchmarks won’t give you these out-of-the-box; log them in flight tests.)
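Because these KPIs come from your own flight logs, a small helper is usually enough. The sketch below assumes a log of `(timestamp_s, locked)` samples sorted by time, where `locked` is whatever lock flag your tracker publishes. The function name and the dictionary keys are hypothetical.

```python
def engagement_kpis(samples):
    """Compute lock KPIs from a time-sorted list of (t_seconds, locked) samples."""
    t0 = samples[0][0]
    first_lock = None
    lock_durations, reacquire_times = [], []
    segment_start = None   # start time of the current lock segment
    lost_at = None         # time at which lock was most recently lost
    for t, locked in samples:
        if locked and segment_start is None:
            segment_start = t
            if first_lock is None:
                first_lock = t - t0
            if lost_at is not None:
                reacquire_times.append(t - lost_at)
                lost_at = None
        elif not locked and segment_start is not None:
            lock_durations.append(t - segment_start)
            segment_start, lost_at = None, t
    if segment_start is not None:
        # Close a lock segment that is still open at the end of the log.
        lock_durations.append(samples[-1][0] - segment_start)

    def mean(values):
        return sum(values) / len(values) if values else None

    return {
        "time_to_first_lock_s": first_lock,
        "mean_lock_duration_s": mean(lock_durations),
        "mean_time_to_reacquire_s": mean(reacquire_times),
    }

log = [(0.0, False), (0.5, False), (1.0, True), (1.5, True), (2.0, False),
       (2.5, False), (3.0, True), (3.5, True), (4.0, True)]
print(engagement_kpis(log))
```

Glass-to-state latency needs sensor and state timestamps rather than a lock flag, so it is best logged separately per frame.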

4.7 A training recipe that works in practice

(1) Pretrain for small objects, then specialize.

Warm up detectors/trackers on VisDrone and UAVDT (mosaic/tiling, small-object anchors), then fine-tune on Anti-UAV and CST Anti-UAV (RGB-T/TIR). This two-stage approach consistently improves tiny-target recall before the difficult thermal fine-tune.

(2) Mine hard negatives.

Fold Drone-vs-Bird clips into training/validation as a separate “non-drone” class or via focal/contrastive losses to reduce avian false alarms without killing recall.

(3) Generate the examples you’ll never catch on camera.

Use Microsoft AirSim (Unreal-based) to synthesize long-range air-to-air passes, back-lighting, haze, and high-yaw maneuvers; render both RGB and pseudo-TIR domains for curriculum learning. AirSim is widely used to mass-generate drone perception data and integrates well with PX4/ROS.

(4) Augment for the real failure modes.

Apply tiny-object crops/oversampling, motion-blur synthesis, glare/haze, TIR noise patterns, and tiling at high resolutions so long-focal-length shots don’t collapse to single-digit pixels.

(5) Evaluate like you’ll fly.

Besides SOT/MOT metrics, run night/dusk subsets from CST Anti-UAV, mixed-lighting sequences from Anti-UAV, and a bird-rich validation from Drone-vs-Bird. Track re-acquire statistics after forced dropouts; that number predicts field performance better than a few points of AUC.

4.8 Baselines & references you can trust

- Anti-UAV official (tasks, modalities, occlusion policy).

- CST Anti-UAV (2025) (tiny-UAV thermal SOT; difficulty numbers).

- VisDrone / UAVDT (scale, tasks, ego-motion). docs.ultralytics.com

- Drone-vs-Bird (hard negatives, editions, sequence counts).

- HOTA / TrackEval for MOT evaluation; LaSOT / VOT for SOT metrics.

- AirSim for synthetic RGB/TIR generation. GitHub

How A-Bots.com uses this in real projects

We typically pretrain on VisDrone/UAVDT, fine-tune on Anti-UAV + CST Anti-UAV for RGB-T/TIR, inject Drone-vs-Bird for negative pressure, and fill gaps with AirSim. Evaluation blends OTB/LaSOT/VOT tracker scores with HOTA/IDF1 and flight-grade KPIs (time-to-lock, reacquire). This mix produces perception stacks that stay honest under tiny-target reality and transfer cleanly to Jetson Orin NX or QRB5165 payloads you can field.

5.What Works Today (Algorithms That Survive on the Edge)

The winning recipe on a chaser UAV is a detector-plus-tracker loop stabilized by VIO + filtering, with RGB↔Thermal fusion (and mmWave range/Doppler when SWaP allows). Below is the playbook we deploy in the field—and why each block earns its watts.

5.1 Detection: seed the lock, re-seed after loss

Small-object-aware one-stage detectors are the most practical seeds today:

- YOLOv10 (NMS-free training via dual assignments + efficiency-driven design) gives strong accuracy/latency on edge hardware; use S/M variants for 20–40 Hz at 960–1280 px with INT8.

- RT-DETR / RT-DETRv3 removes NMS at inference with a real-time transformer; supports speed/accuracy tuning by changing decoder layers, which is ideal for power-aware flight modes. GitHub

- YOLOv9 (GELAN + PGI) remains a solid alternative if your stack already standardizes on the YOLO family. ecva.net

Make them see tiny drones. Train with tiling/mosaic and SR-assisted heads; recent surveys and studies show super-resolution and multi-scale features materially lift small-object recall without blowing up latency when embedded smartly.

RGB-T readiness. For dusk/night, plan a dual-branch detector (shared neck, modality-specific stems) so thermal can carry acquisitions when RGB collapses; CST Anti-UAV numbers justify building for thermal from day one.

5.2 Tracking: hold the lock under ego-motion, clutter and scale jumps

Two families work well; we often ship both and arbitrage them by confidence.

(A) Template-based SOT (transformer trackers).

OSTrack, STARK, MixFormer/MixFormerV2 are the current “stickiest” SOT options thanks to global attention and lean heads. They run fast after INT8 and let you keep per-target templates for instant re-centering after scale change. Use them when the detector is sparse or when the target is isolated.

(B) Tracking-by-Detection (Td).

ByteTrack-style association (keep almost every detection, even low-score ones) is robust to tiny targets and momentary detector weakness; it consistently lifts IDF1/HOTA on long, messy sequences. Anti-UAV workshop papers also report “strong detector + simple tracker” baselines doing surprisingly well, which is exactly what Td embodies.
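
The two-pass idea behind this kind of association can be sketched in a few lines. This is a simplified illustration, not the ByteTrack reference implementation: it uses greedy IoU matching where the original uses Hungarian assignment on Kalman-predicted boxes, and the names `associate`, `greedy_match`, and all thresholds are assumptions chosen for the example.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # x0, y0, x1, y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(tracks: Dict[int, Box], det_idx: List[int],
                 boxes: List[Box], min_iou: float) -> Dict[int, int]:
    """Pair track ids with detection indices, best IoU first."""
    pairs = sorted(((iou(tb, boxes[d]), tid, d)
                    for tid, tb in tracks.items() for d in det_idx), reverse=True)
    matches: Dict[int, int] = {}
    used = set()
    for score, tid, d in pairs:
        if score < min_iou:
            break
        if tid not in matches and d not in used:
            matches[tid] = d
            used.add(d)
    return matches


def associate(tracks: Dict[int, Box], boxes: List[Box], scores: List[float],
              high: float = 0.5, low: float = 0.1, min_iou: float = 0.3):
    """Pass 1 uses confident detections; pass 2 rescues tracks with weak ones."""
    high_idx = [i for i, s in enumerate(scores) if s >= high]
    low_idx = [i for i, s in enumerate(scores) if low <= s < high]
    first = greedy_match(tracks, high_idx, boxes, min_iou)
    leftover = {t: b for t, b in tracks.items() if t not in first}
    second = greedy_match(leftover, low_idx, boxes, min_iou)
    # Only unmatched high-score detections are allowed to start new tracks.
    new_track_candidates = [i for i in high_idx if i not in first.values()]
    return {**first, **second}, new_track_candidates
```

The design point is the second pass: a tiny drone whose detection score sags for a few frames still keeps its track, while weak detections with no track nearby are dropped rather than spawning false tracks.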

Long-term behavior. Add a global re-detector (periodic full-frame scan or when confidence dips) and an explicit disappearance flag; Anti-UAV-style tracks appear/disappear often, and long-term methods with re-detection blocks outperform plain short-term SOT in these regimes.

Thermal tiny-target reality check. On the 2025 CST Anti-UAV thermal SOT benchmark, 20 SOT methods hover near mid-30% on the main score—so architect for re-acquire rather than wish for drift-free tracking at night.

5.3 Event-assisted tracking for high-G and glare

Event sensors give microsecond latency and >120 dB HDR; recent datasets like EV-Flying bring birds/insects/drones with identities to train on. We fuse events as a motion prior (event flow → ROI) to stabilize SOT/Td during high-yaw turns or back-lighting; vendors document <100 µs latency on HD sensors, which is exactly what guidance wants. PROPHESEE, AMD

5.4 State estimation: from pixels to a 3-D track the controller trusts

Filter core. Use an EKF/UKF with IMM over CV/CTRV/CTRA; IMM remains the standard for maneuvering targets and is easy to tune for drones (turn-rate/accel bounds). Bearings come from EO/TIR; mmWave/LiDAR, when present, supplies range and radial velocity to collapse depth uncertainty. jhuapl.edu

Bearings-only observability. If you fly vision-only, plan small observer maneuvers (weave/loiter) to make range observable; the classical TMA literature and recent UAV-vision analyses are blunt about this constraint.

Radar–vision fusion. For night/rain or low-texture sky, fuse mmWave with EO/TIR both before tracking (fuse-before-track) and inside the tracker; surveys and recent EKF pipelines report large gains in adverse conditions.

5.5 Short-horizon trajectory prediction (0.5–2.0 s)

Keep it physics-first and bounded:

- IMM-CTRV/CTRA gives a strong baseline with quantified uncertainty for LOS-rate and closing speed.

- A tiny GRU/Transformer motion head can refine turn-rate or accel, but must be constrained by the filter state to avoid hallucinations in sparse-range regimes.

- Interface to guidance: Proportional Navigation (PN) and its 3-D variants (TPN/MTPN/Biased PN) still dominate for uncooperative intercepts; all they need is a clean LOS rate $\dot{\lambda}$ and closing velocity $V_c$. AIP Publishing, Online Library, arc.aiaa.org
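
To make the physics-first baseline concrete, here is a minimal planar CTRV (constant turn rate and velocity) propagation sketch. It covers only the mean of one motion model; an IMM filter as described above would also propagate covariance and mix several models. The state layout and the function names `ctrv_step` and `rollout` are assumptions for illustration.

```python
import math
from typing import List, Tuple

# x [m], y [m], speed [m/s], heading [rad], turn rate [rad/s]
State = Tuple[float, float, float, float, float]


def ctrv_step(state: State, dt: float, eps: float = 1e-6) -> State:
    """Advance a planar CTRV state by dt seconds."""
    x, y, v, psi, omega = state
    if abs(omega) < eps:
        # Near-zero turn rate: fall back to straight-line motion.
        x += v * math.cos(psi) * dt
        y += v * math.sin(psi) * dt
    else:
        # Closed-form integration along a circular arc.
        x += v / omega * (math.sin(psi + omega * dt) - math.sin(psi))
        y += v / omega * (math.cos(psi) - math.cos(psi + omega * dt))
    return (x, y, v, psi + omega * dt, omega)


def rollout(state: State, horizon_s: float = 2.0, dt: float = 0.1) -> List[State]:
    """Predict the state at every dt up to the horizon."""
    steps = round(horizon_s / dt)
    trajectory = []
    for _ in range(steps):
        state = ctrv_step(state, dt)
        trajectory.append(state)
    return trajectory


if __name__ == "__main__":
    # Target at 15 m/s in a 0.4 rad/s turn, predicted over the 2.0 s horizon.
    for s in rollout((0.0, 0.0, 15.0, 0.0, 0.4))[::5]:
        print(tuple(round(value, 2) for value in s))
```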

5.6 Make it run on the edge (and stay stable)

- Quantize & distill. INT8 TensorRT / NPU compilation plus tracker compression (e.g., recent transformer-tracker compression frameworks) retain accuracy while slashing memory/bandwidth—the main thermal enemy.

- Lower-res tricks. Dedicated work shows OSTrack-derived trackers remain competitive at lower input sizes with careful training; combine with gimbal-steered ROI to keep pixels on target.

- Cadence splitting. Run tracker every frame, detector every N frames (or on confidence dips). This preserves 40–60 Hz control without paying detector cost each frame—crucial on Orin/QRB-class boards.

- RGB-T scheduling. Half-res thermal + shared neck gives big wins in watts/°C without losing reacquire capability at night (CST evidence says you’ll need it).
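
Cadence splitting reduces to a small per-frame decision. The sketch below assumes a scalar tracker confidence in [0, 1]; the names `should_run_detector` and `detector_frames`, the every-4th-frame default, and the 0.5 confidence floor are illustrative assumptions.

```python
from typing import Iterable, List


def should_run_detector(frame_idx: int, tracker_conf: float,
                        every_n: int = 4, conf_floor: float = 0.5) -> bool:
    """Run the detector on a fixed cadence or whenever the tracker looks unsure."""
    return frame_idx % every_n == 0 or tracker_conf < conf_floor


def detector_frames(confs: Iterable[float], every_n: int = 4,
                    conf_floor: float = 0.5) -> List[int]:
    """Indices of the frames on which the detector would fire."""
    return [i for i, conf in enumerate(confs)
            if should_run_detector(i, conf, every_n, conf_floor)]


if __name__ == "__main__":
    # Tracker runs on all eight frames; confidence dips on frame 2.
    print(detector_frames([0.9, 0.9, 0.3, 0.9, 0.9, 0.9, 0.9, 0.9]))
```

With a 40 Hz camera and `every_n=4`, the detector settles at 10 Hz in steady state and only spends extra inference when the lock is in doubt.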

5.7 A-Bots.com reference loop (field-tested)

- RGB-T detector (YOLOv10 / RT-DETR-S) with tiling + tiny-object curriculum.

- Dual tracker path: OSTrack/MixFormerV2 for sticky lock, ByteTrack for rapid re-acquire and distractor-heavy scenes. papers.neurips.cc

- Fusion: IMM-UKF on LOS angles + optional mmWave range/Doppler; bearings-only fallback uses observer maneuvers to restore observability.

- Prediction: short-horizon IMM state → PN/GPN bridge for PX4/ArduPilot Offboard.

- Recovery: global re-detector + disappearance flags; Anti-UAV-style “pop-in/pop-out” is normal, not an exception. CVF Open Access

Why this stack “survives”

- It treats acquisition, lock, and re-acquisition as first-class, not afterthoughts.

- It admits tiny-target reality (thermal SOT is still hard) and leans on fusion rather than wishing away physics.

- It maps cleanly to edge constraints with quantization, cadence splitting, and ROI steering, keeping glass-to-state well under control.

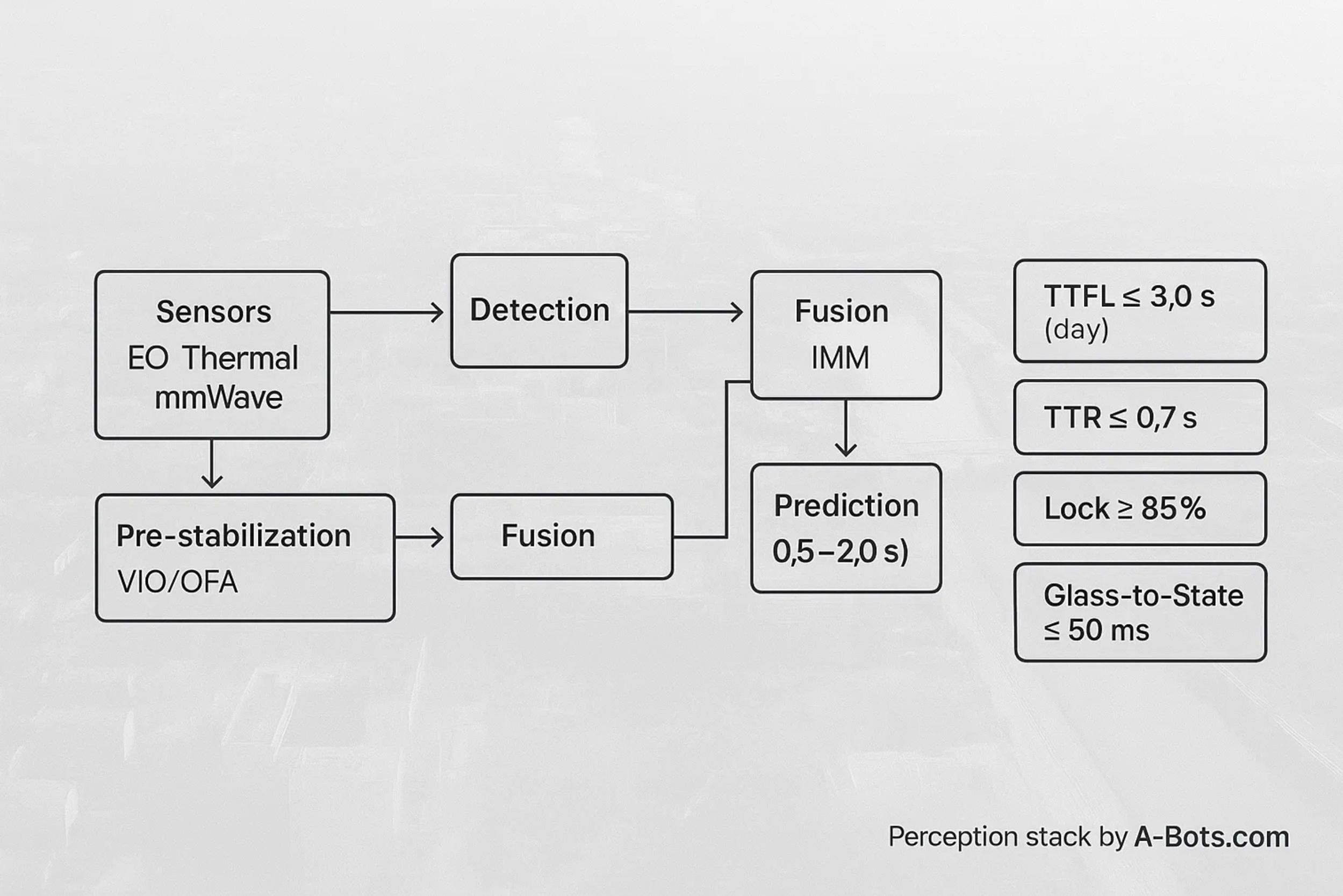

6.A Production-Grade Perception Stack (A-Bots.com Blueprint)

This is the reference stack we ship on Group-1/2 interceptor UAVs: sensor set, compute, ROS 2 graph, fusion, prediction, guidance interface, and the operational glue (timing, health, OTA). It is designed to keep glass-to-state ≤ 50 ms at 30–40 Hz in day/night conditions and to fail gracefully when any single modality degrades.

6.1 Mission goals & SLAs the stack is built to meet

- Time-to-First-Lock (TTFL): ≤ 3.0 s from first visual contact at ≥ 300 m slant range (EO daylight), ≤ 6.0 s at dusk (RGB-T).

- Lock % (fraction of the chase with a valid track): ≥ 85% of a 60-s chase with ego-maneuvers (±25°/s yaw, ±3 g bursts) in mixed backgrounds.

- Time-to-Reacquire (TTR): ≤ 0.7 s after a 1-s occlusion at 200–350 m.

- Glass-to-State Latency: ≤ 50 ms (nominal), ≤ 70 ms (worst-case thermal night).

- Prediction Horizon: stable, uncertainty-bounded 0.5–2.0 s state for guidance.

These KPIs drive every design choice below.

6.2 Sensors & mounting (baseline + options)

Baseline payload (vision-first, night-ready):

- EO RGB global-shutter camera on 2-axis gimbal; lens providing 25–75 mm eq. zoom. Hardware trigger available.

- LWIR thermal core 640×512 @ 60 Hz, coaxial or tightly boresighted with EO; calibrated for RGB-T fusion.

- IMU with sync line (PPS/trigger), temperature-compensated.

- Optional mmWave (60–64 GHz) FMCW module for range + radial velocity (Doppler) at 10–20 Hz.

Upgrades (theater-dependent):

- Event camera (DVS) coaxial with EO to survive high-G turns and glare.

- Solid-state LiDAR for terminal-phase geometry and obstacle awareness.

- Acoustic array (beamformed) and SDR AoA as cueing channels in low-altitude urban ops.

Mount EO/LWIR as close to the gimbal’s rotation center as possible; thermally isolate radar/LiDAR electronics; define and document lever arms for each sensor vs. IMU.

6.3 Compute, OS, and timing

- Edge compute: Jetson Orin NX (16 GB) or Qualcomm QRB5165. Both sustain multi-camera ingest and INT8 inference.

- OS: Ubuntu LTS with PREEMPT_RT where possible; CPU isolation for hot path; fixed governor (“performance”).

- Clocks & sync: PPS from GNSS (or autopilot) fan-out to EO trigger, IMU, and companion; PTP for Ethernet devices. Target < 500 µs skew camera↔IMU; verify by strobing a sync LED and cross-correlating timestamps.

6.4 ROS 2 graph (topics, QoS, cadences)

A minimal, fieldable topology:

    # Perception graph (ROS 2 / CycloneDDS)
    sensors:
      - /eo/image_raw            # best_effort, keep_last(1), 30–60 Hz
      - /tir/image_raw           # best_effort, keep_last(1), 30–60 Hz
      - /imu/data_raw            # reliable, keep_last(50), 200–400 Hz
      - /radar/range_doppler     # reliable, keep_last(10), 10–20 Hz (if present)
    preprocess:
      - /eo/stabilized           # de-rotated via VIO/OFA, rectified
      - /tir/stabilized
    detection:
      - /det/roi                 # reliable, keep_last(5), 10–20 Hz (full-frame or tiled)
      - /det/full                # low-rate global scan for re-detect
    tracking:
      - /track/sot               # best_effort, keep_last(1), 40–60 Hz
      - /track/td                # reliable, keep_last(5), 20–30 Hz (detections + association)
      - /track/fused_state       # reliable, keep_last(10), 40–60 Hz (EKF/UKF + IMM)
    prediction:
      - /track/predicted_state   # reliable, keep_last(10), 40–60 Hz (0.5–2.0 s horizon + covariance)
    guidance_bridge:
      - /guidance/los_rate       # reliable, keep_last(5), 50–100 Hz (for PN/GPN)
      - /guidance/health         # reliable, keep_last(10), 5 Hz (confidence, temperature, watchdogs)

QoS discipline: images best-effort; states reliable with small bounded history. Hot path nodes are pinned to isolated CPUs under SCHED_FIFO.

6.5 Perception pipeline (detector → tracker → fusion → predictor)

(1) Stabilization & registration.

VIO (e.g., ORB-class, tightly fused with IMU) produces ego-pose; frames are de-rotated to stabilize ROIs. If available, use hardware optical-flow engines to pre-warp EO frames and shrink the template search window.

(2) Detection (seed + re-seed).

A small-object-tuned one-stage detector (e.g., YOLOv10-S / RT-DETR-S INT8) runs at 10–20 Hz on tiled crops at 960–1280 px. Dual stems allow RGB-T operation; thermal can take over at dusk. A full-frame global re-detector runs every N frames or when tracker confidence dips.

(3) Tracking (hold lock).

Two concurrent paths:

- SOT (transformer-tracker) for “sticky” lock with template refresh on scale change.

- Tracking-by-Detection (ByteTrack-style) for distractor-heavy or low-contrast scenes; we keep low-score detections to avoid losing tiny targets.

The arbiter publishes the best hypothesis based on confidence, temporal consistency, and motion gating.

(4) Fusion (EKF/UKF + IMM).

State $\mathbf{x} = [\mathbf{r}, \mathbf{v}, \dot{\psi}]$ in the interceptor body or world frame; measurements are LOS angles (EO/LWIR/DVS) and optional range & radial velocity (mmWave or LiDAR). IMM switches CV/CTRV/CTRA; NIS/NEES tests gate outliers. Bearings-only mode commands gentle observer maneuvers (weave/loiter) to recover range observability.
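
As an illustration of the NIS gate on a two-angle LOS measurement, the sketch below computes the normalized innovation squared and compares it with the 95% chi-square bound for two degrees of freedom (about 5.991). The helper names `nis_2d` and `passes_gate` are assumptions, and a real filter would take the innovation and its covariance from the EKF/UKF update step.

```python
from typing import Sequence, Tuple

CHI2_95_2DOF = 5.991  # 95% chi-square quantile for 2 degrees of freedom


def nis_2d(innovation: Tuple[float, float],
           S: Sequence[Sequence[float]]) -> float:
    """Normalized innovation squared nu^T S^-1 nu for a 2-D measurement."""
    (a, b), (c, d) = S
    det = a * d - b * c
    if det <= 0.0:
        raise ValueError("innovation covariance must be positive definite")
    n0, n1 = innovation
    # Explicit 2x2 inverse: S^-1 = [[d, -b], [-c, a]] / det
    return (d * n0 * n0 - (b + c) * n0 * n1 + a * n1 * n1) / det


def passes_gate(innovation: Tuple[float, float],
                S: Sequence[Sequence[float]],
                threshold: float = CHI2_95_2DOF) -> bool:
    """True if the measurement is statistically consistent with the prediction."""
    return nis_2d(innovation, S) <= threshold


if __name__ == "__main__":
    S = ((1e-6, 0.0), (0.0, 1e-6))      # 1 mrad standard deviation per axis
    print(passes_gate((1e-3, 1e-3), S))  # azimuth/elevation residuals in rad
    print(passes_gate((3e-3, 0.0), S))
```

A measurement that fails the gate is skipped or down-weighted rather than fused, which keeps a bird or glare blob from dragging the track.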

(5) Prediction (0.5–2.0 s).

Baseline: IMM-CTRV with covariance-aware extrapolation; optional tiny GRU/Transformer motion head refines turn-rate but is clipped by physics bounds. Outputs feed the guidance bridge as LOS rate $\dot{\lambda}$, closing speed $V_c$, and predicted intercept geometry.

6.6 Scheduling & cadence tricks that keep you under 50 ms

- Every-frame tracker, N-frame detector: run tracker at camera rate (40–60 Hz), detector at 10–20 Hz or on confidence dips.

- Gimbal-steered ROI: move the camera to keep the target near the principal point; reduce template search and detector tiling cost.

- Asymmetric RGB-T: thermal at half-resolution + shared neck; keep the thermal branch alive at night with minimal watts.

- INT8 everywhere: quantize detector & SOT; pin memory pools; avoid dynamic allocations in hot loops.

6.7 Health, confidence, and fail-safes

- Confidence bus: each node publishes a scalar confidence and contributing factors (texture, SNR, thermal clutter). The arbiter down-weights dubious measurements in the filter and can force re-detect.

- Watchdogs: per-node heartbeats; if a sensor stalls (e.g., thermal drops frames), the fusion node reconfigures to a degraded mode and raises an alert to guidance (e.g., restrict maximum chase angle).

- Thermals & power: companion monitors module temperature and current draw; switches to a “night-economy” profile (lower detector cadence, narrower ROI) when nearing thermal throttle.

- Disappearance protocol: explicit “target-invisible” flags propagate through the stack; the tracker maintains a motion-only hypothesis with inflating covariance until re-acquisition or timeout.

6.8 Guidance bridge (how perception talks to control)

- Interfaces: PX4 Offboard / ArduPilot Guided via MAVLink. The bridge publishes LOS rate, closing velocity, and predicted intercept point at 50–100 Hz, plus a track-quality bitfield (e.g., bearings-only / fused range / degraded).

- PN/GPN compatibility: we expose $\dot{\lambda}$ and $V_c$ directly, with covariance to gate commanded lateral acceleration when uncertainty spikes.

- Safety hooks: if covariance exceeds a mission-defined bound or ID switches exceed a threshold, the bridge requests a hold or reposition from the autopilot rather than driving into a bad estimate.
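
A minimal sketch of how a covariance gate can sit in front of a PN command, assuming the textbook planar law a = N · V_c · λ̇. The navigation gain, the uncertainty bound `sigma_max`, the acceleration limit, and the function name `pn_lateral_accel` are all illustrative assumptions rather than values from this stack.

```python
from typing import Optional


def pn_lateral_accel(los_rate: float, closing_speed: float, los_rate_sigma: float,
                     nav_gain: float = 3.0, sigma_max: float = 0.02,
                     a_max: float = 30.0) -> Optional[float]:
    """Return a commanded lateral acceleration in m/s^2, or None to request a hold.

    los_rate and los_rate_sigma are in rad/s, closing_speed in m/s.
    """
    if los_rate_sigma > sigma_max:
        # Estimate too uncertain: let the caller ask the autopilot to hold.
        return None
    accel = nav_gain * closing_speed * los_rate
    # Saturate to what the airframe can actually deliver.
    return max(-a_max, min(a_max, accel))


if __name__ == "__main__":
    print(pn_lateral_accel(0.05, 40.0, 0.005))
    print(pn_lateral_accel(0.50, 40.0, 0.005))
    print(pn_lateral_accel(0.05, 40.0, 0.050))
```

Returning `None` instead of a damped command keeps the decision explicit: the bridge either flies a trusted estimate or hands the autopilot a hold/reposition request.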

6.9 Training & modelOps (how we make it robust)

- Curriculum: pretrain on large aerial sets for small objects; fine-tune on anti-UAV RGB-T and thermal; inject bird/balloon negatives.

- Synthesis: generate long-range passes, back-lighting, haze, and high-yaw moves in a simulator (RGB + pseudo-TIR) to cover rare edge cases.

- Hard-negative mining: the logger crops ROIs and near-misses during flight; these feed an automated retraining loop.

- Compression: structured pruning + knowledge distillation before INT8 export (TensorRT / NPU backends). We keep a float32 “teacher” for continuous training and an INT8 “student” for flight.

6.10 DevOps in the field (so iteration is boring, not scary)

- A/B OTA with rollback: containers per node; feature flags for detector cadence, fusion gains, and re-detector interval.

- WORM audit logs: append-only event trail for detections, associations, filter residuals, and guidance outputs (privacy-scrubbed).

- Golden scenarios: scripted Offboard missions (back-lighting, gusts, GPS-degraded) run before each release; regressions are caught on time-to-lock, reacquire, and glass-to-state.

- Ground/mobile app: low-latency video with overlaid track box and state vectors; toggles for night profile, ROI gating, and debug overlays. (Yes, A-Bots.com also builds the operator app when you need it.)

6.11 What ships from A-Bots.com

- ROS 2 packages (detector, tracker, fusion, predictor, guidance bridge) with launch files for Jetson/QRB carriers.

- Quantized engines (INT8) for all models + calibration sets and export scripts.

- Calibration & timing kit: checkerboards, boresight procedure, PPS fan-out harness, and a timing validation tool.

- Test-range scripts & dashboards: record/replay, KPI dashboards (TTFL, TTR, lock %, latency), and dataset taps for retraining.

- Documentation: integration guide for PX4/ArduPilot, wiring and lever-arm diagrams, and the operational playbook (profiles, failure modes, quick-look charts).

Why this blueprint works

It meets the physics where they are: EO for appearance, LWIR for night, optional mmWave to crush range ambiguity; a detector-tracker pair that prioritizes re-acquire; fusion that admits bearings-only reality but exploits range/Doppler when SWaP permits; and a guidance bridge that provides exactly what PN/GPN needs, with uncertainty attached. Most importantly, it is operationalized: time-sync, health, OTA, and metrics are first-class citizens, so the system improves with each flight.


7.Known Pitfalls & How We Mitigate Them

C-UAV perception fails for boring reasons (timing, thermals, calibration) and for wicked ones (tiny targets, night clutter, evasive maneuvers). The cure is not a single model but a systemic playbook that keeps acquisition, lock, and re-acquisition stable under stress. Here are the main traps and the countermeasures we ship.

7.1 Tiny targets, blur, and optics reality

Pitfall. At long standoff, a drone is a few dozen pixels; any blur or rolling-shutter skew destroys features. Autofocus “hunts” during zoom, thermal boresight drifts with temperature, and narrow FOVs amplify gimbal vibration.

Mitigations.

- Global shutter + fast exposure with ISO discipline; lock focus at hyperfocal or run focus-from-contrast confined to the ROI (no full-frame hunts).

- Gimbal-steered ROI to keep the target near the principal point; smaller search windows → higher SNR tracking.

- Event camera assist for high-G turns and glare; microsecond latency prevents streaking.

- Thermal–RGB co-boresight & temperature compensation; quick pre-flight boresight pattern and a per-hour micro-adjust.

- Optics hygiene: anti-fog heater, hydrophobic coating, thin polarizer when glare dominates (trade carefully with light).

7.2 False positives: birds, balloons, and look-alikes

Pitfall. Detectors flag birds or airborne clutter, then trackers dutifully “lock” the wrong thing.

Mitigations.

- Kinematic filters: wingbeat micro-oscillations (2–15 Hz) and non-ballistic drift betray birds/balloons; reject with short-window spectral tests.

- Classifier head trained on “non-drone” hard negatives (birds, kites, debris) and thermal shape priors at night.

- Multi-hypothesis tracking (tracklet bank) with ID switches penalized; wrong hypotheses die when kinematics disagree.

- RF/acoustic cues as weak validators when available (bearing-only hints to down-weight false tracks).
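
The short-window spectral test from the first bullet can be sketched as a band-energy ratio. The input here is assumed to be a per-frame scalar such as the vertical jitter of the box centroid; the function name `band_energy_fraction` and any decision threshold you apply to its output are assumptions, and a fielded discriminator would combine this with the other cues listed above.

```python
import cmath
import math
from typing import Sequence


def band_energy_fraction(samples: Sequence[float], fs: float,
                         f_lo: float = 2.0, f_hi: float = 15.0) -> float:
    """Fraction of non-DC spectral energy that falls inside [f_lo, f_hi] Hz."""
    n = len(samples)
    if n < 4:
        raise ValueError("window too short for a spectral test")
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove the DC component
    total = 0.0
    band = 0.0
    for k in range(1, n // 2 + 1):
        # Plain DFT bin; fine for the short windows used here.
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        power = abs(coeff) ** 2
        total += power
        if f_lo <= k * fs / n <= f_hi:
            band += power
    return band / total if total > 0.0 else 0.0


if __name__ == "__main__":
    fs, n = 60.0, 60  # one-second window at 60 Hz
    flapping = [math.sin(2 * math.pi * 6.0 * t / fs) for t in range(n)]
    drifting = [0.1 * t for t in range(n)]
    print(round(band_energy_fraction(flapping, fs), 3))
    print(round(band_energy_fraction(drifting, fs), 3))
```

A 6 Hz oscillation puts nearly all of its energy in the wingbeat band, while a slow drift concentrates energy below 2 Hz, so the ratio separates the two cases in this toy example.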

7.3 Occlusions, pop-in/pop-out, and re-acquisition

Pitfall. Targets vanish behind clutter, sun glare, or attitude flips; pure SOT drifts, pure MOT loses identity.

Mitigations.

- Detector every N frames (or on confidence dips), tracker every frame; when confidence < τ, trigger global re-detector.

- Explicit disappearance state in the track (covariance inflation instead of hallucinated boxes).

- RGB-T arbitration: if EO confidence collapses, thermal branch seeds the re-acquire.

- Time-to-reacquire KPIs in the flight loop; alarms if TTR > budget.

7.4 Bearings-only depth blow-up

Pitfall. Without range, the EKF/UKF inflates along the LOS; prediction becomes useless at 1–2 s horizons.

Mitigations.

- Add a light ranging channel (mmWave / short-range LiDAR) whenever SWaP allows; even 10–20 Hz range/Doppler collapses depth.

- Observer maneuvers (gentle weave/loiter) to restore observability in vision-only mode; plan profiles in the guidance layer.

- Uncertainty-aware guidance: PN/IBVS commanded acceleration is gated by covariance; no aggressive chase on bad depth.

7.5 Time sync, clocks, and timestamp lies

Pitfall. Camera/IMU/radar skew >1 ms corrupts fusion; packet reordering introduces negative latency; drift accumulates over long sorties.

Mitigations.

- Hardware sync: PPS fan-out to camera trigger + IMU; PTP on Ethernet sensors.

- Jitter budget with drop-late policy: frames older than Δt never enter the filter.

- Sync validation: strobe a sync LED; log frame vs IMU residuals; alarm on drift >500 μs.
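
The drop-late policy amounts to a timestamp check before a measurement reaches the filter. In the sketch below the 50 ms age budget, the out-of-order rejection rule, and the name `admit_measurement` are assumptions; all times are seconds on a shared clock.

```python
def admit_measurement(stamp_s: float, now_s: float, last_fused_stamp_s: float,
                      max_age_s: float = 0.05) -> bool:
    """Decide whether a stamped measurement may enter the fusion filter."""
    age = now_s - stamp_s
    if age < 0.0:
        return False  # stamped in the future: clock or sync fault
    if age > max_age_s:
        return False  # too old: drop instead of fusing stale data
    if stamp_s <= last_fused_stamp_s:
        return False  # arrived out of order: filter has already moved past it
    return True


if __name__ == "__main__":
    print(admit_measurement(9.98, 10.00, 9.95))   # fresh and in order
    print(admit_measurement(9.90, 10.00, 9.85))   # older than the budget
    print(admit_measurement(10.01, 10.00, 9.95))  # negative latency
```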

7.6 Calibration creep (intrinsics/extrinsics)

Pitfall. Hot/cold cycles shift intrinsics; gimbal flex shifts extrinsics; RGB↔TIR mis-registration breaks fusion.

Mitigations.

- Scheduled re-cal (intrinsics monthly, extrinsics weekly or after hard landings).

- Online micro-calibration: small, bounded extrinsic updates using background parallax; clamp to avoid runaway.

- Mechanical discipline: fixed lever-arm documentation, torque specs, thread-locker; no “mystery” shifts.

7.7 Thermal limits and power brownouts

Pitfall. Companion throttles under sun; battery sag plus radio TX spikes cause frame drops.

Mitigations.

- Economy profiles: halve detector cadence, narrower ROI, thermal half-res at high module temps.

- Power rails isolation (cameras/radios separate with LC filters), brownout logging, and pre-arm thermal soak.

- Heatsink + ducted airflow across the module; avoid recirculation inside the fuselage.

7.8 ROS 2 back-pressure & scheduling

Pitfall. DDS tries to be helpful and becomes a latency machine: queues swell, GC stalls, logging blocks the hot path.

Mitigations.

- QoS discipline: images best-effort keep_last(1); states reliable with tiny depth; bounded message sizes.

- SCHED_FIFO + CPU isolation for detector/tracker/fusion; telemetry/logging on best-effort threads.

- Zero-copy paths and pre-allocated buffers; never allocate in the hot loop.

7.9 Domain shift: lab → range → night

Pitfall. Models trained on daytime datasets fall apart at dusk/night or in haze.

Mitigations.

- RGB-T from day one; do not “add thermal later.”

- Curriculum training: large aerial sets → Anti-UAV/TIR fine-tune → hard-negative mining from flight logs.

- Simulation fills the tails (back-lighting, glare, high-yaw passes); validate with golden scenarios before every release.

7.10 Tracker-guidance mismatches

Pitfall. Great tracks that guidance can’t fly: sudden setpoint jumps, FOV saturation, actuator limits, or PN gains tuned for perfect LOS-rate.

Mitigations.

- FOV-aware PN/IBVS-PN: don’t command what the gimbal can’t keep in view.

- Setpoint smoothing and rate limits on Offboard commands; publish track-quality flags so guidance can down-weight bad states.

- Miss-distance telemetry and replay harness: close the loop on guidance gains with real fused states.

7.11 Weather and aerosols

Pitfall. Rain, fog, dust, and sun glare tank EO; thermal clutter overheats the scene; droplets on optics create false blobs.

Mitigations.

- Thermal dominance at night/fog, EO dominance in clear day; automatic branch weighting.

- Lens heating & hydrophobic coating; quick wipe protocol on landing.

- Temporal filters for thermal noise and dynamic background subtraction; glare masks near the sun vector.

7.12 ID stability and long chases

Pitfall. Over minutes, ID switches creep in; template staleness degrades SOT.

Mitigations.

- Periodic template refresh on SOT with scale/pose heuristics.

- Identity consistency loss in MOT training; appearance memory bank for re-ID after long occlusions.

- Track aging policies that retire stale hypotheses cleanly.

7.13 Safety rails (because bad estimates can be dangerous)

Pitfall. Overconfident filters drive aggressive intercepts into uncertainty.

Mitigations.

- NIS/NEES checks gate updates; covariance inflation on anomalies.

- “Quality-gated guidance”: if covariance or ID-switch rate exceeds thresholds, the bridge commands hold/reposition, not chase.

- Geofence and hard aborts owned by the autopilot, not the companion; companion is advisory when confidence drops.

7.14 Test discipline and observability

Pitfall. Teams “feel” performance but can’t prove regressions.

Mitigations.

- KPIs by design: Time-to-First-Lock, Time-to-Reacquire, Lock %, glass-to-state latency.

- WORM logs of detections, associations, filter residuals, and guidance commands; A/B OTA with rollback.

- Golden flight scripts (back-lighting, gusts, GPS-degraded, bird-rich passes) run before each release; block ship on KPI regressions.

The A-Bots.com angle (quietly)

Our deliverables bake these mitigations in: RGB-T + optional mmWave, global-shutter optics and gimbal ROI, SOT+MOT dual path with explicit disappearance states, IMM fusion with uncertainty-gated guidance, ruthless time-sync, and field ops (OTA, golden scenarios, WORM logs). The result isn’t a “demo that worked once,” but a repeatable perception loop that keeps lock, loses it gracefully, and gets it back fast—day or night.


8.Evaluation Metrics & Fielding

A perception stack that looks good offline but fails in the air is useless. We therefore evaluate on three concentric rings: offline datasets → closed-loop simulation/HIL → instrumented flight tests, and we gate releases on engagement-level KPIs rather than abstract AP scores. This section defines the metrics, the test protocol, and what “pass” actually means.

8.1 What we measure (and why guidance cares)

Acquisition & persistence

- Time-to-First-Lock (TTFL). Time from first visual evidence of target to a valid fused track (covariance below threshold).

- Lock % / Mean Lock Duration. Fraction of a chase spent with a valid track; mean continuous lock segment length.

- Time-to-Reacquire (TTR). Median time to recover lock after a forced invisibility/occlusion interval $\Delta t_{\mathrm{occ}}$.

Tracker quality (fit for control)

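- LOS-rate error $\epsilon_{\dot{\lambda}} = \dot{\lambda}_{\mathrm{est}} - \dot{\lambda}_{\mathrm{ref}}$ (planar or 3-D); guidance lives off $\dot{\lambda}$.

- Closing-speed error $\epsilon_{V_c} = V_{c,\mathrm{est}} - V_{c,\mathrm{ref}}$.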

- ID stability. ID-switch rate per minute; long-chase identity survival.

End-to-end dynamics

- Glass-to-State latency $t_{\mathrm{g2s}} = t_{\mathrm{publish}} - t_{\mathrm{exposure}}$; distribution at p50/p95.

- Setpoint jitter (variance of guidance-bridge outputs at steady target kinematics).

- FOV headroom $H = 1 - \max(|u|, |v|)$ for normalized image coords; guard against edge-of-frame control.
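
These end-to-end quantities are simple to compute from logs. The sketch below assumes exposure and publish timestamps in seconds on a common clock, image coordinates normalized to [-1, 1] with 0 at the principal point, and a nearest-rank percentile; the function names are illustrative.

```python
import math
from typing import List, Sequence


def percentile(values: Sequence[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100.0 * len(ordered)))
    return ordered[rank - 1]


def glass_to_state_ms(exposure_s: Sequence[float],
                      publish_s: Sequence[float]) -> List[float]:
    """Per-frame latency from sensor exposure to published state, in ms."""
    return [(pub - exp) * 1000.0 for exp, pub in zip(exposure_s, publish_s)]


def fov_headroom(u: float, v: float) -> float:
    """1.0 at the image center, 0.0 when the target touches the frame edge."""
    return 1.0 - max(abs(u), abs(v))


if __name__ == "__main__":
    latencies = glass_to_state_ms([0.000, 0.025, 0.050], [0.041, 0.070, 0.088])
    print(percentile(latencies, 50), percentile(latencies, 95))
    print(fov_headroom(0.25, -0.5))
```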

Safety & robustness

- Graceful-degradation score. Portion of time the system remains in a defined degraded but safe mode after a sensor drop (e.g., thermal loss, radar dropout), without hard faults.

- False-track minutes per hour (bird/balloon).

- Thermal headroom $\Delta T = T_{\mathrm{throttle}} - T_{\mathrm{module}}$ and power brownout events (count).

Standard CV metrics for comparability

- SOT: precision @ 20 px, success AUC (OTB/LaSOT style), VOT EAO.

- MOT/Td: HOTA, IDF1, MOTA.

- Detection: AP on small objects (COCO-S), recall under tiling.

8.2 Offline: dataset protocols that predict field behavior

- Pretrain → specialize. Pretrain detectors/trackers on large aerial sets for small objects; fine-tune on anti-UAV RGB/TIR and thermal tiny-UAV subsets.

- Split by scene & time. Ensure day/dusk/night splits; report night separately (thermal is the failure regime).

- Long-term stress. Include disappearances/occlusions and report re-acquire rate (percentage of disappearances followed by a correct reacquire within ≤1.0s).

- Bird/balloon negatives. Maintain a non-drone class; track FPR at fixed TPR for long-range silhouettes.

- Export control-grade signals. Besides boxes/IDs, log $\dot{\lambda}$, $V_c$, and per-frame covariance so you can rehearse PN in replay.

Offline success ≠ ship; but it filters deltas before you burn battery.
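
The re-acquire rate described above can be computed from a list of disappearance events. This sketch assumes each event records when the target became visible again and when a correct lock was restored (or `None` if it never was); the name `reacquire_stats` and the event layout are assumptions.

```python
from statistics import median
from typing import List, Optional, Tuple

Event = Tuple[float, Optional[float]]  # (t_visible_again_s, t_lock_restored_s)


def reacquire_stats(events: List[Event], budget_s: float = 1.0):
    """Return (fraction reacquired within budget, median delay of recovered events)."""
    delays = [lock - visible for visible, lock in events if lock is not None]
    within_budget = sum(1 for delay in delays if delay <= budget_s)
    rate = within_budget / len(events) if events else 0.0
    return rate, (median(delays) if delays else None)


if __name__ == "__main__":
    log = [(10.0, 10.4), (20.0, 21.5), (30.0, None), (40.0, 40.6)]
    rate, median_delay = reacquire_stats(log)
    print(f"re-acquire rate: {rate:.2f}, median delay: {median_delay:.2f} s")
```

Events that never recover count against the rate but are excluded from the median, so the two numbers should always be reported together.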

8.3 SIL/HIL: close the loop before you fly

Software-in-the-Loop (SIL). Play back real sensor logs and synthetic edge cases; run the exact ROS 2 graph and guidance bridge. Score PN miss-distance in replay under measured $\dot{\lambda}$, $V_c$, and actuator limits.
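
One common way to write this replay score, assuming textbook PN with a navigation gain $N$ and an acceleration limit $a_{\max}$ (both are assumptions for illustration, not values from this brief), with $\mathbf{r} = \mathbf{p}_t - \mathbf{p}_o$ as defined in Section 1.1:

```latex
a_{\mathrm{cmd}}(t) = \operatorname{sat}_{a_{\max}}\!\big( N \, V_c(t) \, \dot{\lambda}(t) \big),
\qquad
d_{\mathrm{miss}} = \min_{t \in [t_0,\, t_f]} \lVert \mathbf{r}(t) \rVert
```

Here $\operatorname{sat}_{a_{\max}}$ clips the command to the actuator limit and $d_{\mathrm{miss}}$ is the closest approach over the replayed engagement window $[t_0, t_f]$.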

Hardware-in-the-Loop (HIL). Autopilot + companion on bench power, GNSS/IMU stimulators or visual-inertial rigs; verify latency/jitter, time sync, and MAVLink Offboard stability at 50–100 Hz. Fail HIL if the p95 of $t_{\mathrm{g2s}}$ exceeds budget or PPS/PTP skew drifts > 500 µs over 30 min.

8.4 Flight test protocol (how we prove it)

Pre-flight instrumentation

- PPS fan-out to EO trigger and IMU; timestamp strobe visible to EO/LWIR.

- WORM logs for detections, associations, filter residuals, covariance, guidance outputs, temperatures, and current draw.

- Range with controlled target drone and scripted trajectories.

Golden scenarios (repeat every release)

- Daylight, clear sky long standoff; back-lighting near solar azimuth; dusk/night with mixed background heat.

- High-yaw chases (±80°/s), occlusions (0.3/0.7/1.5 s), bird-rich passes, GPS-degraded segments, and wind gusts.

- Sensor dropouts: kill thermal for 10 s; mute radar for 10 s; verify degraded-mode behavior and TTR.

Run design

- ≥ 5 runs per scenario, ≥ 3 locations; randomized order to avoid operator bias.

- Record operator interventions; annotate “no-fly” aborts.

8.5 Acceptance thresholds (ship / no-ship)

Use the SLAs from Section 6 as gates; for clarity we restate the key ones and add statistical guards:

- TTFL: ≤ 3.0 s (day EO), ≤ 6.0 s (dusk RGB-T), both at p90.

- TTR (1.0 s occlusion): ≤ 0.7 s at p90.

- Lock %: ≥ 85% over a scripted 60-s chase (median of runs).

- Glass-to-State: ≤ 50 ms p50, ≤ 70 ms p95.

- LOS-rate error: $\mathrm{RMSE}(\epsilon_{\dot{\lambda}}) \le 0.8^\circ/\mathrm{s}$ day, $\le 1.2^\circ/\mathrm{s}$ night.

- ID switches: ≤ 0.2 / min (median) on long chases.

- False-track minutes: ≤ 1.0 / hour, none persisting > 5 s.

- Thermal headroom: $\Delta T \ge 10\,^\circ\mathrm{C}$ throughout each run; zero brownouts.

A release fails if any p95 metric exceeds its cap, or if any safety rail (covariance gate, geofence) triggers more than once per run on ≥ 20% of runs.
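
A ship/no-ship check over the thresholds listed above can be expressed as a small table-driven gate. The limits are copied from this section; the metric key names and the function `release_gate` are hypothetical, and the per-run safety-rail rule is left out of this sketch.

```python
from typing import Dict, List, Tuple

# (direction, limit): "max" means the value must not exceed the limit,
# "min" means it must not fall below it.
THRESHOLDS: Dict[str, Tuple[str, float]] = {
    "ttfl_day_p90_s": ("max", 3.0),
    "ttfl_dusk_p90_s": ("max", 6.0),
    "ttr_p90_s": ("max", 0.7),
    "lock_pct_median": ("min", 85.0),
    "g2s_p50_ms": ("max", 50.0),
    "g2s_p95_ms": ("max", 70.0),
    "los_rate_rmse_day_dps": ("max", 0.8),
    "los_rate_rmse_night_dps": ("max", 1.2),
    "id_switches_per_min": ("max", 0.2),
    "false_track_min_per_hour": ("max", 1.0),
    "thermal_headroom_min_c": ("min", 10.0),
    "brownouts": ("max", 0.0),
}


def release_gate(measured: Dict[str, float]) -> List[str]:
    """Return a list of failure messages; an empty list means ship."""
    failures = []
    for key, (direction, limit) in THRESHOLDS.items():
        if key not in measured:
            failures.append(f"{key}: missing")
            continue
        value = measured[key]
        ok = value <= limit if direction == "max" else value >= limit
        if not ok:
            failures.append(f"{key}: {value} violates {direction} {limit}")
    return failures
```

Treating a missing metric as a failure is deliberate: a release that did not measure a KPI should not pass on it by default.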

8.6 Statistical treatment (trust the numbers)

- Distribution-aware reporting. Always publish p50/p90/p95, not just means.

- Bootstrap CIs. 10k resamples on per-run metrics; report 95% CI for TTFL, TTR, Lock %.

- Change detection. Pre- vs post-release deltas with paired tests (or overlapping CIs) on the same golden scenarios.

- Outliers. Keep them; annotate cause. Only exclude when instrumentation fault is proven (e.g., broken timestamp wire).
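
The bootstrap interval above can be computed with the standard library alone. This is a percentile-bootstrap sketch; the function name `bootstrap_ci`, the fixed seed, and the sample TTR values are assumptions for illustration.

```python
import random
from statistics import median
from typing import Callable, Sequence, Tuple


def bootstrap_ci(values: Sequence[float],
                 stat: Callable[[Sequence[float]], float] = median,
                 n_resamples: int = 10_000, alpha: float = 0.05,
                 seed: int = 0) -> Tuple[float, float]:
    """Percentile-bootstrap confidence interval for a per-run statistic."""
    if not values:
        raise ValueError("no samples")
    rng = random.Random(seed)  # seeded so reports are reproducible
    n = len(values)
    # Resample runs with replacement and recompute the statistic each time.
    stats = sorted(stat(rng.choices(values, k=n)) for _ in range(n_resamples))
    lo_idx = int((alpha / 2.0) * n_resamples)
    hi_idx = min(n_resamples - 1, int((1.0 - alpha / 2.0) * n_resamples))
    return stats[lo_idx], stats[hi_idx]


if __name__ == "__main__":
    ttr_runs_s = [0.41, 0.52, 0.48, 0.63, 0.55, 0.47, 0.58, 0.50, 0.66, 0.44]
    low, high = bootstrap_ci(ttr_runs_s)
    print(f"median TTR 95% CI: [{low:.2f}, {high:.2f}] s")
```

Resampling whole runs, rather than individual frames, keeps the interval honest about run-to-run variation, which is what a release decision depends on.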

8.7 Sync, calibration, and health gates (prevent self-inflicted wounds)

- Sync gate: refuse arming if PPS/PTP skew > 500 µs or if any camera lacks a valid trigger in last 5 s.

- Calibration gate: boresight delta > 0.15° or extrinsic drift beyond clamp → re-cal prompt; block night missions if RGB↔TIR mis-registration exceeds ROI budget.

- Health gate: module temp within $\Delta T$ margin; detector cadence auto-drops when temp margin < 5 °C; log and signal “night-economy” mode.

8.8 Operator-visible telemetry (what the ground app must show)

- Track state with covariance cones and a single confidence scalar.

- LOS-rate and closing-speed gauges; PN “flyability” light (green/amber/red) tied to covariance.

- Degraded-mode banner (vision-only, thermal-only, no-radar) and sensor watchdogs.

- Latency meter (g2s p50/p95) and thermal headroom bar.

Operators fly better when they can see what the estimator believes and how certain it is.

8.9 Post-run analytics (how we improve each flight)

- Auto-scans for regressions against golden baselines: TTFL, TTR, Lock %, g2s latency, ID-switches.

- Hard-negative mining: export false tracks and near-miss ROIs into the retraining bucket.

- Energy & thermal plots vs. cadence; propose detector/tracker scheduling tweaks per theater (day vs night profiles).

- Issue triage: every fail tagged perception, sync, thermal/power, or guidance coupling with recommended fixes.

8.10 Quiet A-Bots.com angle

We ship these metrics and fielding tools with the stack: WORM logs, golden scenario scripts, a guidance-compatible KPI dashboard, and an operator app that surfaces confidence, latency, and headroom in real time. That’s how we keep the perception loop honest—and how teams move from “a demo that worked once” to a repeatable C-UAV capability that holds lock, loses it gracefully, and gets it back fast.

9.Where A-Bots.com fits

A-Bots.com fits into counter-UAV perception as a build-to-scale engineering partner that treats detection, tracking, and prediction as a single control-quality pipeline. The team’s remit is not “add a model to a drone,” but to deliver a synchronized sensing stack, an embedded inference graph, and a guidance-ready state estimator that hold a maneuvering intruder with honest latency and quantified uncertainty. The outcome is measured at the aircraft boundary—time-to-first-lock, time-to-reacquire, lock percentage, and glass-to-state latency—rather than by offline scorecards.

On hardware, A-Bots.com assumes ownership of the SWaP trade. They specify optics and focal lengths that keep a tiny target in a tractable pixel footprint, select EO/LWIR cores that survive thermal cycles, and decide when mmWave range/Doppler or LiDAR are worth the grams. Companion compute is standardized around Jetson Orin NX or Qualcomm QRB5165, with attention to camera I/O lanes, PPS/trigger distribution, and EMI-clean layouts. Boresight and lever-arm geometry are documented like avionics, not “best effort,” because fusion quality collapses if milliradian details drift.

On algorithms, the company ships a detector-plus-tracker loop augmented by VIO and an IMM-UKF that produces PN-ready LOS-rate and closing-speed estimates. RGB↔thermal fusion is first-class for dusk and night; mmWave, when available, collapses range ambiguity so short-horizon prediction remains stable. Models are quantized to INT8, trackers are compressed, cadences are split so the tracker runs every frame while the detector fires opportunistically, and gimbal-steered ROIs keep compute where the target actually is. The goal is not just accuracy but thermal sustainability over a full sortie.
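
The cadence split described above, where the tracker runs every frame and the detector fires opportunistically, might be scheduled along these lines. This is a sketch under assumed rules: the detector is triggered on the first frame, whenever tracker confidence drops below a threshold, or after a maximum gap. The function names and the default thresholds are hypothetical.

```python
def should_run_detector(frame_idx, last_detect_idx, track_confidence,
                        max_gap_frames=15, min_confidence=0.5):
    """Decide whether the (expensive) detector should run on this frame."""
    if last_detect_idx is None:            # nothing detected yet: bootstrap
        return True
    if track_confidence < min_confidence:  # tracker is unsure: re-detect now
        return True
    # Otherwise refresh periodically to bound tracker drift.
    return frame_idx - last_detect_idx >= max_gap_frames


def detector_schedule(confidences, **kwargs):
    """Return the frame indices on which the detector would fire.

    confidences: per-frame tracker confidence in [0, 1]; the tracker itself
    is assumed to run on every frame regardless of this schedule.
    """
    last = None
    fired = []
    for idx, conf in enumerate(confidences):
        if should_run_detector(idx, last, conf, **kwargs):
            fired.append(idx)
            last = idx
    return fired


# Ten frames with a confidence dip on frame 5 and a 4-frame refresh interval.
fired = detector_schedule([0.9] * 5 + [0.3] + [0.9] * 4, max_gap_frames=4)
```

In this run the detector fires on frames 0, 4, 5, and 9: once to bootstrap, twice on the periodic refresh, and once because confidence dipped. Raising `max_gap_frames` trades detector compute, and therefore heat, against how long drift can go uncorrected.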

Data and training are treated as an operations problem. A-Bots.com curates aerial small-object corpora, fine-tunes on anti-UAV RGB-T and thermal sets, injects bird/balloon hard negatives, and uses simulation to manufacture rare edge cases like back-lighting, haze, and high-yaw passes. Every flight feeds a WORM log that yields hard-negative mines and mis-association examples for the next training cycle. Distillation compresses teacher models into deployable INT8 students, and export pipelines produce deterministic TensorRT or NPU engines with fixed input shapes and latency envelopes.

Avionics integration is explicit. The company owns the guidance bridge into PX4 Offboard or ArduPilot Guided, publishing LOS-rate, closing velocity, predicted intercept geometry, and covariance so PN or IBVS-PN can fly within field-of-view and actuator limits. Uncertainty gates setpoint aggressiveness, disappearance states prevent hallucinated boxes from driving control, and health flags communicate when the system is in bearings-only or degraded-sensor modes. This is how perception and guidance stop arguing and start cooperating.
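
One way to picture uncertainty gating is a planar proportional-navigation command whose effective gain shrinks as LOS-rate variance grows, and which drops to zero when the target is in a disappearance state. The sketch below assumes the classic scalar PN form a = N · Vc · λ̇. The gate shape, the parameter names, the default values, and the rule of commanding zero for a non-positive closing speed are illustrative assumptions, not the stack's actual control law.

```python
import math


def gated_pn_accel(los_rate, closing_speed, los_rate_var, target_visible,
                   nav_gain=3.0, sigma_soft=0.05, accel_limit=20.0):
    """Planar PN lateral acceleration (m/s^2), softened by uncertainty.

    los_rate:       estimated LOS rate (rad/s)
    closing_speed:  estimated closing speed Vc (m/s), positive when closing
    los_rate_var:   variance of the LOS-rate estimate (rad^2/s^2)
    target_visible: False while the tracker reports a disappearance state
    """
    # Never steer on a hallucinated or opening-geometry target (assumed rule).
    if not target_visible or closing_speed <= 0.0:
        return 0.0

    sigma = math.sqrt(max(los_rate_var, 0.0))
    # Gate is 1 for a certain estimate and 0.5 when sigma equals sigma_soft.
    gate = 1.0 / (1.0 + (sigma / sigma_soft) ** 2)

    accel = nav_gain * gate * closing_speed * los_rate
    # Respect an assumed actuator limit.
    return max(-accel_limit, min(accel_limit, accel))


confident = gated_pn_accel(0.1, 10.0, 0.0, True)
uncertain = gated_pn_accel(0.1, 10.0, 0.05 ** 2, True)
lost = gated_pn_accel(0.1, 10.0, 0.0, False)
```

With a zero-variance estimate the command is the plain PN value of about 3 m/s²; when the LOS-rate standard deviation reaches `sigma_soft` the command is halved; and a disappearance state yields no command at all.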

Fielding is engineered as a product, not an event. A-Bots.com provides OTA with rollback and feature flags, timing validation kits for PPS/PTP, and golden scenario scripts that reproduce back-lighting, gusts, GPS-degraded legs, and sensor dropouts. Acceptance is tied to the same KPIs used during design—TTFL, TTR, lock percentage, latency distributions, ID stability—so upgrades either ship or stand down based on numbers, not sentiment. An operator app presents low-latency video with track overlays, confidence and covariance cones, thermal headroom, and a simple flyability indicator for the guidance state.

Security and deployment constraints are handled without drama. Builds can run on-prem or air-gapped; logs are privacy-scrubbed; code and model artifacts can be escrowed under NDA; and documentation includes calibration procedures, timing harnesses, and maintenance playbooks so line crews can re-boresight and re-sync without a research engineer on site. Export and compliance concerns are treated as guardrails in planning rather than surprises at the end.

Commercially, A-Bots.com engages in staged programs: a flight-worthy proof of capability that hits the KPI floor, followed by an industrialization phase that hardens the stack, expands sensor options, and transfers know-how to in-house teams. Handover includes ROS 2 packages, quantized engines, calibration assets, test-range scripts, and a regression dashboard aligned with mission SLAs. The quiet promise is straightforward: fewer minutes lost to reacquire, tighter LOS-rate estimates under stress, and a perception loop that lets guidance do its job—day or night.



Copyright © Alpha Systems LTD All rights reserved.
