Home

Services

About us

Blog

Contacts

Edge-Ready Dialogues: Unlocking AI Chatbot Offline Capabilities for Mission-Critical Apps

1.Engineering AI Chatbot Offline Capabilities at the Edge

2.From Concept to Launch with A-Bots.com, Your Chatbot Development Company

Where AI Chatbot Offline Capabilities Create Real Value

1. Engineering AI Chatbot Offline Capabilities at the Edge

1.1 Why “Always-On” Matters

AI chatbot offline capabilities are no longer a fringe curiosity—they are becoming the backbone of mission-critical digital experiences that cannot afford a “No Internet” dead end. Think aircraft cockpits, maritime bridges, rural health stations, underground metro systems, or disaster-relief zones. In each context, AI chatbot offline capabilities ensure the user still receives coherent intent recognition, context retention, and policy-aware responses even when every bar of connectivity disappears. Latency drops from hundreds of milliseconds (round-tripping to the cloud) to tens of milliseconds (on-device). Privacy improves because sensitive queries—medical triage data, maintenance logs, passenger manifests—never leave the handset or embedded edge gateway. Most crucially, AI chatbot offline capabilities keep business workflows and safety procedures unbroken: a technician can still ask for torque specs, and a passenger with limited vision can still navigate a cabin interface.

“Always-on” does not merely imply caching static FAQ answers. True AI chatbot offline capabilities replicate the conversational dynamism of cloud models: slot filling, sentiment detection, multi-turn disambiguation, contextual carry-over, and multi-modal input fusion (speech + vision) must all function locally. Achieving this demands disciplined model engineering, a pragmatic edge architecture, and ruthless optimisation of every byte and FLOP.

1.2 Model Compression and On-Device NLU

Delivering AI chatbot offline capabilities begins with shrinking transformer behemoths—often hundreds of millions of parameters—into “tiny-LLMs” that still reason, paraphrase, and ground user context. Three complementary strategies dominate:

- Quantisation. Converting 32-bit floating weights to INT8 or mixed INT4/INT8 reduces size by 4-8× and slashes memory bandwidth. Post-training quantisation with outlier channel splitting preserves accuracy within 1 %. Such optimisation is essential because AI chatbot offline capabilities must respect smartphone DRAM ceilings and microcontroller cache lines.

- Distillation. Knowledge distillation trains a compact student model to mimic the logits and hidden-state dynamics of a large teacher. Layer-wise cosine loss and attention transfer preserve reasoning depth. For robust AI chatbot offline capabilities, the student is fine-tuned on edge-specific domain corpora—aviation checklists, maritime regulations, offline ICD-10 codes—so that contextual recall remains impeccable.

- Parameter-efficient finetuning. LoRA, QLoRA, or IA3 adapters add a few million trainable ranks on top of frozen base weights. These adapters can be swapped per tenant or per device, enabling customizable AI chatbot offline capabilities without shipping new binaries.

Modern toolchains such as TensorFlow Lite, ONNX Runtime Mobile, PyTorch Mobile, Metal Performance Shaders, and Core ML compile the compressed networks into operator graphs that exploit ARM NEON, Apple Neural Engine, Qualcomm HTP, or MCUs with CMSIS-NN. A well-tuned graph scheduler is pivotal: sustained performance of 10 TOPS/W lets AI chatbot offline capabilities answer hundreds of user turns on a single battery charge.

Natural-language understanding is only half of the story. Dialogue-state trackers, slot ranks, and policy networks also demand shrinkage. Using spectral-norm regularised GRUs for state tracking cuts parameters by 70 % versus full-attention decoders, yet still supports the multi-turn, multi-domain reasoning that AI chatbot offline capabilities promise.

1.3 Local Data Flow and Architecture

Engineering excellence demands more than a lightweight language model; data orchestration is the silent hero. The canonical edge stack for AI chatbot offline capabilities comprises:

- Encrypted local vector store. Each device hosts an FAISS or Milvus-lite index of domain embeddings. When the model queries for retrieval-augmented prompts, the index returns the five most relevant passages in <3 ms, ensuring AI chatbot offline capabilities surface up-to-date manuals, SOPs, or patient histories without cloud dependency.

- Smart sync layer. When connectivity returns, delta-sync merges local knowledge graphs, adapter weights, and conversation logs into the central repository. Conflict-free replicated data types (CRDTs) avoid merge storms, guaranteeing that AI chatbot offline capabilities never corrupt global truth.

- Event-driven policy engine. Edge-resident business rules—written in Rego or Lua—validate sensitive intents before execution. For example, a diagnosis suggestion is displayed but not stored unless a local clinician signs off. Embedding such governance enforces compliance while maintaining AI chatbot offline capabilities.

Security is non-negotiable. On-device secrets use hardware enclaves (Secure Enclave, TrustZone) and AES-GCM encryption. Differentially private logging adds calibrated Gaussian noise to prevent deanonymisation. A root-of-trust boot chain ensures that adversaries cannot tamper with the binaries powering AI chatbot offline capabilities.

Voice input introduces extra nuance. Streaming ASR models like Vosk-Kaldi, Whisper Tiny, or wav2vec 2.0 quantised convert 16 kHz audio into text in real time on 1.6 GHz cores. Crucially, the acoustic front-end and the NLU pipeline share the same tokenizer so that AI chatbot offline capabilities handle capitalization, language switches, and domain jargon consistently.

1.4 Performance and Security Trade-Offs

Balancing fluid UX with device constraints is the final hurdle. Achieved incorrectly, AI chatbot offline capabilities drain batteries or exceed thermal budgets; achieved correctly, they feel indistinguishable from cloud chat. Empirical benchmarks reveal three pivot variables:

- Context window length. Truncating token history from 4 k to 1 k cuts compute by 75 % with minor semantic drift. Sliding-window attention keeps the last 128 user tokens at full resolution while compressing older turns with a recurrent summariser—an elegant tactic that preserves AI chatbot offline capabilities for long conversations.

- Speculative decoding. The edge LM drafts responses; a lighter prefix LM validates them. Accepted tokens are appended; rejected spans are resampled. This dual-model dance yields 1.8× speedup and 25 % less energy usage, sharpening the responsiveness expected from AI chatbot offline capabilities.

- Adaptive compute. Dynamic voltage-frequency scaling throttles NPUs when battery falls below 15 %. A “lite-mode” distills additional layers on-device, ensuring degraded yet functional AI chatbot offline capabilities until power is restored.

Security sceptics often fear model inversion and prompt injection. Mitigation is twofold: (a) local differential privacy during on-device adapter finetune, and (b) policy-aware decoding with rule-based filters. Together they neutralise malicious payloads without compromising the fluid discourse that defines AI chatbot offline capabilities.

Engineering robust AI chatbot offline capabilities demands cross-disciplinary mastery—machine-learning compression, embedded systems, cryptography, and human-centred design. Yet the payoff is profound: assistants that respect user privacy, shrug off flaky networks, and uphold mission-critical continuity. In the next section, we will explore how A-Bots.com, as a seasoned chatbot development company, translates these technical building blocks into tailored solutions for aviation, healthcare, maritime, and field-service domains—solutions where AI chatbot offline capabilities are not an add-on but a design cornerstone.

2. From Concept to Launch with A-Bots.com, Your Chatbot Development Company

2.1 Industry Scenarios Where AI Chatbot Offline Capabilities Are Non-Negotiable

When a flight computer reboots at 37 000 feet, when a cardiology ward’s Wi-Fi collapses under weekend maintenance, or when a field mechanic finds herself beneath a combine in the Kazakh steppe, relying on cloud latency is a luxury nobody can afford. In these moments AI chatbot offline capabilities draw the line between inconvenience and critical failure. Aviation cabins use cabin-crew tablets loaded with procedural dialogue flows so attendants can ask, “What is the lithium-battery fire checklist?” and receive a step-by-step protocol even while the satellite link flickers. Maritime bridges run the same ship-handling assistant on ruggedised Android units, letting an officer query ballast sequences without routing sensitive data through a mainland hub. Rural healthcare posts pre-load triage ontologies and ICD-10 snippets; here AI chatbot offline capabilities mean a nurse diagnosing neonatal jaundice can still converse with an expert system while the VSAT dish waits out a sandstorm.

Agriculture tells a parallel story. Harvesters in remote fields depend on machine-specific voice support: “Torque for header chain sprocket?” prompts an instant answer sourced from a local vector store, not a cloud that may be forty minutes of RF propagation away. Mining, rail, disaster response, wilderness tourism—the list keeps expanding as enterprises realise that dependable user interaction hinges on resilient AI chatbot offline capabilities rather than a fragile backbone of cables and towers. A-Bots.com has spent the past half-decade mapping this terrain, translating domain pain points into edge-ready dialogues that never reply, “Please reconnect and try again.”

2.2 Designing Seamless UX Around AI Chatbot Offline Capabilities

Great conversational design starts with the assumption that connectivity is a grace note, not a given. A-Bots.com begins every storyboard by mapping the “offline first” state: what intents must work at 03:00 a.m. in a Faraday cage? Which data sets must ship inside the binary? Which voice resources must live in on-device caches so pronunciation and accent handling remain stable? Only after this skeleton is locked down does the team layer fallback transitions for cloud enrichment. This discipline keeps AI chatbot offline capabilities at the heart of the experience rather than bolting them on as an afterthought.

Visual feedback is equally important. Users see a discreet badge that glows green when live sync is healthy and pulses amber when the assistant is operating solely on its AI chatbot offline capabilities. Instead of a spinning wheel, they receive deterministic answers generated by the on-device tiny-LLM plus a ranked retrieval of local knowledge snippets. If bandwidth revives mid-session, the architecture swaps to hybrid mode: the cloud large-language model reranks the candidate responses, yet the user never notices a context loss. This “seamless continuity” principle underpins every mission spec delivered by A-Bots.com, and it is only feasible because the core AI chatbot offline capabilities are engineered to preserve full dialogue state, slot memory, and sentiment vectors without server confirmation.

Language multiplicity poses another UX stress test. A tourist-assistance kiosk in Prague may flip between Czech, English, and Mandarin in consecutive utterances. Here the tokenizer, the ASR front-end, and the intent engine all share Unicode-aware byte-pair vocabularies compiled into the same mobile binary. Multilingual AI chatbot offline capabilities handle these code-switching bursts in real time, ensuring the kiosk never exposes an “unsupported language” alert.

2.3 Compliance and Governance for Edge-Deployed Conversations

Security architects often raise eyebrows at shipping language models onto consumer hardware. A-Bots.com counters with a layered defence: secure enclaves, encrypted vector stores, signed firmware, and policy-aware decoders that refuse to leak PII even in jailbreak prompts. The very fact that robust AI chatbot offline capabilities keep data local simplifies compliance audits under HIPAA, GDPR, and ISO 27001. No personal vitals, no maritime voyage data, and no proprietary maintenance manuals exit the device unless an explicit policy hook approves a delta-sync.

Every project passes through a formal Threat Modeling for Conversational Edge Engines (TMCEE) workshop. Here, risk matrices map attack surfaces: physical theft, prompt injection, model inversion, or adapter poisoning. Mitigations—differential privacy noise, rule-based output filters, token-level allow lists—are wired into the decoding stack of the AI chatbot offline capabilities themselves. When regulators audit a ship operator or a hospital trust, they inspect evidence bundles generated by A-Bots.com’s CI pipeline: reproducible builds, reproducible quantisation logs, and attestation certificates that prove the model embedded in production hashes to the one tested against toxicity and bias datasets.

Governance also extends to knowledge freshness. Some industries demand same-day bulletin ingestion (medical side-effect advisories, Notice to Air Missions, field service recalls). The smart-sync layer described in Section 1 merges these bulletins whenever connectivity reappears, yet the device continues serving deterministic answers through its resident AI chatbot offline capabilities even when those advisories lag by twelve hours. A-Bots.com’s policy engine flags any out-of-date content with a yellow banner: “Last update DD MMM YYYY,” empowering frontline staff to weigh the currency of the information. Such transparency keeps auditors and end users equally confident in the veracity of the assistant.

2.4 A-Bots.com Delivery Pipeline: Turning Vision into Deployed AI Chatbot Offline Capabilities

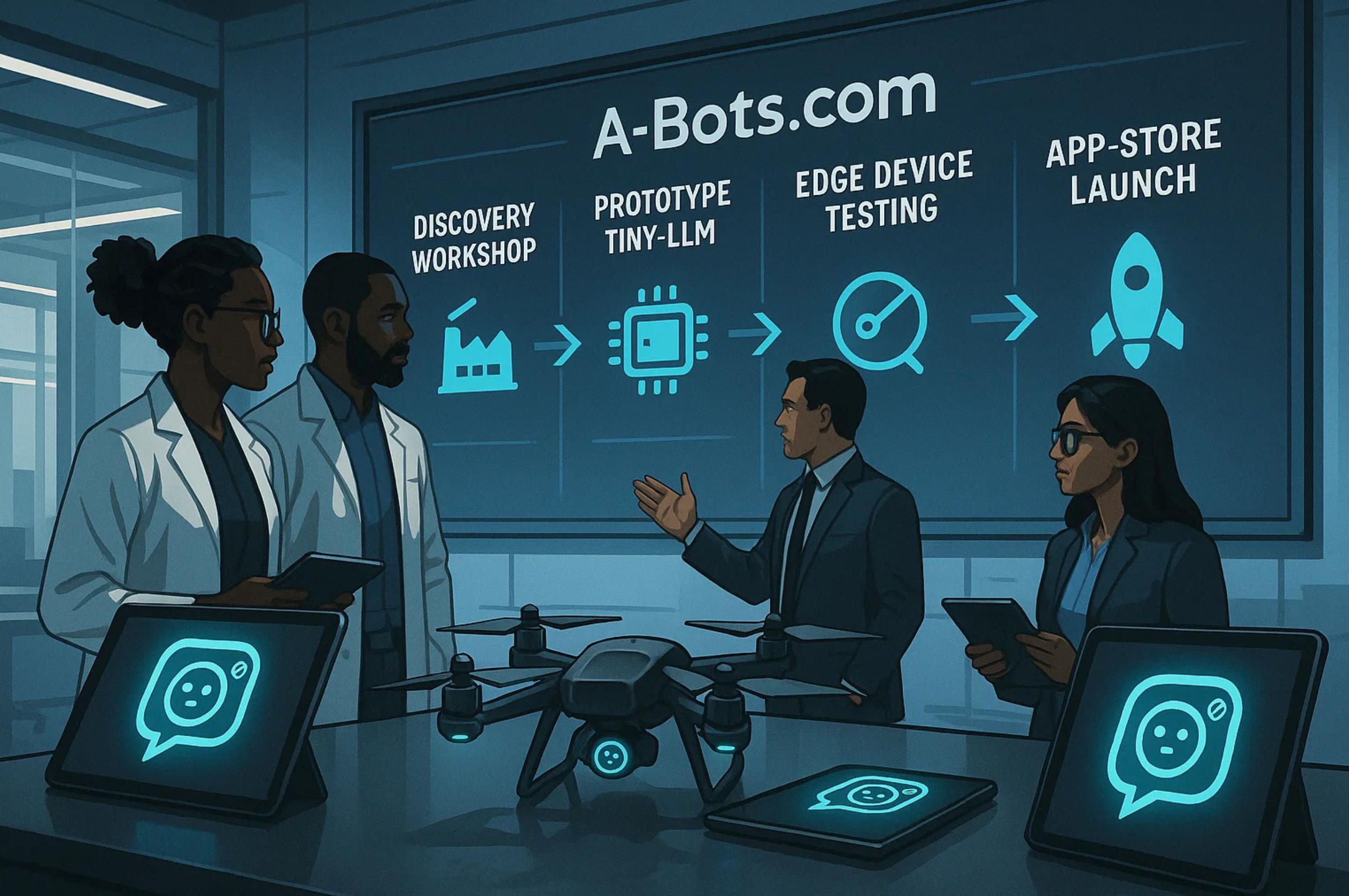

Building a production-grade conversational agent is less a linear sprint than a spiral of experimentation. A-Bots.com opens with a Discovery Workshop that dives into domain vocabulary, offline intent inventory, and device landscapes. Within days a “fidelity 0” paper prototype demonstrates speakerflow diagrams for the core AI chatbot offline capabilities—no code yet, just user journeys. Stakeholders critique the flow, identifying any mission step that should never block on a server call.

Sprint 1 then forges the Minimal Viable Dialogue: a dozen intents, a distilled 30-million-parameter tiny-LLM, and a local retrieval index seeded with anonymised datasets. A-Bots.com uses synthetic user logs to stress-test these initial AI chatbot offline capabilities, measuring token latency on end-of-life handsets and min-spec tablets. Battery drain, heat maps, and cold-start times feed back into a pruning pass that shaves milliseconds without eroding semantic fidelity.

With the skeleton validated, Sprint 2 layers domain-specific adapters via LoRA finetuning. A railway client might request emergency-brake protocols; a fintech wallet may inject AML compliance guidance. Each adapter slots into the frozen base, so multiple business units can share one binary yet activate different AI chatbot offline capabilities at runtime. Penetration testers hammer the build for prompt-based exfiltration; data scientists watch for hallucination spikes. Bugs surface early, long before app-store submission.

Sprint 3 shifts focus to multimodal edge inference. On specialised hardware—DJI drone remotes, Zebra rugged scanners—A-Bots.com compiles the stack with device-specific delegates (Apple ANE, Qualcomm HTP, Nvidia Jetson). Speech, OCR, LiDAR tags, and even thermal imaging metadata become additional tokens in the conversation stream. This upgrade turns plain AI chatbot offline capabilities into a context-rich sensor fusion engine, all while meeting the same size and security budgets.

Finally, a Release Candidate enters A-Bots.com’s Continuous Deployment harness. Git commits trigger reproducible mobile builds, cryptographically signed and version-locked so field devices can roll forward or roll back on demand. End-to-end telemetry—aggregated locally and synced when networks permit—fuels post-launch tuning: adapter weights update, rule thresholds evolve, and knowledge snippets rotate, but the foundational AI chatbot offline capabilities remain intact, guaranteeing stable performance through each incremental upgrade.

Collaboration in Practice

Throughout the pipeline, clients never feel the cognitive overload of ML jargon. A-Bots.com translates metrics into clear narratives: “Your voice assistant now answers 87 % of brake-failure queries in under 60 ms using its AI chatbot offline capabilities; once LTE is present the cloud model gives an extra 5 % accuracy bump.” Stakeholders see dashboards linking token timings to business KPIs like task completion or user satisfaction. Legal teams receive data-flow diagrams proving exactly when and how personal data might egress, reinforcing the privacy value of mature AI chatbot offline capabilities.

Weekly “Edge Office Hours” put engineers and domain experts in the same video room to observe recorded user sessions (with consent, anonymised). Each misclassification triggers a root-cause doc: was the tiny-LLM context window too short? Did the retrieval index lack a crucial maintenance bulletin? The fix may be as small as pushing a 100-kilobyte adapter patch, illustrating the modular agility that robust AI chatbot offline capabilities unlock.

Launch Day itself is anticlimactic by design. Because all heavy lifting already lives on the device, flipping the distribution switch merely adds an app-store listing or an MDM push. Pilots download the update on Wi-Fi in the crew lounge; once airborne, the assistant keeps advising thanks to its proven AI chatbot offline capabilities. Field technicians sideload the APK in a depot and carry confidence into regions where 5G conglomerations are still myths.

2.5 Turning Requirements into Reality

Edge-first conversational intelligence is not a trend; it is the inevitable response to a world where bandwidth is precious, privacy is a mandate, and users expect answers at the speed of thought. A-Bots.com stands at this intersection as a seasoned chatbot development company, converting your requirements into production-grade AI chatbot offline capabilities that respect constraints yet feel limitless. Whether you operate fleets in the air, clinics on remote islands, or maintenance teams across the Eurasian steppe, we are ready to prototype, harden, and ship the next generation of assistants that never say, “Try again when you’re online.”

Visit a-bots.com/services/chatbot to start a discovery call and see how our bespoke AI chatbot offline capabilities can keep your conversations alive—anytime, anywhere, regardless of signal bars.

Where AI Chatbot Offline Capabilities Create Real Value

Below is a cross-industry snapshot—seven verticals (plus one extra for good measure) where AI chatbot offline capabilities shift from “nice-to-have” to operational backbone. Each paragraph highlights three to five concrete ways an edge-ready assistant can serve users when connectivity is limited, intermittent, or strictly prohibited.

1. Aviation and Aerospace

In the pressurised quiet of a long-haul flight, an on-device assistant lets cabin crews pull up dangerous-goods checklists, translate passenger queries, and walk through medical-emergency SOPs—all without pinging a ground server. Pilots can consult cold-weather de-icing tables mid-taxi, while maintenance teams on the apron ask for torque specs or wiring diagrams as soon as they open an access panel.

2. Maritime and Offshore Operations

Beyond the reach of terrestrial towers, bridge officers rely on an embedded chatbot for ballast-exchange rules, collision-avoidance manoeuvres, and instant translations of port directives. Engine-room technicians query vibration thresholds or lubrication intervals, and cruise-ship hospitality staff access multilingual guest-service scripts—even as the vessel ploughs through the Faroe Gap with zero bandwidth.

3. Healthcare and Medical Devices

Rural triage nurses consult symptom pathways, drug-interaction charts, and ICD-10 codes stored locally on tablets. In surgical theatres, a voice assistant adjusts lighting presets or recalls procedure steps without touching a potentially contaminated surface. Rehabilitation patients use wearables that coach exercises and capture progress—even when a facility’s Wi-Fi shutters during maintenance.

4. Agriculture and AgriTech

Combine operators ask for header settings or yield-map legends while harvesting far from 5G. Livestock managers use a barn-mounted kiosk to diagnose feed issues or vaccination schedules. Drone pilots in the Kazakh steppe receive real-time agronomic advice on fungicide timing, and irrigation controllers adjust valve cycles through spoken commands when cellular modems sleep to save power.

5. Manufacturing and Industry 4.0

On the production floor, machinists ask an edge assistant for CNC post-processors, SPC tolerances, or lock-out/tag-out steps while standing beside a mill that blocks radio signals. Quality inspectors dictate defect reports that sync later, and warehouse AGV operators summon troubleshooting wizardry when a lidar sensor misbehaves—all thanks to resilient AI chatbot offline capabilities.

6. Energy, Utilities and Mining

Wind-turbine climbers at 120 metres altitude request torque diagrams and safety checklists. Underground miners vocalise methane-alarm procedures, equipment part numbers, or escape-route prompts where RF cannot penetrate rock. Field engineers servicing isolated substations pull circuit-breaker schematics and firmware patch notes—no satellite round-trips required.

7. Hospitality, Travel and Smart Venues

Mountain-lodge reception tablets guide guests through late-night check-in, explain avalanche-safety rules, or translate menu allergens into five languages. Theme-park kiosks offer ride wait-times and accessibility info while cellular networks sag under holiday crowds. Hotel housekeeping robots confirm room-cleaning protocols via natural-language commands—even in elevator shafts that drop signal.

8. Public Safety and Disaster Response

When hurricanes topple towers, first responders consult an on-device assistant for triage categories, hazardous-materials placards, and evacuation scripts. Search-and-rescue teams share location-based tips (“nearest defibrillator”) through mesh-networked chatbots, and incident commanders log voice notes that merge into central systems only when a portable satlink comes online.

A-Bots.com tailors each deployment—model size, domain adapters, policy filters—to the regulatory, environmental, and hardware realities of the sector at hand. If your organisation operates where “offline” is a daily condition rather than an outage, our AI chatbot offline capabilities keep dialogue—and productivity—alive. Explore partnership details at AI chatbot development.

✅ Hashtags

#AIChatbot

#OfflineAI

#EdgeComputing

#ChatbotDevelopment

#OnDeviceML

#A_Bots

#ConversationalAI

#MobileAI

#EdgeAI

#OfflineCapabilities

Other articles

Offline AI Chatbot Development Cloud dependence can expose sensitive data and cripple operations when connectivity fails. Our comprehensive deep-dive shows how offline AI chatbot development brings data sovereignty, instant responses, and 24 / 7 reliability to healthcare, manufacturing, defense, and retail. Learn the technical stack—TensorFlow Lite, ONNX Runtime, Rasa—and see real-world case studies where offline chatbots cut latency, passed strict GDPR/HIPAA audits, and slashed downtime by 40%. Discover why partnering with A-Bots.com as your offline AI chatbot developer turns conversational AI into a secure, autonomous edge solution.

Advantech IoT Solutions This in-depth article explores the complete landscape of Advantech IoT solutions, from rugged edge hardware and AI-enabled gateways to cloud-native WISE-PaaS and DeviceOn platforms. Learn how A-Bots.com, an experienced IoT software development company, helps businesses unlock the full potential of Advantech technology through tailor-made applications, data orchestration, edge AI integration, and user-centric mobile tools. From prototyping to global-scale deployment, this guide showcases the strategic path toward building resilient and intelligent IoT systems for manufacturing, energy, logistics, and smart infrastructure.

IoT-Driven Agriculture Mobile Application Development From soil-moisture probes to ISOBUS consoles, modern farms generate torrents of data that often die in silos. This deep-dive shows how IoT gateways, streaming analytics and edge-enabled UX converge inside purpose-built agriculture mobile applications. You’ll learn why schema registries matter, how ConvLSTM models predict stress before leaves wilt, and what it takes to push a variable-rate prescription from pocket to tractor in minutes. Partnering with A-Bots.com, growers gain a secure, interoperable tech stack that scales from small plots to thousand-hectare enterprises.

AI Agents Examples From fashion e-commerce to heavy-asset maintenance, this long read dissects AI agents examples that already slash costs and drive new revenue in 2025. You’ll explore their inner anatomy—planner graphs, vector-store memory, zero-trust tool calls—and the Agent Factory pipeline A-Bots.com uses to derisk pilots, satisfy SOC 2 and HIPAA auditors, manage MLOps drift, and deliver audited ROI inside a single quarter.

Types of AI Agents: From Reflex to Swarm From millisecond reflex loops in surgical robots to continent-spanning energy markets coordinated by algorithmic traders, modern autonomy weaves together multiple agent paradigms. This article unpacks each strand—reactive, deliberative, goal- & utility-based, learning and multi-agent—revealing the engineering patterns, safety envelopes and economic trade-offs that decide whether a system thrives or stalls. Case studies span lunar rovers, warehouse fleets and adaptive harvesters in Kazakhstan, culminating in a synthesis that explains why the future belongs to purpose-built hybrids. Close the read with a clear call to action: A-Bots.com can architect, integrate and certify end-to-end AI agents that marry fast reflexes with deep foresight—ready for your domain, your data and your ROI targets.

Top stories

Copyright © Alpha Systems LTD All rights reserved.

Made with ❤️ by A-BOTS